The IoT already produces massive amounts of data. It’s time to start dealing with it. Are fog and edge computing inevitable?

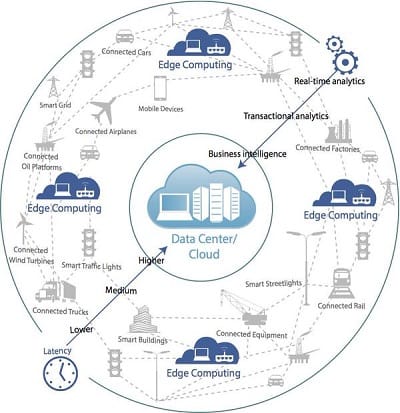

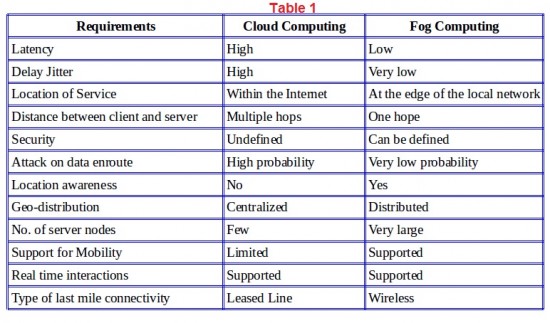

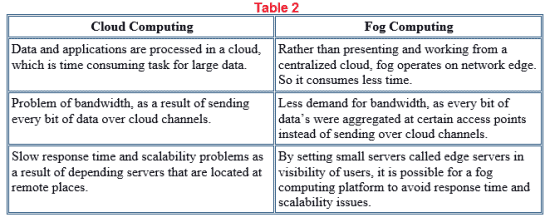

What happens when the cloud isn’t enough? This is a modern problem if there ever was one. Experts are saying 2016 will mark the rise of a new system: fog computing. Fogging involves extending cloud computing to the edge of a network. It helps end devices and data centers work together better.

Fog computing is one answer to several questions. The term “fog computing” was coined recently by Cisco and is often used interchangeably with “edge computing”. The “edge” simply refers to points closer to where data is produced than to centralized databases and processing centers. This means the edge of a network, or even access-providing devices like routers.

The Role and Effect of The Fog

The IoT and the growing interest in big data mean that the future will hold a lot of data, all of which has to be physically stored somewhere. Many are already wondering where to put it. From smartphones to driverless cars to wearables, everything is generating far more information than before. Regular web server hardware currently lasts almost six years. For components related to cloud servers, that number plummets to around two years. For the businesses gathering and harnessing data, that is not only cumbersome but expensive. It means replacing more parts more often. It means being stuck in upkeep instead of investing. In a proper edge computing system, regular web server components could last up to eight years. A study from Wikibon also found that cloud-only computing was far costlier than cloud combined with edge computing. For companies large and small, that could make a huge difference in both the short and the long run.

Another problem with cloud computing is speed. The organizations analyzing data in depth aren’t average users, but major enterprises looking for quick insight and help in making split-second decisions. Some data is most useful right after it is collected. Days or weeks later, it may not hold the same weight. Edge computing affords the chance to analyze data before sending it off for collection, while it is still at its most valuable. For example, an intrusion or breach can be caught much more quickly when analysis occurs near the source. Waiting for that data to transfer back to a centralized data center wastes precious time.
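To make the idea of analyzing data near its source concrete, here is a minimal Python sketch. It is an illustration only: the `EdgeMonitor` class, its window size, and its threshold are hypothetical choices, not part of any fog computing product. The device compares each new reading with a short rolling baseline and flags outliers on the spot, rather than waiting for a central data center to notice them.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, List


class EdgeMonitor:
    """Hypothetical on-device check: flag readings far from a rolling baseline."""

    def __init__(self, window: int = 5, threshold: float = 3.0) -> None:
        self.recent: Deque[float] = deque(maxlen=window)  # last normal readings
        self.threshold = threshold  # allowed deviation, in standard deviations
        self.alerts: List[float] = []  # anomalies caught locally

    def observe(self, value: float) -> bool:
        """Return True if the reading looks anomalous against the baseline."""
        is_anomaly = False
        # Only judge readings once the window is full.
        if len(self.recent) == self.recent.maxlen:
            baseline = mean(self.recent)
            # Avoid a zero spread when all recent readings are identical.
            spread = max(pstdev(self.recent), 1e-9)
            is_anomaly = abs(value - baseline) > self.threshold * spread
        if is_anomaly:
            self.alerts.append(value)  # act on it immediately, at the edge
        else:
            self.recent.append(value)  # normal readings update the baseline
        return is_anomaly


monitor = EdgeMonitor(window=5, threshold=3.0)
readings = [10.0, 11.0, 10.0, 9.0, 10.0, 10.0, 50.0, 11.0]
flags = [monitor.observe(r) for r in readings]
print(monitor.alerts)
```

In this sketch only the alert would need to travel upstream right away; everything else could be batched and sent later.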

Data thinning is the process of removing unnecessary data. It strips away the noise to show what really matters. Given that we create 2.5 quintillion bytes of data every day, that small step may become invaluable in the future. Driverless cars, for example, create a lot of data that doesn’t need to be stored forever. One car might create petabytes of data a year, including images of every single line and bumper it passes, and much of that is not necessarily useful. Especially when it comes to the IoT, more will not always equal better.
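One simple way to picture data thinning is a filter that keeps a reading only when it differs meaningfully from the last one kept. The Python sketch below is an assumption-laden illustration, not a standard algorithm from any particular platform: the `thin_readings` function, the `min_change` parameter, and the sample values are all made up for the example.

```python
from typing import Iterable, List, Tuple

Reading = Tuple[float, float]  # (timestamp in seconds, sensor value)


def thin_readings(readings: Iterable[Reading], min_change: float) -> List[Reading]:
    """Keep a reading only if it differs from the last kept value by at least min_change."""
    kept: List[Reading] = []
    for timestamp, value in readings:
        # Always keep the first reading; afterwards compare with the last kept one.
        if not kept or abs(value - kept[-1][1]) >= min_change:
            kept.append((timestamp, value))
    return kept


# Hypothetical temperature samples taken once per second.
raw = [(0.0, 20.0), (1.0, 20.1), (2.0, 20.05), (3.0, 21.5), (4.0, 21.6), (5.0, 19.0)]
thinned = thin_readings(raw, min_change=1.0)
print(thinned)  # [(0.0, 20.0), (3.0, 21.5), (5.0, 19.0)]
```

Here six readings shrink to three, and the three that remain still capture every significant change. A real deployment would have to choose the threshold carefully so that thinning removes noise without hiding events that matter.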

Fog computing can also address problems in robotics. Drones examining an area collect vast amounts of data. Transmitting that data quickly, however, is not easy, and waiting for instructions from a control center wastes time. Ryan LaMothe, a research scientist at Pacific Northwest National Laboratory, explained some of the perks of edge computing to Government Technology. He argues that computations need to happen on the devices themselves, rather than at the center they relay data to:

“[Devices] need to be able to figure out who is in their area and they need to understand the context of their mission, and then take that data and send it to the human in the field who needs that data right at that time. It’s ultimately to make the human emergency response significantly more efficient.”

Fog computing also allows for better overall remote management. Companies in oil, gas, or logistics may find that computing at the edge saves time and money and creates new opportunities. The speed with which data can be analyzed also adds a level of safety. Any information that may indicate a hazardous situation should be analyzed as close to the edge as possible.

For some, the future of the cloud will inevitably involve fog computing. Princeton already boasts its own Edge Lab, directed by Mung Chiang, co-founder of the OpenFog Consortium. His paper on Fog Networking offers four reasons that fog computing is necessary in today’s world: it offers real-time processing and cyber-physical system control, helps apps better fulfill users’ requirements, pools local resources, and allows for rapid innovation and affordable scaling.

Will The Fog Take Over The Cloud?

There will be drawbacks with fog computing, not the least of which is slow industry adoption. With some companies vying to reach the finish line first, and others dragging their feet, it will be some time before the fog is fully and properly implemented. Denny Strigl, former CEO of Verizon Wireless and lecturer in the Princeton electrical engineering department, notes that it took nearly 20 years for the industry to adopt a proper, easily maneuvered wireless system. He even describes the process as “very painful.” While fog computing may be here to stay, it will not be implemented overnight.

Questions about security make up the bulk of the remaining qualms about the fog. Safety will continue to be a major topic in data and the IoT, and adding even more points of vulnerability along the chain makes companies and consumers nervous. Without a doubt, there is a certain amount of safety achieved when all data can be kept together. That is something the fog will have to face. The final problem is far more human in nature: confusion. Adding yet another layer of complexity makes some worry that people and systems simply won’t be prepared to handle it. Though businesses may be wary of adopting it, that is unlikely to slow the fog down in the long run. Fog computing is one of those solutions that affects nearly everyone, from technology to entertainment to retail and advertising. There is no doubt it will continue to develop. The real question is how, and who the major players will be.

image credit: Erik
