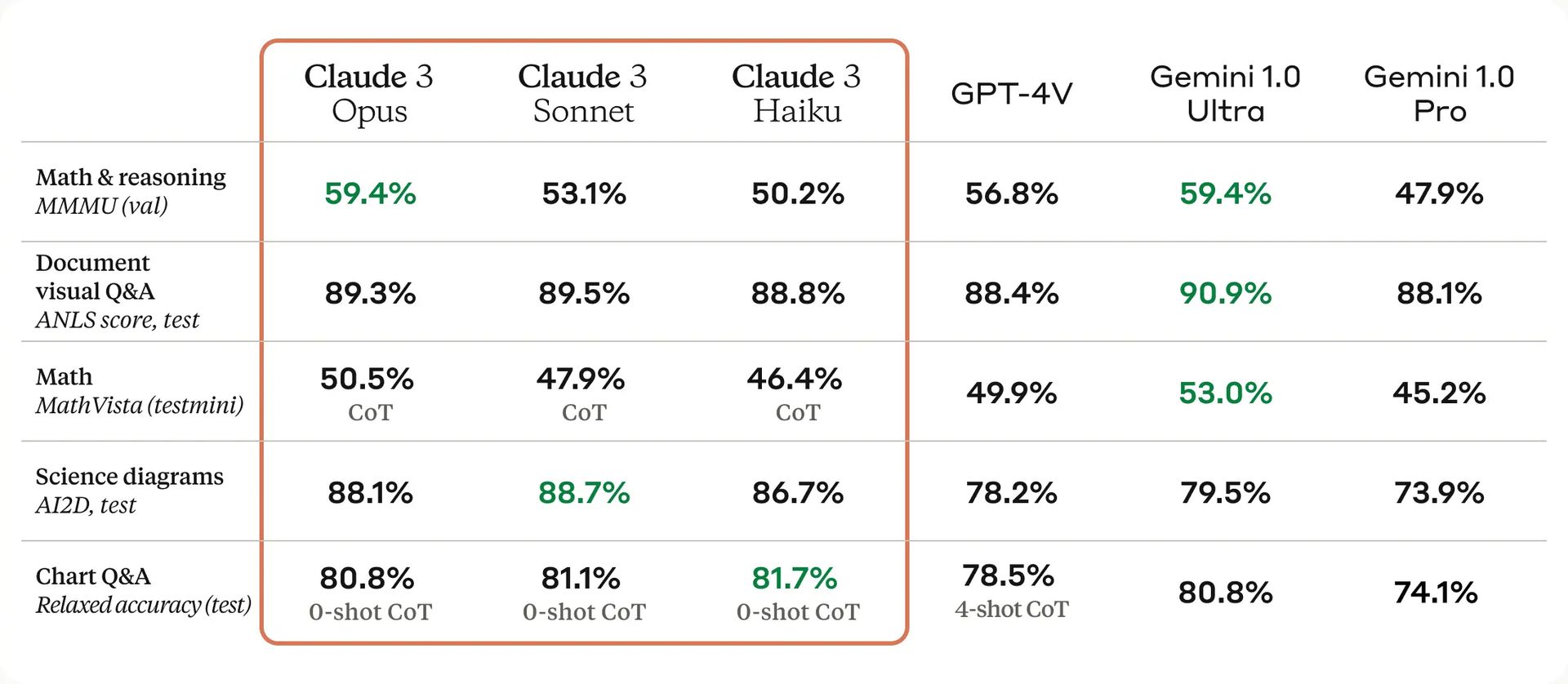

Anthropic, an AI firm founded by a group of ex-OpenAI employees, has announced the Claude 3 family of AI models, which it says matches or exceeds the performance of major competitors from Google and OpenAI. Unlike its predecessors, Claude 3 is multimodal: it can interpret both text and images.

Introduction to Claude 3

Claude 3, Anthropic’s latest generation of models, comprises Haiku, Sonnet, and Opus, with Opus being the most advanced. Anthropic asserts that these models demonstrate “increased capabilities” in analysis and forecasting, and that they outperform models such as ChatGPT, GPT-4, and Google’s Gemini 1.0 Ultra on specific benchmarks. The comparison does not include Gemini 1.5 Pro.

Claude 3 is also Anthropic’s first multimodal generative AI (GenAI) model, capable of processing both text and images. This puts it alongside certain versions of GPT-4 and Gemini, and lets it handle photographs, charts, graphs, and technical diagrams drawn from PDFs, slideshows, and other document types.

Multimodal capabilities

Claude 3 goes a step beyond some of its GenAI rivals by analyzing multiple images in a single request, up to a maximum of 20. According to Anthropic, this lets Claude 3 compare and contrast images.

However, Claude 3’s image processing has limitations.
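
To make the multi-image limit concrete, here is a minimal Python sketch that assembles one user message containing several images and a question. The helper name `build_comparison_message` is hypothetical, and the content-block layout (`type`, `source`, `media_type`, `data`) is an assumption based on Anthropic's public Messages API; check the current API reference before relying on it. The sketch only builds the payload and sends nothing.

```python
import base64

# Per-request image cap as reported in the article.
MAX_IMAGES_PER_REQUEST = 20


def build_comparison_message(images, question):
    """Build one user message holding several images plus a text question.

    `images` is a list of (media_type, raw_bytes) pairs,
    for example ("image/png", b"...").
    The block layout is an assumption, not taken from the article.
    """
    if not images:
        raise ValueError("at least one image is required")
    if len(images) > MAX_IMAGES_PER_REQUEST:
        raise ValueError(f"at most {MAX_IMAGES_PER_REQUEST} images per request")

    content = []
    for media_type, raw in images:
        content.append({
            "type": "image",
            "source": {
                "type": "base64",
                "media_type": media_type,
                "data": base64.b64encode(raw).decode("ascii"),
            },
        })
    # The question goes last so it can refer to every image above it.
    content.append({"type": "text", "text": question})
    return {"role": "user", "content": content}
```

Checking the cap on the client side means an oversized batch fails before any request is made.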

Anthropic has prevented the Claude 3 models from identifying people, a decision likely influenced by ethical and legal considerations. The company acknowledges that Claude 3 may make mistakes with “low-quality” images (below 200 pixels) and struggles with tasks requiring spatial reasoning, such as reading an analog clock face. It also cannot give precise counts of objects in an image.

For now, Claude 3 only analyzes images; it does not generate artwork.

Across both text and images, Anthropic reports that Claude 3 is markedly better at following multi-step instructions and producing structured output in formats like JSON. It also converses better in languages other than English than earlier versions did. The model has a “more nuanced understanding of requests,” which makes it less likely to decline to answer a question. In the near future, the models will be updated to cite the sources of their responses so that users can verify the information provided.

Claude 3 is characterized by its ability to produce responses that are not only more expressive and engaging but also more responsive to direct prompts. This makes it simpler for users to guide the AI to achieve the intended outcomes with succinct and clear prompts.

These enhancements are attributed to Claude 3’s expanded context, meaning the amount of input the model can take into account at once.

A model’s context, or context window, is the amount of input data (such as text) the model considers before generating an output. Models with small context windows tend to forget the content of even very recent exchanges, which can lead to responses that stray off-topic. Models with large context windows can follow the flow of a long conversation or document and produce responses that are more contextually rich.

Anthropic says Claude 3 will initially support a 200,000-token context window, roughly 150,000 words. A select group of customers will get up to a 1-million-token context window (approximately 700,000 words), which puts Claude 3 on par with Google’s latest GenAI model, Gemini 1.5 Pro, known for its million-token context window.
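
The token and word figures above imply a rough conversion rate, which the following Python sketch uses to estimate whether a document fits in a given window. The function names are hypothetical, and the 0.75 words-per-token ratio is simply the article's 150,000 words divided by 200,000 tokens. Real tokenizers vary by language and content, so treat the result as a ballpark only.

```python
import math

# Ratio implied by the article's figures: 150,000 words per 200,000 tokens.
# This is a rule of thumb (assumption), not a property of any tokenizer.
WORDS_PER_TOKEN = 150_000 / 200_000  # 0.75


def estimate_tokens(word_count, words_per_token=WORDS_PER_TOKEN):
    """Estimate how many tokens a text of `word_count` words needs."""
    return math.ceil(word_count / words_per_token)


def fits_in_context(word_count, context_tokens=200_000):
    """Return True if the estimated token count fits in the window."""
    return estimate_tokens(word_count) <= context_tokens
```

For example, `fits_in_context(150_000)` is true for the 200,000-token window, while a 150,001-word text is estimated to overflow it. The article's 1-million-token figure (about 700,000 words) corresponds to a slightly lower ratio of 0.7, which shows how approximate these conversions are.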

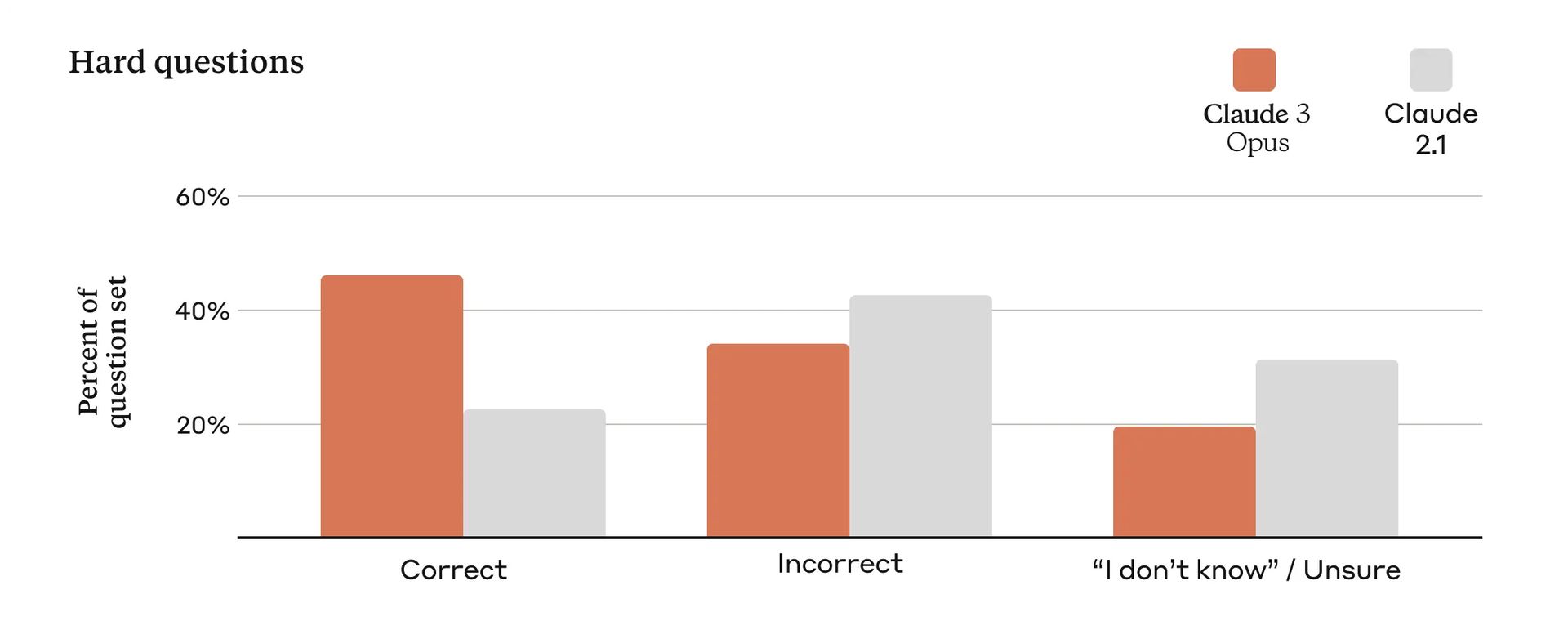

Acknowledging Claude 3’s imperfections

Although the new Claude models improve on their predecessors, they are not without flaws. In a detailed technical whitepaper, Anthropic concedes that Claude 3 shares common weaknesses with other GenAI models, such as bias and hallucination (fabricating information). Unlike some of its counterparts, Claude 3 cannot search the web and relies on data from before August 2023 to generate responses. And while it is multilingual, its fluency in certain “low-resource” languages does not match its proficiency in English.

Anthropic commits to delivering regular updates for Claude 3 in the coming months, with the aim of reducing these limitations and improving the models’ performance and capabilities.

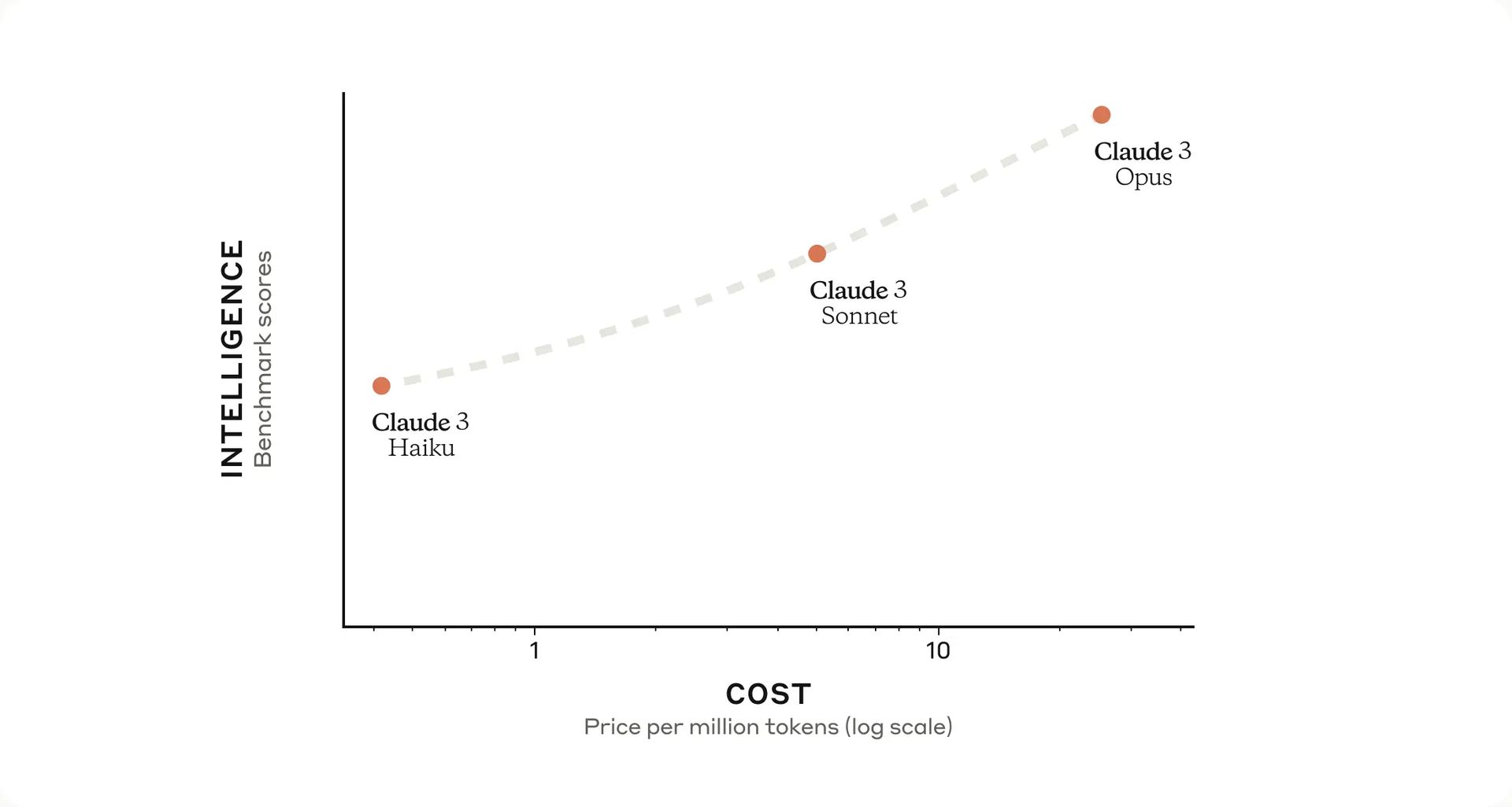

Claude pricing details

The Opus and Sonnet models of Claude 3 are currently available through Anthropic’s own web interface, dev console and API, as well as through Amazon’s Bedrock platform and Google’s Vertex AI. The Haiku model is expected to be released later in the year.

Anthropic has provided a detailed pricing structure for these models as follows:

| Model | Price per million input tokens | Price per million output tokens |
| --- | --- | --- |
| Opus | $15 | $75 |
| Sonnet | $3 | $15 |
| Haiku | $0.25 | $1.25 |
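
As a worked example of the per-million-token pricing, the Python sketch below estimates the cost of a single request from the table's figures. The function name `estimate_cost` and the lowercase model keys are hypothetical. Prices are copied from the table and may change, and actual billing may differ.

```python
from decimal import Decimal

# (input price, output price) in dollars per million tokens, from the table.
PRICES_PER_MILLION = {
    "opus": (Decimal("15"), Decimal("75")),
    "sonnet": (Decimal("3"), Decimal("15")),
    "haiku": (Decimal("0.25"), Decimal("1.25")),
}
TOKENS_PER_MILLION = Decimal(1_000_000)


def estimate_cost(model, input_tokens, output_tokens):
    """Return the estimated dollar cost of one request as a Decimal."""
    in_price, out_price = PRICES_PER_MILLION[model]
    total = Decimal(input_tokens) * in_price + Decimal(output_tokens) * out_price
    return total / TOKENS_PER_MILLION
```

Filling the 200,000-token context window and receiving a 1,000-token reply would cost about $3.075 on Opus (`estimate_cost("opus", 200_000, 1_000)`) and about $0.615 on Sonnet. `Decimal` is used so that the sums are not affected by floating-point rounding.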

Featured image credit: Anthropic