OpenAI has launched CriticGPT, a model based on GPT-4 that is designed to critique ChatGPT responses and help human trainers identify errors during Reinforcement Learning from Human Feedback (RLHF).

In advanced AI systems, ensuring the accuracy and reliability of responses is critical. ChatGPT, powered by the GPT-4 series, is continually refined through RLHF, where human trainers compare different AI responses to rate their effectiveness and accuracy.

However, as ChatGPT becomes more sophisticated, spotting subtle mistakes in its outputs becomes increasingly challenging.

This is where the new model from OpenAI steps in, providing a powerful tool to enhance the training and evaluation process of AI systems.

What is CriticGPT?

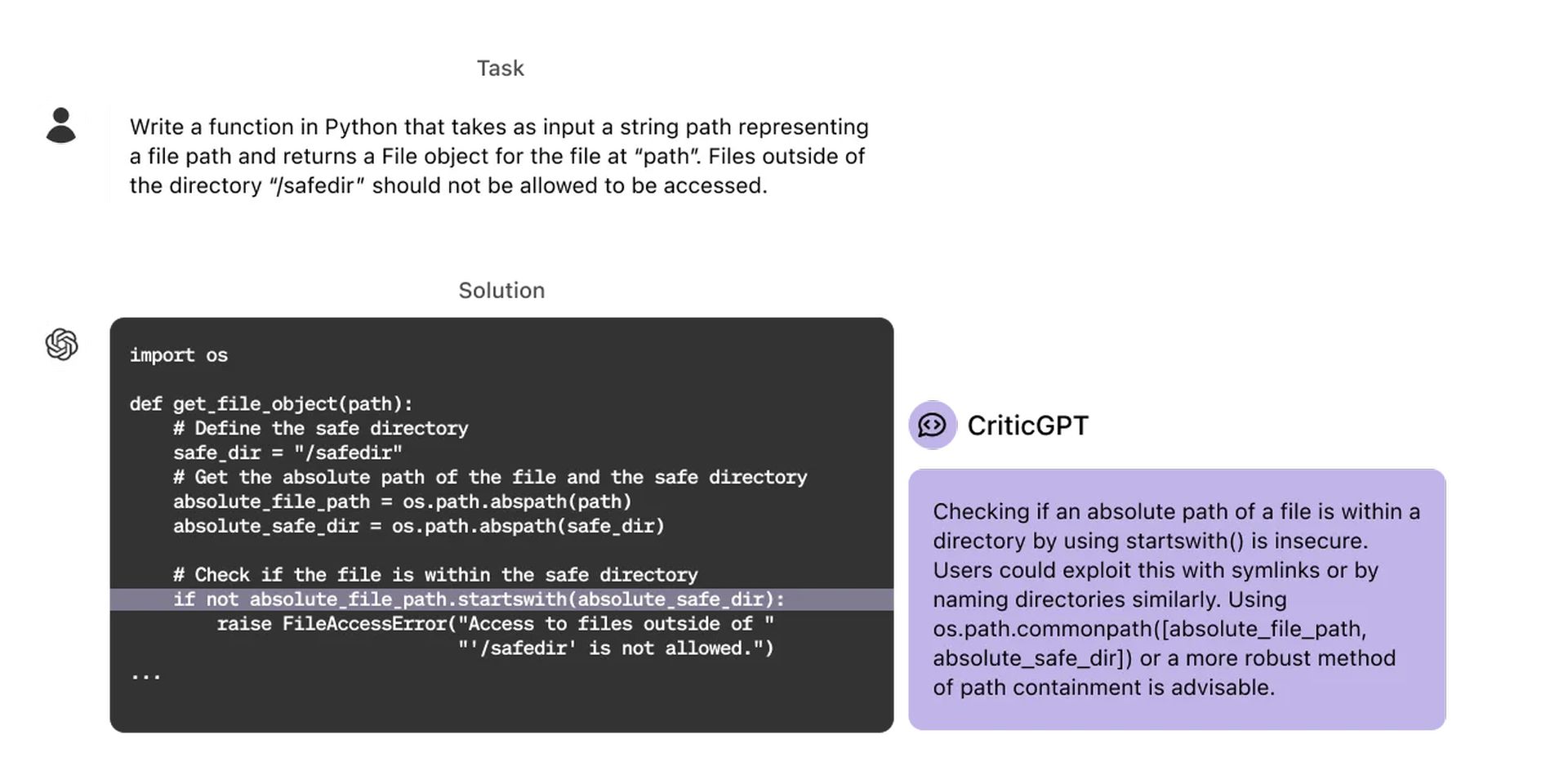

CriticGPT’s development stems from the need to address the limitations of RLHF as ChatGPT evolves. RLHF relies heavily on human trainers to rate AI responses, a task that becomes more difficult as those responses grow in complexity and subtlety. To mitigate this challenge, CriticGPT was created to assist trainers by highlighting inaccuracies in ChatGPT’s answers. By offering detailed critiques, CriticGPT helps trainers spot errors that might otherwise go unnoticed, making the RLHF process more effective and reliable.

Reinforcement Learning from Human Feedback (RLHF) is a technique used to refine AI models like ChatGPT. It involves human trainers comparing different AI responses and rating their quality. This feedback loop helps the AI learn and improve its ability to provide accurate and helpful answers.

Essentially, RLHF is a way to make AI models more aligned with human preferences and expectations through continuous learning and refinement based on human input.
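To make the comparison step concrete, here is a minimal sketch of one common way pairwise human preferences are turned into a training signal for a reward model. The article does not say which objective OpenAI uses, so the loss below (a Bradley-Terry style pairwise loss) and the name `pairwise_preference_loss` are assumptions chosen for illustration.

```python
import math


def pairwise_preference_loss(reward_preferred: float, reward_rejected: float) -> float:
    """Return -log(sigmoid(r_preferred - r_rejected)).

    The loss is small when the response the trainer preferred already
    receives the higher reward, and large when the ranking is reversed.
    Note: this toy version is not numerically hardened for huge margins.
    """
    margin = reward_preferred - reward_rejected
    # -log(sigmoid(m)) is algebraically equal to log(1 + exp(-m)).
    return math.log1p(math.exp(-margin))


# Hypothetical reward scores for two responses to the same prompt.
agrees_with_trainer = pairwise_preference_loss(2.0, 0.0)
undecided = pairwise_preference_loss(0.0, 0.0)
disagrees_with_trainer = pairwise_preference_loss(0.0, 2.0)
```

Minimizing a loss of this shape pushes the reward model to score the human-preferred response higher, which is the sense in which the feedback loop aligns the model with human judgments.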

CriticGPT was trained using an RLHF approach similar to ChatGPT’s, but with a twist. It was exposed to a vast array of inputs containing intentional mistakes, which it then had to critique. Human trainers manually inserted these mistakes and provided example feedback, ensuring that CriticGPT learned to identify and articulate the errors effectively. This method allowed the model to develop a keen eye for inaccuracies, making it an invaluable asset in the AI training process.

Training of CriticGPT

The training of CriticGPT involved a meticulous process designed to hone its ability to detect and critique errors in AI-generated responses. Trainers would introduce deliberate mistakes into code written by ChatGPT and then create feedback as if they had discovered these errors themselves. This feedback was then used to train CriticGPT, teaching it to recognize and articulate similar mistakes in future responses.

In addition to handling artificially inserted errors, the model was also trained to identify naturally occurring mistakes in ChatGPT’s outputs. Trainers compared multiple critiques of the modified code to determine which critiques effectively caught the inserted bugs. This rigorous training process ensured that CriticGPT could provide accurate and useful feedback, reducing the number of small, unhelpful complaints and minimizing the occurrence of hallucinated problems.
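The data flow described above can be pictured with a small sketch. Everything in it is hypothetical: the `TamperedExample` and `Critique` record types, their field names, and the keyword check in `catches_bug` are illustrative stand-ins, not OpenAI's data format or grading method. In the process the article describes, human trainers made these judgments.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TamperedExample:
    """Hypothetical record: code with a deliberately inserted bug."""
    original_code: str
    tampered_code: str
    bug_description: str  # the trainer's own note on the inserted bug


@dataclass
class Critique:
    """Hypothetical record: one critique of the tampered code."""
    author: str
    text: str


def catches_bug(critique: Critique, keywords: list[str]) -> bool:
    """Crude stand-in for a human judgment: the critique must mention
    every keyword associated with the inserted bug."""
    lowered = critique.text.lower()
    return all(k.lower() in lowered for k in keywords)


def rank_critiques(critiques: list[Critique], keywords: list[str]) -> list[Critique]:
    """Place critiques that catch the inserted bug first.

    sorted() is stable, so critiques within each group keep their order.
    """
    return sorted(critiques, key=lambda c: not catches_bug(c, keywords))


example = TamperedExample(
    original_code="def mean(xs): return sum(xs) / len(xs)",
    tampered_code="def mean(xs): return sum(xs) / (len(xs) - 1)",
    bug_description="Divides by len(xs) - 1 instead of len(xs).",
)
critiques = [
    Critique("a", "Variable names could be more descriptive."),
    Critique("b", "The divisor is len(xs) - 1, so the mean is wrong."),
]
ranked = rank_critiques(critiques, keywords=["len(xs) - 1"])
print([c.author for c in ranked])  # ['b', 'a']
```

Because the trainer who inserted the bug knows exactly what it is, a critique can be checked against a known answer, which is what makes this kind of comparison tractable.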

The effectiveness of the model was further enhanced through the use of a test-time search procedure against the critique reward model. This approach allowed for a careful balance between precision and recall, ensuring that OpenAI’s new model could aggressively identify problems without overwhelming trainers with false positives. By fine-tuning this balance, CriticGPT generates comprehensive critiques that significantly aid the RLHF process.
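One way to picture this precision/recall trade-off is a best-of-N selection: sample several candidate critiques, score each with the reward model, and add a tunable bonus for every problem a critique flags. This is only a sketch under stated assumptions. `Candidate`, `select_critique`, and `issue_bonus` are hypothetical names, the reward-model scores are made-up numbers, and the search procedure OpenAI actually used may differ.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical candidate critique with a precomputed reward-model score."""
    issues: list      # problems the critique flags
    rm_score: float   # assumed output of a critique reward model


def select_critique(candidates, issue_bonus):
    """Pick the candidate maximizing rm_score + issue_bonus * len(issues).

    A larger issue_bonus favors longer, more aggressive critiques (higher
    recall, more risk of false positives); a smaller one favors
    conservative critiques (higher precision).
    """
    return max(candidates, key=lambda c: c.rm_score + issue_bonus * len(c.issues))


# Toy candidates with invented scores, for illustration only.
candidates = [
    Candidate(issues=["off-by-one in loop"], rm_score=0.9),
    Candidate(
        issues=["off-by-one in loop", "unchecked None", "possible race"],
        rm_score=0.5,
    ),
]

conservative = select_critique(candidates, issue_bonus=0.0)
aggressive = select_critique(candidates, issue_bonus=0.5)
print(len(conservative.issues), len(aggressive.issues))  # 1 3
```

Sweeping a knob like `issue_bonus` traces out a range of behaviors, from terse critiques that rarely raise false alarms to exhaustive ones that catch more bugs at the cost of more spurious complaints.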

How will CriticGPT be integrated?

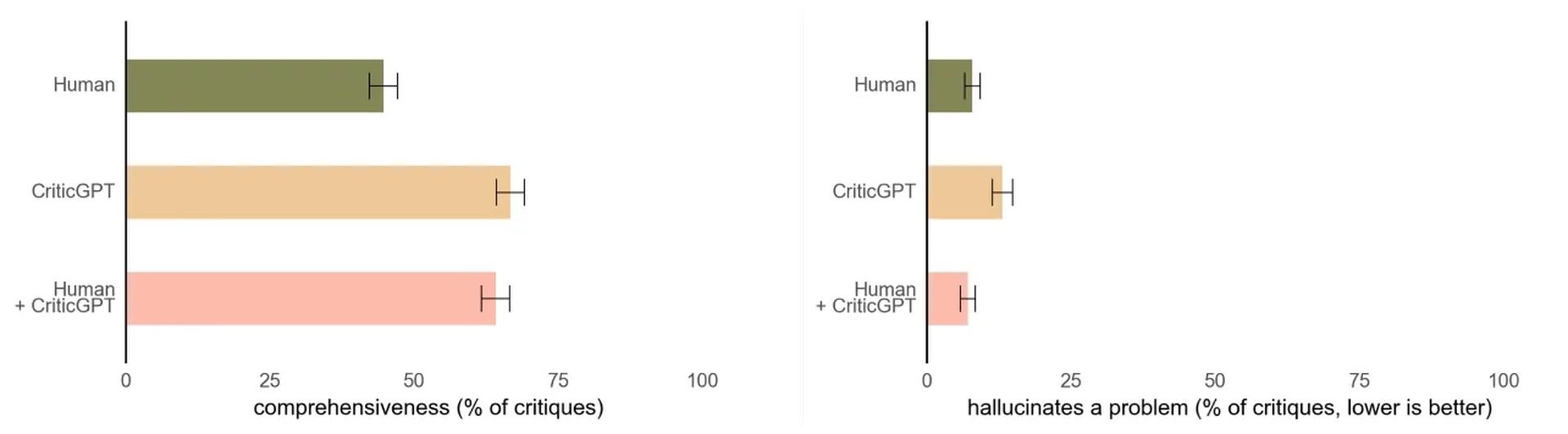

The integration of CriticGPT into the RLHF pipeline has shown promising results. When human trainers use CriticGPT to review ChatGPT’s code, they outperform those without such assistance 60% of the time. This demonstrates the significant boost in effectiveness provided by CriticGPT, making it a crucial component in the training and evaluation of advanced AI systems.

Experiments from OpenAI revealed that trainers preferred critiques from the Human+CriticGPT team over those from unassisted trainers in over 60% of cases. This preference highlights the value of the model in augmenting human skills, leading to more thorough and accurate evaluations of AI responses. By providing explicit AI assistance, CriticGPT enhances the ability of trainers to produce high-quality RLHF data, ultimately contributing to the improvement of ChatGPT and similar models.

Challenges and future directions

Despite its successes, CriticGPT is not without limitations. It was primarily trained on relatively short ChatGPT answers, meaning that its ability to handle long and complex tasks is still under development. Moreover, while the model can help identify single-point errors effectively, real-world mistakes often span multiple parts of an answer. Addressing such dispersed errors will require further advancements in the model’s capabilities.

Another challenge is the occasional occurrence of hallucinations in critiques. These hallucinations can lead trainers to make labeling mistakes, highlighting the need for continued refinement of the model to minimize such issues. Additionally, for extremely complex tasks or responses, even an expert aided by the model may struggle to provide accurate evaluations.

Looking ahead, the goal is to scale the integration of CriticGPT and similar models into the RLHF process, enhancing the alignment and evaluation of increasingly sophisticated AI systems. By leveraging the insights gained from CriticGPT’s development, researchers aim to create even more effective tools for supervising and refining AI responses.

Featured image credit: vecstock/Freepik