Max Kanaskar is an Associate for Strategy& (formerly Booz & Company). He has extensive experience in IT capability development and data management, and has advised companies in transformational data management programs, including data strategy, management and governance. He’s a regular commentator on big data and technology. His views can be found here.

BIG DATA TECHNOLOGY SERIES – PART 3

In the last installment of the Big Data Technology Series, we looked at the first thread in the story of big data technology evolution: the history of database management systems. In this installment, we will look at the second thread in the story: the origins, evolution and adoption of systems and solutions for managerial analysis and decision making. The sophisticated business intelligence and analytic platforms of today are the product of many years of interplay between theory, primarily management thinking on how best to leverage data and information for business effectiveness, and practice, in particular the development of data and information management and delivery solutions and their common uses and adoption. Complex as this story is, this post attempts to identify the key evolutionary points, with our overarching goal of better understanding today’s big data technology trends and landscape.

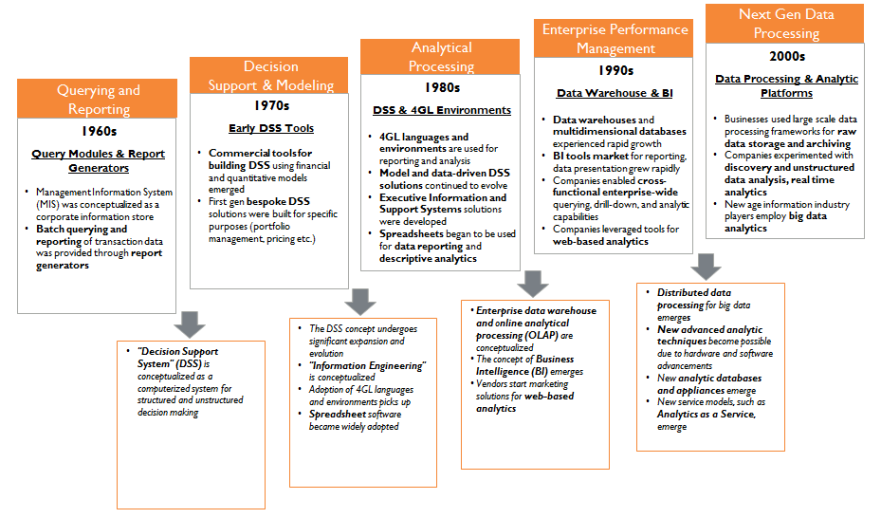

The graphic above summarizes the key points in our discussion.

The use of computers for managerial analysis and decision-making traces its roots back to the 1950s-60s, when the theory of management information systems (MIS) emerged in business management academic and practitioner circles as a body of knowledge and thinking on how best to leverage computers for corporate data management and use. In the view of MIS thinkers, the power of computers in managing and processing data, already evident in the corporate world by that time, was not being fully utilized. They envisioned an environment that elevated the use of computers from clerical, administrative data processing to one that provided a corporate-wide, integrated, interactive “bucket of facts” that managers could dip into for analysis and decision-making. Foundational to this capability was a “data base” or a “data hub”, a place where all corporate-wide data could be stored in an integrated manner.

A lot of this thinking, however, predated the required technology; technology in the 1960s was not sophisticated enough to deliver the clean data integration and interactive delivery that the MIS thinkers had envisioned. The MIS systems of those days were mainframe-based, batch-oriented report generators and querying modules that provided structured periodic reports to managers. As we saw in the previous installment of the Big Data Technology Series, there was file management software in use in the “data processing” department (that is what IT used to be called back then), but those solutions were meant to ease the burden of data analysts and technical staff, and were not oriented toward business managers. Even simple report generation out of the transactional and accounting systems required heavy involvement of the technical staff. The so-called MIS solutions fizzled in the marketplace.

Technology evolved in the 1960s with the arrival of more powerful and cheaper minicomputers, and researchers with access to these computers began implementing solutions for decision support and modelling. Concurrently, MIS theory evolved and expanded to understand human-computer interaction and managerial decision-making, specifically how technology could play a role in it. The confluence of these developments was behind the rise of the concept of Decision Support Systems (DSS) in the late 1960s-early 1970s. A DSS was defined as a computer information system that provided support for unstructured or semi-structured managerial decision-making. Several prominent thinkers and researchers conceptualized and implemented early model-based personal DSS solutions for such applications as product and pricing and portfolio management during the early 1970s (see the Wikipedia page on DSS for a taxonomy of DSS types).

By the early 1980s, a number of researchers and companies had developed interactive information systems that used data and models to help managers analyze semi-structured problems. It was recognized that DSS could be designed to support decision-makers at any level in an organization across operations, financial management and strategic decision-making. DSS theory and thinking expanded in the 1980s, giving rise to such concepts and supporting solutions as Group DSS for organizational decision-making, and Executive Information/Support Systems for a bird’s-eye organizational view for executive management. Advances in artificial intelligence led to the development of expert systems and knowledge-based DSS solutions. The rise of personal computers, databases and spreadsheet software in the 1980s supported the development of these DSS solutions. Spreadsheets in particular began to be used for data reporting, running basic descriptive analytics and understanding trends in data.

The 1980s also marked the beginning of the emergence of structured methodologies for information and data design and engineering. James Martin, the influential author of a series of tomes on Information Engineering, wanted to bring the discipline and rigor of traditional engineering to the design of data and information delivery systems. This was also the time that computer-aided software engineering (CASE) methods and fourth-generation language (4GL) concepts were developed. All these developments reflected the increasing importance of data analysis in the commercial business environment and the need to manage the increasing complexity in a structured and logical manner.

The early 1980s also marked the beginning of the emergence of a new class of DSS solutions, the data-driven DSS, which encompassed solutions that allowed users to quickly look at data, analyze data in a given database, or look across a series of databases. The emergence of data-driven DSS was driven by theory around executive information systems (EIS), which were envisioned as solutions that provide data on critical success factors to top management, and also by technology in the form of relational databases that provided capabilities for data storage and manipulation. The concept of EIS and the technical foundation supporting it went through a number of developments in the 1980s, as new methodologies to measure the state of the organization emerged (e.g. the Balanced Scorecard), and as the technical underpinnings were formalized in new mechanisms (e.g. multi-dimensional data structures and online analytical processing (OLAP)). Data-driven DSS became an important market in their own right, and were loosely rechristened as “business intelligence (BI)” solutions in the late 1980s-early 1990s.

The development of the BI market in the 1990s was driven to a large extent by the relational database vendors, who had undergone growth and achieved strong market presence after the “database wars” in the early part of the decade. As the BI market developed, there was a need to provide infrastructure to manage enterprise-wide data in an integrated and cleansed manner, which gave rise to a market for “data warehouse” solutions, again a market that came to be dominated to a good extent by traditional database vendors. The 1990s also marked the rise of web-based analytics, and a shift in the complexity, scope and frequency of the traditional BI applications of alerting, reporting, and dashboarding. As business operations increased in pace and complexity, BI solutions were required to integrate an ever-increasing variety of data sets on a more real-time basis. Towards the end of the decade, BI moved from the realm of aiding decision-making to actually driving real change in organizations through “enterprise performance management” (EPM), which centered on driving change in organizational processes, management and accountability structures through the capture, analysis and interpretation of data and information across all levels of the organization. Complex event processing and other solutions under the “operational intelligence” umbrella were adopted for real-time analysis, supporting broader event pattern identification and definition in long-running business processes.

In the 2000s, with the rise of unstructured data, the availability of cheap networked computers, and technology advances in networking and storage, “raw data processing platforms” such as Hadoop came to be commonly adopted. New predictive analytics techniques became feasible due to the improving price-performance ratios of hardware. This gave rise to “analytic platforms” (such as AsterData), which provided a hardware and software infrastructure for performing complex descriptive and predictive analytics in a “pre-packaged appliance” form factor. As we will see in the next installment, such analytic platforms are increasingly converging with statistical and machine learning processing solutions to provide a full end-to-end capability for advanced analytics.

In summary, the adoption of BI and decision-support solutions has evolved along three dimensions: 1) from reactive to prescriptive: the batch data reporting of the 1960s was meant to provide a rear-view mirror of what happened, whereas today BI solutions are used to provide recommendations on future actions; 2) from strategic long-range to operational short-term: from using reports and analysis to understand long-term trends to using solutions to provide real-time feedback; and 3) from internal function-oriented to external integrated: from using siloed views of organizational information to driving views that are integrated across functions and organizations. The market for managerial data analysis and decision-making solutions continues to evolve as the cost of technology falls further, and as the business needs around management of data become more varied and complex.

In the next installment of the Big Data Technology Series, we will examine the third thread in the story of big data technology evolution: the origins and history of packages and solutions for statistical processing and data mining.

(Image Credit: KamiPhuc)