“Big Data” has been a major technology trend over the past few years, and the buzz around the term remains strong today. By now, it is widely accepted that the “Big” in Big Data refers not only to the terabytes and petabytes of data to be processed, but more broadly to the complexity of such data. The standard definition of Big Data is now a data management problem that cannot be solved by traditional technology because of a combination of some of the “4 Vs”, four characteristics that describe the complexity of Big Data:

- Volume: The data is too much to be handled by traditional technologies.

- Velocity: The data is coming (streaming) too fast to be ingested by traditional technologies.

- Variety: There are too many different sources or formats of data that cannot be integrated by traditional technologies.

- Veracity: The inherent uncertainty in the data cannot be handled by traditional technologies.

Big Data can create tremendous value for the companies that put it to use. An often-overlooked factor, however, is that a bunch of hard drives full of data is useless (and thus carries no value) by itself. What really drives the value of data is the analysis that companies conduct on it in order to derive valuable insights. According to Gartner, companies that employ advanced forms of analysis on their data can get a higher return on investment and are expected to grow more than their competitors. A key trend is that not just the data is becoming more complex, but increasingly the analysis itself. Along the lines of the four “Vs”, let us try to characterize the complexity of current “Big Analysis” requirements using four “Is”:

- In-situ: Analytics operate directly on the data where it sits, without requiring an expensive process of ETL (Extract, Transform, Load).

- Iterative: Analysis iterates over the data several times in order to build and train a model of the data rather than just extract data summaries (often referred to as predictive versus descriptive analysis).

- Incremental: Analysis needs to maintain said models under high data arrival rates, updating them based on previous results rather than recomputing them from scratch.

- Interactive: Analysts work interactively with data, formulating the next question depending on the results of the previous one.
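To make the difference between descriptive, iterative, and incremental analysis more concrete, here is a minimal single-machine sketch in plain Python. It is only an illustration under simplifying assumptions: the function and class names (`descriptive_summary`, `fit_slope_iteratively`, `RunningMean`) are hypothetical and do not belong to any of the systems discussed in this article, and the "model" is just a one-parameter line `y = w * x`.

```python
from typing import Iterable, List, Tuple


def descriptive_summary(values: Iterable[float]) -> float:
    """Descriptive analysis: a single pass over the data yields a summary (the mean)."""
    total, count = 0.0, 0
    for value in values:
        total += value
        count += 1
    return total / count


def fit_slope_iteratively(points: List[Tuple[float, float]],
                          passes: int = 200,
                          learning_rate: float = 0.01) -> float:
    """Iterative analysis: repeated passes over the data refine a model y = w * x."""
    w = 0.0
    for _ in range(passes):
        # Every pass reads the whole data set again to compute the gradient
        # of the mean squared error with respect to w.
        gradient = sum(2 * (w * x - y) * x for x, y in points) / len(points)
        w -= learning_rate * gradient
    return w


class RunningMean:
    """Incremental analysis: update the result per arriving record, without rescanning."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, value: float) -> float:
        # Fold the new record into the previous result.
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean
```

The iterative function is the expensive case for a batch system, because each pass touches all of the data again, while the incremental class only ever touches the newest record and the previous result.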

Apache Hadoop is the major technology available today for Big Data management. Does Hadoop live up to the challenge of Big Analysis? The answer is yes and no. Hadoop has evolved well beyond the original system modeled after Google’s MapReduce research paper. A Hadoop installation today is a collection of several distinct products, ranging from data storage, through tools for stream processing and interactive querying, to data visualization, reporting, and monitoring tools. The original Hadoop MapReduce system performs poorly on many emerging use cases, and new technologies are on the rise to fill this gap.

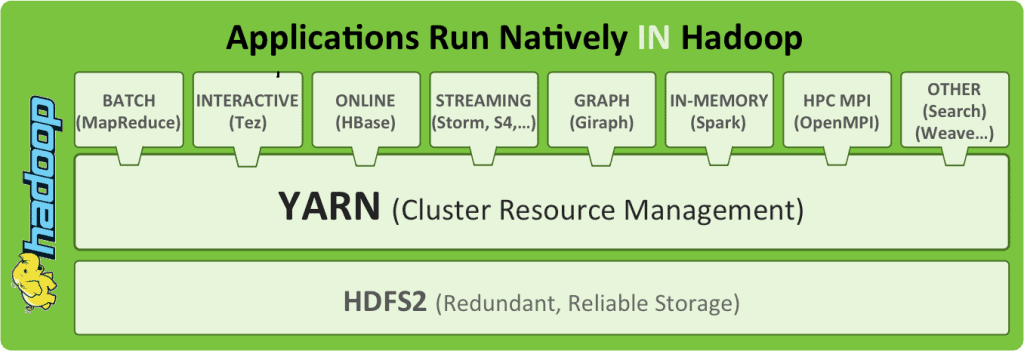

Broadly speaking, “Hadoop and co” is today a diverse set of data processing technologies that interoperate with each other on the same data. The figure (from Hortonworks) gives an example of such a Hadoop 2 installation. All data is stored in a reliable common data storage layer called the Hadoop Distributed File System, and cluster resources are managed by a common resource manager called YARN (which stands for Yet Another Resource Negotiator, a tongue-in-cheek naming convention common in developer circles). On top of that we see a diversification of tools that support different kinds of analysis. Because all of these tools operate on data stored in a single place, the “in situ” analysis requirement is satisfied.

In the analysis platforms themselves, we see several tools that target different types of analysis.

- Hadoop MapReduce itself is still the most robust and widely used tool for very large-scale batch data processing.

- Apache Storm (http://storm.incubator.apache.org/) was created for applications that process data “on the move,” rather than in batches. It was first open sourced by Twitter.

- Apache Tez (http://hortonworks.com/hadoop/tez/), Apache Drill (http://incubator.apache.org/drill/) and Impala from Cloudera (http://www.cloudera.com/content/cloudera/en/products-and-services/cdh/impala.html) are projects that aim to offer interactive SQL or SQL-style querying on top of Hadoop data. They are inspired by Google’s Dremel system and, interestingly, by relational database techniques such as columnar storage, the very technology that Hadoop initially competed with.

- Apache Giraph (https://giraph.apache.org/) was inspired by Google’s Pregel system and is a good match for applications that need to analyze graph data.

- Apache Spark (https://spark.incubator.apache.org/) was initially created at UC Berkeley. Spark is based on the premise that if most data fits in main memory, and operations on data communicate via main memory, many Big Data applications can become much faster. As such, Spark covers a wide variety of the aforementioned use cases with a single system.
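For readers who have not seen the batch model behind Hadoop MapReduce, the following is a minimal in-memory sketch of its three phases (map, shuffle, reduce) using the classic word-count example. It is plain single-process Python and does not use the Hadoop API; the function names `map_phase`, `shuffle`, `reduce_phase`, and `word_count` are hypothetical and chosen only for illustration. A real installation would distribute each phase across many machines and exchange intermediate results through storage and the network.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map: turn one input record into zero or more (key, value) pairs."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all values that share the same key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: collapse the values of one key into a single result."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run the three phases one after another over an in-memory data set."""
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())
```

Calling `word_count(["big data", "Big analysis"])` counts "big" twice and the other two words once. An iterative analysis would have to run such a job once per pass, which is one reason the original MapReduce system struggles with the newer use cases described above.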

While there is a wealth of choice already, the above list is by no means complete. A plethora of other tools and systems are springing up. While this indicates a healthy market and a fast pace of innovation, choosing the right platform for the problem at hand can be mind-boggling for many companies. Each of these platforms has its own programming interface, and programs written for one of them cannot be transferred to another. Add to this the problem of managing a Hadoop installation in the first place and the problem of finding qualified people to conduct the data analysis, which is widely considered one of the major obstacles that many companies face in realizing their Big Data strategy.

A solution to this problem is yet to be seen. Some systems are developing compatibility layers so that programs written for one can be transferred to another. While this may keep this “Tower of Babel” of technologies from collapsing, a need is emerging for more general-purpose platforms that, having learned the lessons of history so far, can cover a wide variety of use cases better than the original Apache Hadoop design. As Mike Olson, CSO of Cloudera, recently put it: “What the Hadoop ecosystem needs is a successor system that is more powerful, more flexible and more real-time than MapReduce. While not every current (or maybe even future) application will abandon the MapReduce framework of today, new applications could use such a general-purpose engine to be faster and to do more than is possible with MapReduce.”

The evolution in this space is history that is waiting to be written. Here in Berlin, we are writing our part of this history with the Stratosphere (www.stratosphere.eu) open source platform for data analytics. Stratosphere is a general-purpose platform that can cover a wealth of analytical use cases and is compatible with Hadoop installations. It is based on the premise that by combining technological ideas from Hadoop MapReduce, parallel databases, and compilers, we can create an efficient yet very powerful system for the next generation of data analysis needs.

Dr. Kostas Tzoumas is a post-doctoral researcher at TU Berlin. Kostas is currently co-leading the Stratosphere project, which develops an open-source Big Data Analytics platform, the only one of its kind in Europe. His research is focused on system architectures, programming models, processing and optimization for Big Data. Kostas has published extensively on the topic in leading database conferences such as ICDE, SIGMOD and CIKM. He regularly serves as a Program Committee member for leading conferences such as ACM SIGMOD, VLDB, and ICDE, and initiated the workshop on Data Analytics in the Cloud. Before joining TU Berlin, Kostas received his PhD from Aalborg University, Denmark, was a visiting researcher at the University of Maryland, College Park, and received his Diploma in Electrical and Computer Engineering from the National Technical University of Athens, Greece.

Image credit: Raymond Bryson