To say that the cloud computing market is exploding would be an understatement. In July, we heard multiple reports supporting the proclamation of cloud as the next revolution in the computing industry. IDC claimed the cloud computing market in EMEA would be worth $4 billion by the close of the year. In the UK, 78% of organisations have “formally” adopted one or more cloud-based services. Fujitsu recently announced that they’ve set aside $2 billion to expand their cloud portfolio. Evidently, the cloud is big business.

The market continues to be dominated by Amazon Web Services, with Microsoft and IBM making serious inroads. But there’s one industry giant missing from this list: Google. In Q2, Microsoft’s cloud infrastructure revenue grew by 164%; Google lagged at only 47%. Yet Google have a secret weapon in their cloud portfolio, whose release may send their market share skyrocketing: Google Cloud Dataflow.

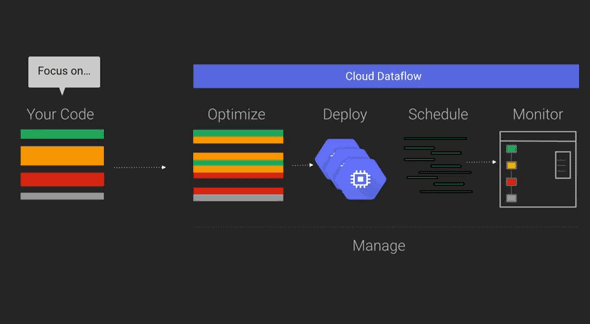

What is Google Cloud Dataflow?

- It’s multifunctional: As a generalisation, most data technologies have one speciality, like batch processing or lightning-fast analytics. Google Cloud Dataflow counts ETL, batch processing and real-time streaming analytics amongst its capabilities.

- It aims to address the performance issues of MapReduce when building pipelines: Google was the first to develop MapReduce, and the programming model has since become a core component of Hadoop. Cloud Dataflow has now largely replaced MapReduce at Google, which the company apparently stopped using “years ago”, according to Urs Hölzle, Google’s Senior VP of Technical Infrastructure.

- It’s good with big data: Hölzle stated that MapReduce performance started to decline sharply when handling multi-petabyte datasets. Cloud Dataflow apparently offers much better performance on large datasets.

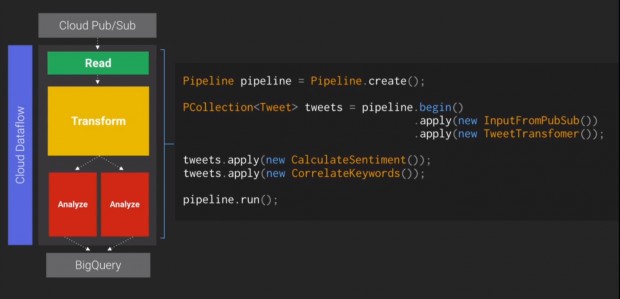

- The coding model is pretty straightforward: The Google blog post describes the underlying service as “language-agnostic”, but the first SDK is for Java. All datasets are represented as PCollections (“parallel collections”). The SDK includes a “rich” library of PTransforms (parallel transforms), including ParDo (similar to the Map and Reduce functions, and to WHERE in SQL) and GroupByKey (similar to the shuffle step of MapReduce, and to GROUP BY and JOIN in SQL). A starter set of these transforms can be used out of the box, including Top, Count and Mean.

- It “evolved” from Flume and MillWheel: Flume lets you develop and run parallel pipelines for data processing. MillWheel allows you to build low-latency data-processing applications.
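To make the coding model described above a little more concrete, here is a minimal sketch in plain Python. It does not use the Dataflow SDK (which at the time of writing is Java-only); the functions `par_do` and `group_by_key` are hypothetical stand-ins that only mimic the shape of ParDo and GroupByKey, and tuples stand in for immutable parallel collections. Nothing here runs in parallel.

```python
from collections import defaultdict


def par_do(collection, fn):
    """Stand-in for ParDo: apply fn to each element; fn may yield 0..n outputs."""
    return tuple(out for element in collection for out in fn(element))


def group_by_key(pairs):
    """Stand-in for GroupByKey: gather all values sharing a key (the 'shuffle')."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return tuple((key, tuple(values)) for key, values in groups.items())


def split_words(line):
    # Map-like step: emit a (word, 1) pair for every word in the line.
    for word in line.lower().split():
        yield (word, 1)


def sum_counts(group):
    # Reduce-like step: collapse each group of ones into a single count.
    key, values = group
    yield (key, sum(values))


# Tuples are immutable, loosely mirroring how each transform produces a
# new collection instead of modifying its input.
lines = ("the cloud is big", "big data in the cloud")
pairs = par_do(lines, split_words)
grouped = group_by_key(pairs)
counts = dict(par_do(grouped, sum_counts))
```

In the real service, a built-in transform such as Count would presumably replace the hand-written grouping and summing steps; the sketch spells them out only to show how the pieces relate.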

What Does Cloud Dataflow Mean For Existing Google Cloud Customers?

Dataflow is designed to complement the rest of Google’s existing cloud portfolio. If you’re already using Google BigQuery, Dataflow will allow you to clean, prep and filter your data before it gets written to BigQuery. Dataflow can also read from BigQuery if you want to join your BigQuery data with other sources, and the joined result can be written back to BigQuery.
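As a rough illustration of that clean, filter and join flow, the sketch below uses plain Python lists and dictionaries. It makes no calls to BigQuery or Dataflow; the record fields (`user`, `clicks`, `country`) and the `clean` helper are made up for the example.

```python
def clean(record):
    """Normalise one raw record; return None when it should be dropped."""
    user = record.get("user", "").strip().lower()
    if not user:
        return None  # filter out records with no usable user name
    return {"user": user, "clicks": int(record.get("clicks", 0))}


# Hypothetical raw input, as it might arrive before loading into a warehouse.
raw_events = [
    {"user": " Ana ", "clicks": "3"},
    {"user": "", "clicks": "7"},
    {"user": "bob"},
    {"user": "Cy", "clicks": "1"},
]

# Clean and filter step.
cleaned = [r for r in map(clean, raw_events) if r is not None]

# Join step: enrich each record from a second (hypothetical) source keyed by user.
countries = {"ana": "PT", "bob": "UK"}
joined = [dict(r, country=countries.get(r["user"], "unknown")) for r in cleaned]
```

The `joined` list is the kind of tidy, enriched output you would then hand to a warehouse loader.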

Are Google the Only Major Players Tapping into Data Flow?

Facebook have already developed a data flow architecture called Flux. Facebook’s video explaining Flux is a pretty good example of a data flow architecture, and demonstrates theirs at work within the Facebook messaging system. As the video explains, Flux “avoids cascading effects by preventing nested updates”. Simply put, Flux has a unidirectional data flow, meaning additional actions aren’t triggered until the data layer has completely finished processing the current one.
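The idea of preventing nested updates can be sketched in a few lines of Python. This is an illustration only, not Facebook’s Flux code: the `Dispatcher` class, the store functions and the action names are all hypothetical, and this version defers a nested action to a queue until every store has finished with the current one.

```python
from collections import deque


class Dispatcher:
    """Hypothetical one-way dispatcher; nested dispatches are deferred."""

    def __init__(self):
        self._stores = []          # callbacks registered by stores
        self._pending = deque()    # actions waiting for their turn
        self._dispatching = False  # True while an action is being processed

    def register(self, callback):
        self._stores.append(callback)

    def dispatch(self, action):
        self._pending.append(action)
        if self._dispatching:
            return  # nested call: wait until the current action is done
        self._dispatching = True
        try:
            # Only the outermost call drains the queue, one action at a time.
            while self._pending:
                current = self._pending.popleft()
                for callback in self._stores:
                    callback(current)
        finally:
            self._dispatching = False


log = []
dispatcher = Dispatcher()


def message_store(action):
    log.append(("message_store", action))
    # This store tries to trigger a follow-up action in the middle of an update.
    if action == "new_message":
        dispatcher.dispatch("mark_thread_unread")


def unread_count_store(action):
    log.append(("unread_count_store", action))


dispatcher.register(message_store)
dispatcher.register(unread_count_store)
dispatcher.dispatch("new_message")
```

Both stores see `new_message` before either sees `mark_thread_unread`, so no store is ever updated halfway through another action.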

FlumeJava, from which Cloud Dataflow evolved, is also concerned with creating easy-to-use, efficient parallel pipelines. At Flume’s core are “a couple of classes that represent immutable parallel collections, each supporting a modest number of operations for processing them in parallel. Parallel collections and their operations present a simple, high-level, uniform abstraction over different data representations and execution strategies.”

Many see Cloud Dataflow as a competitor to Kinesis, a managed service for real-time data streaming developed by industry leaders Amazon Web Services. Kinesis allows you to write applications that process data in real time, and works in conjunction with other AWS products such as Amazon Simple Storage Service (Amazon S3), Amazon DynamoDB and Amazon Redshift.

Does Google Cloud Dataflow Mean the Death of Hadoop and MapReduce?

Since Cloud Dataflow is being used in place of MapReduce at Google, and Google have marketed Cloud Dataflow as having “evolved” from MapReduce, many have been proclaiming the death of MapReduce, and with it Hadoop, of which MapReduce is the core component.

On the subject, Ovum analyst Tony Baer told InfoWorld that Cloud Dataflow forms “part of an overriding trend where we are seeing an explosion of different frameworks and approaches for dissecting and analyzing big data. Where once big data processing was practically synonymous with MapReduce, you are now seeing frameworks like Spark, Storm, Giraph, and others providing alternatives that allow you to select the approach that is right for the analytic problem.”

It is true that MapReduce use is in decline. But that’s why Hadoop 2.0 introduced YARN, which allows you to bypass MapReduce and run multiple other applications on Hadoop, all sharing common cluster management. One application that’s gained considerable attention is Spark, which, as InfoWorld states, can perform map and reduce in-memory, making it much faster than MapReduce. Such applications can run on top of Hadoop, so whilst there are now many alternatives to MapReduce, that doesn’t mean Hadoop is dead. Many current Hadoop users store their data on-premise, and it’s unlikely that a considerable number of them are going to migrate all of it to the cloud to use Cloud Dataflow. In short: Hadoop is safe for now.

This post will be updated as and when further updates about Cloud Dataflow are announced, to give you an up-to-date guide on advancements ahead of its release.

Eileen McNulty-Holmes – Editor

Eileen has five years’ experience in journalism and editing for a range of online publications. She has a degree in English Literature from the University of Exeter, and is particularly interested in big data’s application in humanities. She is a native of Shropshire, United Kingdom.

Email: eileen@dataconomy.com