Microsoft Corp. has expanded its Phi line of open-source language models with two new models designed for multimodal processing and hardware efficiency: Phi-4-mini and Phi-4-multimodal.

Phi-4-mini and Phi-4-multimodal features

Phi-4-mini is a text-only model that incorporates 3.8 billion parameters, enabling it to run efficiently on mobile devices. It is based on a decoder-only transformer architecture, which analyzes only the text preceding a word to determine its meaning, thus enhancing processing speed and reducing hardware requirements. Furthermore, Phi-4-mini utilizes a performance optimization technique known as grouped query attention (GQA) to decrease hardware usage associated with its attention mechanism.
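
To make the two ideas above concrete, here is a minimal, illustrative sketch of causal (decoder-only) attention combined with grouped query attention. It is not Microsoft's implementation: the function name, tensor layout, and head counts are assumptions chosen for readability, and plain Python lists stand in for real tensors.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def grouped_query_attention(q, k, v):
    """Illustrative GQA with a causal mask (hypothetical helper).

    q:    [n_q_heads][seq][dim]
    k, v: [n_kv_heads][seq][dim]

    Each group of n_q_heads // n_kv_heads query heads shares one
    key/value head, so fewer K/V tensors have to be stored than in
    standard multi-head attention.
    """
    n_q_heads, n_kv_heads = len(q), len(k)
    assert n_q_heads % n_kv_heads == 0, "query heads must split evenly into groups"
    group_size = n_q_heads // n_kv_heads
    dim = len(q[0][0])

    out = []
    for h in range(n_q_heads):
        kv = h // group_size  # index of the K/V head shared by this group
        head_out = []
        for t, q_t in enumerate(q[h]):
            # Causal mask: position t attends only to positions 0..t.
            scores = [dot(q_t, k[kv][s]) / math.sqrt(dim) for s in range(t + 1)]
            weights = softmax(scores)
            head_out.append([
                sum(w * v[kv][s][d] for s, w in enumerate(weights))
                for d in range(dim)
            ])
        out.append(head_out)
    return out
```

With four query heads and two key/value heads, for example, heads 0 and 1 read the same keys and values, as do heads 2 and 3, which halves the key/value storage relative to giving every query head its own pair.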

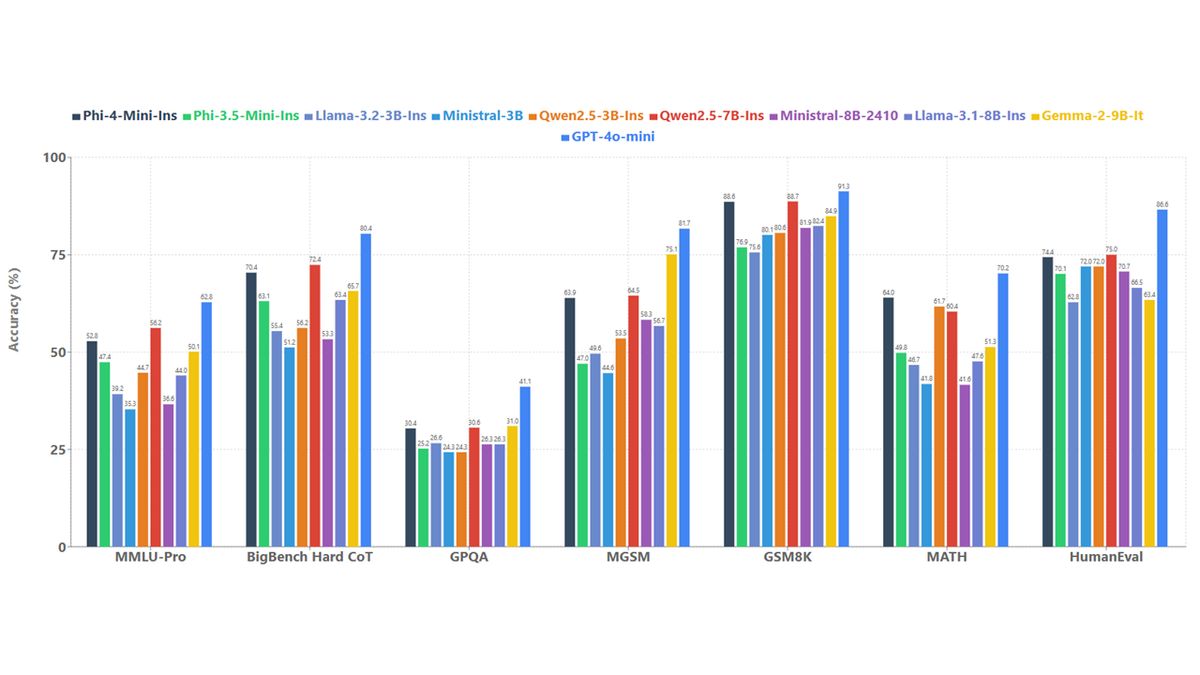

Phi-4-mini can generate text, translate documents, and execute actions within external applications. Microsoft says the model excels at tasks requiring complex reasoning, such as math and coding challenges, and that in internal benchmark tests it achieved significantly better accuracy than other similarly sized language models.

The second model, Phi-4-multimodal, is an enhanced version of Phi-4-mini, boasting 5.6 billion parameters. It is capable of processing text, images, audio, and video inputs. This model was trained using a new technique called Mixture of LoRAs, which optimizes the model’s capabilities for multimodal processing without extensive modifications to its existing weights.
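
The idea of adding capabilities without rewriting existing weights can be sketched with low-rank adapters. The example below is a simplified illustration, not Microsoft's training code: the class names, the per-modality routing, and the tiny matrices are all assumptions. It shows a frozen base weight plus a small low-rank update that is applied only when a matching adapter exists.

```python
def matvec(m, x):
    """Multiply matrix m (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


class LoRAAdapter:
    """Low-rank update B @ A, scaled (hypothetical helper class)."""

    def __init__(self, a, b, scale=1.0):
        self.a = a  # shape: rank x d_in
        self.b = b  # shape: d_out x rank
        self.scale = scale

    def delta(self, x):
        return [self.scale * y for y in matvec(self.b, matvec(self.a, x))]


class MixtureOfLoRAsLayer:
    """One linear layer with a frozen base weight and per-modality adapters.

    Routing by a modality label is an assumption made for illustration.
    """

    def __init__(self, base_weight, adapters):
        self.base_weight = base_weight  # never modified
        self.adapters = adapters        # e.g. {"vision": ..., "audio": ...}

    def forward(self, x, modality="text"):
        y = matvec(self.base_weight, x)
        adapter = self.adapters.get(modality)
        if adapter is None:
            # No adapter for this modality: use the frozen base weights only.
            return y
        return [yi + di for yi, di in zip(y, adapter.delta(x))]
```

Because each adapter has rank far smaller than the layer width, it adds relatively few parameters, and inputs that use no adapter see exactly the original layer.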

Microsoft conducted benchmark tests on Phi-4-multimodal, where it earned an average score of 72 in visual data processing, just shy of OpenAI’s GPT-4, which scored 73. Google’s Gemini 2.0 Flash led with a score of 74.3. In combined visual and audio tasks, Phi-4-multimodal outperformed Gemini 2.0 Flash “by a large margin” and surpassed InternOmni, which is specialized for multimodal processing.

Both Phi-4-multimodal and Phi-4-mini will be made available through Hugging Face under the MIT license, which permits commercial use. Developers can also access the models through Azure AI Foundry and the NVIDIA API Catalog.

Phi-4-multimodal is particularly designed to facilitate natural and context-aware interactions by integrating multiple input types into a single processing model. It includes enhancements such as a larger vocabulary, multilingual capabilities, and improved computational efficiency for on-device execution.

Phi-4-mini delivers impressive performance in text-based tasks, including reasoning and function calling, which lets it interact effectively with structured programming interfaces. The model supports sequences of up to 128,000 tokens.
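
Function calling generally means the model emits a structured request that the host application parses and executes. The sketch below shows only the application side, under stated assumptions: the JSON shape (`name` and `arguments`), the `get_weather` tool, and the `dispatch_tool_call` helper are hypothetical and are not Phi-4-mini's documented format.

```python
import json


def get_weather(city):
    """Stand-in tool; a real application would call an actual service."""
    return {"city": city, "forecast": "sunny"}


# Registry of functions the model is allowed to call (hypothetical).
TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(model_output, tools=TOOLS):
    """Parse a JSON tool call emitted by a model and run the matching function."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in tools:
        raise ValueError(f"unknown tool: {name}")
    return tools[name](**call.get("arguments", {}))
```

In use, the application would pass the model's raw output to `dispatch_tool_call` and feed the returned value back to the model as context for its next response. Checking the tool name against an explicit registry keeps the model from invoking arbitrary code.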

Furthermore, both models have undergone extensive security and safety testing, led by Microsoft’s internal Azure AI Red Team (AIRT), which assessed the models using comprehensive evaluation methodologies that address current trends in cybersecurity, fairness, and user safety.

Customization and ease of deployment are additional advantages of these models, as their smaller sizes allow them to be fine-tuned for specific tasks with relatively low computational demands. Examples of tasks suitable for fine-tuning include speech translation and medical question answering.

For further details on the models and their applications, developers are encouraged to refer to the Phi Cookbook available on GitHub.

Featured image credit: Microsoft