Google has set a new benchmark with the unveiling of its latest creation, Google Gemini 1.5 Pro. This AI model builds upon the success of its predecessor, Gemini 1.0, delivering even greater efficiency, versatility, and long-context understanding.

Let’s take a deep dive into the groundbreaking features of Google Gemini 1.5 Pro and explore the transformative potential it holds for developers, enterprises, and everyday users.

What is Google Gemini 1.5 Pro?

Google Gemini 1.5 Pro is a mid-sized multimodal AI model optimized for scaling across a wide range of tasks. According to Google, it performs at a level similar to 1.0 Ultra, its largest model to date.

Its most distinctive feature, however, is experimental support for very long contexts.

What is long-context understanding?

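In simple terms, long-context understanding refers to an AI model’s ability to process and reason over much larger amounts of information within a single prompt. A model’s context window, measured in tokens (the small units of text or other data it processes), caps how much it can take in at once. Traditionally, those windows have been small enough that long inputs had to be split up or trimmed.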

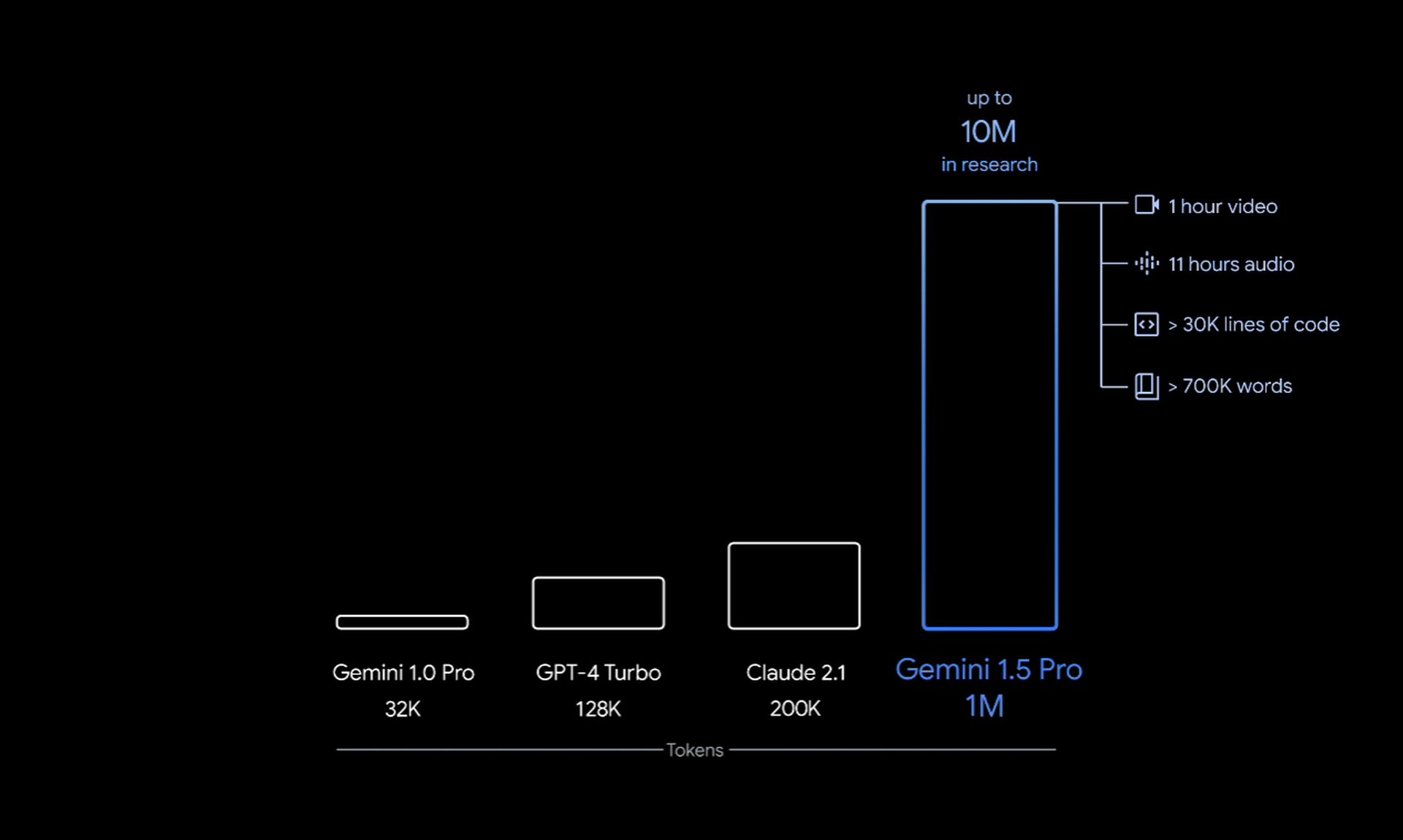

According to the blog post by Google, Google Gemini 1.5 Pro shatters those limitations with its standard context window of 128,000 tokens and an experimental context window of a whopping 1 million tokens!

Google Gemini 1.5 Pro vs Google Gemini 1.0

Google’s Gemini models are meant to make its products and services more helpful. Gemini 1.5 continues this work with significant improvements over its predecessor, Gemini 1.0.

Let’s analyze their key differences:

Architecture

- Gemini 1.0: Traditional Transformer architecture

- Gemini 1.5: Uses a Mixture-of-Experts (MoE) architecture. An MoE model is divided into smaller “expert” neural networks, and depending on the input it learns to activate only the most relevant experts, which makes it more efficient to train and serve
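To make the routing idea concrete, here is a toy top-k gated MoE layer in plain Python. It is a generic textbook-style sketch, not Gemini’s implementation (Google has not published those routing details); the linear gate, the `top_k=2` default and the scaling “experts” are all illustrative assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, top_k=2):
    """Toy sparse Mixture-of-Experts layer (illustrative only).

    x            -- input vector (list of floats)
    gate_weights -- one gating weight vector per expert, same length as x
    experts      -- list of callables mapping a vector to a vector of len(x)
    top_k        -- how many experts are actually run for this input

    Returns (output_vector, indices_of_experts_that_ran).
    """
    # Gate score per expert: dot product of its gating vector with the input.
    scores = [sum(w * xi for w, xi in zip(wv, x)) for wv in gate_weights]
    # Sparse activation: keep only the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Normalise the chosen experts' scores into mixing weights.
    mix = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for weight, i in zip(mix, chosen):
        y = experts[i](x)  # experts outside `chosen` are never evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen


# Hypothetical setup: four toy "experts" that just scale their input.
experts = [lambda v, k=k: [k * vi for vi in v] for k in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [-1.0, 0.0]]
output, used = moe_forward([1.0, 0.0], gate_weights, experts, top_k=2)
print(used)  # [2, 0]: only two of the four experts ran
```

In a real MoE Transformer the experts are typically feed-forward sub-networks inside each layer and the gate is trained jointly with them; the point of the sketch is only that the work done per input scales with `top_k`, not with the total number of experts.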

Context window

- Gemini 1.0: Standard context window of 32,000 tokens

- Gemini 1.5:

  - Standard context window of 128,000 tokens (already a 4x increase)

  - Experimental 1-million-token context window for early testers
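The practical difference between these window sizes is easiest to see with a little arithmetic. The sketch below uses the article’s round figures (the exact Gemini 1.0 limit may differ slightly) and a hypothetical 900,000-token document, and counts how many separate prompts each window would need if the input had to be split into non-overlapping chunks. It ignores tokenizer details and the tokens consumed by instructions; `prompts_needed` is a hypothetical helper, not part of any Gemini API.

```python
import math

# Context window sizes (in tokens) as quoted in this article.
CONTEXT_WINDOWS = {
    "Gemini 1.0 (standard)": 32_000,
    "Gemini 1.5 Pro (standard)": 128_000,
    "Gemini 1.5 Pro (experimental)": 1_000_000,
}


def prompts_needed(document_tokens, window_tokens, reserved_for_output=0):
    """Minimum number of separate prompts needed to feed a document through a
    window of window_tokens, with no overlap between chunks.
    reserved_for_output leaves room in each window for the model's reply."""
    usable = window_tokens - reserved_for_output
    if usable <= 0:
        raise ValueError("window too small for the reserved output budget")
    return math.ceil(document_tokens / usable)


baseline = CONTEXT_WINDOWS["Gemini 1.0 (standard)"]
doc = 900_000  # hypothetical document size in tokens
for name, size in CONTEXT_WINDOWS.items():
    print(f"{name}: {size / baseline:g}x the 1.0 window, "
          f"{prompts_needed(doc, size)} prompt(s) for a {doc:,}-token document")
```

With these numbers the document needs 29 prompts at 32,000 tokens, 8 at 128,000 tokens and a single prompt at 1 million tokens, which is why a bigger window matters for reasoning across the whole input at once.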

Understanding large inputs

Gemini 1.5’s huge context window empowers it to process vast amounts of data in one go:

- Documents: Over 700,000 words (Google’s demos include reasoning over the 402-page Apollo 11 mission transcript)

- Video: Up to 1 hour of footage

- Audio: Up to 11 hours of content

- Code: Codebases in excess of 30,000 lines
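One way to read the figures in the list above is as rough exchange rates between media and tokens. The sketch below back-calculates per-unit rates under a loud assumption: that each headline figure (about 1 hour of video, 11 hours of audio, 700,000 words, 30,000 lines of code) corresponds to a full 1-million-token window. These are illustrative estimates, not Google’s official per-modality token rates, and the lines-of-code rate is especially rough given that the article also cites 100,000-line prompts. `estimate_tokens` and `fits` are hypothetical helpers.

```python
# ASSUMPTION: each headline figure fills one 1,000,000-token window.
# The derived rates are back-of-the-envelope estimates, not official numbers.
WINDOW = 1_000_000
RATES = {
    "video_seconds": WINDOW / 3_600,         # ~1 hour of video
    "audio_seconds": WINDOW / (11 * 3_600),  # ~11 hours of audio
    "words": WINDOW / 700_000,               # ~700,000 words
    "code_lines": WINDOW / 30_000,           # ~30,000 lines of code
}


def estimate_tokens(inputs):
    """Estimate total tokens for a dict such as {"words": 50_000, "video_seconds": 600}."""
    return sum(RATES[kind] * amount for kind, amount in inputs.items())


def fits(inputs, window=WINDOW):
    """True if the estimated token count fits within the given window."""
    return estimate_tokens(inputs) <= window


# Hypothetical mixed prompt: a 30-minute video plus a 200,000-word document.
request = {"video_seconds": 30 * 60, "words": 200_000}
print(round(estimate_tokens(request)))  # 785714
print(fits(request))                    # True
print(fits(request, window=128_000))    # False
```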

Multimodal capabilities

While Gemini 1.0 exhibited strength across multiple modalities, 1.5 pushes the limits further:

- Video analysis: Can understand intricate plot points and subtle details in long videos (e.g., a 44-minute silent Buster Keaton film)

- Code reasoning: Given prompts with more than 100,000 lines of code, it can find problems, suggest revisions, and explain how different parts of the code work

- Language translation: Given a grammar manual for Kalamang, a language with fewer than 200 speakers worldwide, 1.5 Pro learned to translate English to Kalamang at a level similar to a person learning from the same material
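The code-reasoning use case starts with getting an entire codebase into one prompt. The helper below is a hypothetical sketch: `pack_codebase`, the `### File:` header format and the optional line budget are illustrative assumptions, not part of any Gemini API. It concatenates matching source files with their relative paths and counts lines so the result can be compared with the sizes quoted in this article; sending the text to the model is left out.

```python
import tempfile
from pathlib import Path


def pack_codebase(root, suffixes=(".py",), max_lines=None):
    """Concatenate source files under `root` into one prompt-ready string.

    Each file is preceded by a '### File: <relative path>' header so the model
    can refer to files by name. If `max_lines` is given, packing stops before
    the first file that would push the total line count past it.
    Returns (packed_text, total_lines_included).
    """
    root = Path(root)
    files = sorted(p for p in root.rglob("*") if p.is_file() and p.suffix in suffixes)
    parts, total = [], 0
    for path in files:
        source = path.read_text(encoding="utf-8", errors="replace")
        n_lines = len(source.splitlines())
        if max_lines is not None and total + n_lines > max_lines:
            break
        parts.append(f"### File: {path.relative_to(root).as_posix()}\n{source}")
        total += n_lines
    return "\n\n".join(parts), total


# Demo on a throwaway directory with hypothetical files.
with tempfile.TemporaryDirectory() as demo_dir:
    Path(demo_dir, "app.py").write_text("x = 1\ny = 2\n")
    Path(demo_dir, "pkg").mkdir()
    Path(demo_dir, "pkg", "util.py").write_text("def f():\n    return 42\n")
    Path(demo_dir, "README.txt").write_text("not source code\n")
    packed, line_count = pack_codebase(demo_dir)
    _, capped_count = pack_codebase(demo_dir, max_lines=3)

print(line_count, capped_count)  # 4 2
```

Keeping the file paths in the packed text is a deliberate choice: it lets the model point at specific files when it suggests revisions.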

Performance

Gemini 1.5 Pro:

- Outperforms Gemini 1.0 Pro on 87% of the benchmarks Google uses to develop its large language models

- Shows performance similar to the larger Gemini 1.0 Ultra

- Maintains accuracy as its context window widens

- Exhibits “in-context learning” (adapts quickly to new information within a prompt)
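A common way to check whether accuracy holds as the context grows is a “needle in a haystack” test, which Google also used when evaluating 1.5 Pro: hide one fact at a chosen depth inside long filler text, then ask the model to retrieve it. The harness below is a minimal, hypothetical sketch (the function names, filler text and substring-match scoring are all assumptions), and the actual model call is left out.

```python
def build_haystack(needle, filler_sentence, n_sentences, depth):
    """Return n_sentences copies of filler_sentence with `needle` inserted at a
    relative depth between 0.0 (very start) and 1.0 (very end)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    sentences = [filler_sentence] * n_sentences
    sentences.insert(round(depth * n_sentences), needle)
    return " ".join(sentences)


def make_prompt(haystack, question):
    """Wrap the long context and the retrieval question into one prompt."""
    return f"{haystack}\n\nUsing only the text above, answer: {question}"


def score(answer, expected):
    """Pass/fail check: does the model's answer mention the expected fact?"""
    return expected.lower() in answer.lower()


# Hypothetical needle and filler; sending `prompt` to a model is not shown.
needle = "The secret ingredient in the recipe is cardamom."
filler = "The sky was clear that day."
haystack = build_haystack(needle, filler, n_sentences=1_000, depth=0.5)
prompt = make_prompt(haystack, "What is the secret ingredient in the recipe?")
print(score("It is Cardamom.", "cardamom"))  # True
```

A meaningful test repeats this across many haystack lengths and depths and records the pass rate for each combination.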

Safety and ethics

Google says its models are developed in line with its AI Principles and safety policies. Both Gemini 1.0 and 1.5 undergo extensive ethics and safety evaluations to reduce potential harms, covering areas such as content safety and representational harms. For 1.5 Pro, Google is also developing additional tests that account for its novel long-context capabilities.

Availability

- Gemini 1.0 Ultra: Now accessible to developers and Cloud customers in Google’s AI Studio and Vertex AI

- Gemini 1.5 Pro: Available as a private preview. Google plans to introduce pricing tiers that start at the standard 128,000-token context window and scale up to 1 million tokens as the model improves

The bigger picture

Google Gemini 1.5 is a significant step forward in model architecture, performance, long-context understanding, and versatility.

Applied to suitable use cases, and with continued attention to safety, it opens avenues for:

- Faster discovery and problem-solving with large datasets and codebases

- Multimodal analysis and generation with increased accuracy

- More intelligent, in-depth responses in conversational AI systems

How to access Google Gemini 1.5 Pro

Google Gemini 1.5 Pro is currently in early access for developers and enterprise customers, with wider availability planned. It is offered as a private preview in AI Studio and Vertex AI, where a limited group of testers can also try the experimental 1 million token context window.

Featured image credit: Google.