One of the most serious difficulties with Alzheimer’s disease is that it is seldom detected early, when it can be managed more effectively. Now, a team of researchers at Kaunas University of Technology (KTU) has begun investigating how human-computer interfaces might be adapted so that people with neurological problems can identify a visible object in front of them.

Classifying visual stimuli

If memory impairment affects the perception of facial features, brain activity data from the visual processing areas of the brain may be used to classify visual stimuli. Rytis Maskeliūnas, a researcher at the Department of Multimedia Engineering at KTU, addresses this question in the study.

“While communicating, the face ‘tells’ us the context of the conversation, especially from an emotional point of view. But can we identify visual stimuli based on brain signals?” said Maskeliūnas.

The research aimed to assess a person’s capacity to process contextual information from the face and how they react to it.

Maskeliūnas says that several studies show that brain disorders may be detected by analyzing facial muscle and eye movements. This is because neurodegenerative diseases damage not only memory and cognitive processes but also the cranial nerves linked with eye movement.

The study revealed that the brain of a person with Alzheimer’s disease processes faces in the same way as the brains of people without the illness.

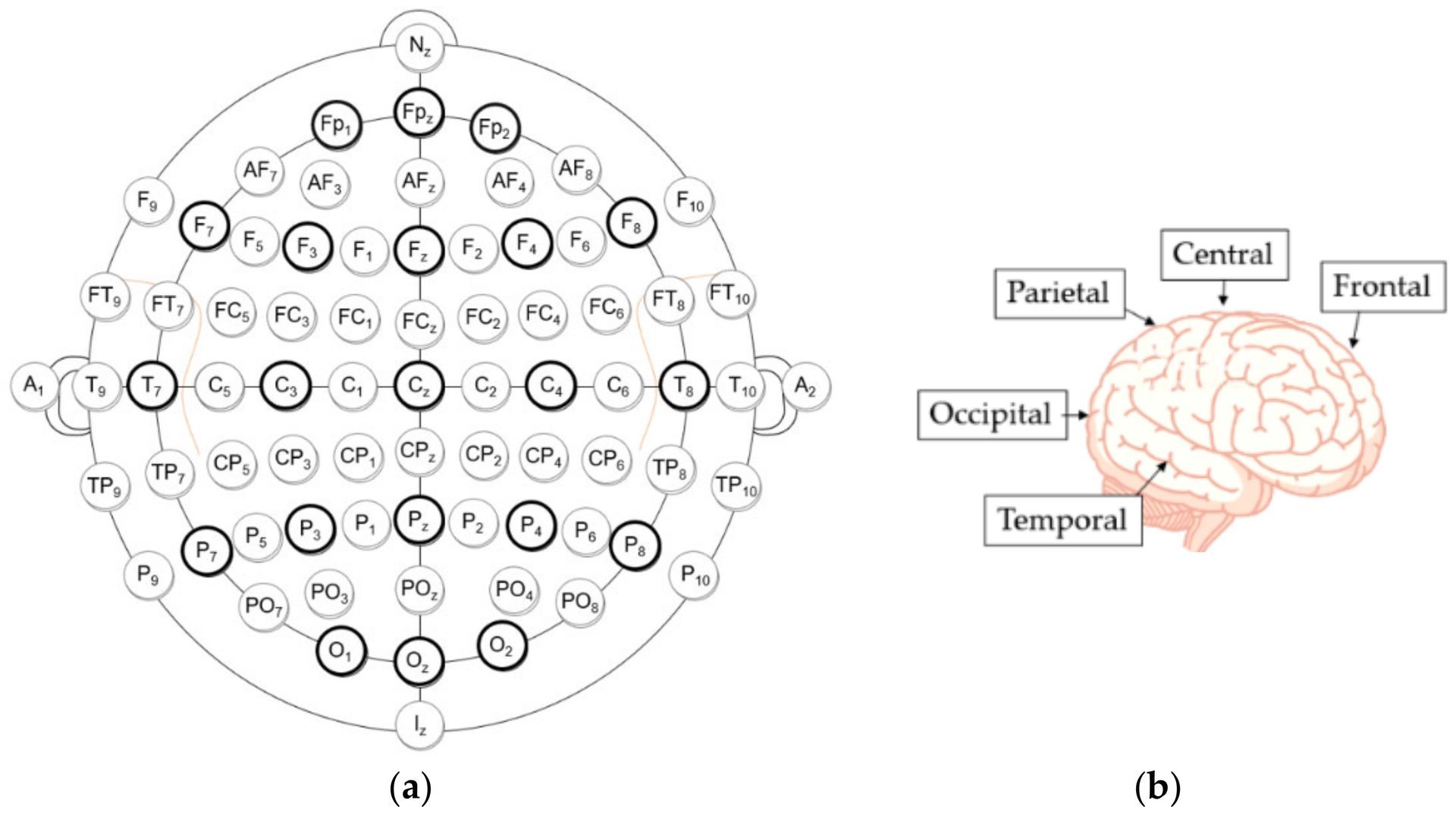

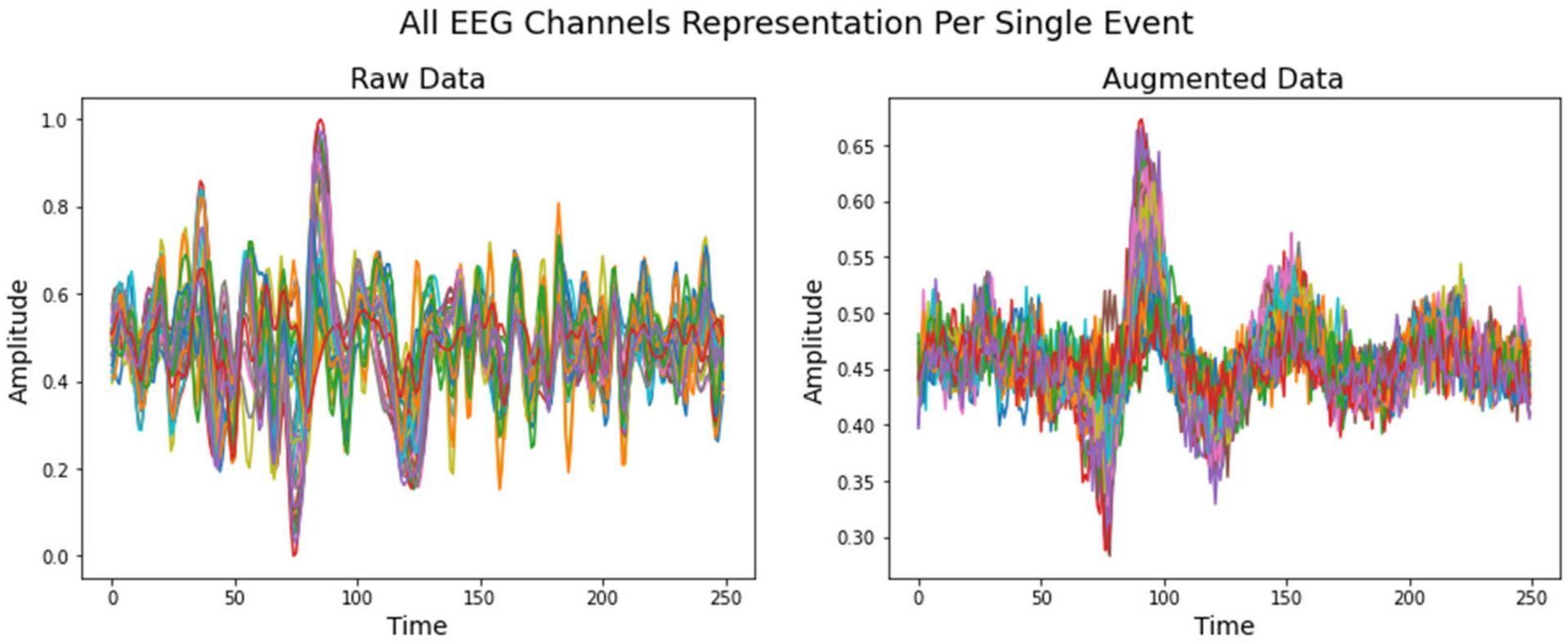

“The study uses data from an electroencephalograph, which measures the electrical impulses in the brain. The brain signals of a person with Alzheimer’s are typically significantly noisier than those of a healthy person,” added Dovilė Komolovaitė, the study’s co-author.

This is associated with the difficulty of concentrating that people experience when suffering from symptoms. Overcoming this problem could make early diagnosis of Alzheimer’s possible.

How was the experiment conducted?

A group of women over the age of 60 was studied in this research.

“Older age is one of the main risk factors for dementia, and since the effects of gender were noticed in brain waves, the study is more accurate when only one gender group is chosen,” said Komolovaitė.

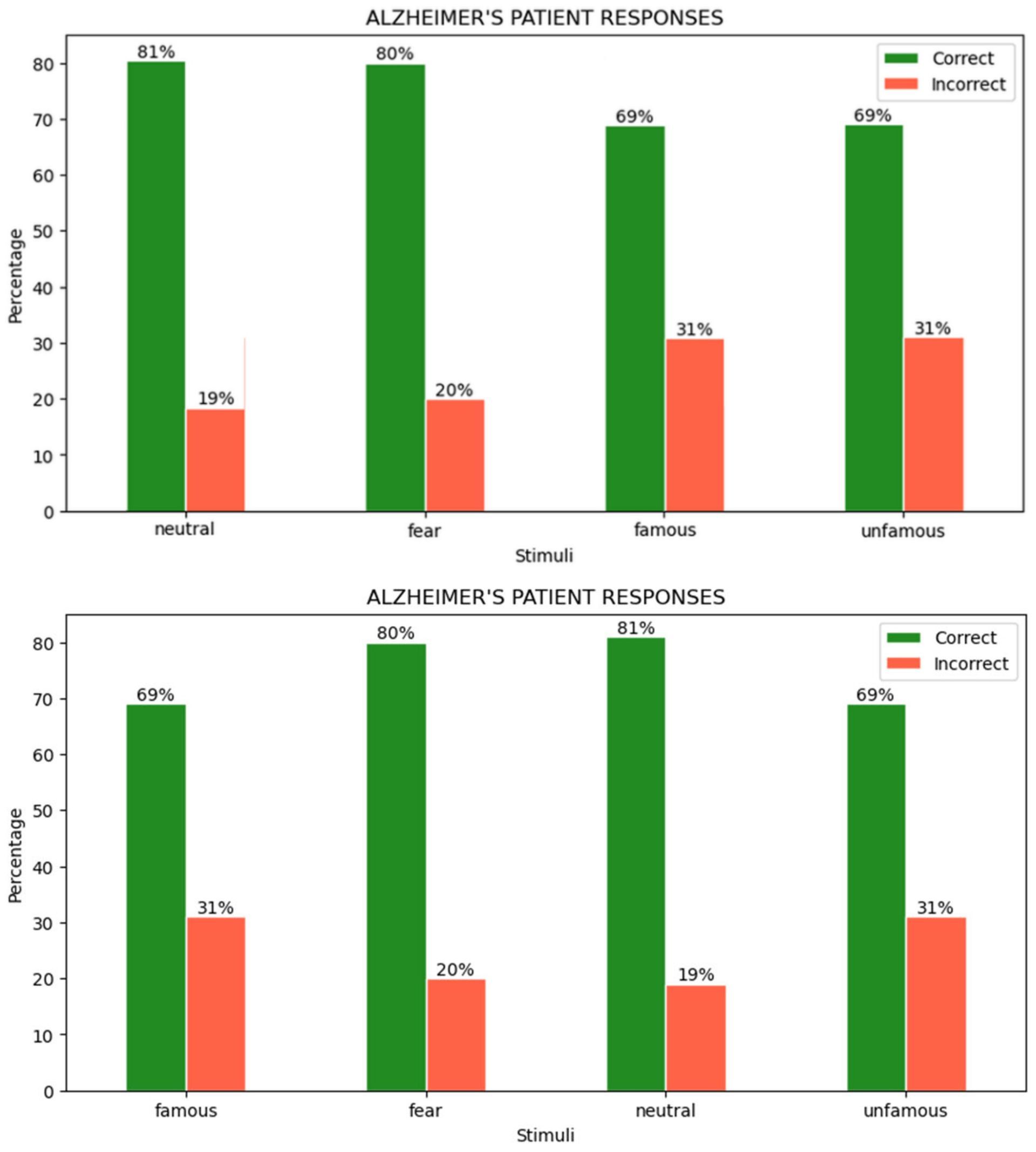

During an hour-long session, participants were shown pictures of human faces. The photos were chosen according to several criteria. Neutral and fearful faces were shown to assess the influence of emotions. Familiar and unfamiliar people were shown to analyze the familiarity factor.

After each face, participants were asked to push a button to indicate whether the face was inverted or upright.
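To make the task structure concrete, here is a minimal Python sketch of how such trials and button responses might be organized and scored. It is an illustration only, not the researchers' code: the `Trial` class, the `build_trials` and `accuracy_by` helpers, and the full crossing of emotion, familiarity, and orientation are all assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Trial:
    """One face stimulus (hypothetical structure, not from the study)."""
    emotion: str       # "neutral" or "fearful"
    familiarity: str   # "familiar" or "unfamiliar"
    orientation: str   # "upright" or "inverted"; what the button press should report


def build_trials():
    """Cross every factor level once. The real study may have combined them differently."""
    return [
        Trial(emotion, familiarity, orientation)
        for emotion, familiarity, orientation in product(
            ("neutral", "fearful"),
            ("familiar", "unfamiliar"),
            ("upright", "inverted"),
        )
    ]


def accuracy_by(trials, responses, factor):
    """Share of correct orientation responses for each level of one factor."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for trial, response in zip(trials, responses):
        level = getattr(trial, factor)
        total[level] += 1
        correct[level] += int(response == trial.orientation)
    return {level: correct[level] / total[level] for level in total}


if __name__ == "__main__":
    trials = build_trials()
    # Made-up responses: a participant who always presses "upright".
    responses = ["upright"] * len(trials)
    print(accuracy_by(trials, responses, "orientation"))
    print(accuracy_by(trials, responses, "emotion"))
```

Comparing accuracy across the levels of each factor is one simple way to see which condition produces the most mistakes, before any brain-signal analysis is involved.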

“Even at this stage, an Alzheimer’s patient makes mistakes, so it is important to determine whether the impaired recognition of the object is due to memory or vision processes,” explained Komolovaitė.

The research was conducted using conventional electroencephalography equipment, but invasive microelectrodes would provide better data for creating a useful tool. They would allow experts to measure neuronal activity more accurately, which would improve the quality of AI models.

“If we want to use this test as a medical tool, a certification process is also needed,” said Komolovaitė.

“Of course, in addition to the technical requirements, there should be a community environment focused on making life easier for people with Alzheimer’s disease. Still, in my personal opinion, in five years we will still see technologies focused on improving physical function, and the focus on people affected by brain diseases in this field will only come later,” added Maskeliūnas.