Data scientists suffer needlessly when they don’t account for the time it takes to properly complete all of the steps of exploratory data analysis

There’s a scourge terrorizing data scientists and data science departments across the dataland. This plague infects even the best data scientists, causing missed deadlines, overrun budgets and undermined analyses. I call this beast “The Munge Monster.”

The “Munge Monster” is the part of exploratory data analysis that (most) data science courses never fully prepare you for. Put simply, the “Munge Monster” is the extra time that data acquisition, cleaning, exploration, and summarization demand but that you didn’t budget for while picturing your beautifully straightforward three-dimensional principal component analysis graph or your nicely trimmed decision tree.

Early on in my data science career, I encountered this ghastly phantom and devised a method for turning its dark chaotic spell into well-organized data magic. I share my simple formula with you here. I call it… ACES.

ACES. Because checklists.

Here at the data stories department of DataScience, Inc., we follow the example of surgeons and the WHO and use a simple checklist to save, if not lives, then time, money, and precision. By accurately estimating, and planning for, the time needed to acquire, clean, explore, and summarize data, we’re better able to inform our models and to rely on their outputs. In the long term, ACES saves time by producing data frames and context that can be reused for feature development without returning to the source. ACES also helps us manage multiple projects, uncover hidden insights in the data, and place more confidence in our results.

When most statistics or computer science students are first exposed to machine learning algorithms, their instinct is to immediately apply a logistic regression or decision tree without properly exploring the underlying data. Data science coursework often encourages this habit by supplying pre-acquired, pre-cleaned, pre-interpreted datasets (such as Iris and Titanic) that obscure the hard exploratory work that went into producing them. In real-world data science, creating anything valuable often requires gathering data from ‘scratch’, and that means starting with exploratory data analysis. The benefit of starting from scratch and applying the ACES framework is that, more often than not, you have meaningful results by the end without ever pouring your data into a scikit-learn estimator object.

The Four Steps of ACES

Exploratory data analysis can be broken into four general steps, each of which deserves its own attention: acquire, clean, explore, and summarize.

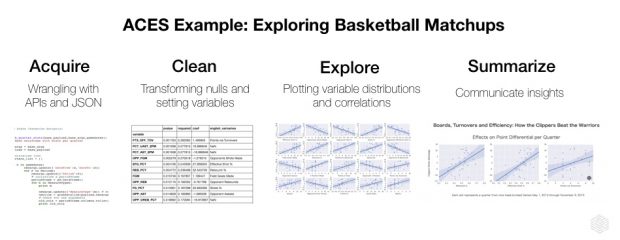
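Because ACES is, at heart, a way of budgeting time, the four steps translate directly into a schedule. Here is a minimal Python sketch using the rough shares given later in this article (about 25% acquire, up to 35% clean, about 20% explore). The 20% for summarize is an assumption, since the article doesn’t state it; it simply takes up the remainder. The `aces_budget` helper is hypothetical.

```python
# A minimal sketch of ACES as a time budget.
# Acquire/clean/explore shares follow the rough figures in this article
# (the "up to 35%" for cleaning is treated as the planned share).
# ASSUMPTION: summarize gets the remaining 20%; the article doesn't say.
ACES_SHARES = {
    "acquire": 0.25,
    "clean": 0.35,
    "explore": 0.20,
    "summarize": 0.20,
}


def aces_budget(total_hours: float) -> dict[str, float]:
    """Split a total exploration budget (in hours) across the four ACES phases."""
    if total_hours < 0:
        raise ValueError("total_hours must be non-negative")
    return {phase: round(total_hours * share, 1) for phase, share in ACES_SHARES.items()}


# Example: two working weeks (80 hours) set aside for exploratory analysis.
for phase, hours in aces_budget(80).items():
    print(f"{phase:<10} {hours:5.1f} h")
```

The point of writing the shares down is less the arithmetic than the commitment: a project plan that reserves 28 of 80 hours for cleaning is far less likely to be ambushed by the Munge Monster than one that silently assumes clean data.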

Acquire

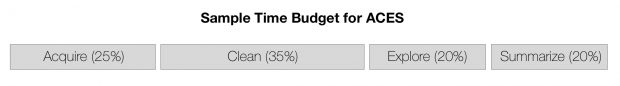

The acquisition phase is where data is discovered, collected, and transferred into a database, dataframe, flat file, or other format that your analytics software of choice can query immediately. Acquisition varies with the source or sources. At its easiest, you might export a CSV from a client database. At the challenging end, you’ll need to scrape millions of poorly formatted text documents with incomplete metadata, housed behind a wall of NDAs. I’ve found that acquisition takes, on average, 25% of my exploration time, owing to unexpected data sources, massive data streams, and the work of combining disparate data types. When we designed a basketball prediction engine, API access and wrangling alone took up over a quarter of our time.
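Combining disparate sources is where much of that 25% tends to go. The following is a minimal sketch, kept to the Python standard library so it runs anywhere, that loads a CSV export and a JSON API payload and left-joins them on a shared key. The inline data, field names, and the `acquire` helper are all hypothetical stand-ins, not any real API’s schema. With pandas, `read_csv` followed by `DataFrame.merge(..., how="left")` would do the same job.

```python
import csv
import io
import json

# Hypothetical stand-ins for two real sources: a CSV export from a client
# database and a JSON payload from a stats API. Names and fields are made up.
CSV_EXPORT = """player_id,name,team
1,Player A,LAC
2,Player B,GSW
3,Player C,GSW
"""

API_PAYLOAD = json.dumps([
    {"player_id": 1, "pts": 24.1, "reb": 6.0},
    {"player_id": 2, "pts": 29.5, "reb": None},
    {"player_id": 4, "pts": 12.0, "reb": 3.1},
])


def acquire(csv_text: str, api_json: str) -> tuple[list[dict], set[int]]:
    """Left-join API stats onto the CSV roster by player_id.

    Returns the combined rows plus the IDs that appear only in the API
    payload, so mismatches between sources are surfaced rather than hidden.
    """
    roster = list(csv.DictReader(io.StringIO(csv_text)))
    stats = {rec["player_id"]: rec for rec in json.loads(api_json)}

    combined = []
    for row in roster:
        pid = int(row["player_id"])  # CSV fields arrive as strings
        rec = stats.get(pid, {})     # no API record -> empty dict
        combined.append({
            "player_id": pid,
            "name": row["name"],
            "team": row["team"],
            "pts": rec.get("pts"),   # None when the API had no data
            "reb": rec.get("reb"),
        })

    orphans = set(stats) - {r["player_id"] for r in combined}
    return combined, orphans


rows, orphans = acquire(CSV_EXPORT, API_PAYLOAD)
print(len(rows), "rows; API-only IDs:", sorted(orphans))  # 3 rows; API-only IDs: [4]
```

Returning the orphaned IDs alongside the joined rows is a deliberate choice: silent mismatches between sources are one of the quieter ways acquisition time balloons later.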

Clean

During the cleaning phase, each variable is examined for integrity, consistency, and meaning, in light of the project’s overall goals. Variables without names are named, and unclear names are refined. Null values are counted and then replaced, ignored, or interpolated. Date fields are converted from strings into numeric or date types. Text is pruned, split, and encoded. Vector formats, matrices, and panels are created. The result is a clean dataframe, or something similar, that can be explored with your visualization and statistical tools of choice. Cleaning takes up to 35% of exploration time on average. It is the most detail-oriented component of developing an insight-producing data matrix, and one of the most important. For our NBA analysis, we cleaned by renaming variables, generating new features, and interpolating nulls.
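The NBA cleaning steps above (renaming, counting and interpolating nulls, converting dates, generating features) can be sketched as a single reusable function. This is a minimal standard-library sketch under stated assumptions: the raw rows, the `RENAMES` mapping, and the `points_per_minute` feature are hypothetical. Interpolation here is linear by row position, which matches the default behaviour of pandas’ `Series.interpolate()` (it treats values as equally spaced).

```python
from datetime import date

# Hypothetical raw game log for one player: terse column names,
# dates stored as strings, and one missing value.
RAW = [
    {"dt": "2024-01-02", "p": 20.0, "mins": 34.0},
    {"dt": "2024-01-04", "p": None, "mins": 30.0},
    {"dt": "2024-01-06", "p": 26.0, "mins": 36.0},
]

# Illustrative mapping from raw names to readable ones.
RENAMES = {"dt": "game_date", "p": "points", "mins": "minutes"}


def clean(rows: list[dict]) -> tuple[list[dict], dict[str, int]]:
    # 1. Rename variables (builds new dicts, so the raw rows are untouched).
    out = [{RENAMES.get(k, k): v for k, v in row.items()} for row in rows]

    # 2. Count nulls per column before deciding how to handle them.
    null_counts = {col: sum(r[col] is None for r in out) for col in out[0]}

    # 3. Parse ISO date strings into datetime.date objects.
    for r in out:
        r["game_date"] = date.fromisoformat(r["game_date"])

    # 4. Linearly interpolate interior gaps in "points" by row position;
    #    leading or trailing gaps lack a neighbour on one side and stay None.
    known = [r["points"] for r in out]
    for i, r in enumerate(out):
        if known[i] is None:
            prev = next((j for j in range(i - 1, -1, -1) if known[j] is not None), None)
            nxt = next((j for j in range(i + 1, len(known)) if known[j] is not None), None)
            if prev is not None and nxt is not None:
                frac = (i - prev) / (nxt - prev)
                r["points"] = known[prev] + frac * (known[nxt] - known[prev])

    # 5. Generate a new feature from the cleaned columns.
    for r in out:
        ok = r["points"] is not None and r["minutes"]
        r["points_per_minute"] = round(r["points"] / r["minutes"], 3) if ok else None

    return out, null_counts


cleaned, nulls = clean(RAW)
print(nulls)                 # {'game_date': 0, 'points': 1, 'minutes': 0}
print(cleaned[1]["points"])  # 23.0
```

Packaging the steps as a function rather than a string of notebook cells is what makes the cleaned output reusable for later feature development without returning to the source.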

Explore

Now you can finally look at descriptive statistics, draw some histograms, and build cross tabulations to get at the basic insights near the surface of the cleaned dataset. Many think of this as the fun part, although, truth be told, my favorite part of the process is often the cleaning, where I get to write custom, reusable scripts and enjoy the satisfaction of an easily interpretable data frame. Still, many data scientists and enthusiasts view exploration as the bread and butter of EDA. During this phase, you examine the mean, standard deviation, and distribution of each variable, along with any correlations and potentially fruitful slices, or cross tabulations. Often, the basic visual outputs of good exploration reveal insights more powerful and valuable than those framed at the outset of a project. Because standard tools make descriptive visuals quick to produce, this “E” phase accounts for only about 20% of total exploration time. With a cleaned dataframe of player statistics in hand for our basketball project, exploration was pure joy as we picked out variables and relationships to plot and examine.
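The exploration checklist above (mean, standard deviation, distribution, correlation, cross tabulation) fits in a few lines. The sketch below sticks to the standard library and uses a hypothetical five-row table; in practice, pandas’ `DataFrame.describe()`, `Series.hist()`, `Series.corr()`, and `pd.crosstab()` cover the same ground with far less code, which is exactly why this phase tends to be the quickest.

```python
import statistics
from collections import Counter

# Hypothetical cleaned table: one row per player-game.
ROWS = [
    {"team": "LAC", "home": True,  "minutes": 34, "points": 22},
    {"team": "LAC", "home": False, "minutes": 30, "points": 18},
    {"team": "GSW", "home": True,  "minutes": 36, "points": 30},
    {"team": "GSW", "home": False, "minutes": 28, "points": 15},
    {"team": "GSW", "home": True,  "minutes": 32, "points": 25},
]


def describe(values: list[float]) -> dict[str, float]:
    """Count, mean, and sample standard deviation of one variable."""
    return {"n": len(values),
            "mean": statistics.mean(values),
            "stdev": statistics.stdev(values)}


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length variables."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def text_histogram(values: list[int], bin_width: int) -> list[str]:
    """Crude fixed-width histogram: one '#' per value in each bin."""
    bins = Counter((v // bin_width) * bin_width for v in values)
    return [f"{lo:>4}-{lo + bin_width - 1:<4}{'#' * bins[lo]}" for lo in sorted(bins)]


def crosstab(rows: list[dict], row_key: str, col_key: str) -> Counter:
    """Counts of each (row_key, col_key) combination."""
    return Counter((r[row_key], r[col_key]) for r in rows)


points = [r["points"] for r in ROWS]
minutes = [r["minutes"] for r in ROWS]
print(describe(points))
print("\n".join(text_histogram(points, bin_width=10)))
print(crosstab(ROWS, "team", "home"))
print(f"corr(minutes, points) = {pearson(minutes, points):.2f}")
```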

Summarize

The final stage uses no algorithms or code libraries, yet it employs the most critical data science skill: communication. In the summary stage, the data scientist collects the most valuable insights from the exploration phase and communicates them to stakeholders. What seems like a self-explanatory, well-labeled histogram often turns out to need at least a few hundred words, and perhaps a diagram or two, to convey its value to the internal or external stakeholder for whom the analysis is intended. A summary will often include insights that are suitable final outputs, but it may also point to future stages where the lucky dataset graduates to a machine learning or predictive modelling project. If there are future plans for the data, the “summarize” portion of ACES identifies the variables most promising as features or targets, along with recommended algorithms to try. For example, in the NBA project we decided to predict an upcoming game between the Clippers and the Warriors, so we took a subset of our exploration data, added a few labels, and delivered a digestible insight.
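The summary itself is written for people, not machines, but the handoff list of promising features and targets can be drafted straight from numbers already computed during exploration. The following is a minimal sketch under loud assumptions: the columns are hypothetical, “won” is assumed to be the target, and the 0.3 threshold for “promising” is an arbitrary choice. Correlation screening only catches linear, one-variable-at-a-time relationships, and six games is far too few for real inference; this only shows the shape of the handoff.

```python
import statistics

# Hypothetical per-game columns carried over from exploration. "won" (1/0)
# is the candidate target for a follow-up predictive model.
COLUMNS = {
    "won":          [1, 0, 1, 0, 1, 1],
    "rebound_diff": [5, -3, 8, -6, 2, 4],
    "turnovers":    [12, 15, 10, 16, 13, 11],
    "attendance_k": [18, 19, 18, 17, 19, 18],
}

# ASSUMPTION: arbitrary cut-off for calling a feature "promising".
THRESHOLD = 0.3


def pearson(xs, ys):
    """Pearson correlation (with a 0/1 target this is the point-biserial r)."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def rank_candidates(columns, target):
    """Score each non-target column by correlation; strongest |r| first."""
    y = columns[target]
    scored = [(name, pearson(xs, y)) for name, xs in columns.items() if name != target]
    return sorted(scored, key=lambda item: abs(item[1]), reverse=True)


def draft_summary(scored, target, threshold=THRESHOLD):
    """Turn the ranking into plain-language bullet points for stakeholders."""
    lines = [f"Candidate target: {target}"]
    for name, r in scored:
        verdict = "promising feature" if abs(r) >= threshold else "weak linear signal"
        lines.append(f"- {name}: r = {r:+.2f} ({verdict})")
    return lines


summary = draft_summary(rank_candidates(COLUMNS, "won"), "won")
print("\n".join(summary))
```

The bullet list is a starting point for the few hundred words a stakeholder actually needs, not a replacement for them.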

Keep Mungy the Munge Monster Away

Just last week, yet another study was released showing that data scientists spend the majority of their time begrudgingly battling the Munge Monster. Using ACES is, in many ways, an “if you can’t beat ‘em, join ‘em” approach. When you approach data analysis systematically, you can turn the Munge Monster into a friendly data puppy, which is much more doable than, say, trying to turn a Sith lord to the light side of the Force. Since there’s munging to be done, better to recognize it, account for it, and depend on it. I recommend using the ACES framework for data exploration to transform munging from a time-eating monstrosity into one of the most productive, fun, and rewarding parts of a data science team’s workflow.
