NASA is often at the leading edge of tech trends. They are constantly coming out with new tools, releasing incredible footage and data, and even supporting open source communities. Given how much information they can, and often must, receive from their space equipment, it is no surprise that they need better ways to harness big data. Furthermore, their need to store and quickly retrieve data means that the technology has to progress faster and faster to keep up with them.

Deep space yields vast amounts of data

The need for better storage and faster movement is a problem that fundamentally plagues big data. One driverless car could create 1 GB of data per second. A space probe could create far more. The amount of data available on the solar system completely overshadows that found on Earth, even though the solar system has barely been explored. There are currently two bottlenecks for data in space: transferring it and storing it. Many missions use radio frequency to transfer information, meaning data from deep space usually moves at rates measured in kilobits or megabits per second. This could change in the future and become much faster as work on optical communications continues to progress. In this method, data is modulated onto and transmitted by laser beams. Such an upgrade could increase data rates by as much as 1,000 times. NASA is planning missions that would stream over 24 terabytes of data daily (roughly 2.4 times the entire Library of Congress, every day) and that would greatly benefit from new developments like these. Once the data is transmitted, the question of storage must also be addressed.
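To get a feel for what those numbers mean, here is a rough back-of-the-envelope sketch in Python. The 24 terabytes per day and the 1,000-fold speedup come from the figures above; the baseline link rate of 1 megabyte per second is purely an assumed, illustrative value and not a figure for any real mission.

```python
# Back-of-the-envelope link arithmetic. The baseline rate is an assumption
# chosen for illustration, not a measured figure for any real mission.
SECONDS_PER_DAY = 24 * 60 * 60
TERABYTE = 10**12  # decimal terabyte, in bytes


def required_rate(bytes_per_day: float) -> float:
    """Sustained rate (bytes per second) needed to move a daily volume."""
    return bytes_per_day / SECONDS_PER_DAY


def transfer_seconds(total_bytes: float, rate_bytes_per_s: float) -> float:
    """Time in seconds to move total_bytes at a constant rate."""
    return total_bytes / rate_bytes_per_s


daily_volume = 24 * TERABYTE          # 24 TB per day, as quoted in the article
baseline_rate = 1e6                   # hypothetical radio link: 1 MB/s
optical_rate = baseline_rate * 1000   # the "1,000 times" improvement

print(f"Sustained rate needed: {required_rate(daily_volume) / 1e6:.1f} MB/s")
print(f"Assumed radio link: {transfer_seconds(daily_volume, baseline_rate) / SECONDS_PER_DAY:.1f} days")
print(f"1,000x faster link: {transfer_seconds(daily_volume, optical_rate) / 3600:.1f} hours")
```

Under these assumptions, a single day's worth of data would take most of a year to send over the slower link, but only a few hours over the faster one.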

NASA missions involve hundreds of terabytes being gathered every hour. One terabyte, as they colorfully describe it, is the equivalent of the information printed on 50,000 trees' worth of paper. Moreover, that data is not only large, it is highly complex. According to NASA, their data sets include such large amounts of significant metadata that it “challenges current and future data management practice.” Their plan, however, is not to start from the ground up. Automating the programs that extract data and making better use of the cloud could allow them to store data more effectively. One principal investigator for the Jet Propulsion Laboratory’s big data initiative, Chris Mattmann, plans to “modify open-source computer codes to create faster, cheaper solutions.” As can always be expected from NASA, they intend to return those modified codes to the open source community for others to use. Another team, working with the Curiosity rover, found their own solution. By downloading raw images and telemetry directly from Curiosity, they could move them into Amazon S3 storage buckets. This allowed them to upload, process, store and deliver every image from the cloud.
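The article does not describe how the Curiosity team's pipeline was actually built, so the following is only a minimal sketch of the general idea of pushing a folder of downloaded files into an S3 bucket. The function name `upload_directory`, the bucket name, the folder, and the key prefix are all hypothetical. The only real API assumed is the `upload_file(Filename, Bucket, Key)` method of a boto3 S3 client.

```python
from pathlib import Path


def upload_directory(client, bucket: str, directory: str, prefix: str = "") -> list[str]:
    """Upload every file under `directory` to `bucket`, returning the object keys.

    `client` is expected to behave like a boto3 S3 client, i.e. to provide
    upload_file(Filename, Bucket, Key). This helper is hypothetical and is
    not taken from any NASA code.
    """
    uploaded = []
    for path in sorted(Path(directory).rglob("*")):
        if not path.is_file():
            continue  # skip sub-directories; only files become objects
        # Build the object key from the path relative to the root folder.
        key = prefix + path.relative_to(directory).as_posix()
        client.upload_file(str(path), bucket, key)
        uploaded.append(key)
    return uploaded


# Example usage (requires boto3 and AWS credentials; all names are made up):
#   import boto3
#   keys = upload_directory(boto3.client("s3"), "example-rover-images",
#                           "downloads/raw", prefix="raw/")
```

Passing the client in as an argument keeps the helper easy to try out with a stand-in object before pointing it at a real bucket.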

What does space data look like?

On Earth, NASA’s data helps deal with disasters in real time, predict ecosystem behavior, and even offer educational tools to students and teachers. But what about in space? Could all that data really be worth it? Scientists need big data not only for Earth, but also for analyzing real-time solar plasma ejections, monitoring ice caps on Mars, and searching for distant galaxies. “We are the keepers of the data,” says Eric De Jong of JPL, “and the users are the astronomers and scientists who need images, mosaics, maps and movies to find patterns and verify theories.”

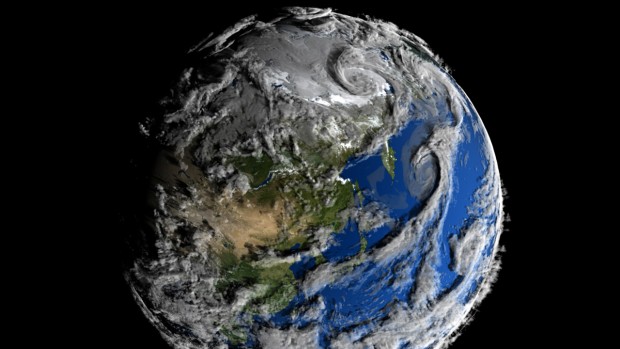

Another point of focus for NASA is sharing data with the public. Their data visualizations have made waves both internally and around the globe. One data-based visualization, titled Perpetual Ocean, even went viral, though viewers would never know how much work went into it. In order to visualize Earth’s wind, data from over 30 years of satellite observations and modeling had to be unified. The project also called into question the way data was visualized. Rather than just showing temperature data, they opted to show the flow and the unseen forces behind those changes. These visualizations, however, are very different from what people are used to. This data can be used to generate models of the real world, and even some of the simplest visualizations draw on incredible amounts of data. This image was based on 5 million gigabytes of data, though not all of it made it into the final visualization.

The Scientific Visualization Studio (SVS) is one division in NASA that focuses solely on visualizing data, and they have the tools and team to do amazing work. Director Dr. Horace Mitchell told Mashable that they use a lot of the same software as Pixar, which also reveals the mindset behind the program. NASA has gone so far as to release their own data visualization app in Apple’s App Store. Again, they are telling data stories not only for internal scientists, but also to help the general public better understand and see what is going on around them.

#NASADatanauts

NASA is far from finished in the big data field. Predictive analytics and further solutions are still out there, and NASA is officially taking the plunge to address the issues head on. They are pioneering a user community of “Datanauts” who will share experiences, identify high-value datasets, and find applications that could benefit the community. It is also worth noting that NASA recently stated an intention to focus on women in the data science field and, in line with that goal, the first class of Datanauts will be women from a variety of backgrounds.

The future of big data is very important for NASA. The fact that they must process so much data that it “challenges current and future data management practice” is not to be taken lightly. Data storage solutions may move at lightning speed, but so does data collection. Once all of the data is secured, there will no doubt be even more stunning and informative visualizations, challenging ideas of what makes up a proper visualization. There will surely be plenty more viral space data stories in the future.
