Big data is a big deal. Unfortunately, due to the sheer volume and velocity at which it is created, many organizations still struggle to make sense of their data. Furthermore, because data is dispersed across so many disparate platforms (e.g. marketing automation, CRMs, Google Analytics, etc.), it’s become increasingly difficult to merge and analyze this data in meaningful ways.

In recent years, however, technology has made it easier to bridge the gaps between siloed data sources and provide a unified view of data across platforms. Historical data can then be analyzed to identify patterns in past performance and predict future outcomes. Paired with the right technology, big data has ushered in a new era of data-driven sales and marketing: the age of the Predictive Enterprise.

While predictive technologies may appear simple on the surface—input data, output predictions—anyone who’s attempted to manually derive insights from these enormous, disparate data sets knows that there’s a lot happening behind the scenes. At Fliptop, our data science team uses machine learning to create a range of predictive models. The Fliptop predictive platform, or as we call it, Darwin, automatically builds an array of machine-learning models and applies dozens of statistical measures to determine which model is most predictive at each stage of the marketing and sales lifecycle.

In this post, we’ll offer a glimpse into how Darwin works, focusing specifically on the predictive lead scoring component of our platform. From there, we’ll offer a few predictions of our own about where we see marketing technology going in the coming years.

How Fliptop Uses Machine Learning

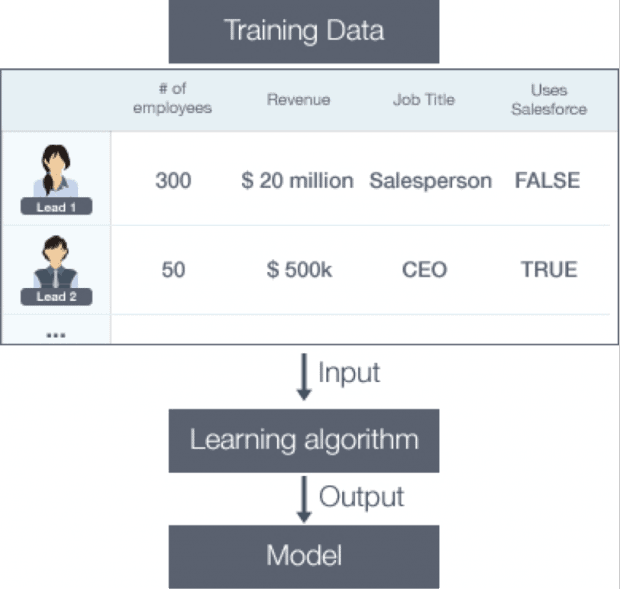

With predictive lead scoring, classification is critical because we want to understand whether a lead will or will not convert. At Fliptop, we start with a combination of CRM (e.g. Salesforce) and marketing automation system (e.g. Marketo, Oracle Eloqua) data to source examples (historical leads) along with basic features, or characteristics, of each lead. From there, we append additional data signals from a vast pool of partners, adding thousands of features to each example and creating robust lead profiles.
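To make the enrichment step concrete, here is a minimal Python sketch that appends partner attributes to lead records exported from a CRM or marketing automation system. It assumes, purely for illustration, that records are plain dicts, that partner data is keyed by the lead's email domain, and that field names such as `employee_count` and `converted` are hypothetical; the post does not describe Fliptop's actual join keys or schema.

```python
def email_domain(email: str) -> str:
    """Return the lower-cased domain part of an email address."""
    return email.rsplit("@", 1)[-1].lower()


def enrich_leads(leads, partner_signals):
    """Append partner features to each lead, joining on email domain (an assumed key).

    leads: list of dicts exported from the CRM / marketing automation system.
    partner_signals: dict mapping a company domain to a dict of extra features.
    Leads with no partner match keep only their original fields.
    """
    enriched = []
    for lead in leads:
        extra = partner_signals.get(email_domain(lead["email"]), {})
        # Original CRM/MAS fields take precedence if a partner reuses a field name.
        enriched.append({**extra, **lead})
    return enriched


# Hypothetical historical leads; "converted" is the outcome a model later learns to predict.
leads = [
    {"email": "ana@Acme.com", "lead_source": "webinar", "converted": 1},
    {"email": "bo@example.org", "lead_source": "paid_search", "converted": 0},
]
partner_signals = {"acme.com": {"employee_count": 1200, "industry": "software"}}

enriched = enrich_leads(leads, partner_signals)
print(enriched[0])
```

Real-world matching of leads to companies can be much fuzzier than an exact domain lookup; the dict merge is only meant to show the shape of the data after enrichment.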

After appending external data signals and pre-processing the data set to ensure high quality, we have a list of examples, each with a set of associated features. This data, called “training data,” is used as input to a learning algorithm, which outputs a model, as shown in the figure below.
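The “training data in, model out” pipeline can be sketched in a few lines of Python. Here the learning algorithm is deliberately tiny: a decision stump (a decision tree with a single split) chosen by Gini impurity, standing in for the far larger models described in this post. The features and data are hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels (0.0 means the group is pure)."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def train_stump(examples, features):
    """A toy learning algorithm: training data in, model out.

    examples: list of (feature_dict, label) pairs, where label 1 means "converted".
    Tries every (feature, threshold) split and keeps the one with the lowest
    weighted Gini impurity. Returns a model: a function from a feature_dict to
    an estimated conversion probability.
    """
    n = len(examples)
    base_rate = sum(y for _, y in examples) / n
    best = None
    for f in features:
        for threshold in sorted({x[f] for x, _ in examples}):
            left = [y for x, y in examples if x[f] <= threshold]
            right = [y for x, y in examples if x[f] > threshold]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[0]:
                best = (score, f, threshold, left, right)
    _, f, threshold, left, right = best
    # Each leaf predicts the share of converted training leads that landed in it.
    p_left = sum(left) / len(left) if left else base_rate
    p_right = sum(right) / len(right) if right else base_rate

    def model(x):
        return p_left if x[f] <= threshold else p_right

    model.split = (f, threshold)  # keep the learned rule around for inspection
    return model


# Hypothetical training data: (features, converted?) for four historical leads.
training_data = [
    ({"employee_count": 50, "email_opens": 1}, 0),
    ({"employee_count": 80, "email_opens": 2}, 0),
    ({"employee_count": 900, "email_opens": 3}, 1),
    ({"employee_count": 1500, "email_opens": 9}, 1),
]
model = train_stump(training_data, ["employee_count", "email_opens"])
print(model.split)                                        # ('employee_count', 80)
print(model({"employee_count": 1200, "email_opens": 4}))  # 1.0
```

Running the same learner on the same data always yields the same split, which is exactly the determinism the random forest discussion below has to work around.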

Training a Machine Learning Model

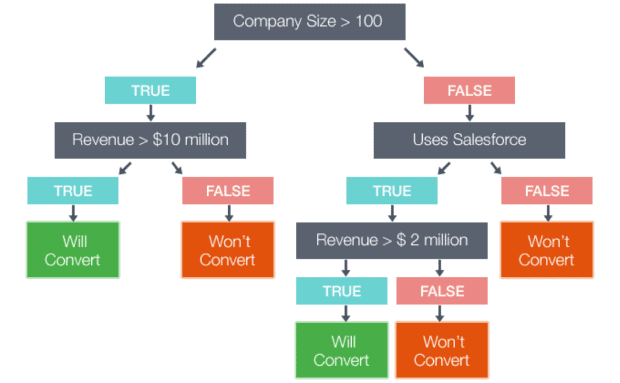

At Fliptop, our data science team uses random forests and gradient boosting to build models. A random forest consists of multiple decision trees (usually, the more trees, the better), and a simplified example of what a predictive lead scoring decision tree might look like follows:

When we want to predict whether or not a lead will convert, we get a prediction from each tree. Say we have 100 trees: 20 predict the lead will not convert, and 80 predict it will. We could then say there is an 80% chance the lead will convert. (In reality, this is often more complicated, since each tree can return a probability rather than a yes/no decision. In that case, we simply average the predicted probabilities.)
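The two ways of combining trees described above, counting yes/no votes and averaging per-tree probabilities, can be sketched as follows. The per-tree probabilities are made-up numbers for illustration. For reference, scikit-learn's `RandomForestClassifier.predict_proba` documents the second approach: it returns the mean predicted class probabilities of the trees in the forest.

```python
from statistics import mean


def vote_fraction(tree_votes):
    """Hard voting: share of trees whose yes/no prediction is "convert" (1)."""
    return sum(tree_votes) / len(tree_votes)


def average_probability(tree_probs):
    """Soft voting: mean of the per-tree conversion probabilities."""
    return mean(tree_probs)


# The example from the text: 100 trees, 80 vote "convert" and 20 vote "no".
votes = [1] * 80 + [0] * 20
print(vote_fraction(votes))  # 0.8

# Hypothetical per-tree probabilities for one lead from a 4-tree forest.
probs = [0.9, 0.7, 0.6, 0.2]
print(average_probability(probs))
```

Soft voting keeps information that hard voting throws away: a tree that is 55% sure and one that is 95% sure count the same as votes, but not as probabilities.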

Given the same training data and the same set of features, a deterministic tree-learning algorithm will build exactly the same decision tree every time. Building that same tree multiple times gives us no more information than the original tree, since every copy makes exactly the same prediction. To solve this problem, random forests inject randomness into how each tree is built, so that the trees differ from one another and each one adds something to the combined prediction. Comparing many varied trees also helps us understand which features are most influential in driving conversion.

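One type of randomness involves the set of examples used to build each tree. Instead of training every tree on the full training set, we train each one on a bootstrap sample: a random sample of the same size, drawn with replacement, so some examples appear more than once and others are left out. Training models on bootstrap samples and combining their predictions is called “bootstrap aggregating” (or “bagging”), and it is done to stabilize the predictions and reduce over-fitting. It is used with many types of machine learning algorithms, not just random forests.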
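Drawing a bootstrap sample takes one call in Python, because `random.Random.choices` samples with replacement. The sketch below uses ten integer IDs as hypothetical stand-ins for historical leads. On average, a bootstrap sample contains about 63% (1 − 1/e) of the distinct original examples; the examples never drawn are called “out-of-bag.”

```python
import random


def bootstrap_sample(examples, rng):
    """Draw len(examples) items uniformly at random, *with* replacement."""
    return rng.choices(examples, k=len(examples))


rng = random.Random(42)   # fixed seed so the sketch is reproducible
leads = list(range(10))   # IDs standing in for 10 historical leads
sample = bootstrap_sample(leads, rng)
out_of_bag = sorted(set(leads) - set(sample))

print(sorted(sample))  # same size as the original; IDs may repeat
print(out_of_bag)      # IDs this tree never sees during training
```

Giving each tree its own `rng` seed is what makes the trees differ; the out-of-bag examples can also serve as a free validation set for that tree.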

The other type of randomness involves the set of features used to build each tree. Instead of letting a tree choose from all the features, we again use only a random subset (in the standard random forest algorithm, a fresh subset is drawn at every split). That means different trees will consider different features when asking questions. For example, as I mentioned earlier, the tree above does not include the “Job title” feature. But in a forest, other trees would include this feature, so it would still influence the overall prediction of the random forest.
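The feature-sampling step can be sketched as a helper that picks which features a single node is allowed to split on. The default of ⌊√p⌋ features out of p is a common choice for classification forests and corresponds to scikit-learn's `max_features="sqrt"` setting; the feature names below are hypothetical.

```python
import math
import random


def candidate_features(all_features, rng, k=None):
    """Return the random subset of features one node may split on.

    k defaults to floor(sqrt(p)) for p features, a common choice for classification.
    """
    if k is None:
        k = max(1, math.isqrt(len(all_features)))
    return rng.sample(all_features, k)  # sampled without replacement


# Hypothetical lead features.
features = ["job_title", "industry", "employee_count", "annual_revenue",
            "email_opens", "web_visits", "lead_source", "country", "tech_stack"]

rng = random.Random(7)  # fixed seed for reproducibility
for node in range(3):
    # A fresh subset at every split, so "job_title" is available at some nodes and not others.
    print(node, candidate_features(features, rng))
```

Restricting each split this way stops one dominant feature from appearing at the top of every tree, which keeps the trees less correlated with one another.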

Random forests have low bias (just like individual decision trees), and adding more trees reduces variance, and thus over-fitting. This is one reason why they are so popular and powerful. Another is that they are relatively robust to how the input features are scaled and distributed, so they often need less feature pre-processing than other models. They are also more efficient to build than other advanced models, such as nonlinear SVMs, and because each tree is built independently, building multiple trees is easily parallelized.
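Putting bootstrap sampling, random feature subsets, and probability averaging together gives a toy random forest. This is a hypothetical sketch, not Fliptop's code: each “tree” is a single-split decision stump, and the data is made up. Because every tree depends only on the training data and its own random seed, the trees can be built independently. The sketch uses `concurrent.futures.ThreadPoolExecutor` to show that independence; for CPU-bound pure-Python code, a real speed-up would need a `ProcessPoolExecutor` (with module-level functions instead of lambdas) or a library with native parallelism.

```python
import math
import random
from concurrent.futures import ThreadPoolExecutor


def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def train_stump(examples, features):
    """One-split tree: keep the (feature, threshold) with the lowest weighted Gini."""
    base_rate = sum(y for _, y in examples) / len(examples)
    best = None
    for f in features:
        for t in sorted({x[f] for x, _ in examples}):
            left = [y for x, y in examples if x[f] <= t]
            right = [y for x, y in examples if x[f] > t]
            score = len(left) * gini(left) + len(right) * gini(right)
            if best is None or score < best[0]:
                best = (score, f, t, left, right)
    _, f, t, left, right = best
    p_left = sum(left) / len(left) if left else base_rate
    p_right = sum(right) / len(right) if right else base_rate
    return lambda x: p_left if x[f] <= t else p_right


def build_tree(examples, features, seed):
    """One tree = its own bootstrap sample + its own random feature subset.

    A stump has a single split, so drawing features per split or per tree is the same here.
    """
    rng = random.Random(seed)
    sample = rng.choices(examples, k=len(examples))  # bootstrap sample
    k = max(1, math.isqrt(len(features)))            # about sqrt(p) features
    return train_stump(sample, rng.sample(features, k))


def build_forest(examples, features, n_trees=25, workers=4):
    """Trees depend only on the data and their seed, so they can be built concurrently."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda seed: build_tree(examples, features, seed), range(n_trees)))


def predict(forest, x):
    """Average the per-tree conversion probabilities."""
    return sum(tree(x) for tree in forest) / len(forest)


# Hypothetical data: (employee_count, email_opens, converted?) for six leads.
rows = [(50, 1, 0), (80, 2, 0), (120, 1, 0), (900, 3, 1), (1500, 9, 1), (2000, 6, 1)]
data = [({"employee_count": c, "email_opens": o}, y) for c, o, y in rows]
forest = build_forest(data, ["employee_count", "email_opens"])
print(predict(forest, {"employee_count": 1800, "email_opens": 7}))
print(predict(forest, {"employee_count": 60, "email_opens": 1}))
```

Each seed fully determines its tree, so the forest is reproducible even though the trees are built concurrently.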

When making predictions, we average the predictions of all the different trees. Because we are averaging low-bias models, the averaged model also has low bias, but its variance is lower than that of the individual trees, as long as the trees are not perfectly correlated. In this way, ensemble methods can reduce variance without increasing bias. This is why ensemble methods are so popular, and why we decided to use them at Fliptop.
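A standard textbook result makes this precise. Assume, as a simplification, that the $B$ trees' predictions at a point $x$ are identically distributed, each with variance $\sigma^2$ and pairwise correlation $\rho$. The averaged prediction then has the same expectation as a single tree (and therefore the same bias), while its variance is:

```latex
\bar{f}(x) = \frac{1}{B}\sum_{b=1}^{B}\hat{f}_b(x), \qquad
\mathbb{E}\big[\bar{f}(x)\big] = \mathbb{E}\big[\hat{f}_b(x)\big], \qquad
\operatorname{Var}\big[\bar{f}(x)\big]
  = \frac{1}{B^2}\Big(B\sigma^2 + B(B-1)\,\rho\,\sigma^2\Big)
  = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2 .
```

As $B$ grows, the second term vanishes, which is why more trees help. The first term does not shrink with $B$, which is why random forests also lower $\rho$ by giving each tree its own bootstrap sample and random feature subsets.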

Once we have trained a machine-learning model, we can use the model to make predictions about future leads—whether they will or will not convert. The final output is four predictive models—one for leads, contacts, accounts, and opportunities—to help our customers predict conversions at every stage of the lead lifecycle.

Fliptop’s Predictions For The Future of MarTech

In this post, we explored how machine learning can help predict the likelihood that a prospect will convert, but the applications go far beyond lead scoring. While every organization’s needs are unique, consider the following trends that machine learning and predictive marketing will enable in the very near future:

- Hyper-Targeting: Predictive martech will help marketers understand exactly who their most qualified targets are, where and when to reach them, and which offer they’ll be most receptive to, systematically advancing prospects through the buyer’s journey.

- Contextualized Content: Predictive platforms will facilitate the workflow, creation, and scaling of high-quality content, automatically identifying themes that resonate with an audience, and delivering the content most likely to convert them.

- Predictive Budgeting: When the data from various marketing technologies converges, marketers will know precisely where to increase investments and where to cut. Most importantly, technology will help us accurately forecast how these adjustments to investments will translate to pipeline and revenue.

Today, thousands of companies are already reaping the benefits of predictive marketing. From pinpointing leads most likely to convert, to account-based targeting, to pipeline forecasting and campaign optimization, predictive analytics has empowered functions across the organization. We’ve entered the age of the predictive enterprise, and the companies able to harness their data to make smarter, data-driven decisions will be the ones that win.

Doug Camplejohn is the CEO and Founder of Fliptop, a leader in Predictive Analytics applications for B2B companies. Before Fliptop, Doug founded two companies, Mi5 Networks and Myplay, and also held senior roles at Apple, Epiphany and Vontu.

Image credit: Fliptop