Meta Llama 3.1 405b kicks off a fresh chapter for open-source language models. At 405 billion parameters, it is the biggest model Meta has released openly, and its debut shakes up the large language model scene by offering top-notch performance anyone can access.

Researchers and coders have been itching to get their hands on Meta Llama 3.1 405b. This model leaps ahead in open-source AI, going toe-to-toe with proprietary models. With its huge size and refined training recipe, Meta Llama 3.1 405b is set to shake up natural language processing.

Meta Llama 3.1 405b shows off a bunch of skills, like stronger general knowledge, better steerability, and standout performance in math and multilingual tasks.

These upgrades make Meta Llama 3.1 405b a Swiss Army knife for all sorts of jobs, from school studies to business.

Starting today, open source is leading the way. Introducing Llama 3.1: Our most capable models yet.

Today we’re releasing a collection of new Llama 3.1 models including our long awaited 405B. These models deliver improved reasoning capabilities, a larger 128K token context…

— AI at Meta (@AIatMeta) July 23, 2024

What makes Meta Llama 3.1 405b so special?

The arrival of Meta Llama 3.1 405b is a big deal for open-source AI. This model can hang with the best private systems, opening doors to cutting-edge language tech for everyone.

Because Meta Llama 3.1 405b is open-source, coders and researchers can tinker with a top-notch language model like never before. This open access should speed up new ideas across the board, from natural language understanding to machine translation.

The Meta Llama 3.1 405b release also comes with upgraded versions of the smaller 8B and 70B models.

These models can speak multiple languages and handle longer texts, with a context window of up to 128K tokens, which makes them a good fit for all kinds of tasks.

Meta Llama 3.1 405b features

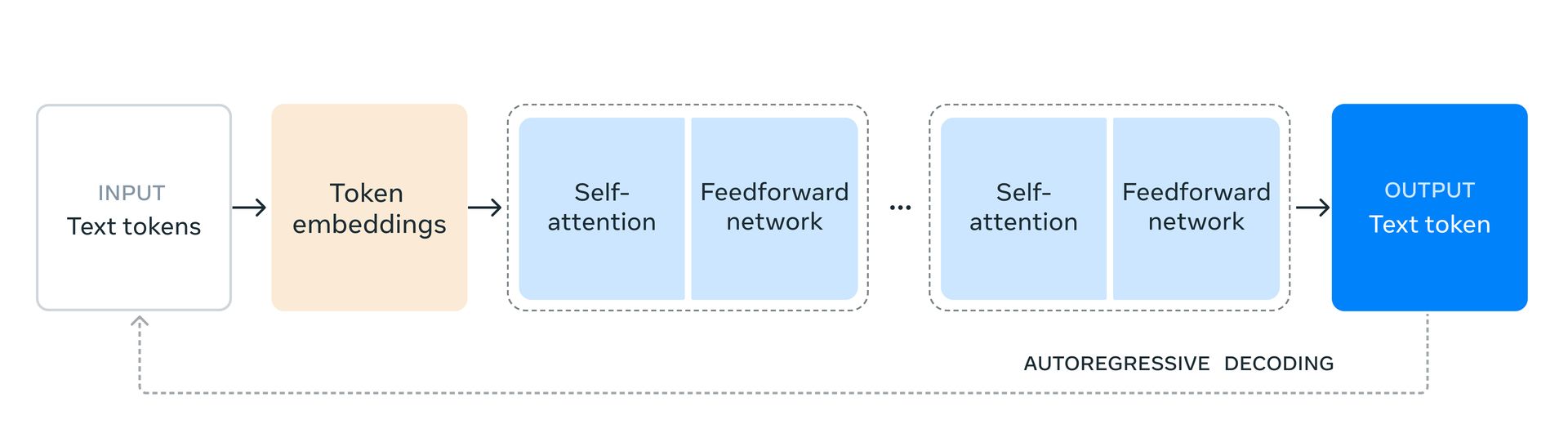

The model uses a decoder-only transformer setup, tweaked to work smoothly and pack a punch at a huge scale.

Training Meta Llama 3.1 405b took a mountain of computer power, using over 16,000 H100 GPUs. This massive number-crunching lets the model chew through tons of data, giving it its top-notch skills.

Researchers at Meta came up with some new ways to boost the model’s game. They used an iterative post-training process and better ways to pick and clean data before and after training.

To make Meta Llama 3.1 405b easier to use in the real world, the team quantized it from 16-bit (BF16) to 8-bit (FP8) numerics. Halving the bytes per weight cuts the memory the model needs, which lets it run on a single server and makes it more practical for actual use.
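To see why dropping to 8 bits matters, here is a rough back-of-the-envelope sketch. It counts the weights only (no KV cache or activations), and the server size of eight 80 GB GPUs is an assumption for illustration, not a figure from Meta.

```python
# Rough weight-memory estimate for a 405-billion-parameter model.
PARAMS = 405_000_000_000  # parameter count implied by the "405b" in the name


def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


mem_16bit = weight_memory_gb(PARAMS, 16)  # 16-bit weights
mem_8bit = weight_memory_gb(PARAMS, 8)    # 8-bit weights

# Assumption for illustration: one server with 8 GPUs of 80 GB each.
NODE_MEMORY_GB = 8 * 80

print(f"16-bit weights: {mem_16bit:.0f} GB")
print(f" 8-bit weights: {mem_8bit:.0f} GB")
print(f"Fits on the assumed {NODE_MEMORY_GB} GB server at 16-bit? {mem_16bit <= NODE_MEMORY_GB}")
print(f"Fits on the assumed {NODE_MEMORY_GB} GB server at 8-bit?  {mem_8bit <= NODE_MEMORY_GB}")
```

Under these assumptions the 16-bit weights alone overflow the server, while the 8-bit weights leave some headroom for everything else the model needs at inference time.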

Push it to the limit!

The new model got way better at following instructions and chatting. Meta’s AI went through several rounds of fine-tuning, including:

- Supervised Fine-Tuning

- Rejection Sampling

- Direct Preference Optimization
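To give a feel for the last item on that list, here is a minimal sketch of the Direct Preference Optimization loss for a single preference pair, written in plain Python. It follows the standard published formulation and is not Meta’s training code; the function names and the `beta` value are assumptions chosen for illustration.

```python
import math


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed strength of the pull toward the reference model
) -> float:
    """DPO loss for one (chosen, rejected) pair of responses.

    Inputs are summed log-probabilities of each response under the model
    being trained (policy) and under a frozen reference model.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logits)) is the same as softplus(-logits)
    return softplus(-logits)


# If the policy matches the reference exactly, the loss is log(2).
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
# Raising the chosen answer and lowering the rejected one reduces the loss.
print(dpo_loss(-8.0, -14.0, -10.0, -12.0))
```

The idea is that the model gets rewarded for making the preferred answer more likely than the rejected one, relative to where it started, without training a separate reward model.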

Building Meta Llama 3.1 405b meant juggling lots of skills. The team worked hard to keep it sharp with different text lengths while also baking in safety measures.

Meta Llama 3.1 405b is meant to play nice with other AI tools like OpenAI’s GPT and Google’s Gemini. This setup lets coders mix and match to create custom solutions for specific jobs.

The Meta Llama 3.1 405b package comes with extra goodies like Llama Guard 3, a safety model that speaks many languages, and Prompt Guard, which blocks sneaky input tricks.

These tools aim to help people build and use AI responsibly.

Safety first

Meta is all over AI safety as governments try to figure it out. They’re backing new safety groups and teaming up with old hands like NIST and MLCommons to nail down shared definitions, threat models, and ways to test things.

Meta also works with crews like the Frontier Model Forum and Partnership on AI to develop best practices and keep the conversation going with everyone.

As explained in their recent blog post, before letting a model loose, Meta hunts for and tames potential risks in various ways. They check for dangers before launch, run safety tests and tweaks, and put the model through its paces with both outside and inside experts.

As Llama 3.1 learned new tricks like speaking more languages and handling longer text, Meta ramped up their safety checks to match.

Meta wants to help coders protect against possible Llama misuse. They’ve woven safety measures throughout the model’s growth and whipped up a toolbox of safeguards so coders can tailor their AI apps.

The company teams up with other giants like AWS, NVIDIA, and Databricks to make sure safety tools come bundled with Llama models, pushing (and demanding) the responsible use of Llama systems.

Red team in action

Meta runs tons of red team drills using both human pros and AI-powered methods. They buddy up with subject gurus in key risk areas and have gathered a crew of experts from all sorts of backgrounds to see how their models hold up against different types of troublemakers.

The company has sized up and tamed risks in areas like cybersecurity, chemical and biological weapons, and child safety. They’ve run tests to see if Llama 3.1 405B could seriously up the game of bad actors in these areas.

So far, they haven’t seen any big jumps in what people with bad intentions can do with Llama 3.1 405B.

Meta is also dead set on building AI models that play by the Safety by Design rules, especially when it comes to keeping kids safe.

They’ve baked in these principles by carefully sourcing training data and keeping it free of child-exploitation material.

Llama 3.1 405B also went through privacy checkups at various points during training. Meta used techniques to reduce memorization of private information and ran red team drills to spot and fix privacy weak spots.

How does the Llama 3 herd of models compare?

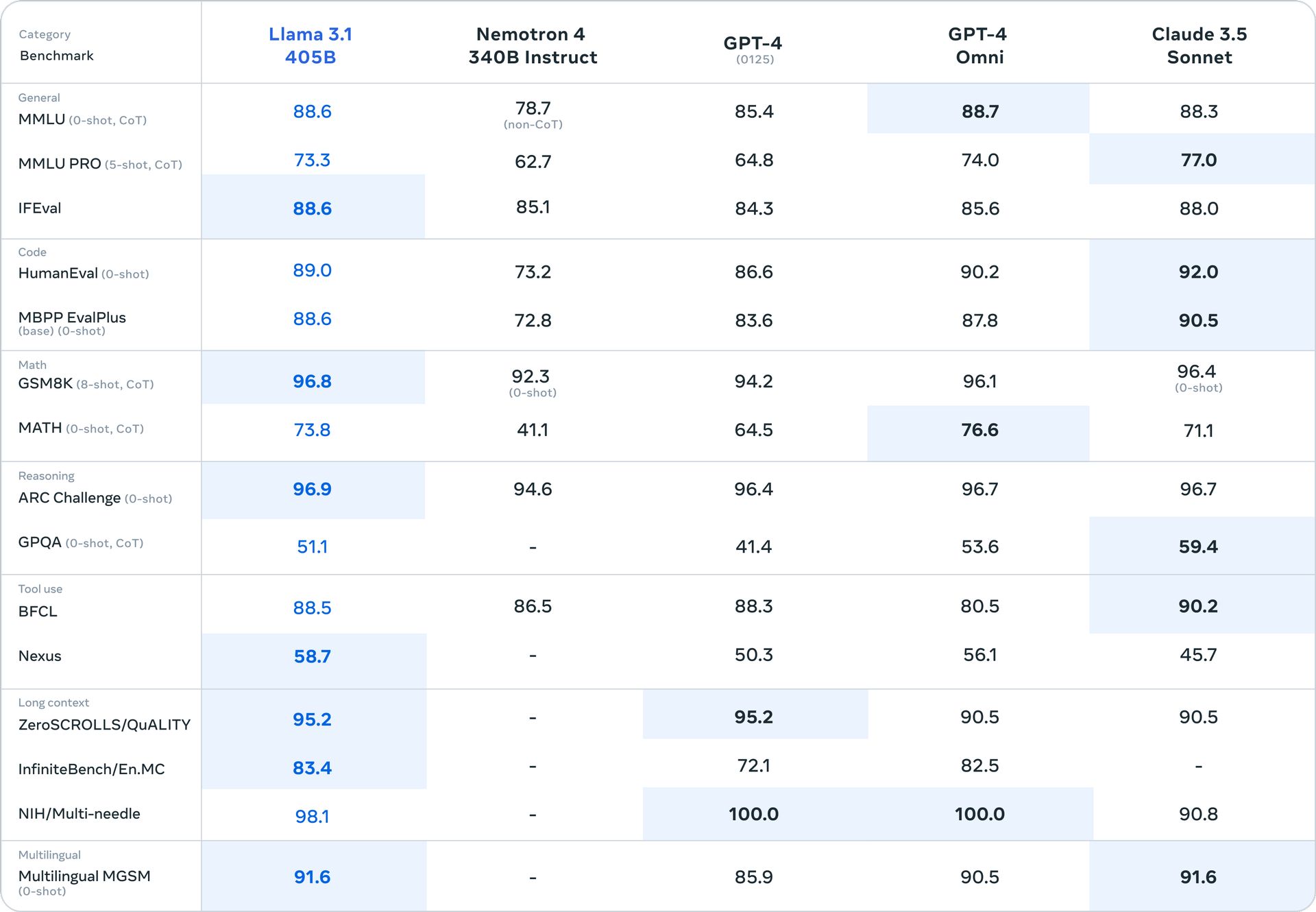

Meta has provided benchmark results of Meta Llama 3.1 405b in their blog post. Looking at these charts, Meta Llama 3.1 405b stacks up pretty well against other top AI models.

Here’s the scoop:

General smarts

Meta Llama 3.1 405b scores 88.6 on the MMLU test, which checks general knowledge. That’s right up there with GPT-4 Omni (88.7) and Claude 3.5 Sonnet (88.3), and it beats GPT-4 (85.4) and Nemotron 4 (78.7) by a good margin.

Coding skills

For coding (HumanEval), Meta Llama 3.1 405b scores 89.0. That puts it close behind GPT-4 Omni (90.2) and Claude 3.5 Sonnet (92.0), and ahead of base GPT-4 (86.6) and Nemotron 4 (73.2).

Math skills

Meta Llama 3.1 405b really shines in math. It scores 96.8 on GSM8K, beating out all the others including GPT-4 Omni (96.1) and Claude 3.5 Sonnet (96.4).

Thinking skills

In the ARC Challenge for reasoning, Meta Llama 3.1 405b scores 96.9, slightly edging out GPT-4 Omni and Claude 3.5 Sonnet (both 96.7) as well as GPT-4 (96.4).

Long text handling

Meta Llama 3.1 405b scores 95.2 on ZeroSCROLLS/QuALITY, tying with GPT-4 and beating GPT-4 Omni and Claude 3.5 Sonnet (both 90.5).

Multiple languages

For the Multilingual MGSM test, Meta Llama 3.1 405b scores 91.6, matching Claude 3.5 Sonnet and beating GPT-4 (85.9) and GPT-4 Omni (90.5).
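For a quick side-by-side, the scores quoted in this section can be dropped into a small table and checked for the leader on each test. This is only a convenience sketch: the numbers are the ones cited above, models without a quoted score on a test are simply left out, and the helper name `leaders` is made up for illustration.

```python
# Benchmark scores as quoted in this article (higher is better).
SCORES = {
    "MMLU": {"Meta Llama 3.1 405b": 88.6, "GPT-4 Omni": 88.7,
             "Claude 3.5 Sonnet": 88.3, "GPT-4": 85.4, "Nemotron 4": 78.7},
    "HumanEval": {"Meta Llama 3.1 405b": 89.0, "GPT-4 Omni": 90.2,
                  "Claude 3.5 Sonnet": 92.0, "GPT-4": 86.6, "Nemotron 4": 73.2},
    "GSM8K": {"Meta Llama 3.1 405b": 96.8, "GPT-4 Omni": 96.1,
              "Claude 3.5 Sonnet": 96.4},
    "ARC Challenge": {"Meta Llama 3.1 405b": 96.9, "GPT-4 Omni": 96.7,
                      "Claude 3.5 Sonnet": 96.7, "GPT-4": 96.4},
    "ZeroSCROLLS/QuALITY": {"Meta Llama 3.1 405b": 95.2, "GPT-4 Omni": 90.5,
                            "Claude 3.5 Sonnet": 90.5, "GPT-4": 95.2},
    "Multilingual MGSM": {"Meta Llama 3.1 405b": 91.6, "GPT-4 Omni": 90.5,
                          "Claude 3.5 Sonnet": 91.6, "GPT-4": 85.9},
}


def leaders(benchmark_scores: dict) -> list:
    """Return every model that holds the top score, sorted by name (ties included)."""
    best = max(benchmark_scores.values())
    return sorted(name for name, score in benchmark_scores.items() if score == best)


for benchmark, benchmark_scores in SCORES.items():
    top = max(benchmark_scores.values())
    print(f"{benchmark}: {', '.join(leaders(benchmark_scores))} ({top})")
```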

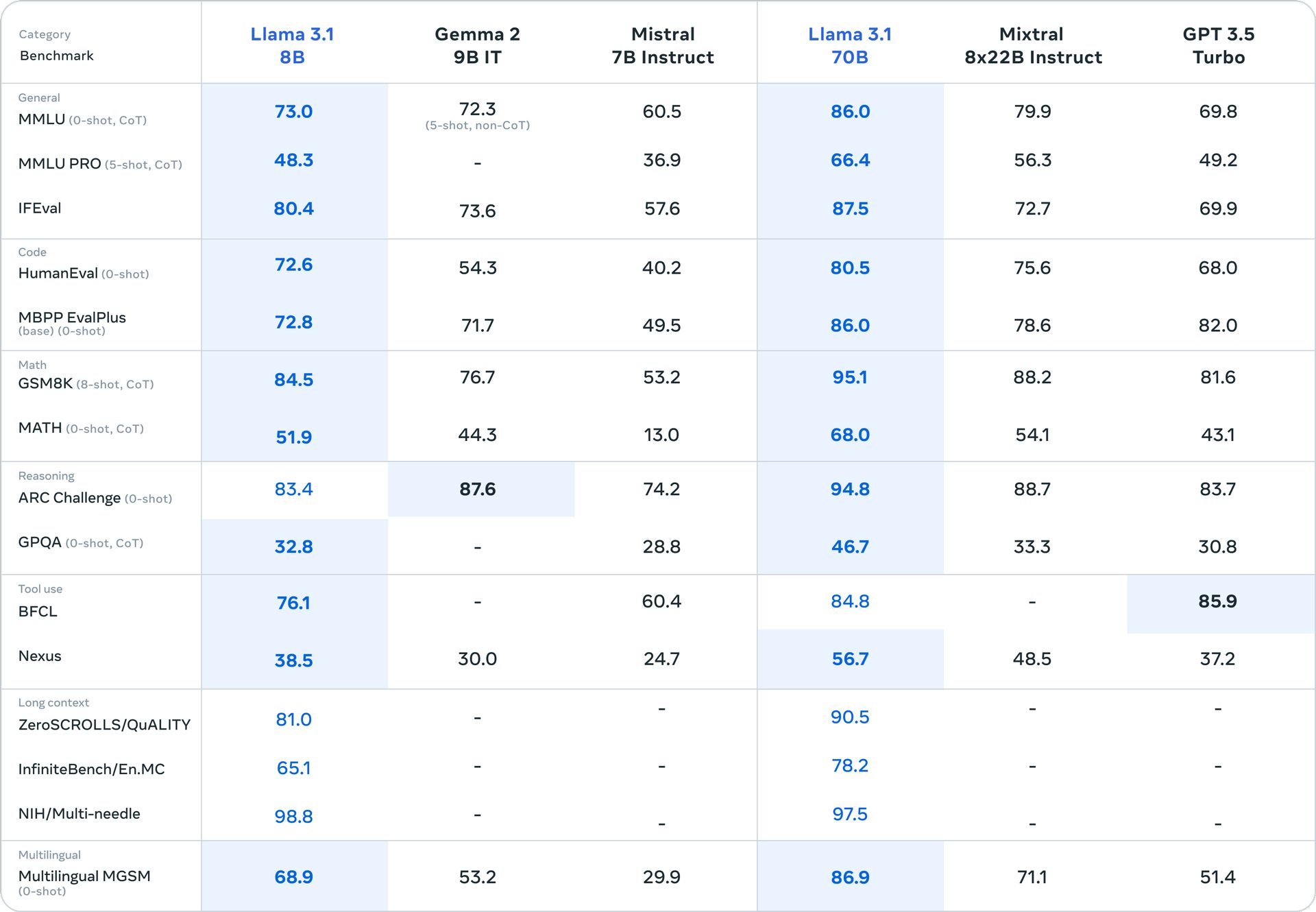

Smaller versions

The smaller Meta Llama 3.1 models (8B and 70B) also do well for their size. The 70B version often outperforms other models like Mixtral 8x22B and GPT-3.5 Turbo across various tests.

How to use Meta Llama 3.1 405b

To use Meta Llama 3.1 405b, you’ve got a few options:

Download it

Head to llama.meta.com or Hugging Face. Grab the model files and set it up on your own machine or server. This way’s best if you’ve got some serious computing power and know-how.

Cloud platforms

Meta’s teamed up with big players like AWS, NVIDIA, and Databricks. They’ve got Meta Llama 3.1 405b ready to roll on their cloud services. This route’s great if you want the heavy lifting done for you.

Partner platforms

Lots of AI companies have jumped on board to offer Meta Llama 3.1 405b through their services. This could be the easiest way to start tinkering without getting too technical.

Local setup

If you’re tech-savvy and have a beefy computer, you can run smaller versions of Meta Llama 3.1 locally. The 405B model is too big for most personal setups, though.

Fine-tuning

For specific tasks, you can fine-tune Meta Llama 3.1 405b on your own data. This takes some know-how but can make the model super sharp for your needs.

Hugging Face Transformers

If you’re into coding, the Hugging Face Transformers library makes it easier to work with Meta Llama 3.1 405b in Python.
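As a rough idea of what that looks like, here is a minimal sketch using the Transformers `pipeline` API. A few assumptions to flag: the repo id below is the 8B instruct variant on Hugging Face (picked because the 405B model won’t fit on most machines), you have accepted the license and logged in to access the gated repo, and you have a recent `transformers` release plus `torch` and `accelerate` installed. The helper names `build_messages` and `generate` are made up for this example.

```python
from typing import Dict, List

# Assumption: Hugging Face repo id of the smaller 8B instruct model.
# Swap in a larger variant only if your hardware can hold it.
MODEL_ID = "meta-llama/Meta-Llama-3.1-8B-Instruct"


def build_messages(
    user_prompt: str,
    system_prompt: str = "You are a helpful assistant.",
) -> List[Dict[str, str]]:
    """Build a chat-style message list in the role/content format."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate(user_prompt: str, model_id: str = MODEL_ID, max_new_tokens: int = 128) -> str:
    """Load the model and return the assistant's reply to one prompt."""
    # Imported lazily so build_messages works without the heavy dependencies.
    import torch
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model=model_id,
        torch_dtype=torch.bfloat16,  # 16-bit weights to save memory
        device_map="auto",           # needs accelerate; spreads layers across devices
    )
    outputs = pipe(build_messages(user_prompt), max_new_tokens=max_new_tokens)
    # With chat input, generated_text holds the whole conversation;
    # the final entry is the assistant's reply.
    return outputs[0]["generated_text"][-1]["content"]
```

Calling `generate("Explain what a context window is in one sentence.")` downloads the weights on first use, so expect a wait and a hefty chunk of disk space.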

Remember, Meta Llama 3.1 405b is an open-source model. This means you can dig into its core, tweak it, and use it in ways that fit your project best.

Just keep an eye on the license terms to make sure you’re playing by the rules.

By putting all this work out in the open, Meta hopes to give coders the power to build systems that fit their style and customize safety for their specific needs.

As these tech toys keep evolving, Meta plans to keep polishing these features and models, helping people build, create, and connect in fresh and exciting ways.

Meanwhile in Zuck’s world

Building on Meta’s AI push, Zuckerberg’s got big dreams for content creators. He’s cooking up AI sidekicks that’ll chat with fans, freeing up creators to do their thing. These digital mini-mes would soak up a creator’s social media vibe and goals, then interact with followers like a savvy stand-in.

This ties right into Meta Llama 3.1 405b’s wheelhouse. This brainy model could be the secret sauce powering these AI helpers, with its knack for human-like chatter and wide-ranging smarts. It’s got the chops to maybe pull off that personality-mimicking trick Zuck’s dreaming of.

But here’s the rub: AI has still got some growing pains.

Remember the Shrimp Jesus incident? Meta’s earlier AI bots stumbled, spouting nonsense in Facebook groups. While Meta Llama 3.1 405b’s stepped up its game, it’s not perfect. Creators might hesitate to hand over fan interactions to a bot that could goof up.

Zuckerberg knows he’s got some convincing to do. He’s betting on Meta Llama 3.1 405b and pals to win folks over. But with some creators already side-eyeing Meta’s AI training habits, it’s an uphill battle.

The race is on to make AI helpers that creators actually trust.

Featured image credit: Meta