Meta’s latest LLM is out; meet Llama 3. This open-source wonder isn’t just another upgrade, and soon, you will learn why.

Forget complicated jargon and technicalities. Meta Llama 3 is here to simplify AI and bring it to your everyday apps. But what sets Meta Llama 3 apart from its predecessors? Imagine asking Meta Llama 3 to perform calculations, fetch information from databases, or even run custom scripts, all with just a few words. Sound good? Here are all the details you need to know about Meta’s latest AI move.

What is Meta Llama 3 exactly?

Meta Llama 3 is the latest generation of open-source large language models developed by Meta. It represents a significant advancement in artificial intelligence, building on the foundation laid by its predecessors, Llama 1 and Llama 2. To measure that progress, Meta built a new human evaluation set of 1,800 prompts covering 12 key use cases:

- Asking for advice

- Brainstorming

- Classification

- Closed question answering

- Coding

- Creative writing

- Extraction

- Inhabiting a character/persona

- Open question answering

- Reasoning

- Rewriting

- Summarization

The evaluation covers a wide range of scenarios to test the model’s versatility and real-world applicability.

Here are the key statistics and features that define Llama 3:

Model sizes

- 8 billion parameters: The smaller of the two models released at launch, efficient enough for a broad range of applications.

- 70 billion parameters: A larger, more powerful model that excels in complex tasks and demonstrates superior performance on industry benchmarks.

Training data

- 15 trillion tokens: The model was trained on an extensive dataset consisting of over 15 trillion tokens, which is seven times larger than the dataset used for Llama 2.

- 4x more code: The training data includes four times more code compared to Llama 2, enhancing its ability to handle coding and programming tasks.

- 30+ languages: Includes high-quality non-English data covering over 30 languages, making it more versatile and capable of handling multilingual tasks.

Training infrastructure

- 24K GPU clusters: Training ran on two custom-built clusters of 24,000 GPUs each, and Meta’s most efficient implementation achieved a compute utilization of over 400 TFLOPS per GPU when training on 16,000 GPUs simultaneously.

- 95% effective training time: An improved training stack and better reliability mechanisms pushed effective training time above 95%; combined with other optimizations, this made Llama 3 training about three times more efficient than Llama 2’s.
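To put these figures in perspective, here is a back-of-the-envelope estimate of the training compute. It assumes the common rule of thumb that training a dense transformer costs about 6 × parameters × tokens FLOPs, that every GPU sustains the 400 TFLOPS quoted above for the whole run, and (for the wall-clock figure) that 16,000 GPUs run in parallel. These are simplifying assumptions for illustration, not numbers Meta reported.

```python
# Back-of-the-envelope training-compute estimate for Llama 3.
# Assumptions (illustrative, not reported figures): the ~6 * N * D FLOPs rule
# of thumb for dense transformers and a constant 400 TFLOPS sustained per GPU.

TOKENS = 15e12              # ~15 trillion training tokens
TFLOPS_PER_GPU = 400e12     # 400 TFLOPS, expressed in FLOP/s
PARALLEL_GPUS = 16_000      # assumed number of GPUs training at once


def training_flops(params: float, tokens: float = TOKENS) -> float:
    """Approximate total training FLOPs with the 6 * N * D rule."""
    return 6 * params * tokens


def gpu_hours(params: float, flops_per_gpu: float = TFLOPS_PER_GPU) -> float:
    """GPU-hours needed if every GPU ran at `flops_per_gpu` the whole time."""
    return training_flops(params) / flops_per_gpu / 3600


if __name__ == "__main__":
    for name, n in [("8B", 8e9), ("70B", 70e9)]:
        hours = gpu_hours(n)
        days = hours / PARALLEL_GPUS / 24  # ideal wall-clock days
        print(f"{name}: {training_flops(n):.2e} FLOPs, "
              f"{hours / 1e6:.2f}M GPU-hours, ~{days:.1f} days")
```

Under these assumptions the 70B model works out to roughly 6.3 × 10^24 FLOPs, or about 4.4 million GPU-hours (a little over 11 days on 16,000 GPUs), while the 8B model needs about 0.5 million GPU-hours. Real runs take longer, since GPUs never sustain peak throughput for an entire run.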

Popular feature: Llama 3 function calling

Function calling lets Llama 3 work with external tools instead of relying only on what it learned during training. The application describes the available functions (their names and parameters) in the prompt; when a request calls for one, the model replies with a structured call naming the function and its arguments, and the application runs it and passes the result back to the model. The model itself does not execute anything. This lets users trigger predefined actions directly from a conversation: for example, they can ask Llama 3 to perform calculations, retrieve information from external databases, or run custom scripts, and the model requests the appropriate function on the user’s behalf. This makes Llama 3 more useful as a virtual assistant or AI-powered tool and easier to integrate with existing workflows and applications.
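A function-calling setup is a loop between the model and your application, and the sketch below shows only the application side: parsing the model’s request, running the matching function, and returning the result. It assumes the model was prompted to answer with JSON of the form `{"name": ..., "arguments": {...}}`; that format, the `FUNCTIONS` registry, and the `add` and `lookup_price` helpers are hypothetical illustrations, not an official Llama 3 API.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical tools the application exposes to the model.
def add(a: float, b: float) -> float:
    return a + b


PRICES = {"widget": 9.99, "gadget": 24.50}  # stand-in for a real database


def lookup_price(item: str) -> float:
    return PRICES[item]


# Registry mapping function names the model may request to Python callables.
FUNCTIONS: Dict[str, Callable[..., Any]] = {"add": add, "lookup_price": lookup_price}


def dispatch(model_output: str) -> str:
    """Parse a JSON function call emitted by the model, run it, and return
    the result as a JSON string to feed back into the conversation."""
    try:
        call = json.loads(model_output)
        fn = FUNCTIONS[call["name"]]
        result = fn(**call.get("arguments", {}))
        return json.dumps({"name": call["name"], "result": result})
    except (json.JSONDecodeError, KeyError, TypeError) as err:
        # Report malformed or unknown calls back to the model instead of crashing.
        return json.dumps({"error": f"{type(err).__name__}: {err}"})


if __name__ == "__main__":
    # Example text a model might produce after being shown the function list.
    reply = '{"name": "add", "arguments": {"a": 2, "b": 3}}'
    print(dispatch(reply))  # {"name": "add", "result": 5}
```

Returning errors as data rather than raising keeps the conversation going: the model can read the error message and retry with corrected arguments.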

The burning question: What can Llama 3 do that Llama 1 and Llama 2 can’t do?

First of all, Meta Llama 3 offers significantly better reasoning than Llama 1 and Llama 2. It handles multi-step logical problems more reliably and is better at spotting patterns in the information it is given. For example, Llama 3 can work through advanced problem-solving tasks, provide detailed explanations, and connect disparate pieces of information. These capabilities are particularly useful in applications that demand critical thinking and careful analysis, such as scientific research, legal reasoning, and technical support, where understanding the nuances and implications of complex queries is essential.

Llama 3 also excels at code generation, thanks to a training dataset with four times more code than Llama 2’s. It can automate coding tasks, generate boilerplate code, and suggest improvements, making it a valuable tool for developers. In addition, Meta released Code Shield, an inference-time filter that flags insecure code suggested by the model, helping reduce (though not eliminate) the risk of shipping vulnerable code.

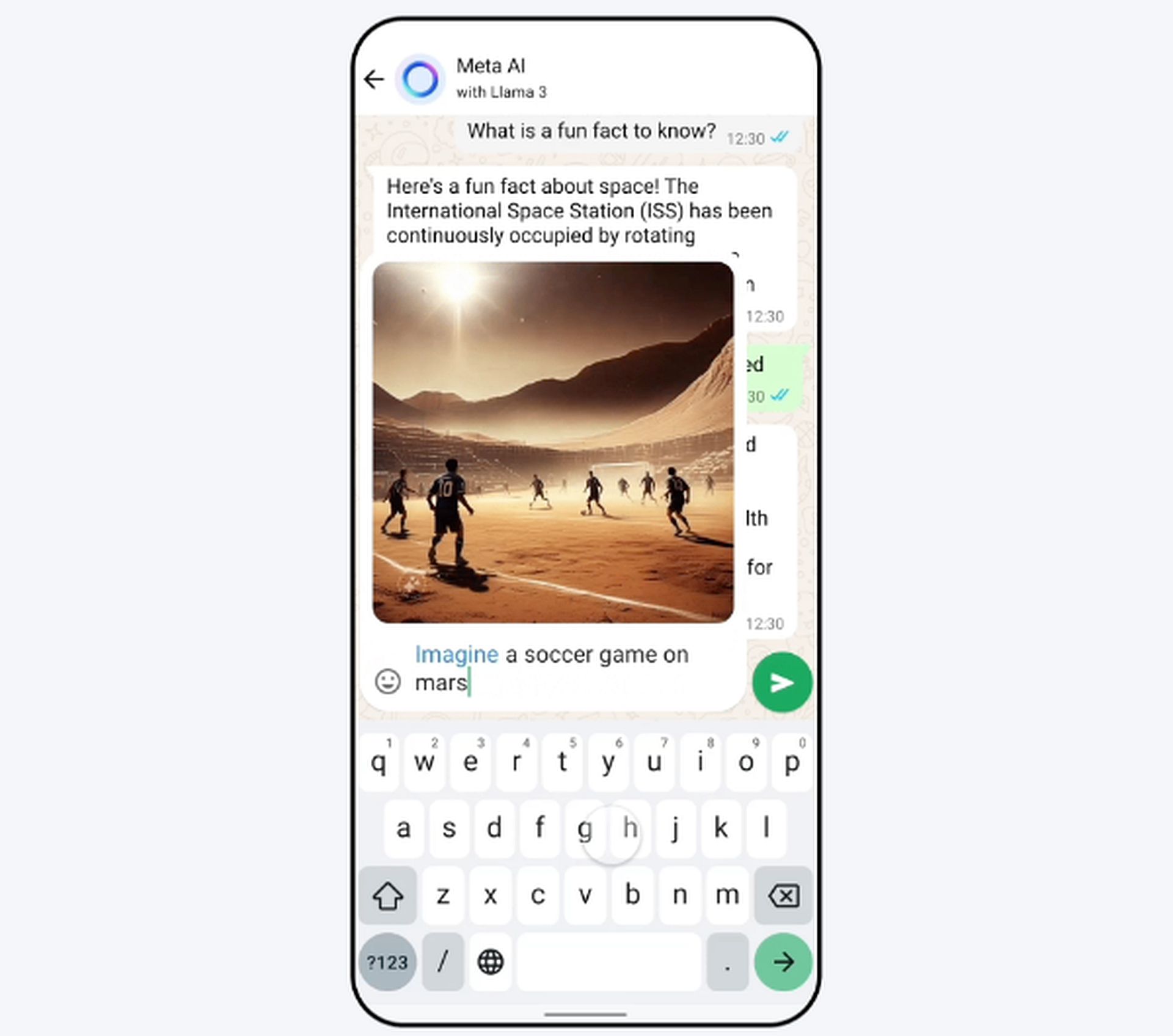

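What’s more, Llama 3’s training data includes high-quality non-English text covering over 30 languages, giving it broader multilingual reach than Llama 1 and Llama 2. This makes it more versatile for global use, enabling inclusive and accessible AI solutions across diverse linguistic environments. Note that the initial Llama 3 models are text-only: multimodal input such as images is planned for future releases rather than part of this one.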

Llama 3 also has a longer context window than its predecessors: 8,192 tokens, double Llama 2’s 4,096. This helps it stay coherent across extended conversations and longer documents, which is useful for long-form content creation, detailed technical documentation, and comprehensive customer support, where context and continuity are key.
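When a document is longer than the model’s context window, a common workaround is to split it into overlapping chunks that each leave room for instructions and the answer. The sketch below works on a list of token IDs; the 8,192-token window matches the released Llama 3 models, while the `reserved` and `overlap` defaults are arbitrary example settings, and in practice the token IDs would come from the model’s tokenizer.

```python
from typing import List

CONTEXT_WINDOW = 8192  # Llama 3's context length, in tokens


def chunk_tokens(tokens: List[int], reserved: int = 1024, overlap: int = 256,
                 window: int = CONTEXT_WINDOW) -> List[List[int]]:
    """Split `tokens` into overlapping chunks that fit in `window` tokens
    while leaving `reserved` tokens for instructions and the model's reply."""
    if not tokens:
        return []
    size = window - reserved          # tokens of document text per chunk
    if size <= overlap:
        raise ValueError("overlap must be smaller than the usable chunk size")
    step = size - overlap             # how far each new chunk advances
    chunks = []
    # Stop once the remaining text is already covered by the previous overlap.
    for start in range(0, max(len(tokens) - overlap, 1), step):
        chunks.append(tokens[start:start + size])
    return chunks


if __name__ == "__main__":
    doc = list(range(20_000))  # stand-in for a tokenized 20K-token document
    print([len(c) for c in chunk_tokens(doc)])
```

For a 20,000-token document, the defaults produce three chunks of at most 7,168 tokens, each sharing 256 tokens with its neighbor so that text near a boundary appears in both chunks.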

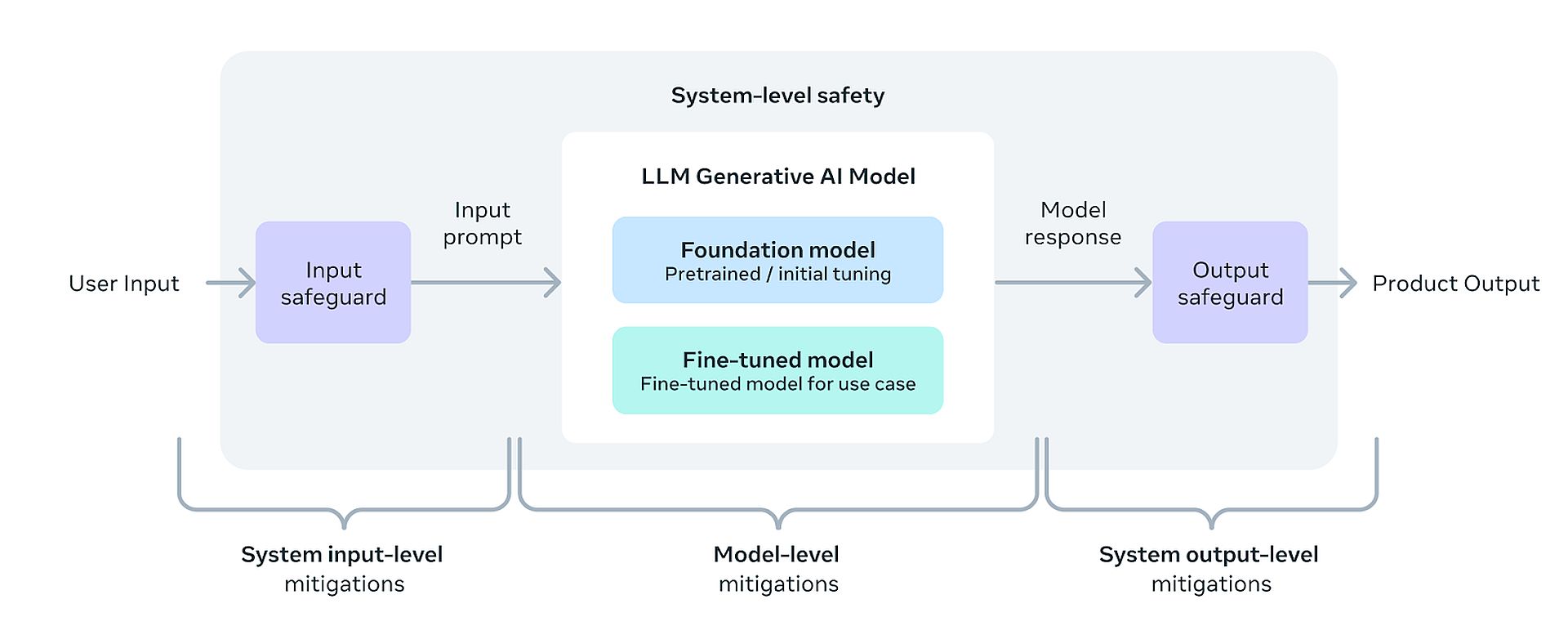

Llama 3 launched alongside updated trust and safety tools: Llama Guard 2 and CyberSec Eval 2, which update tools Meta first released in the Llama 2 era, and the new Code Shield. These tools help developers reduce risks such as the model generating harmful or insecure content, which matters when deploying Llama 3 in sensitive and regulated industries.
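Tools like Llama Guard 2 are typically run as a separate classifier that checks a conversation before and after the main model responds. The sketch below shows that wrapper pattern. `toy_classify` and `toy_generate` are hypothetical stand-ins (a keyword check and an echo function) so the example runs on its own; in a real deployment the classifier would be a Llama Guard 2 model, whose reply, as we understand its model card, starts with `safe` or `unsafe`, followed on the next line by the violated category codes. Treat that parsing as an assumption to verify against the current model card.

```python
from typing import Callable, List, Tuple


def parse_guard_output(text: str) -> Tuple[bool, List[str]]:
    """Parse a Llama Guard-style verdict: 'safe', or 'unsafe' followed by a
    line of comma-separated category codes (format assumed, see lead-in)."""
    lines = text.strip().splitlines()
    is_safe = bool(lines) and lines[0].strip() == "safe"
    categories: List[str] = []
    if not is_safe and len(lines) > 1:
        categories = [c.strip() for c in lines[1].split(",") if c.strip()]
    return is_safe, categories


def guarded_reply(prompt: str,
                  generate: Callable[[str], str],
                  classify: Callable[[str], str]) -> str:
    """Check the user prompt, generate a reply, then check the reply too."""
    ok, cats = parse_guard_output(classify(prompt))
    if not ok:
        return f"Request blocked ({', '.join(cats) or 'unspecified'})."
    reply = generate(prompt)
    # A real guard model would receive the conversation in its own prompt template.
    ok, cats = parse_guard_output(classify(prompt + "\n" + reply))
    if not ok:
        return f"Response withheld ({', '.join(cats) or 'unspecified'})."
    return reply


# Hypothetical stand-ins so the sketch runs without any model.
def toy_classify(text: str) -> str:
    return "unsafe\nS1" if "attack" in text.lower() else "safe"


def toy_generate(prompt: str) -> str:
    return f"Echo: {prompt}"
```

Note that `parse_guard_output` fails closed: an empty or unrecognized verdict is treated as unsafe.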

Llama 3’s optimized architecture and training make it more capable and efficient; its new tokenizer, for instance, produces up to 15% fewer tokens than Llama 2’s for the same text. The models are available on major cloud platforms like AWS, Google Cloud, and Microsoft Azure, and supported by leading hardware providers like NVIDIA and Qualcomm. This broad availability and improved token efficiency help make deployment at scale smoother and more cost-effective.

How to use Meta Llama 3?

As we mentioned, Meta Llama 3 is a versatile and powerful large language model that can be used in various applications. Using Meta Llama 3 is straightforward and accessible through Meta AI. But do you know how to access it? Here is how:

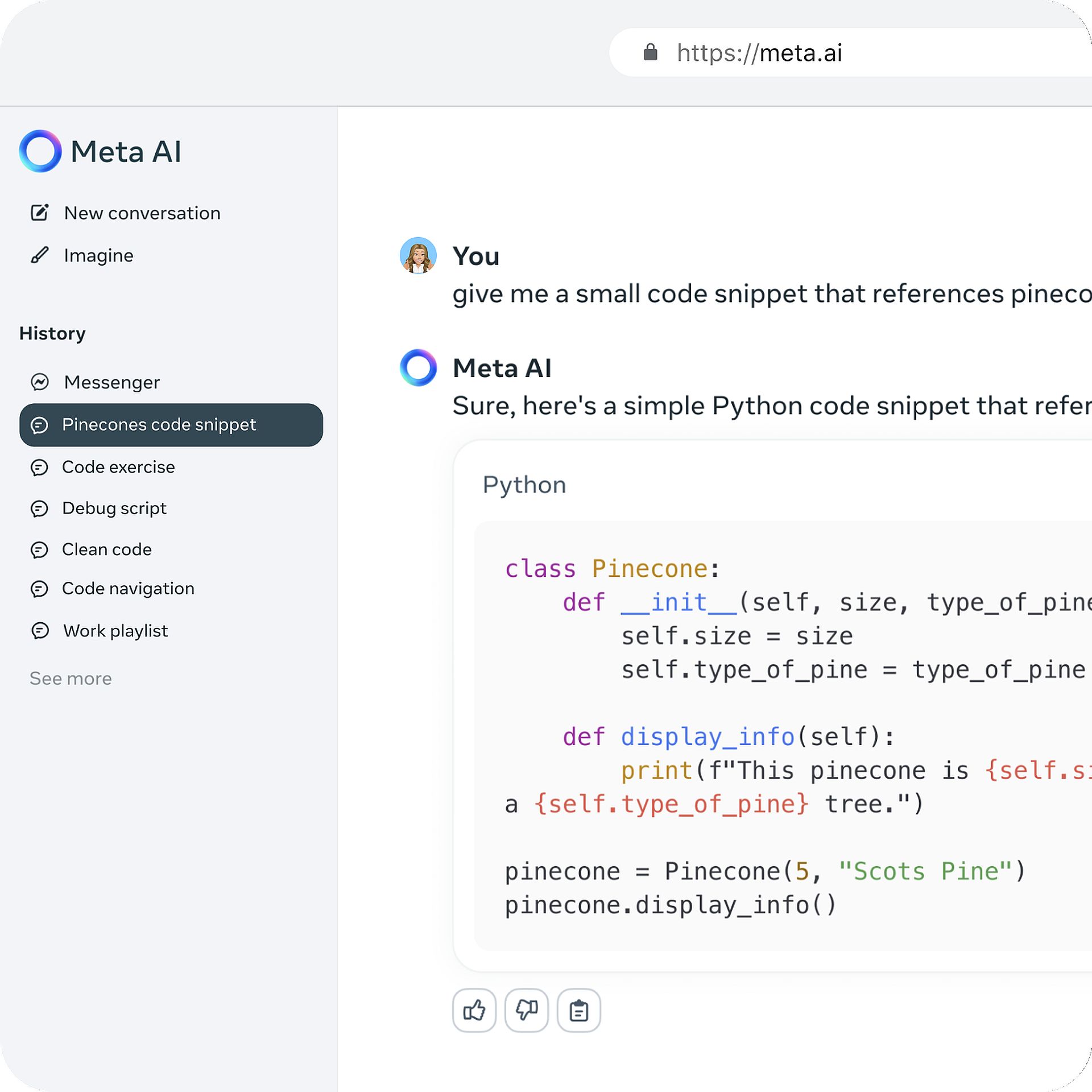

- Access Meta AI: Meta AI, powered by Llama 3 technology, is integrated into various Meta platforms, including Facebook, Instagram, WhatsApp, Messenger, and the web. Simply access any of these platforms to start using Meta AI.

- Utilize Meta AI: Once you’re on a Meta platform, you can use Meta AI to accomplish various tasks. Whether you want to get things done, learn new information, create content, or connect with others, Meta AI is there to assist you.

- Access Meta AI across platforms: Whether you’re browsing Facebook, chatting on Messenger, or using any other Meta platform, Meta AI is accessible wherever you are. Seamlessly transition between platforms while enjoying the consistent support of Meta AI.

- Visit the Llama 3 website: For more information and resources on Meta Llama 3, visit the official Llama 3 website. There, you can request access to download the models (after accepting Meta’s license) and read the Getting Started Guide to learn how to integrate Llama 3 into your projects and applications.
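If you download the instruction-tuned models and run them yourself, each conversation has to be formatted with Llama 3’s special tokens. The helper below builds that prompt string following the template published in Meta’s Llama 3 model card. Libraries such as Hugging Face `transformers` can produce it for you through the tokenizer’s `apply_chat_template` method, so treat this as an illustration of the format rather than something you must hand-roll.

```python
from typing import Dict, List


def format_llama3_chat(messages: List[Dict[str, str]]) -> str:
    """Render a list of {"role", "content"} messages in the Llama 3 Instruct
    prompt format, ending with an open assistant header for the model to fill."""
    prompt = "<|begin_of_text|>"
    for msg in messages:
        prompt += (
            f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n"
            f"{msg['content'].strip()}<|eot_id|>"
        )
    # The model writes its reply after this header and ends it with <|eot_id|>.
    prompt += "<|start_header_id|>assistant<|end_header_id|>\n\n"
    return prompt


if __name__ == "__main__":
    print(format_llama3_chat([
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "What is the capital of France?"},
    ]))
```

The open assistant header at the end is what cues the model to answer, and generation should stop when the model emits `<|eot_id|>`.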

Deep dive: Llama 3 architecture

Llama 3 uses a relatively standard decoder-only transformer architecture, optimized for natural language processing tasks. It stacks many layers, each combining self-attention with a feedforward neural network, and encodes token positions with rotary positional embeddings (RoPE) applied inside the attention layers.

Key components include:

- Tokenizer: Uses a vocabulary of 128K tokens (up from 32K in Llama 2), which encodes text more efficiently and contributes to better model performance.

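- Grouped Query Attention (GQA): Used in both the 8B and 70B models to improve inference efficiency. Groups of query heads share a single key/value head, which shrinks the memory needed to cache keys and values during generation.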

- Training data: Pretrained on an extensive dataset of over 15 trillion tokens, including a significant portion of code samples, enabling robust language understanding and code generation capabilities.

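- Scaling up pretraining: Meta developed detailed scaling laws to choose the data mix and allocate training compute. It found that performance kept improving well past the compute-optimal amount of data: for an 8B model that is roughly 200B tokens, yet both the 8B and 70B models continued to improve log-linearly up to 15 trillion tokens.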

- Instruction fine-tuning: Post-training combines supervised fine-tuning, rejection sampling, proximal policy optimization (PPO), and direct preference optimization (DPO) to improve model quality and alignment with user preferences.

- Trust and safety tools: Meta ships Llama Guard 2, Code Shield, and CyberSec Eval 2 alongside the models to promote responsible use and mitigate deployment risks.
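Grouped query attention is easiest to understand through the key/value cache it saves. In GQA, several query heads share one key/value head, so the cache of past keys and values shrinks by the ratio of query heads to KV heads. The sketch below computes that head mapping and the cache size per token. The configuration values (32 layers, 32 query heads, 8 KV heads, head size 128 for the 8B model) are taken from the publicly released model configuration as we understand it, and the multi-head attention variant is a hypothetical baseline for comparison; double-check the values against the official config before relying on the numbers.

```python
from dataclasses import dataclass


@dataclass
class AttentionConfig:
    n_layers: int
    n_q_heads: int     # query heads per layer
    n_kv_heads: int    # key/value heads per layer (must divide n_q_heads)
    head_dim: int
    bytes_per_value: int = 2  # bf16 / fp16


def kv_head_for(q_head: int, cfg: AttentionConfig) -> int:
    """Index of the shared KV head that a given query head attends with."""
    group_size = cfg.n_q_heads // cfg.n_kv_heads
    return q_head // group_size


def kv_cache_bytes_per_token(cfg: AttentionConfig) -> int:
    """Bytes of keys and values cached per token across all layers."""
    return 2 * cfg.n_layers * cfg.n_kv_heads * cfg.head_dim * cfg.bytes_per_value


# Llama 3 8B-style settings (assumed; see lead-in).
gqa = AttentionConfig(n_layers=32, n_q_heads=32, n_kv_heads=8, head_dim=128)
# Hypothetical baseline: same model with one KV head per query head (standard MHA).
mha = AttentionConfig(n_layers=32, n_q_heads=32, n_kv_heads=32, head_dim=128)

if __name__ == "__main__":
    print([kv_head_for(h, gqa) for h in range(8)])  # [0, 0, 0, 0, 1, 1, 1, 1]
    for name, cfg in [("GQA", gqa), ("MHA", mha)]:
        per_tok = kv_cache_bytes_per_token(cfg)
        full = per_tok * 8192 / 2**30  # GiB for a full 8,192-token context
        print(f"{name}: {per_tok // 1024} KiB/token, {full:.1f} GiB at 8K tokens")
```

With these values the cache drops from 512 KiB to 128 KiB per token, or from 4 GiB to 1 GiB for a full 8K-token context: a 4× saving per sequence, which leaves room for longer contexts or larger batches on the same hardware.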

Overall, Llama 3’s architecture prioritizes efficiency, scalability, and model quality, making it a powerful tool for a wide range of natural language processing applications.

What’s more?

Meta is still training Llama 3 models with over 400 billion parameters, which promise even greater performance and capabilities, pushing the boundaries of natural language processing.

Upcoming versions of Llama 3 are expected to add multimodality, the ability to converse in multiple languages, and a longer context window, expanding their versatility and global applicability.

Meta’s decision to release Llama 3 as open-source software fosters innovation and collaboration in the AI community, promoting transparency and knowledge sharing.

Meta AI, powered by Llama 3, aims to boost productivity by helping users learn, create content, and connect more efficiently. Multimodal Meta AI features are also coming soon to Ray-Ban Meta smart glasses, extending Llama 3’s reach into everyday interactions.

Featured image credit: Meta