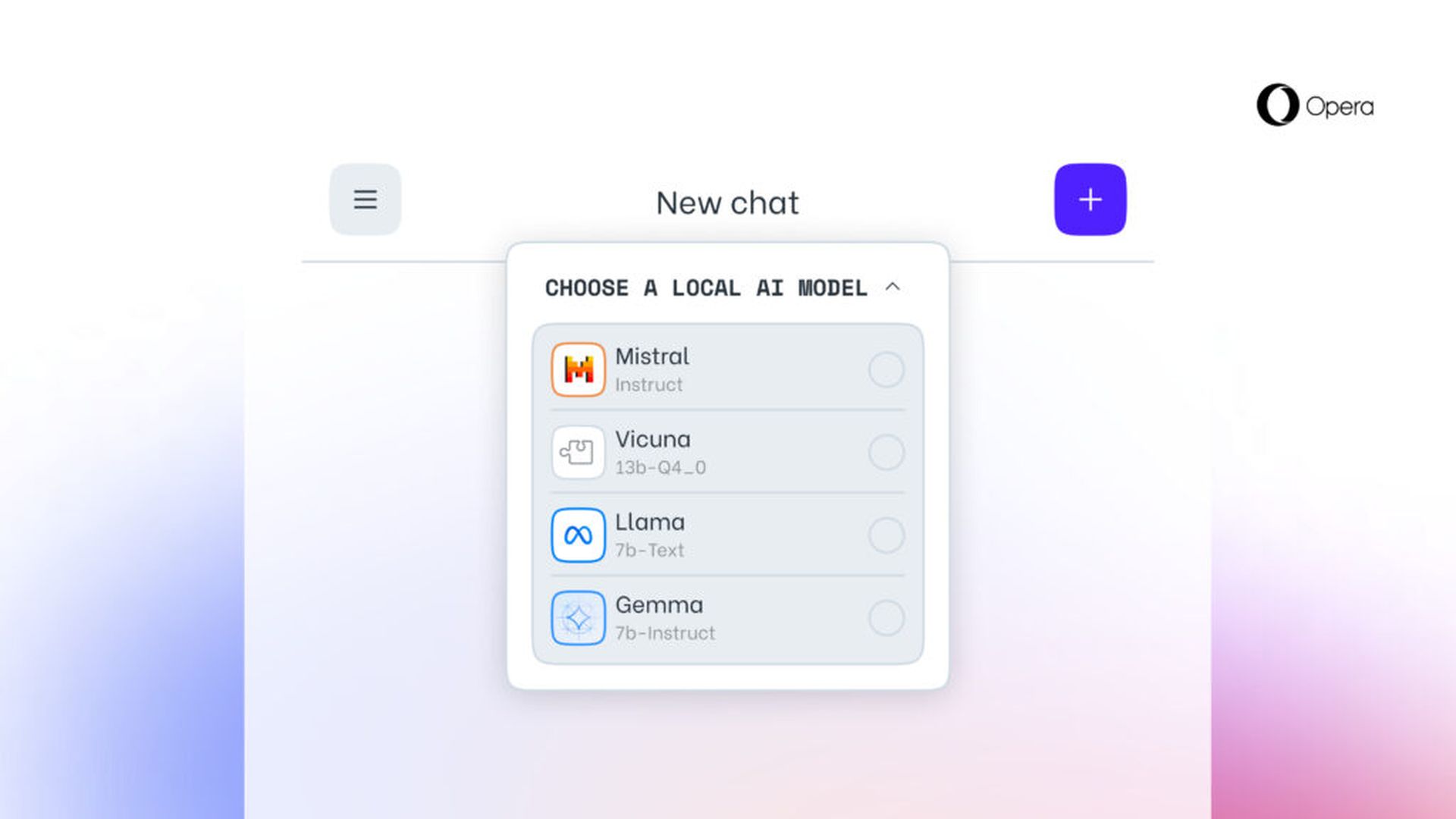

Opera today announced experimental support for about 150 local Large Language Model (LLM) variants from roughly 50 model families. The feature debuts in Opera One on the Developer stream, where users can choose which of these models to run.

The lineup includes Llama from Meta, Gemma from Google, and Vicuna. The feature reaches users through Opera’s AI Feature Drops Program, which gives early access to some of the browser’s AI features. The models run locally on your computer, inside the browser, using the open-source Ollama framework. For now, every model on offer comes from the Ollama library, but the company hopes to include models from other sources in the future.
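To give a feel for what running a model through Ollama looks like outside the browser, here is a minimal sketch that talks to a standalone Ollama server over its local REST API. It assumes Ollama is installed separately and listening on its default address, and that a model such as `gemma:2b` has already been pulled. Whether Opera's built-in integration exposes the same endpoint is not stated in the announcement, so treat this as an illustration rather than a description of Opera's internals.

```python
import json
import urllib.request

# Assumption: a standalone Ollama server on its default local address.
# Opera's built-in integration may not expose this endpoint.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the server answers with a single JSON object
    # whose "response" field holds the generated text.
    return body["response"]
```

With a server running, `ask_local_model("gemma:2b", "What is a local LLM?")` would return the model's answer as a string. The prompt never leaves the machine, which is the privacy argument for local models.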

According to the company, each variant needs more than 2 GB of space on your local machine, so keep an eye on your free space to avoid running out. Notably, Opera does not appear to do anything to save storage when downloading a model.
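If you want to check free space before downloading, a small script can help. The sketch below uses Python's standard `shutil.disk_usage`. The function name `has_room_for_model` and the 5 GB default reserve are illustrative choices, not anything Opera provides.

```python
import shutil

GIB = 1024 ** 3  # bytes in one gibibyte


def has_room_for_model(path: str, model_gb: float, reserve_gb: float = 5.0) -> bool:
    """Return True if the disk holding `path` can fit the model plus a reserve.

    `reserve_gb` is an arbitrary safety margin (an assumption, tune to taste)
    so that the download does not fill the disk completely.
    """
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= (model_gb + reserve_gb) * GIB
```

For example, `has_room_for_model(".", 2.0)` checks whether the current drive can take a 2 GB model and still leave 5 GB free.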

If you would rather save space, you can try other models online through services such as Hugging Face and Quora’s Poe; the local option is mainly useful if you want to test models on your own machine.

Local LLMs process data directly on the user’s device, which allows the use of generative AI while keeping information private and off external servers. Opera is introducing local LLMs in the Opera One developer channel as part of its new AI Feature Drops Program, which lets early adopters test experimental versions of the browser’s AI features early and often. Starting today, Opera One Developer users can select the model they want to use to process their input.

How to try built-in LLMs in Opera?

To try these models, upgrade to the latest version of Opera Developer and follow these steps:

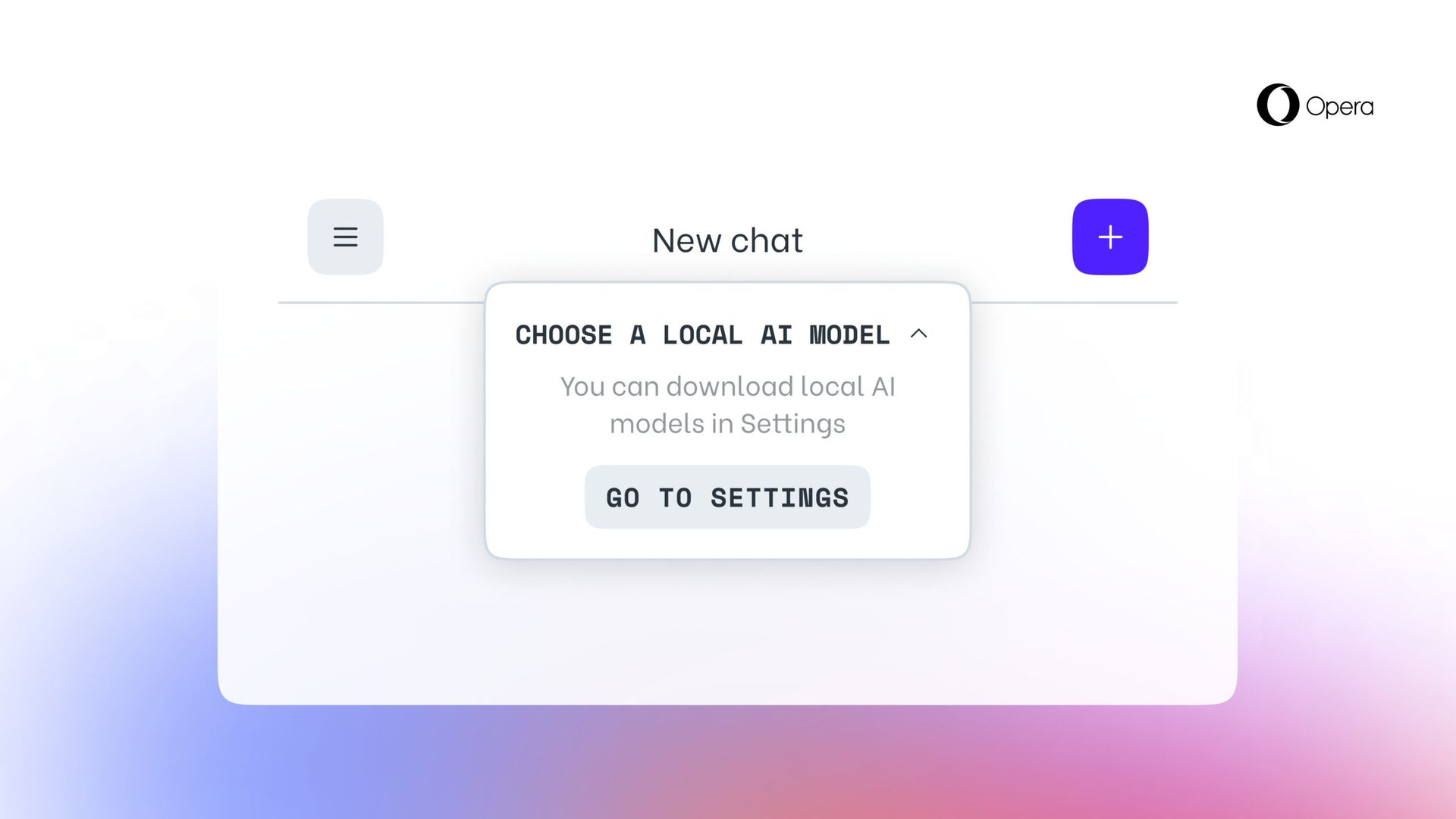

- Access the Aria Chat sidebar as you’ve done previously. At the chat’s top, there’s a drop-down menu labeled “Choose local mode.”

- Select “Go to settings” from this menu.

- This area allows you to explore and decide which model(s) you wish to download.

- For a smaller and faster option, consider downloading the model named GEMMA:2B-INSTRUCT-Q4_K_M by clicking the download icon located to its right.

- Once the download has finished, click the menu button in the top left corner to start a new chat.

- Again, you’ll see a drop-down menu at the chat’s top reading “Choose local mode” where you can select the model you’ve just installed.

- Enter your prompt into the chat, and the locally hosted model will respond.
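The model name in the steps above packs several details into one string. As a hedged illustration, the sketch below splits a name like `GEMMA:2B-INSTRUCT-Q4_K_M` into family, parameter size, variant, and quantization. The `family:size-variant-quantization` pattern is an assumption inferred from this one name and from common Ollama library tags; not every model follows it, and `parse_model_tag` is a hypothetical helper.

```python
from typing import NamedTuple


class ModelTag(NamedTuple):
    family: str        # e.g. "gemma"
    size: str          # e.g. "2b" (about 2 billion parameters)
    variant: str       # e.g. "instruct"
    quantization: str  # e.g. "q4_k_m" (a 4-bit quantization scheme)


def parse_model_tag(name: str) -> ModelTag:
    """Split a model name, assuming the pattern family:size-variant-quant."""
    family, _, tag = name.lower().partition(":")
    parts = tag.split("-") if tag else []
    size = parts[0] if parts else ""
    # Treat the last piece as a quantization label only if it starts with "q".
    has_quant = len(parts) > 1 and parts[-1].startswith("q")
    quantization = parts[-1] if has_quant else ""
    middle = parts[1:-1] if has_quant else parts[1:]
    return ModelTag(family, size, "-".join(middle), quantization)
```

Read this way, the suggested model is a small, instruction-tuned Gemma variant with roughly 2 billion parameters stored at about 4 bits per weight, which is why it is a smaller and faster option.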

Users choose a local LLM to download onto their device; each variant requires between 2 and 10 GB of storage. The local model is then used instead of Aria, Opera’s built-in browser AI, until the user starts a new chat with the AI or switches Aria back on.
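The storage range follows roughly from parameter count and precision. The back-of-the-envelope sketch below multiplies the number of weights by the bits stored per weight. It is a lower bound under simplifying assumptions: real model files are somewhat larger because of metadata and mixed-precision layers, and `estimate_model_gb` is a hypothetical helper, not an Opera or Ollama function.

```python
def estimate_model_gb(params_billion: float, bits_per_weight: float) -> float:
    """Rough weight-only size in GB (10^9 bytes).

    size = parameters * bits per weight / 8 bits per byte.
    Ignores file metadata and layers kept at higher precision,
    so actual downloads tend to be larger.
    """
    return params_billion * bits_per_weight / 8
```

Under these assumptions, a 7-billion-parameter model at 4 bits per weight comes to about 3.5 GB, and a 13-billion-parameter model to about 6.5 GB, both within the 2 to 10 GB range quoted above.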

Featured image credit: Opera