Large language models (LLMs) have demonstrated remarkable abilities: they can chat conversationally, generate creative text in many formats, and much more. Yet when asked to provide detailed factual answers to open-ended questions, they can still fall short. LLMs may provide plausible-sounding but incorrect information, leaving users with the challenge of sorting fact from fiction.

Google DeepMind, a leading AI research lab, is tackling this issue head-on. Its recent paper, “Long-form factuality in large language models,” introduces innovations in both how we measure factual accuracy and how we can improve it in LLMs.

LongFact: A benchmark for factual accuracy

DeepMind started by addressing the lack of a robust method for testing long-form factuality. They created LongFact, a dataset of over 2,000 challenging fact-seeking prompts that demand detailed, multi-paragraph responses. These prompts cover a broad array of topics to test an LLM’s ability to produce factual text in diverse subject areas.

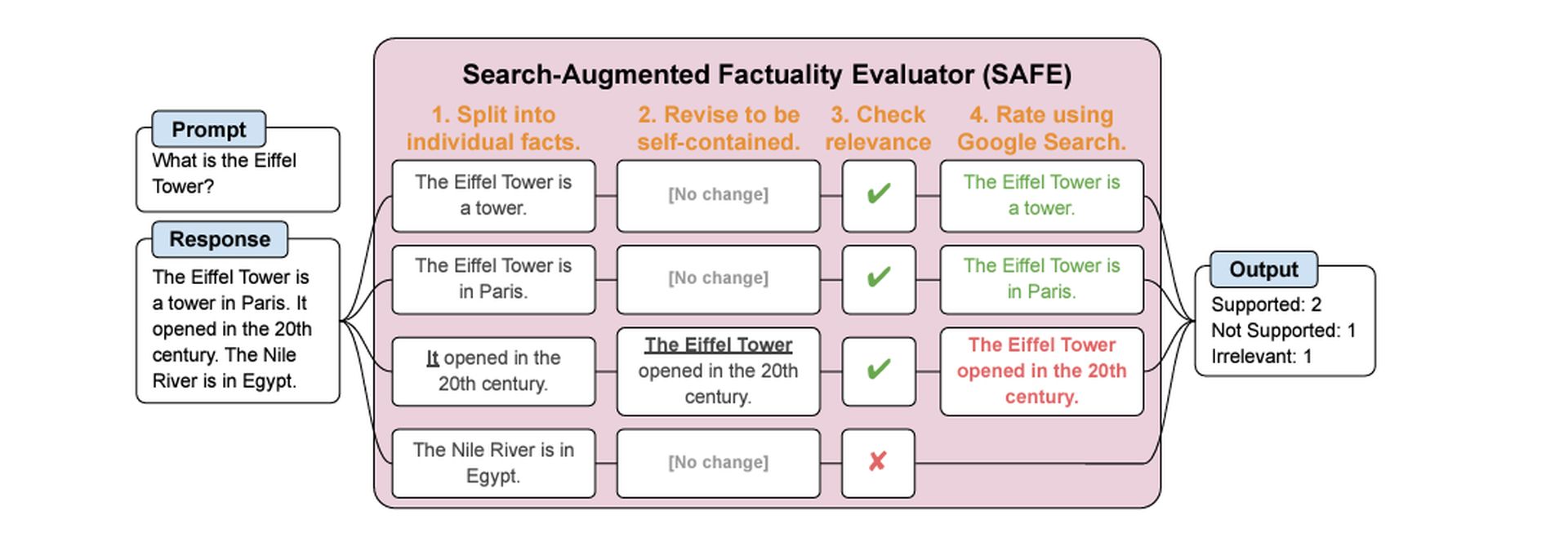

SAFE: Search-augmented factuality evaluation

The next challenge was determining how to accurately evaluate LLM responses. DeepMind developed the Search-Augmented Factuality Evaluator (SAFE). Here’s the clever bit: SAFE itself uses an LLM to make this assessment!

Here’s how it works:

- Break it down: SAFE dissects a long-form LLM response into smaller individual factual statements.

- Search and verify: For each factual statement, SAFE crafts search queries and sends them to Google Search.

- Make the call: SAFE analyzes the search results and compares them to the factual statement, determining if the statement is supported by the online evidence.
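The three steps above can be sketched in a few lines of Python. This is only a toy illustration of the control flow, not DeepMind’s implementation: the function names, the `TOY_INDEX` corpus, and the string-matching rules are all assumptions made for this example. In the real system, an LLM does the splitting and the judging, and the evidence comes from Google Search.

```python
from dataclasses import dataclass

# Hypothetical stand-in for a search engine; SAFE itself queries Google Search.
TOY_INDEX = [
    "The Eiffel Tower is located in Paris, France.",
    "The Eiffel Tower was completed in 1889.",
]


@dataclass
class Verdict:
    fact: str
    supported: bool


def split_into_facts(response: str) -> list[str]:
    """Step 1 (stub): treat each sentence as one fact. SAFE uses an LLM here."""
    return [s.strip() for s in response.split(".") if s.strip()]


def search(query: str) -> list[str]:
    """Step 2 (stub): return toy-index entries that share a word with the query."""
    words = set(query.lower().split())
    return [doc for doc in TOY_INDEX
            if words & set(doc.lower().rstrip(".").split())]


def is_supported(fact: str, results: list[str]) -> bool:
    """Step 3 (stub): supported if a result contains the fact verbatim.
    SAFE instead asks an LLM to reason over the search results."""
    return any(fact.lower() in doc.lower() for doc in results)


def evaluate(response: str) -> list[Verdict]:
    """Run all three steps and return one verdict per extracted fact."""
    verdicts = []
    for fact in split_into_facts(response):
        results = search(fact)
        verdicts.append(Verdict(fact, is_supported(fact, results)))
    return verdicts


verdicts = evaluate(
    "The Eiffel Tower was completed in 1889. "
    "The Eiffel Tower is located in Rome."
)
supported = sum(v.supported for v in verdicts)
not_supported = len(verdicts) - supported
print(supported, not_supported)
```

The counts of supported and unsupported facts are what a scoring metric can then consume.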

F1@K: A new metric for long-form responses

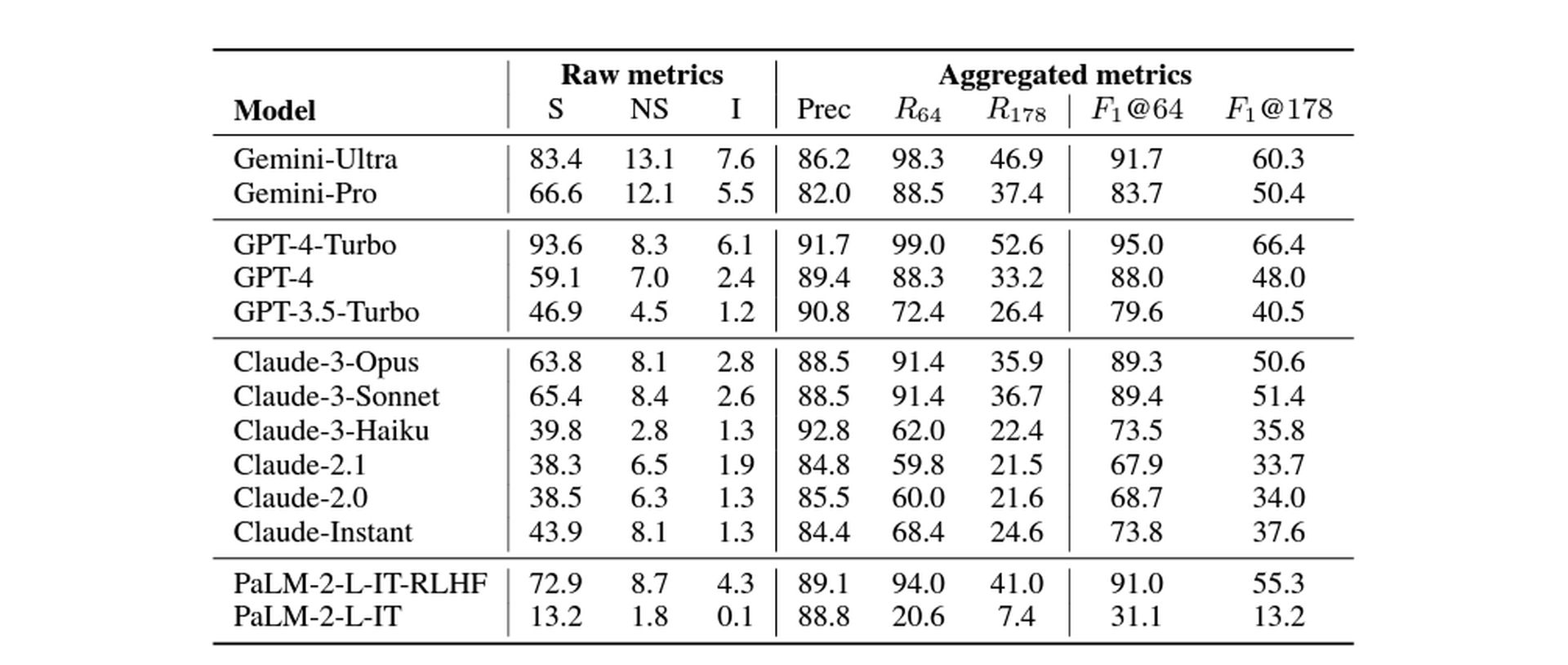

DeepMind also proposed a new way to score long-form factual responses. The traditional F1 score, familiar from classification tasks, needs a fixed set of expected answers to compute recall, and no such set exists for an open-ended response. F1@K balances precision (the percentage of provided facts that are supported) against a length-aware notion of recall.

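Recall takes into account a user’s ideal response length, expressed as K, the number of supported facts the user would like to see. It is the number of supported facts in the response divided by K, capped at 1. Without it, an LLM could achieve perfect precision by providing a single correct fact and outscore a detailed answer that contains one small error.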
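To make the trade-off concrete, here is a small Python sketch of the metric as the paper defines it: precision is S / (S + N), recall is min(S / K, 1), and F1@K is their harmonic mean (or 0 when nothing is supported), where S and N are the numbers of supported and unsupported facts. The function name `f1_at_k` and the sample counts are made up for illustration, and K = 64 is used here only as an example value.

```python
def f1_at_k(supported: int, not_supported: int, k: int) -> float:
    """F1@K from counts of supported and unsupported facts.

    k is the number of supported facts the user ideally wants.
    """
    if supported == 0:
        return 0.0  # a response with no supported facts scores zero
    precision = supported / (supported + not_supported)
    recall = min(supported / k, 1.0)  # capped: extra facts beyond k add nothing
    return 2 * precision * recall / (precision + recall)


# One correct fact and nothing else: perfect precision, almost no recall.
one_fact = f1_at_k(supported=1, not_supported=0, k=64)

# A detailed answer with some mistakes: lower precision, far better recall.
detailed = f1_at_k(supported=48, not_supported=16, k=64)

print(round(one_fact, 3))  # 0.031
print(round(detailed, 3))  # 0.75
```

The single-fact answer scores about 0.03 despite being 100% precise, while the longer answer scores 0.75, which is the behavior the recall term is meant to produce.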

Bigger LLMs, better facts

DeepMind benchmarked a range of large language models of varying sizes, and the findings aligned with the intuition that larger models tend to demonstrate greater long-form factual accuracy. One plausible explanation is that larger models have more capacity to retain what they encounter in their massive training datasets of text and code, which gives them richer and more comprehensive knowledge of the world.

Imagine an LLM like a student who has studied a vast library of books. The more books the student has read, the more likely they are to have encountered and retained factual information on a wide range of topics. Similarly, a larger LLM with its broader exposure to information is better equipped to generate factually sound text.

To perform this measurement, Google DeepMind tested models from the following families: Gemini, GPT, Claude (versions 3 and 2), and PaLM. The results are as follows:

The takeaway: Cautious optimism

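DeepMind’s study shows a promising path toward LLMs that can deliver more reliable factual information. In the paper’s comparison with crowdsourced human annotators, SAFE agreed with the human label on 72% of individual facts, and in a random sample of 100 disagreements SAFE’s verdict was judged correct 76% of the time.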

However, it’s crucial to note the limitations:

- Search engine dependency: SAFE’s accuracy relies on the quality of search results and the LLM’s ability to interpret them.

- Non-repeating facts: The F1@K metric assumes an ideal response won’t contain repetitive information.

Despite these limitations, this work is a meaningful step toward more truthful AI systems. As LLMs continue to evolve, their ability to accurately convey facts could have profound impacts on how we use these models to find information and understand complex topics.
