Meta’s new V-JEPA model is designed to change how computers comprehend video. Rather than working through every pixel-level detail, V-JEPA focuses on the bigger picture, which makes it easier for machines to interpret interactions between objects and scenes.

What is Meta’s new V-JEPA model?

Meta’s new V-JEPA model, short for Video Joint Embedding Predictive Architecture, is designed to understand videos in a way that’s closer to how humans do. Where many earlier approaches work at the level of individual pixels, V-JEPA looks at the bigger picture, such as how objects and scenes interact.

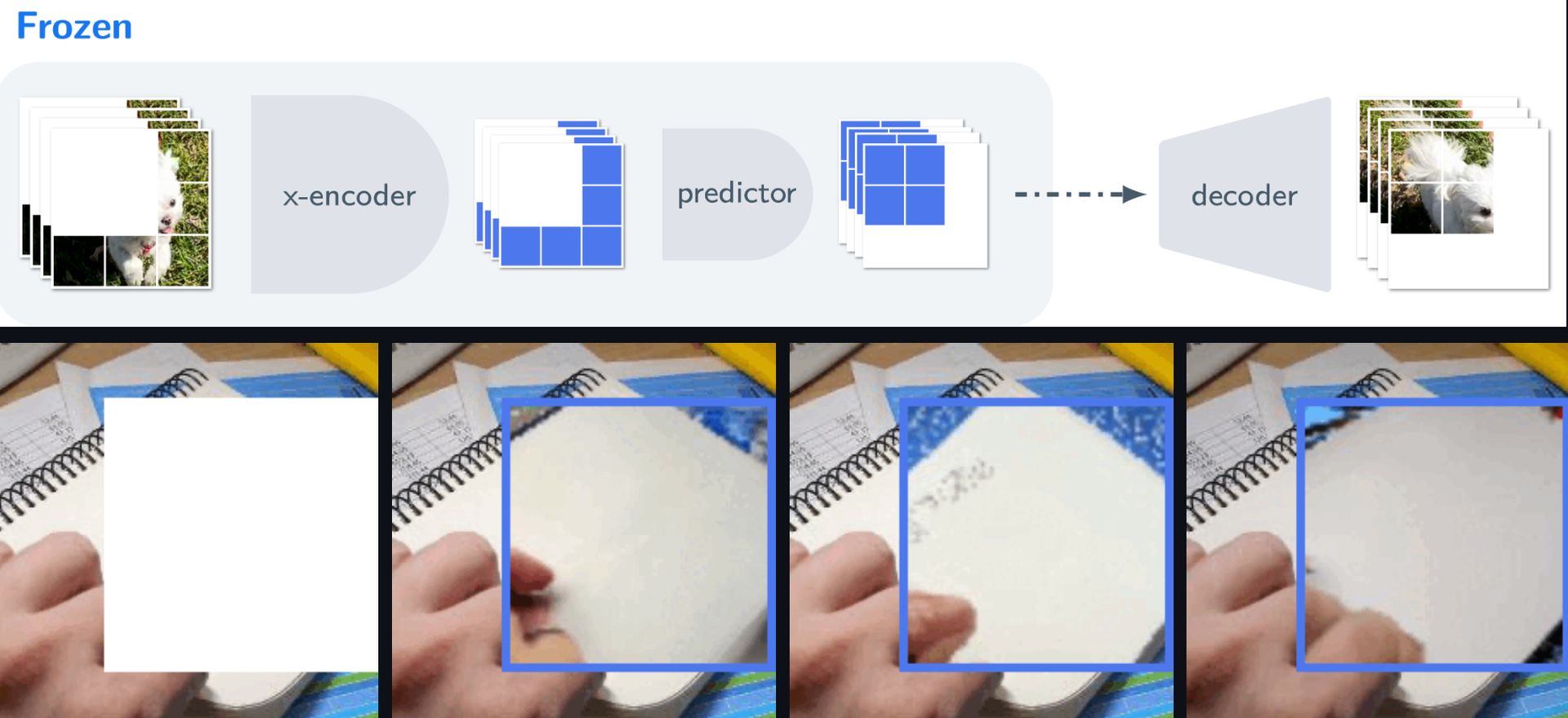

Is V-JEPA generative? No. Unlike Sora, OpenAI’s new text-to-video AI tool, Meta’s V-JEPA model does not generate content. Generative models attempt to reconstruct missing parts of a video at the pixel level, whereas V-JEPA predicts missing or masked regions in an abstract representation space. It never fills in missing pixels directly. Instead, it learns to understand the content and interactions within videos at a higher level of abstraction, which enables more efficient learning and adaptation across tasks.
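To make that distinction concrete, here is a minimal Python sketch of a loss measured in representation space rather than pixel space. Everything in it is an assumption made for illustration: `encode`, `mean_context_predictor`, and `representation_loss` are hypothetical names, the "encoder" is a hand-written two-number summary of a patch rather than a learned network, and the absolute-difference distance is simply an easy choice. It is not Meta’s implementation.

```python
def encode(patch):
    """Hypothetical stand-in for a learned encoder.

    Maps a patch (a list of pixel values) to a short abstract summary:
    its average brightness and its contrast (max minus min).
    """
    return [sum(patch) / len(patch), max(patch) - min(patch)]


def mean_context_predictor(context_embeddings):
    """Toy predictor: guess the average of the visible patches' embeddings."""
    count = len(context_embeddings)
    dims = len(context_embeddings[0])
    return [sum(e[d] for e in context_embeddings) / count for d in range(dims)]


def representation_loss(patches, masked, predictor):
    """Mean absolute error between predicted and actual embeddings of masked patches.

    No pixels are reconstructed: prediction and target are both embeddings.
    Assumes at least one patch is masked and at least one is visible.
    """
    context = [encode(p) for p, hidden in zip(patches, masked) if not hidden]
    errors = []
    for patch, hidden in zip(patches, masked):
        if hidden:
            target = encode(patch)      # embedding of the hidden patch
            guess = predictor(context)  # predicted from visible patches only
            errors.append(sum(abs(g - t) for g, t in zip(guess, target)) / len(target))
    return sum(errors) / len(errors)


# Three toy "patches"; the middle one is masked out.
patches = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
masked = [False, True, False]
loss = representation_loss(patches, masked, mean_context_predictor)
print(loss)
```

In a real system both the encoder and the predictor would be learned. The point of the sketch is only that the error is measured between embeddings, so any detail the encoder discards never enters the loss.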

What makes V-JEPA special is how it learns. Instead of needing lots of labeled examples, it learns from unlabeled videos. It’s like how babies learn just by watching, without anyone telling them what’s happening. And because it predicts the missing parts of a video in an abstract form rather than trying to fill in every detail, it learns more efficiently and concentrates on what’s important in a scene.

Another cool thing about V-JEPA is that it can adapt to new tasks without relearning everything from scratch: the pre-trained model is reused, and only a small task-specific part is trained. This saves a lot of time and effort compared to approaches that retrain a model for each new task.
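One common way to adapt a model without retraining it is to keep the pre-trained encoder fixed and fit only a small classifier on its outputs, which is the idea behind the “frozen evaluation” mentioned later in this article. The sketch below illustrates that pattern in plain Python under loud assumptions: `frozen_encoder`, `train_probe`, and `predict` are hypothetical names, the encoder is a hand-written feature function standing in for a pre-trained network, and the perceptron update is just a simple way to train a linear head. It does not reflect V-JEPA’s actual code.

```python
def frozen_encoder(clip):
    """Hypothetical stand-in for a pre-trained encoder; it is never updated here.

    A clip is a list of per-frame brightness values. The features are the
    average brightness and the average frame-to-frame change ("motion").
    """
    mean = sum(clip) / len(clip)
    motion = sum(abs(b - a) for a, b in zip(clip, clip[1:])) / (len(clip) - 1)
    return [mean, motion]


def score(features, weights, bias):
    """Linear score of the small task-specific head."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def train_probe(clips, labels, epochs=50, lr=0.1):
    """Train only the linear head (perceptron rule) on top of frozen features."""
    features = [frozen_encoder(c) for c in clips]  # computed once and reused
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            pred = 1 if score(x, weights, bias) > 0 else 0
            err = y - pred  # zero when the prediction is already right
            weights = [w + lr * err * xi for w, xi in zip(weights, x)]
            bias += lr * err
    return weights, bias


def predict(clip, weights, bias):
    """Classify a clip: 1 means "moving", 0 means "static"."""
    return 1 if score(frozen_encoder(clip), weights, bias) > 0 else 0


# A handful of labeled toy clips: 0 = static scene, 1 = moving scene.
clips = [
    [0.5, 0.5, 0.5, 0.5],
    [0.2, 0.2, 0.2, 0.2],
    [0.0, 1.0, 0.0, 1.0],
    [0.1, 0.9, 0.1, 0.9],
]
labels = [0, 0, 1, 1]
weights, bias = train_probe(clips, labels)
print(predict([0.0, 0.8, 0.0, 0.8], weights, bias))
```

Switching to a different task would mean calling `train_probe` again with new labels while `frozen_encoder` stays untouched, which is why only a few labeled examples are needed in this setup.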

The code is available on V-JEPA’s GitHub page.

Seeing the bigger picture: Why is V-JEPA important?

Meta’s V-JEPA is a big step forward in AI, making it easier for computers to understand videos like humans do. It matters for several reasons:

- Understanding videos like humans: V-JEPA’s ability to understand videos at a deeper level, akin to human cognition, marks a notable advance in AI research on video comprehension.

- Efficient learning and adaptation: One of the key aspects of the model is its self-supervised learning paradigm. Because it learns from unlabeled data and needs only a small number of labeled examples for task-specific adaptation, V-JEPA is more efficient than methods that depend on large labeled datasets. This efficiency is crucial for scaling AI systems and reducing the reliance on extensive human annotation.

- Generalization and versatility: V-JEPA’s capability to generalize its learning across diverse tasks is noteworthy. In its “frozen evaluation” approach, the pre-trained components are left unchanged and reused, so the model can be adapted to various applications without extensive retraining. This versatility is essential for tackling different challenges in AI research and real-world applications.

- Responsible open science: The model’s release under a Creative Commons NonCommercial license underscores Meta’s commitment to open science and collaboration. By sharing the model with the research community, Meta aims to foster innovation and accelerate progress in AI research, ultimately benefiting society as a whole.

In essence, Meta’s V-JEPA model matters because it advances video understanding, learns more efficiently, generalizes across tasks, and is shared openly with the research community. Together, these qualities give it a notable place in AI research and the potential to affect a range of domains.