Convai is using the new NVIDIA ACE technology to push AI NPCs further with generative AI. Its initial demonstration, set in a lifelike ramen shop with the characters Jin and Kai, was impressive. Now Convai is revealing a new phase in AI-driven character technology, one that lets characters hold authentic conversations with players inside games.

By unveiling enhanced capabilities in conversation, perception, and action generation, Convai has taken a significant step toward more immersive gaming experiences. These developments promise to change how players interact with game environments and characters, and they mark a notable advance for AI NPC technology.


Convai welcomes AI NPCs with NVIDIA ACE

NVIDIA ACE represents a groundbreaking step in gaming technology. Imagine a scenario where players can engage in intelligent, unscripted, and dynamic conversations with non-playable characters (NPCs) in games. These NPCs would possess evolving personalities, accurate facial animations, and the ability to interact in the player’s native language. This concept is becoming a reality thanks to generative AI technologies.

At COMPUTEX 2023, NVIDIA announced the future of NPCs with the NVIDIA Avatar Cloud Engine (ACE) for Games. NVIDIA ACE for Games is a bespoke AI model foundry service designed to revolutionize gaming by infusing non-playable characters with AI-powered natural language interactions. This development is poised to transform the gaming experience, making interactions with game characters more realistic and immersive.

Generative AI has the potential to revolutionize the interactivity players can have with game characters and dramatically increase immersion in games. Building on our expertise in AI and decades of experience working with game developers, NVIDIA is spearheading the use of generative AI in games.

-John Spitzer, vice president of developer and performance technology at NVIDIA

NVIDIA ACE for developers

NVIDIA ACE for Games is paving the way for developers in middleware, tools, and games to incorporate advanced AI capabilities. Through this platform, they can build and deploy customized speech, conversation, and animation AI models in their software and games, accessible across both cloud and PC environments.

The optimized AI foundation models integral to NVIDIA ACE for Games include:

- NVIDIA NeMo: This offers foundational language models along with model customization tools, enabling developers to further refine the models to suit their game characters. This customizable large language model (LLM) facilitates the creation of unique character backstories and personalities, tailored to a developer’s game world. NeMo Guardrails also allows developers to set programmable rules for NPCs, ensuring player interactions are consistent with the context of a scene.

- NVIDIA Riva: This provides automatic speech recognition (ASR) and text-to-speech (TTS) capabilities, enabling live speech conversation when integrated with NVIDIA NeMo. This facilitates seamless interaction between players and game characters.

- NVIDIA Omniverse Audio2Face: A standout feature, Audio2Face generates expressive facial animations for game characters from just an audio source. With Omniverse connectors for Unreal Engine 5, developers can directly add facial animations to MetaHuman characters, significantly enhancing realism and expressiveness in-game.

These components of NVIDIA ACE for Games represent a substantial leap forward in game development, offering tools for creating more realistic and interactive gaming experiences. This suite of tools underscores NVIDIA’s commitment to advancing the gaming industry through innovative AI applications.
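The idea of programmable rules that keep an NPC within the context of a scene can be pictured with a small sketch. This is not the NeMo Guardrails API; `SceneRules`, `guarded_reply`, and the keyword-matching approach are hypothetical names and a deliberately simple stand-in, assumed here only to illustrate the concept of checking a player's input before a language model is allowed to answer.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SceneRules:
    """Hypothetical scene rules: topics the NPC may discuss, plus a fallback line."""
    allowed_topics: List[str]
    fallback: str = "Sorry, I only know about the shop."

    def allows(self, player_text: str) -> bool:
        # Naive keyword check; a real guardrail system would be far richer.
        text = player_text.lower()
        return any(topic in text for topic in self.allowed_topics)


def guarded_reply(
    rules: SceneRules,
    player_text: str,
    generate_reply: Callable[[str], str],
) -> str:
    """Call the language model only when the input fits the scene."""
    if not rules.allows(player_text):
        return rules.fallback
    return generate_reply(player_text)


if __name__ == "__main__":
    rules = SceneRules(allowed_topics=["ramen", "shop"])
    # The lambda stands in for a language model call.
    print(guarded_reply(rules, "What ramen do you serve?", lambda t: "Try the spicy miso."))
    print(guarded_reply(rules, "Tell me about stock prices", lambda t: "unused"))
```

Keeping the rule check outside the model call means the scene designer, not the model, decides what counts as in-context.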

How does NVIDIA ACE work?

To show how NVIDIA ACE for Games works in practice and to offer a glimpse of future NPC development, NVIDIA collaborated with Convai, an NVIDIA Inception startup dedicated to creating and deploying AI characters in games and virtual worlds. The partnership focused on optimizing and integrating ACE modules into the Convai platform.

The Kairos demo, a showcase of NVIDIA ACE for Games, drew on several NVIDIA technologies.

With NVIDIA ACE for Games, Convai’s tools can achieve the latency and quality needed to make AI non-playable characters available to nearly every developer in a cost efficient way.

-Purnendu Mukherjee, founder and CEO at Convai

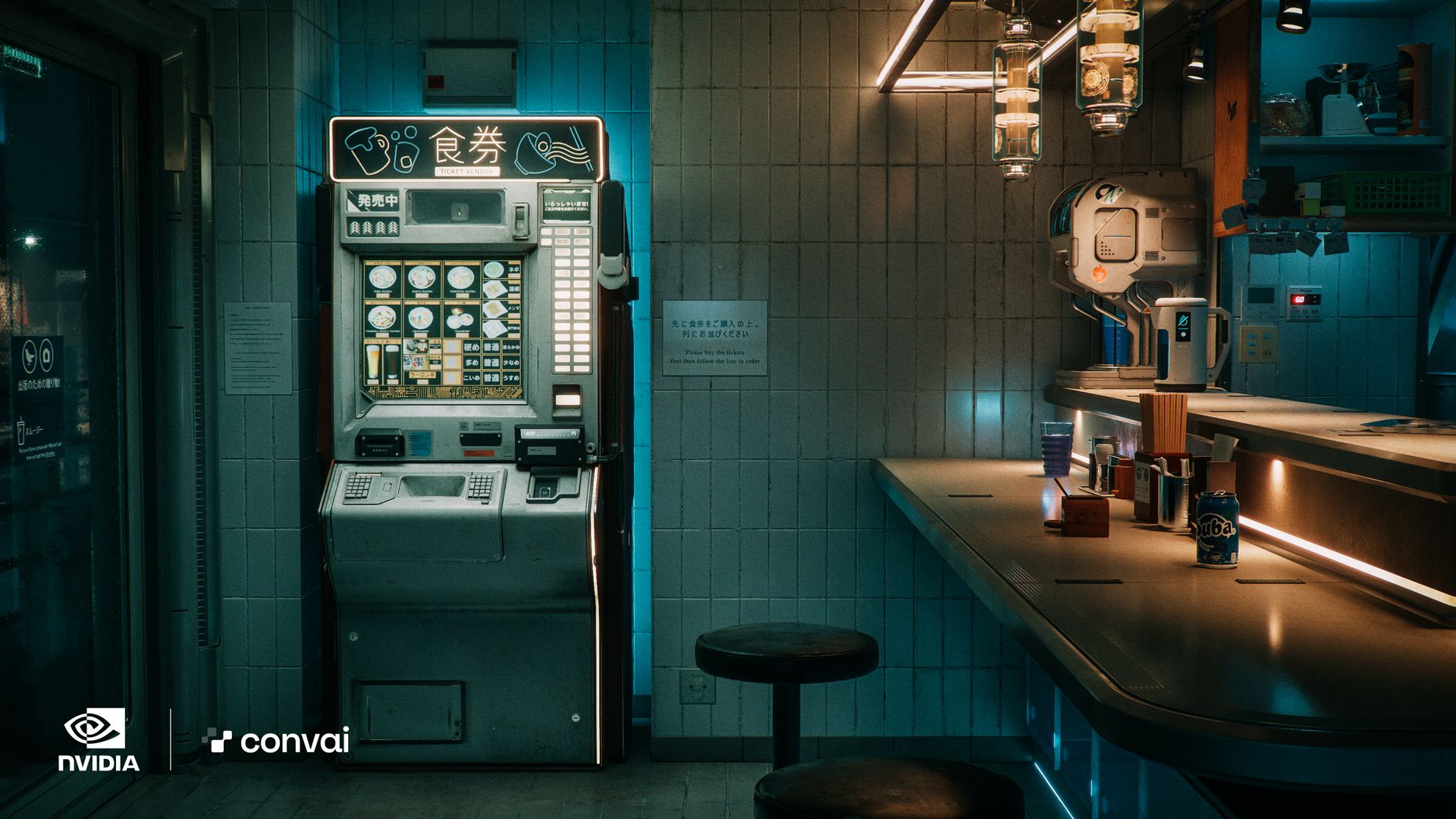

NVIDIA Riva was employed for speech-to-text and text-to-speech functions, NVIDIA NeMo powered the conversational AI, and Audio2Face was used for AI-driven facial animation derived from voice inputs. These modules were seamlessly incorporated into the Convai services platform, then integrated with Unreal Engine 5 and MetaHuman, culminating in the creation of Jin, a character in the Ramen Shop scene.
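The flow described above (speech-to-text, conversational AI, text-to-speech, then audio-driven facial animation) can be sketched as a simple chain of stages. This is a minimal illustration, not Convai's or NVIDIA's implementation: `NpcTurn`, `run_npc_turn`, and the four stub functions are hypothetical, and each stub only stands in for the role that Riva, NeMo, or Audio2Face plays in the demo.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class NpcTurn:
    """Everything produced for one player utterance."""
    player_text: str
    reply_text: str
    reply_audio: bytes
    face_frames: List[Dict[str, float]]


def run_npc_turn(
    audio_in: bytes,
    speech_to_text: Callable[[bytes], str],
    generate_reply: Callable[[str], str],
    text_to_speech: Callable[[str], bytes],
    audio_to_face: Callable[[bytes], List[Dict[str, float]]],
) -> NpcTurn:
    """Run one conversational turn through the four stages in order."""
    player_text = speech_to_text(audio_in)    # role played by ASR
    reply_text = generate_reply(player_text)  # role played by the language model
    reply_audio = text_to_speech(reply_text)  # role played by TTS
    face_frames = audio_to_face(reply_audio)  # role played by facial animation
    return NpcTurn(player_text, reply_text, reply_audio, face_frames)


# Placeholder stages so the sketch runs without any external service.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(text: str) -> str:
    return f"Jin: you said '{text}'."


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


def fake_a2f(audio: bytes) -> List[Dict[str, float]]:
    # One placeholder animation frame per 16 bytes of "audio", at least one.
    count = max(1, len(audio) // 16)
    return [{"frame": float(i), "jaw_open": 0.5} for i in range(count)]


if __name__ == "__main__":
    turn = run_npc_turn(b"hello", fake_stt, fake_llm, fake_tts, fake_a2f)
    print(turn.reply_text, len(turn.face_frames))
```

Passing each stage in as a callable keeps the chain independent of any particular service, so a stage could run in the cloud or on the local PC without changing the turn logic.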

This scene, along with Jin, was crafted by NVIDIA Lightspeed Studios’ art team and fully rendered in Unreal Engine 5. The rendering utilized NVIDIA RTX Direct Illumination (RTXDI) for ray-traced lighting and shadows, and DLSS for optimizing frame rates and image quality.

The real-time demonstration of Jin and his Ramen Shop not only showcases the capabilities of GeForce RTX graphics cards, NVIDIA RTX technologies, and NVIDIA ACE for Games but also exemplifies the future potential of games that will utilize this groundbreaking technology.