However, a notable development has emerged: OpenVoice, built jointly by teams from the Massachusetts Institute of Technology (MIT), Tsinghua University in Beijing, and the Canadian AI firm MyShell. OpenVoice is an open-source voice cloning platform that stands out from existing voice cloning tools for its speed and fine-grained customization options.

Today, we proudly open source our OpenVoice algorithm, embracing our core ethos – AI for all.

Experience it now: https://t.co/zHJpeVpX3t. Clone voices with unparalleled precision, with granular control of tone, from emotion to accent, rhythm, pauses, and intonation, using just a… pic.twitter.com/RwmYajpxOt

— MyShell (@myshell_ai) January 2, 2024

To promote accessibility and transparency, the company has shared a link to the research paper describing how OpenVoice was developed. It has also provided two ways to try the technology: the MyShell web app, which requires registration, and Hugging Face, which is open to the public without an account.

MyShell says it is committed to contributing to the broader research community and sees OpenVoice as just the beginning. It plans to support open-source research through grants, datasets, and computing resources. The company's guiding principle is “AI for All,” and it regards language, vision, and voice as the three key components of future artificial general intelligence (AGI).

In research, open-source models for language and vision have advanced substantially, but voice has lagged behind. In particular, there is a need for a robust voice cloning model that responds instantly and offers customizable voice generation. MyShell aims to fill this gap and push the boundaries of voice technology on the path to AGI.

How to use MyShell AI?

Follow these steps:

- Go to the official website of MyShell AI.

- Click “Start the App.”

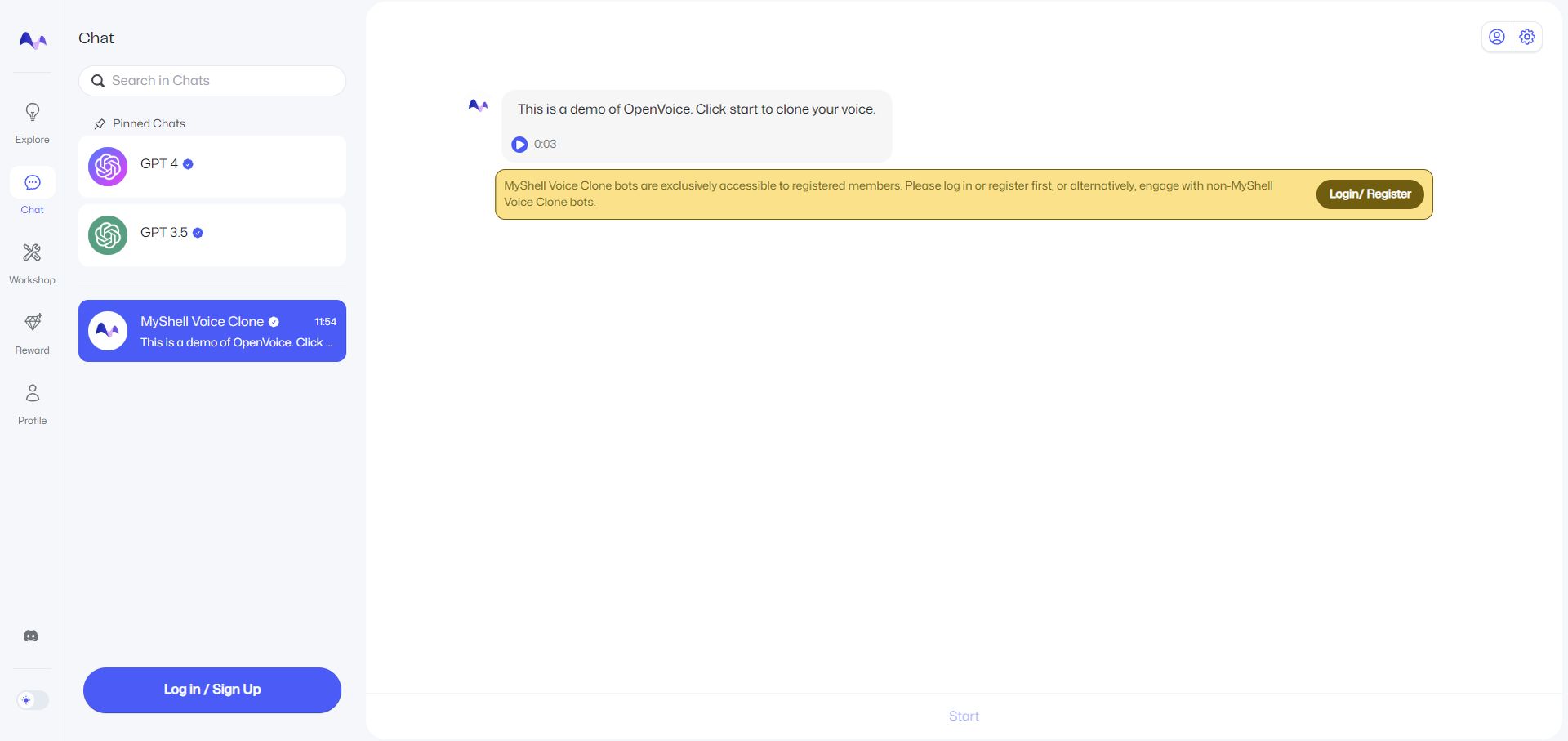

- Select “Chat” from the left-hand side.

- To use the “MyShell Voice Clone” feature, you need to sign up for an account. You can sign up with a Google account.

- Next, click “Start” at the bottom of the page.

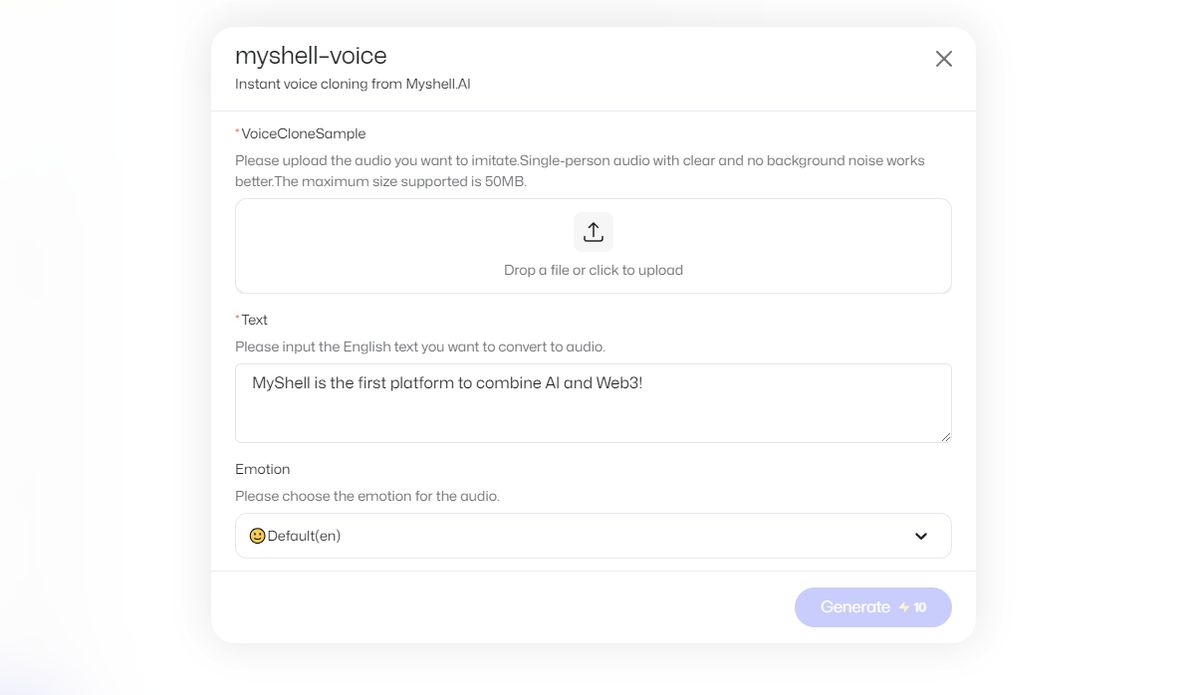

- Upload a voice recording and input the English text you want to convert to audio.

- Click “Generate.” This costs 10 units of the in-app currency.

- The output is delivered to you in the chat.

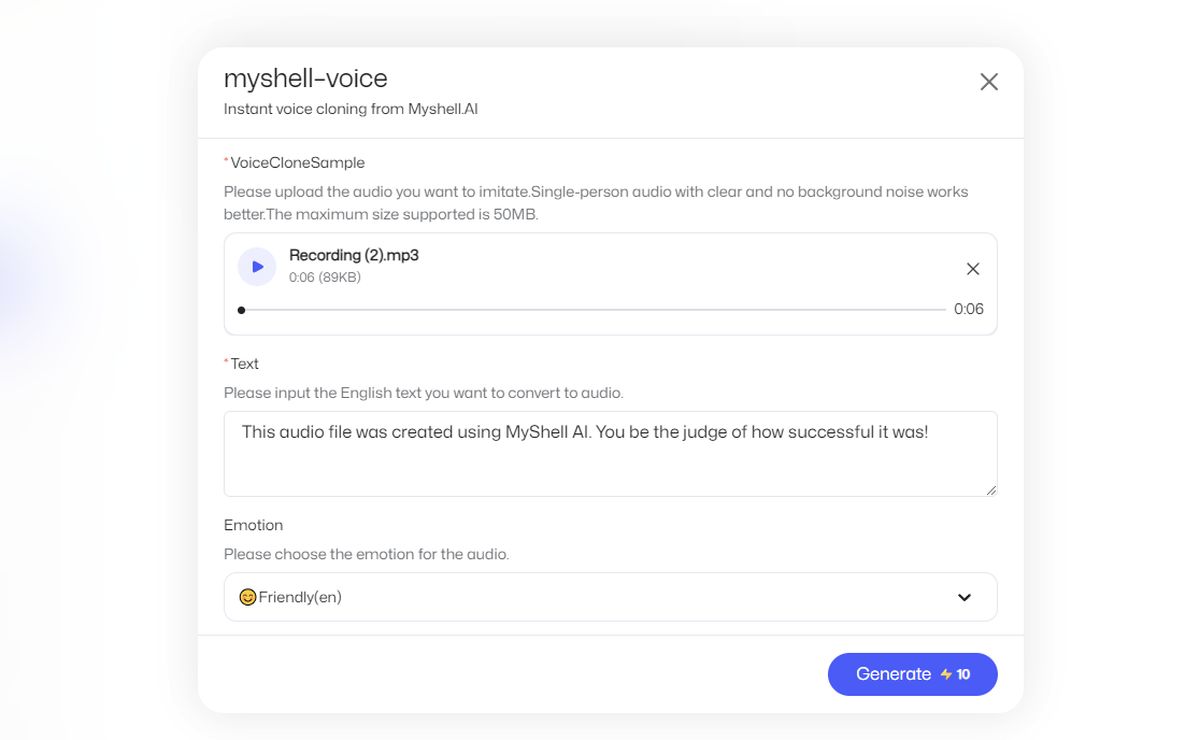

Editor’s note: For reference, I uploaded a voice recording of my own, which says: “Voice cloning technology is making strides and a noteworthy advancement has been made by startups such as ElevenLabs.”

Then I asked for an output that reads: “This audio file was created using MyShell AI. You be the judge of how successful it was!”

I wouldn’t call the output very successful, but its speed is impressive. It is also worth noting that I’m not a native English speaker.

How does OpenVoice technology work?

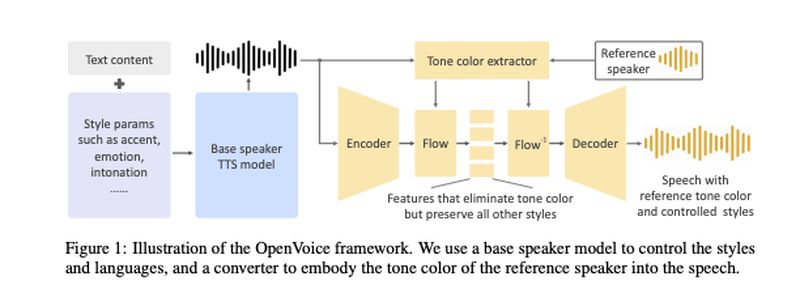

OpenVoice was developed by Zengyi Qin of MIT, Wenliang Zhao and Xumin Yu of Tsinghua University, and Xin Sun of MyShell, and is described in their research paper. The system is built on two models: a base speaker Text-to-Speech (TTS) model and a “tone color converter.”

The TTS model handles style parameters and languages. It was trained on 30,000 sentences of audio, including English speakers with American and British accents as well as Chinese and Japanese speakers. The samples were labeled with the emotions they express, and from these clips the model learned nuances such as intonation, rhythm, and pauses.
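To make the idea of style control more concrete, here is a minimal sketch of how discrete style labels such as emotion and accent could be turned into a conditioning vector for a TTS model. The label sets, the one-hot encoding, and the `style_vector` helper are hypothetical illustrations, not taken from the OpenVoice code.

```python
from dataclasses import dataclass

# Hypothetical label sets, loosely mirroring the kinds of labels described above.
EMOTIONS = ["neutral", "happy", "sad", "angry"]
ACCENTS = ["american", "british"]


@dataclass
class StyleLabel:
    emotion: str
    accent: str


def one_hot(value: str, vocabulary: list) -> list:
    """Encode a label as a one-hot vector over a fixed vocabulary."""
    if value not in vocabulary:
        raise ValueError(f"unknown label {value!r}")
    return [1.0 if item == value else 0.0 for item in vocabulary]


def style_vector(label: StyleLabel) -> list:
    """Concatenate the one-hot emotion and accent codes into one conditioning vector."""
    return one_hot(label.emotion, EMOTIONS) + one_hot(label.accent, ACCENTS)


if __name__ == "__main__":
    print(style_vector(StyleLabel(emotion="happy", accent="british")))
    # [0.0, 1.0, 0.0, 0.0, 0.0, 1.0]
```

A trained model would more likely learn a dense embedding for each label; the one-hot form is used here only to keep the example readable.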

The tone color converter, by contrast, was trained on a much larger dataset of more than 300,000 audio samples from over 20,000 different speakers.

In both models, speech audio was transformed into phonemes, the basic sound units that distinguish one word from another, and these phonemes were then represented as vector embeddings.
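The phoneme-to-embedding step can be illustrated with a toy lookup table: each phoneme symbol maps to an integer ID, and each ID selects a row of an embedding table. The phoneme inventory, the two-word dictionary, the random 4-dimensional embeddings, and the helper names are all hypothetical; OpenVoice's actual text front end and learned embeddings are not reproduced here.

```python
import random

# Hypothetical, heavily truncated phoneme inventory (ARPAbet-style symbols).
PHONEMES = ["<pad>", "HH", "AH", "L", "OW", "W", "ER", "D"]
PHONEME_TO_ID = {p: i for i, p in enumerate(PHONEMES)}

# Hypothetical pronunciation dictionary; a real system would use a full
# lexicon or a grapheme-to-phoneme model.
LEXICON = {
    "hello": ["HH", "AH", "L", "OW"],
    "world": ["W", "ER", "L", "D"],
}

EMBED_DIM = 4
rng = random.Random(0)  # fixed seed so the toy table is reproducible
# One embedding row per phoneme ID; in a trained model these values are learned.
EMBEDDING_TABLE = [[rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)] for _ in PHONEMES]


def text_to_phoneme_ids(text: str) -> list:
    """Look up each word's phonemes and convert them to integer IDs."""
    ids = []
    for word in text.lower().split():
        ids.extend(PHONEME_TO_ID[p] for p in LEXICON[word])
    return ids


def embed(ids: list) -> list:
    """Replace each phoneme ID with its embedding vector."""
    return [EMBEDDING_TABLE[i] for i in ids]


if __name__ == "__main__":
    ids = text_to_phoneme_ids("Hello world")
    print(ids)  # [1, 2, 3, 4, 5, 6, 3, 7]
    print(len(embed(ids)), "vectors of size", EMBED_DIM)
```

Because the same phoneme always maps to the same row, "L" in "hello" and "L" in "world" receive identical vectors; context-dependent variation is left to the layers that consume these embeddings.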

The key idea is to generate speech with a “base speaker” voice in the TTS model and then apply the tone color extracted from the user’s recorded audio. The “tone color” is the timbre that makes a voice recognizable, so this combination lets the system reproduce the user’s voice while style attributes such as emotion, accent, rhythm, and intonation are controlled through the base speaker TTS.
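The division of labor between the two models can be sketched as a two-stage pipeline: a base speaker TTS step produces speech features with the requested style, and a tone color converter then removes the base speaker's timbre and applies the one extracted from the user's recording. In this sketch, audio is replaced by short lists of frame feature vectors, "tone color" is approximated as the mean frame vector, and conversion is a simple subtract-and-add shift. These are illustrative assumptions: the paper's converter is a learned neural network, and none of the function names below come from the OpenVoice code.

```python
from typing import List

Frame = List[float]  # toy frame features: [pitch-like value, timbre-like value]


def extract_tone_color(frames: List[Frame]) -> Frame:
    """Toy speaker embedding: average each feature dimension over all frames."""
    n = len(frames)
    return [sum(values) / n for values in zip(*frames)]


def base_speaker_tts(num_frames: int, pitch_slope: float) -> List[Frame]:
    """Hypothetical stand-in for the base speaker TTS.

    Emits frames whose pitch-like feature rises by `pitch_slope` per frame
    (the "style"), on top of a fixed base-speaker timbre.
    """
    base_timbre = [1.0, 1.0]
    return [[base_timbre[0] + pitch_slope * t, base_timbre[1]] for t in range(num_frames)]


def convert_tone_color(frames: List[Frame], source_tone: Frame, target_tone: Frame) -> List[Frame]:
    """Shift every frame from the source tone color to the target tone color.

    Frame-to-frame variation (the style) is preserved; only the average
    "timbre" moves. This linear shift stands in for the learned converter.
    """
    return [[x - s + t for x, s, t in zip(frame, source_tone, target_tone)] for frame in frames]


def clone_voice(reference_frames: List[Frame], num_frames: int, pitch_slope: float) -> List[Frame]:
    """Stage 1: synthesize styled speech; stage 2: swap in the reference tone color."""
    base_frames = base_speaker_tts(num_frames, pitch_slope)
    source_tone = extract_tone_color(base_frames)
    target_tone = extract_tone_color(reference_frames)
    return convert_tone_color(base_frames, source_tone, target_tone)


if __name__ == "__main__":
    user_recording = [[3.0, -1.0], [5.0, 1.0]]  # made-up frames from a "user"
    print(clone_voice(user_recording, num_frames=3, pitch_slope=0.5))
    # [[3.5, 0.0], [4.0, 0.0], [4.5, 0.0]]
```

The style (here, the rising pitch slope) is decided entirely in the first stage and the voice identity entirely in the second, which mirrors how the article describes the two models dividing the work.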

The team’s paper includes a diagram illustrating how the two models interact.

The authors describe their method as conceptually simple yet effective, and note that it requires significantly less computing power than other voice cloning methods such as Meta’s Voicebox.

“We wanted to develop the most flexible instant voice cloning model to date. Flexibility here means flexible control over styles/emotions/accent etc, and can adapt to any language. Nobody could do this before, because it is too difficult. I lead a group of experienced AI scientists and spent several months to figure out the solution. We found that there is a very elegant way to decouple the difficult task into some doable subtasks to achieve what seems to be too difficult as a whole. The decoupled pipeline turns out to be very effective but also very simple,” Qin stated in an email reported by VentureBeat.