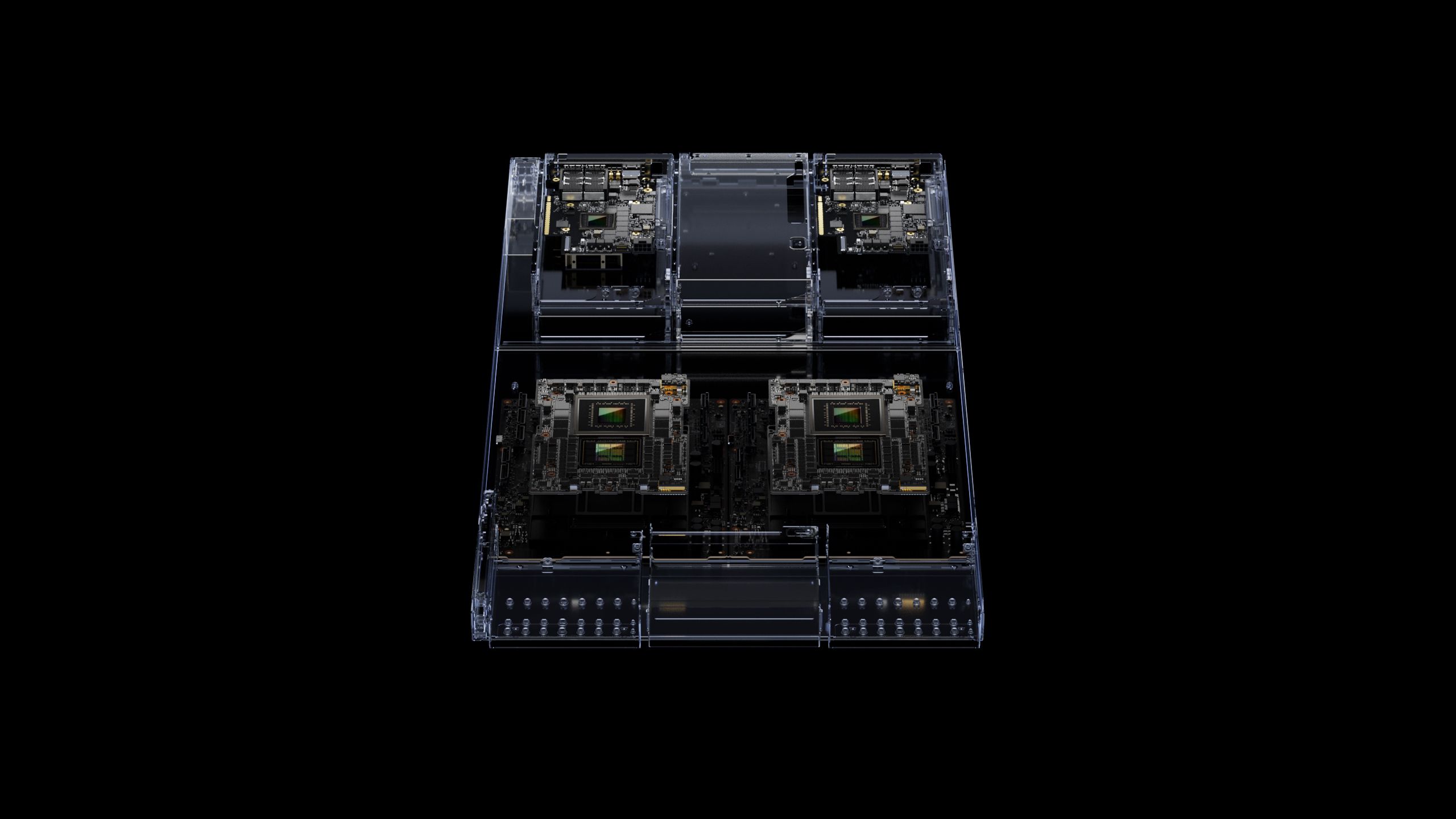

Today, NVIDIA launched the next-generation NVIDIA GH200 Grace Hopper platform, built for the era of accelerated computing and generative AI and based on a new Grace Hopper Superchip with the world's first HBM3e processor.

NVIDIA's latest announcement builds on the previously announced GH200 with HBM3, which is now in production and scheduled to reach the market later this year. NVIDIA will therefore offer two versions of the same device: the HBM3 model first, with the HBM3e model following later.

“To meet surging demand for generative AI, data centers require accelerated computing platforms with specialized needs,” said Jensen Huang, founder and CEO of NVIDIA. “The new GH200 Grace Hopper Superchip platform delivers this with exceptional memory technology and bandwidth to improve throughput, the ability to connect GPUs to aggregate performance without compromise, and a server design that can be easily deployed across the entire data center.”

NVIDIA GH200 specs

The new GH200 Grace Hopper Superchip pairs a 72-core Grace CPU with up to 480 GB of ECC LPDDR5X memory and a GH100 compute GPU with 141 GB of HBM3e memory, built from six 24 GB stacks on a 6,144-bit memory interface. Although NVIDIA physically installs 144 GB of memory, only 141 GB is usable, a trade-off made to improve yields.
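As a quick sanity check, the short Python sketch below multiplies out the stack configuration described above. It assumes each HBM3e stack uses the standard 1,024-bit HBM interface, which is how six stacks add up to 6,144 bits; the 141 GB usable figure is simply NVIDIA's number, not something derived here.

```python
# Sanity-check the GH200 (HBM3e) GPU memory figures quoted in the article.
# Assumption: each HBM3e stack exposes the standard 1,024-bit HBM interface.
STACKS = 6
GB_PER_STACK = 24
BITS_PER_STACK = 1024
USABLE_GB = 141  # usable capacity as reported by NVIDIA, not derived here

physical_gb = STACKS * GB_PER_STACK        # capacity physically installed
bus_width_bits = STACKS * BITS_PER_STACK   # total memory interface width
unused_gb = physical_gb - USABLE_GB        # capacity left unused (reportedly for yields)

print(f"Physical capacity: {physical_gb} GB")
print(f"Memory interface:  {bus_width_bits:,}-bit")
print(f"Unused capacity:   {unused_gb} GB ({unused_gb / physical_gb:.1%} of installed)")
```

Running it prints 144 GB installed, a 6,144-bit interface, and 3 GB (about 2.1%) left unused, matching the figures above.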


The current GH200 Grace Hopper Superchip from NVIDIA has 96 GB of HBM3 memory and just under 4 TB/s of memory bandwidth. By comparison, the new model raises memory capacity by roughly 50% (from 96 GB to 141 GB) and bandwidth by more than 25% (to 5 TB/s). These gains let the new platform run larger AI models than its predecessor and deliver meaningful performance improvements.
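The percentage gains can be worked out directly from the capacity and bandwidth figures listed in this article's specification table. The Python sketch below is illustrative only; it assumes the HBM3 model's bandwidth sits at its 4 TB/s upper bound, so the bandwidth gain it reports is a lower bound.

```python
# Compare GPU memory specs of the HBM3 and HBM3e GH200 models.
# Assumption: the HBM3 model is taken at its 4 TB/s upper bound, so the
# bandwidth gain computed here is a lower bound on the real improvement.
hbm3 = {"capacity_gb": 96, "bandwidth_tbs": 4.0}
hbm3e = {"capacity_gb": 141, "bandwidth_tbs": 5.0}


def pct_gain(old: float, new: float) -> float:
    """Return the percentage increase going from old to new."""
    return (new / old - 1.0) * 100.0


capacity_gain = pct_gain(hbm3["capacity_gb"], hbm3e["capacity_gb"])
bandwidth_gain = pct_gain(hbm3["bandwidth_tbs"], hbm3e["bandwidth_tbs"])

print(f"Capacity:  +{capacity_gain:.1f}%")   # 96 GB -> 141 GB
print(f"Bandwidth: +{bandwidth_gain:.1f}%")  # up to 4 TB/s -> 5 TB/s
```

This prints a capacity gain of 46.9% (the "roughly 50%" above) and a bandwidth gain of 25.0%, which becomes "more than 25%" once the HBM3 model's real bandwidth falls just under 4 TB/s.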

“HBM3e memory, which is 50% faster than current HBM3, delivers a total of 10TB/sec of combined bandwidth, allowing the new platform to run models 3.5x larger than the previous version while improving performance with 3x faster memory bandwidth,” NVIDIA said in the official blog post.

| NVIDIA Grace Hopper Specifications | Grace Hopper (GH200) w/ HBM3 | Grace Hopper (GH200) w/ HBM3e |
|---|---|---|
| CPU Cores | 72 | 72 |
| CPU Architecture | Arm Neoverse V2 | Arm Neoverse V2 |
| CPU Memory Capacity | <=480 GB LPDDR5X (ECC) | <=480 GB LPDDR5X (ECC) |
| CPU Memory Bandwidth | <=512 GB/s | <=512 GB/s |
| GPU SMs | 132 | 132 (unconfirmed) |
| GPU Tensor Cores | 528 | 528 (unconfirmed) |
| GPU Architecture | Hopper | Hopper |
| GPU Memory Capacity | 96 GB physical, <=96 GB available | 144 GB physical, 141 GB available |
| GPU Memory Bandwidth | <=4 TB/s | 5 TB/s |
| GPU-to-CPU Interface | 900 GB/s NVLink 4 | 900 GB/s NVLink 4 |
| TDP | 450 W – 1000 W | 450 W – 1000 W |
| Manufacturing Process | TSMC 4N | TSMC 4N |
| Interface | Superchip | Superchip |
| Available | H2 2023 | Q2 2024 |

NVIDIA GH200 availability

According to NVIDIA, the GH200 Grace Hopper platform with HBM3 is in production and will be commercially available next month. The HBM3e version, by contrast, is currently sampling and is expected to be available in the second quarter of 2024.

NVIDIA emphasized that the new GH200 Grace Hopper uses the same Grace CPU and GH100 GPU silicon as the previous version, so no additional revisions or steppings are required.

According to NVIDIA, the original GH200 with HBM3 and the enhanced HBM3e model will coexist on the market, which suggests the latter will be sold at a premium given the higher performance of its newer memory.


NVIDIA's next-generation Grace Hopper Superchip platform with HBM3e is fully compatible with NVIDIA's MGX server standard, making it a drop-in replacement for existing server designs.

Featured image credit: BoliviaInteligente/Unsplash