Nvidia has unveiled AI Workbench, signaling a potentially transformative moment in the creation and deployment of generative AI. With the Nvidia AI Workbench, developers can expect a more streamlined process that lets them work across different Nvidia AI platforms, such as PCs and workstations.

While it might not necessarily flood the market with AI content, there’s little doubt that the Nvidia AI Workbench is poised to make the journey towards AI development and integration a more user-friendly experience.

What does Nvidia AI Workbench have to offer?

Nvidia announced AI Workbench at the annual SIGGRAPH (Special Interest Group on Computer Graphics and Interactive Techniques) conference in Los Angeles, unveiling a user-friendly platform designed to run on a developer’s local machine.

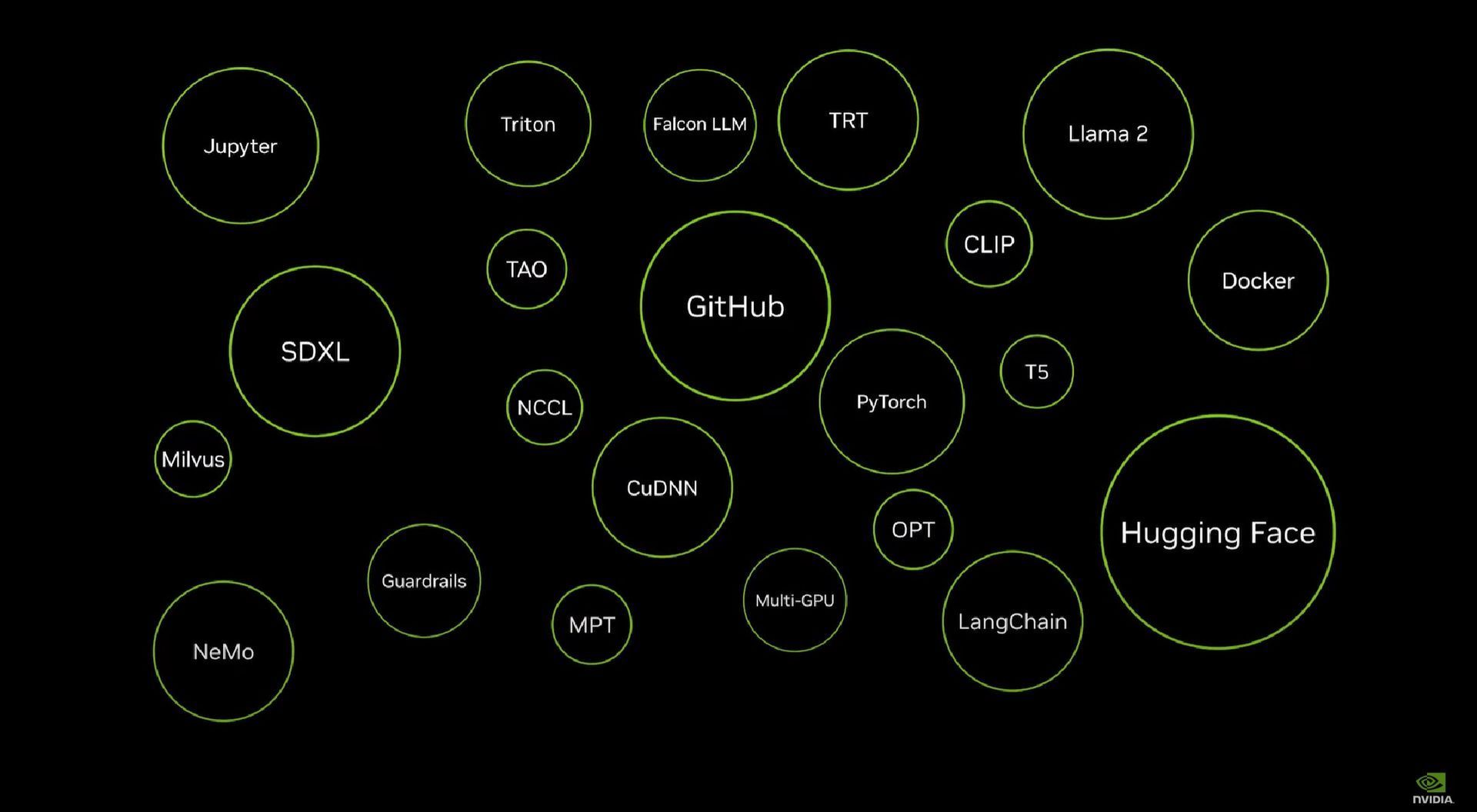

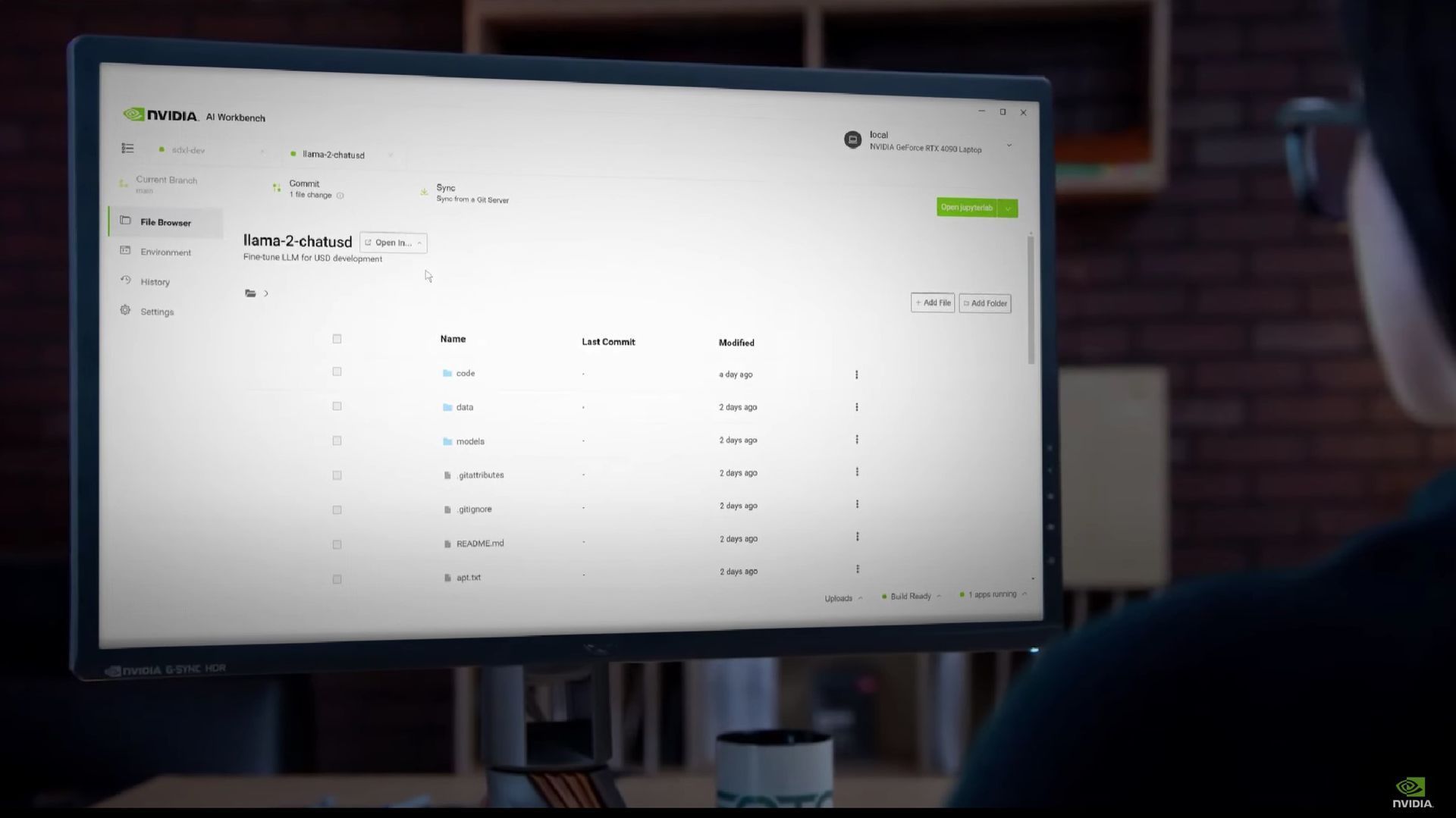

Connecting to popular repositories like Hugging Face, GitHub, Nvidia’s enterprise web portal NVIDIA NGC, and various sources of open-source or commercial AI code, the Nvidia AI Workbench provides a centralized space for developers.

They can access these resources without the need to navigate through multiple browser windows, streamlining the process of importing and customizing model code according to their preferences and needs.

In a recent announcement, the company stressed that the Nvidia AI Workbench could revolutionize the way developers interact with the hundreds of thousands of pretrained AI models in existence. Traditionally, customizing these models has been a cumbersome task, but the Nvidia AI Workbench is designed to greatly simplify the process.

With this new tool, developers can effortlessly fine-tune and run generative AI, accessing every essential enterprise-grade model. The support extends to various frameworks, libraries, and SDKs from Nvidia’s own AI platform, as well as prominent open-source repositories like GitHub and Hugging Face.

The Nvidia AI Workbench doesn’t stop at customization; it also facilitates sharing across multiple platforms. Developers with an Nvidia RTX graphics card in their PC or workstation can manage these generative models locally and, when required, scale up to data center and cloud computing resources.

“Nvidia AI Workbench provides a simplified path for cross-organizational teams to create the AI-based applications that are increasingly becoming essential in modern business,” stated Manuvir Das, Nvidia’s VP of enterprise computing.

Nvidia AI Enterprise 4.0 is also out

Alongside the Nvidia AI Workbench, the tech giant has released the fourth version of its Nvidia AI Enterprise software platform, specifically designed to furnish essential tools for the adoption and customization of generative AI within a business setting.

Nvidia AI Enterprise 4.0 aims to facilitate a seamless and secure integration of generative AI models into various operations, backed by stable API connections.

The newly launched platform features an array of tools, including Nvidia NeMo for cloud-native, end-to-end support for large language model (LLM) applications, and Nvidia Triton Management Service to automate and enhance production deployments.

Additionally, Nvidia Base Command Manager Essentials, a cluster management software, has been integrated to assist businesses in optimizing performance across data centers, multicloud, and hybrid-cloud environments.

The announcement also highlighted collaborations with industry giants ServiceNow, Snowflake, and Dell Technologies, setting the stage for an expansive range of new AI products, further demonstrating Nvidia’s commitment to leading innovation in the AI domain.

Nvidia’s push into AI development tools appears to be well-timed, offering not just the Workbench but other tools like Nvidia ACE for gaming applications. With generative AI models like ChatGPT becoming increasingly popular, many developers are likely to be attracted to Nvidia’s holistic and user-friendly solutions.

However, this accessibility also brings a degree of uncertainty, as the use of generative AI can sometimes lead to questionable applications. The broader implications of Nvidia’s innovations in this space are yet to be fully realized.

Featured image credit: Zan/Unsplash