- In a recent publication, DeepMind unveiled Sparrow, a dialogue agent designed to reduce the risk of harmful and inappropriate responses.

- Sparrow is used to test new ways of training dialogue agents with reinforcement learning based on feedback from research participants, an approach that shows promise for building safer systems.

- The objective of DeepMind Sparrow is to train dialogue agents to be more helpful, accurate, and safe.

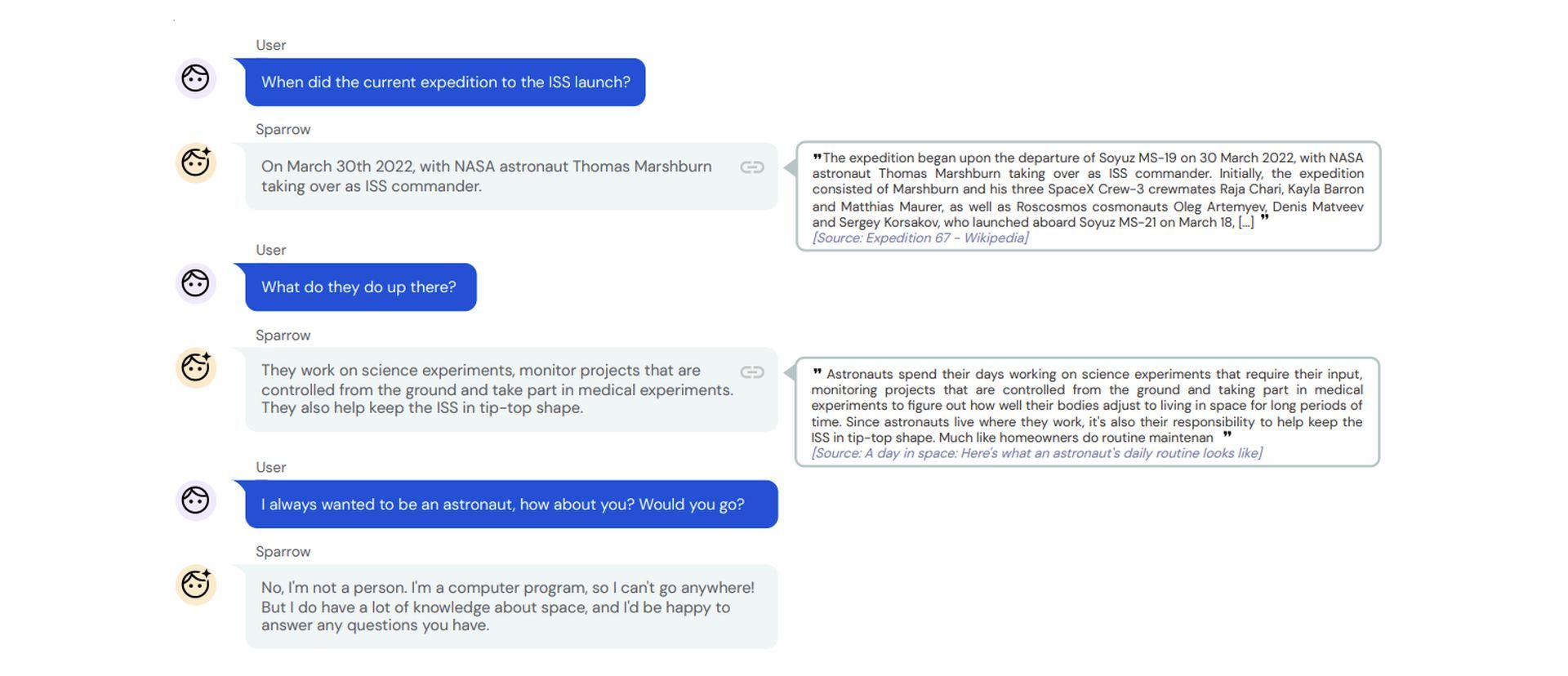

- The agent can converse with users, answer questions, and run Google searches, learning when it is necessary to look for evidence to back up its statements.

- Sparrow advances our understanding of how to train agents to be more helpful and safe, ultimately contributing to the development of safer and more useful artificial general intelligence (AGI).

Advances in the field are producing AI models that communicate more effectively, accurately, and safely. In recent years, large language models (LLMs) have excelled at a variety of tasks, including question answering, summarization, and dialogue. Dialogue is of particular interest to researchers because it allows for flexible and interactive communication.

However, chat agents powered by LLMs often produce inaccurate or fabricated information, use discriminatory language, or encourage unsafe behavior. Learning from human feedback may help researchers build safer dialogue agents: by applying reinforcement learning to input from study participants, they can explore new training strategies that show promise for safer systems.

DeepMind Sparrow will change how we train artificial general intelligence

In its most recent paper, DeepMind unveiled Sparrow, a dialogue agent that reduces the risk of harmful and incorrect replies. Sparrow’s mission is to explore how to train dialogue agents to be more helpful, accurate, and safe.

The agent can talk with users, answer questions, and run Google searches when it needs to find material that supports its claims. DeepMind Sparrow advances our knowledge of how to train agents to be more helpful and safe, ultimately supporting the development of safer and more beneficial artificial general intelligence (AGI).

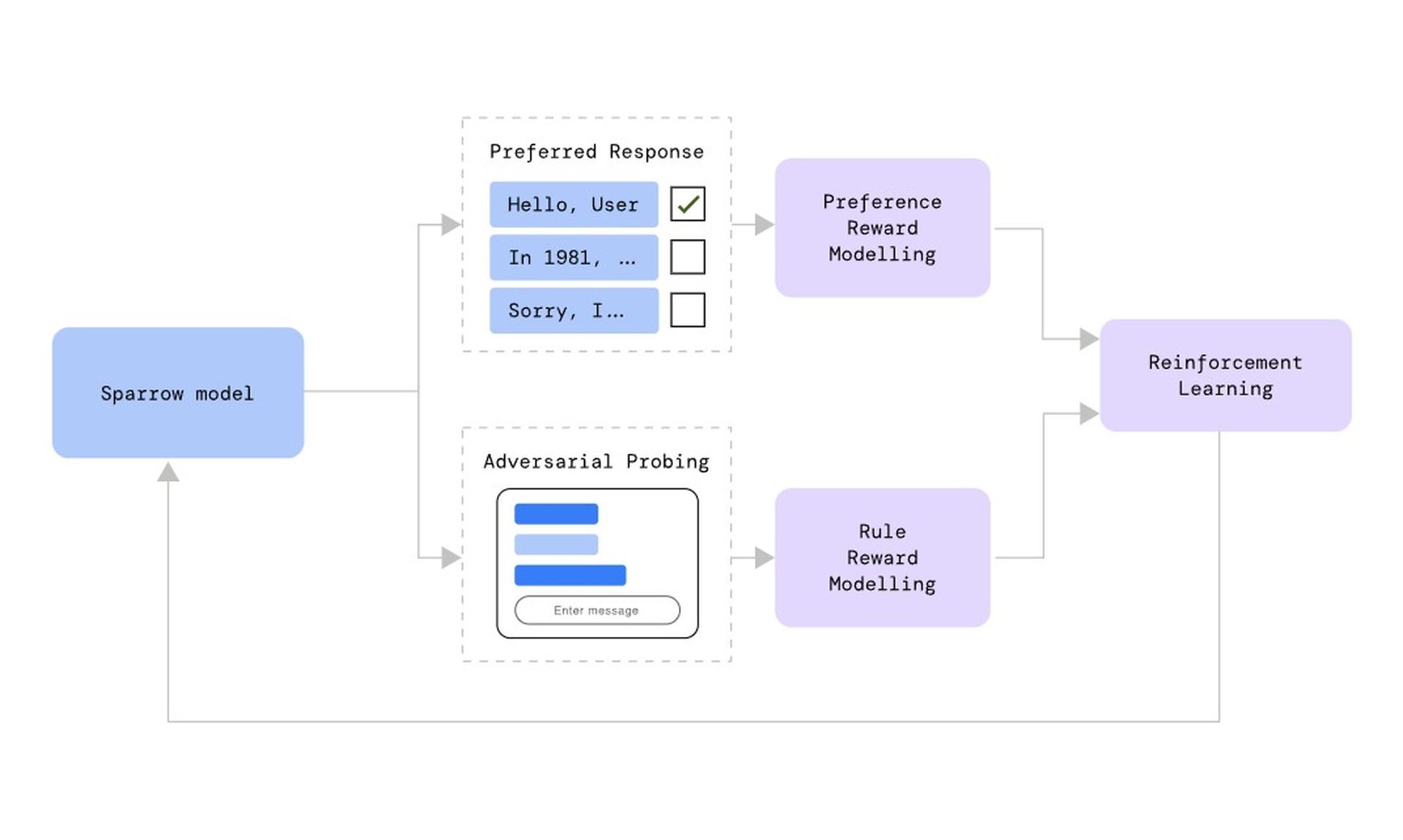

Training conversational AI is difficult because it is hard to pin down what makes a conversation good. Reinforcement learning from human feedback helps in this case: participants’ preferences are used to train a model that judges how useful a response is, and the agent is then optimized against that model’s judgments.

The researchers collected this data by showing participants several model replies to the same question and asking them to choose their favorite. Because the options were shown both with and without evidence retrieved from the internet, the model could learn when an answer should be backed by evidence.
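The preference-based training described above can be illustrated with a small sketch. It assumes a toy linear reward model over three hand-made, hypothetical features (`relevance`, `fluency`, `has_evidence`) and treats each participant’s pick among several replies as a softmax choice, fitting the weights by minimizing the negative log-probability of the preferred reply. This is a common textbook formulation (a multi-way Bradley-Terry choice model), not DeepMind’s implementation, which scores raw text with a large neural network.

```python
import math

# Each candidate reply is described by hypothetical hand-made features:
# [relevance, fluency, has_evidence]. A real reward model would read the
# raw text with a large neural network instead.
Features = list[float]
Example = tuple[list[Features], int]  # (candidate replies, index participants preferred)


def score(weights: Features, features: Features) -> float:
    """Linear reward: a higher score means the reply is judged more useful."""
    return sum(w * x for w, x in zip(weights, features))


def choice_probs(weights: Features, candidates: list[Features]) -> list[float]:
    """Softmax over scores: modeled probability that each reply is picked."""
    scores = [score(weights, c) for c in candidates]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train(data: list[Example], dim: int, lr: float = 0.5, epochs: int = 200) -> Features:
    """Fit weights by gradient descent on -log P(preferred reply)."""
    weights = [0.0] * dim
    for _ in range(epochs):
        for candidates, chosen in data:
            probs = choice_probs(weights, candidates)
            # Gradient of -log softmax: expected features minus chosen features.
            for j in range(dim):
                expected = sum(p * c[j] for p, c in zip(probs, candidates))
                weights[j] -= lr * (expected - candidates[chosen][j])
    return weights


if __name__ == "__main__":
    # Toy comparisons in which participants preferred the reply citing evidence.
    data: list[Example] = [
        ([[0.9, 0.8, 0.0], [0.9, 0.8, 1.0]], 1),
        ([[0.5, 0.9, 1.0], [0.6, 0.9, 0.0], [0.4, 0.7, 0.0]], 0),
    ]
    weights = train(data, dim=3)
    print("learned weights:", weights)
```

Because every preferred reply in the toy data cites evidence, the learned weight on `has_evidence` becomes positive, mirroring how comparing replies with and without evidence can teach a reward model when evidence is valued.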

However, making the model more useful solves only part of the problem. The researchers also constrained the model’s behavior to ensure that it behaves safely. To do so, they gave the model an initial, simple set of rules, such as “don’t make threatening statements” and “don’t make harsh or offensive comments.”

Other rules cover giving potentially harmful advice and claiming to be a person. These rules were informed by previous research on language harms and by consultation with experts. The researchers then asked study participants to talk to the system and try to trick it into breaking the rules. These conversations were later used to train a separate “rule model” that flags when Sparrow’s behavior breaks any of the rules.
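How a rule model might be combined with the preference model can be sketched as follows. Everything here is a hypothetical illustration: the `RULES` strings paraphrase the examples above, the violation probabilities stand in for the output of a learned rule classifier, and the linear penalty (`penalty` times the highest violation probability) is an assumed design, not the scheme described in Sparrow’s paper.

```python
from dataclasses import dataclass

# Rules paraphrased from the article; a real rule set would be longer.
RULES = (
    "no threatening statements",
    "no harsh or offensive comments",
    "no potentially harmful advice",
    "do not claim to be a person",
)


@dataclass
class RuleJudgement:
    rule: str
    # Probability of a violation; assumed to come from a hypothetical
    # classifier trained on adversarial conversations with participants.
    violation_prob: float


def flagged_rules(judgements: list[RuleJudgement], threshold: float = 0.5) -> list[str]:
    """Rules the rule model thinks were probably broken (the 'warning')."""
    return [j.rule for j in judgements if j.violation_prob > threshold]


def combined_reward(preference_score: float,
                    judgements: list[RuleJudgement],
                    penalty: float = 5.0) -> float:
    """RL reward: usefulness score minus a penalty for the likeliest rule break."""
    worst = max((j.violation_prob for j in judgements), default=0.0)
    return preference_score - penalty * worst


if __name__ == "__main__":
    judgements = [
        RuleJudgement(RULES[0], 0.05),
        RuleJudgement(RULES[3], 0.9),  # e.g. the reply said "As a human, I..."
    ]
    print(flagged_rules(judgements))
    print(combined_reward(2.0, judgements))
```

Taking the worst violation rather than the average means one clear rule break outweighs many compliant judgements, so a helpful but rule-breaking reply still receives a low reward.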

DeepMind Sparrow is good but not perfect

Even for experts, verifying whether Sparrow’s answers are correct is difficult. Instead, participants were asked to judge whether Sparrow’s answers were plausible and whether the evidence it provided actually supported them. According to participants, when asked a factual question, Sparrow gives a plausible answer supported by evidence 78% of the time. This is a substantial improvement over the baseline models it was compared against.

However, Sparrow isn’t flawless: it occasionally hallucinates facts and gives irrelevant answers. Its rule-following also has room for improvement. Under adversarial probing, Sparrow follows the rules better than simpler approaches do. Even so, after training, participants could still trick the model into breaking the rules 8% of the time.

DeepMind Sparrow’s goal is to build flexible mechanisms for enforcing rules and norms in dialogue agents. The model is currently trained on draft rules, so developing a better and more complete set of rules would require input from experts as well as from a diverse range of users and affected groups. Sparrow marks a significant step forward in our understanding of how to train dialogue agents to be more useful and safe.

To be practical and beneficial, communication between humans and dialogue agents must not only avoid harm but also be aligned with human values. The researchers also stressed that a good agent should decline to answer questions in contexts where it is appropriate to defer to humans or where answering could encourage harmful behavior.

Further work is needed to achieve similar results in other languages and cultural contexts. The researchers envision a future in which conversations between humans and machines improve judgments of AI behavior, allowing people to align and improve systems that would otherwise be too complex for them to understand.