Google DreamBooth AI is in the house. The internet has already been taken by storm by recently released tools like OpenAI’s DALL-E 2, StabilityAI’s Stable Diffusion, and Midjourney. Now it is time to personalize the outputs. But how? Google and Boston University have an answer, and we explain it for you.

DreamBooth is capable of comprehending the subject of a given image, separating it from the image’s existing context, and then accurately synthesizing it into a new desired context. Plus, it can work with existing AI image generators. Keep reading and learn more about AI-powered imagination.

What is Google’s DreamBooth AI?

Google has introduced DreamBooth, a new approach to personalizing text-to-image diffusion models. Guided by a written prompt, DreamBooth AI can produce a wide range of photos of a user’s selected subject in various settings.

A team of researchers from Google and Boston University developed DreamBooth, which is based on a novel technique for customizing large pre-trained text-to-image models. The overall concept is rather straightforward: they expand the model’s language-vision dictionary so that rare token identifiers become linked to the particular subject that the user wishes to generate.

DreamBooth AI key features:

- DreamBooth AI can personalize a text-to-image model with just 3–5 photos of a subject.

- DreamBooth AI can create entirely new photorealistic images of the subject.

- DreamBooth AI can also create pictures of the subject from several viewpoints.

The main goal is to let users produce photorealistic renditions of their chosen subject by binding that subject to the text-to-image diffusion model. As a result, the technique is useful for synthesizing subjects in many different contexts.

Compared to other recently launched text-to-image tools like DALL-E 2, Stable Diffusion, and Midjourney, Google’s DreamBooth adopts a somewhat different strategy by giving users more control over the subject image and then directing the diffusion model using text-based inputs.

With just a few input photographs, DreamBooth can also depict the subject from several camera angles. Even if the input photographs don’t show the subject from various angles, the model can still infer the subject’s characteristics and synthesize them under text guidance.

With the aid of text prompts, the model can also render the subject with different expressions, accessories, or color alterations. These features give DreamBooth users even more creative freedom and customization.

DreamBooth paper

According to the DreamBooth paper, the authors introduce a new problem along with a technique for addressing it:

The new problem is subject-driven generation.

- Given a few casually captured pictures of a subject, the objective is to synthesize novel renditions of that subject in various contexts while maintaining high fidelity to its key visual features.

DreamBooth AI applications

These are the best DreamBooth AI applications:

- Recontextualization

- Art renditions

- Expression manipulation

- Novel view synthesis

- Accessorization

- Property modification

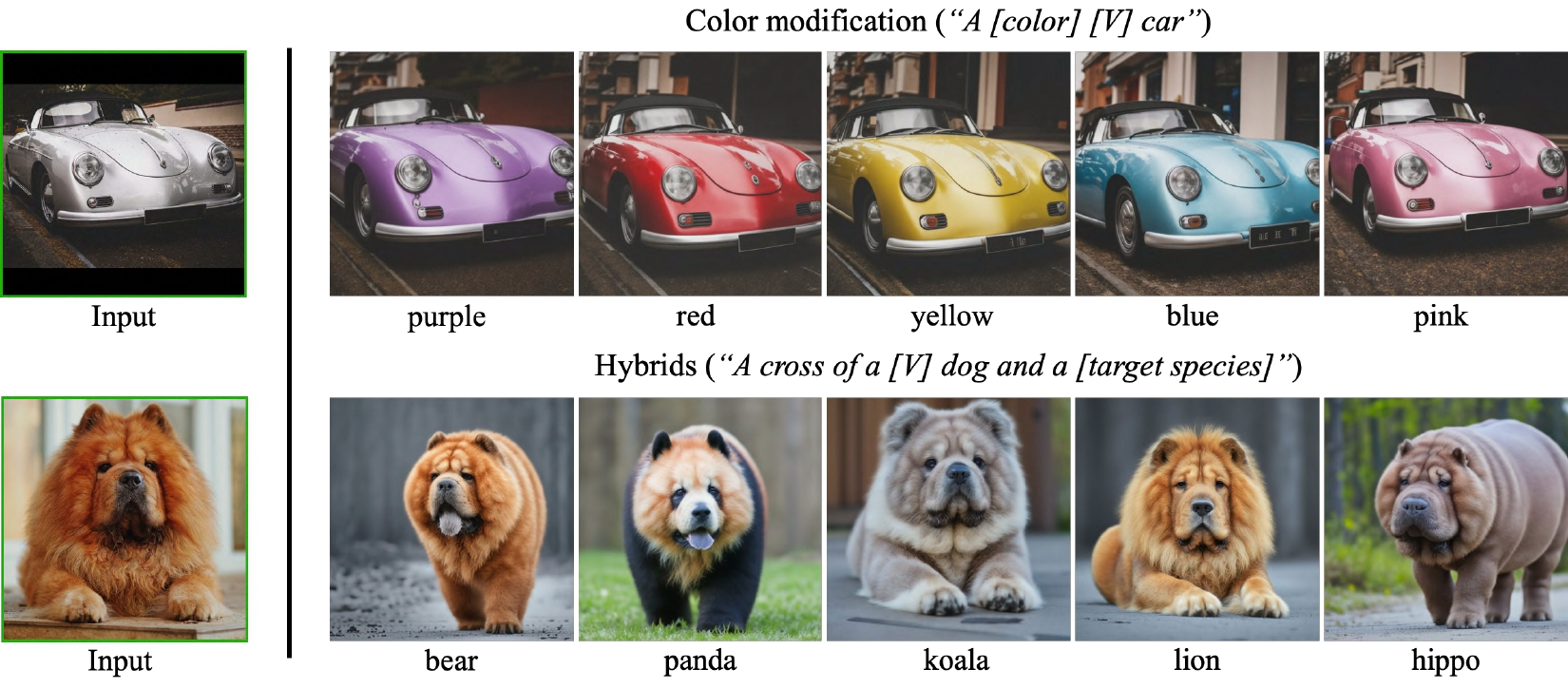

Are you ready to say goodbye to Photoshop? Let’s take a closer look at these applications with the informative images from Nataniel Ruiz and the DreamBooth team.

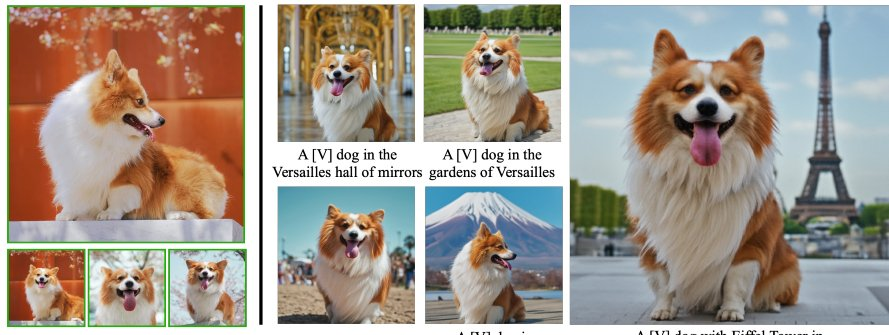

Recontextualization

DreamBooth AI could produce original images for a particular subject instance by providing the trained model with a sentence containing the unique identifier and the class noun.

Rather than merely changing the background, DreamBooth AI can create the subject in new poses and articulations and with novel, previously unobserved scene structure. The results also show realistic interaction of the subject with other objects, as well as realistic shadows and reflections. This demonstrates that the method does more than interpolate or recover relevant details.

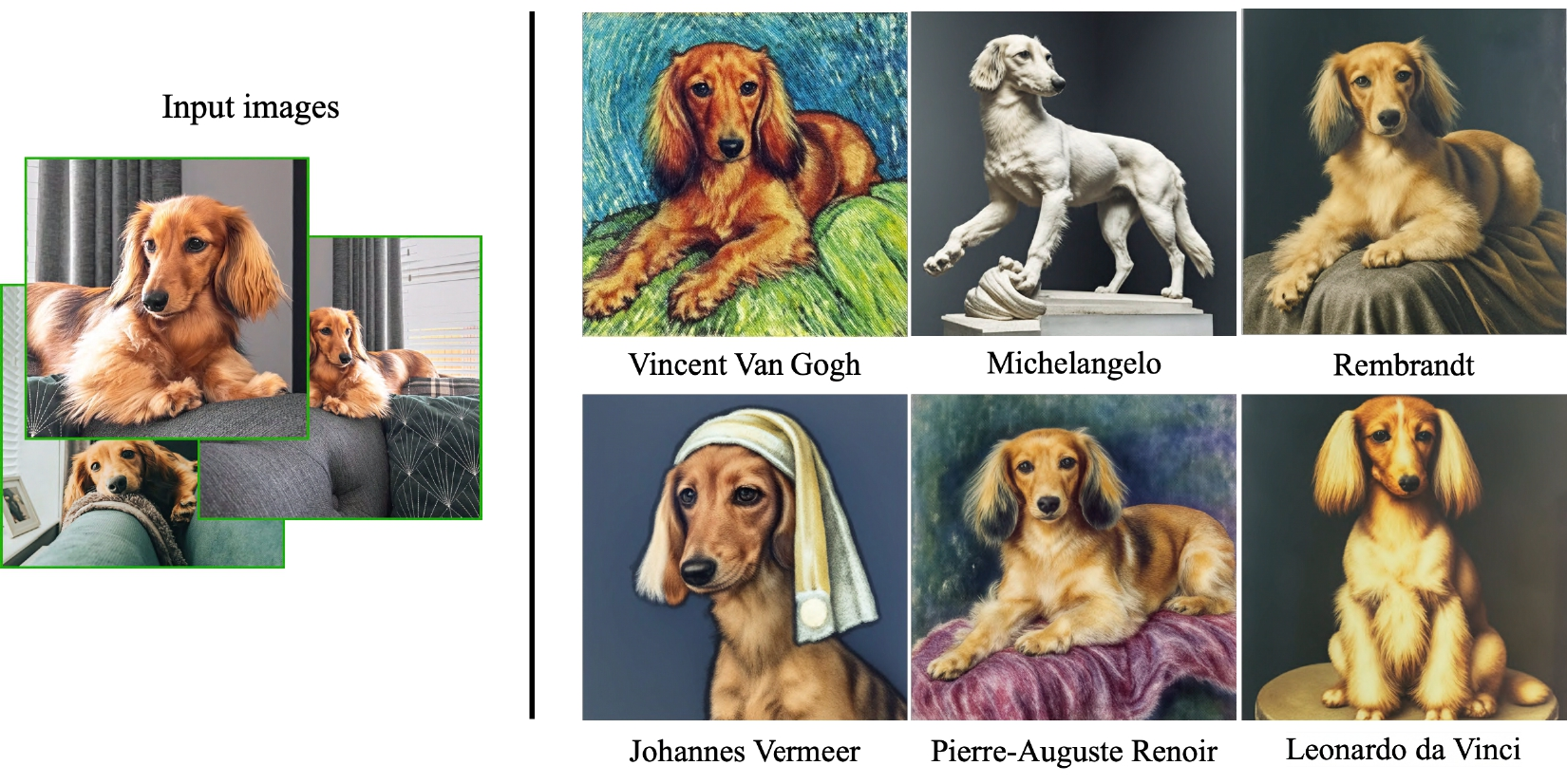

Art renditions

Given a prompt such as “a statue of a [V] [class noun] in the style of [great sculptor]” or “a painting of a [V] [class noun] in the style of [renowned painter],” DreamBooth AI can produce original artistic renditions of the subject.

This task differs from style transfer, in which the semantics of the source scene are maintained and the style of another image is applied to it. Here, by contrast, the model can make significant changes to the scene depending on the artistic style while retaining the subject’s details and identity.

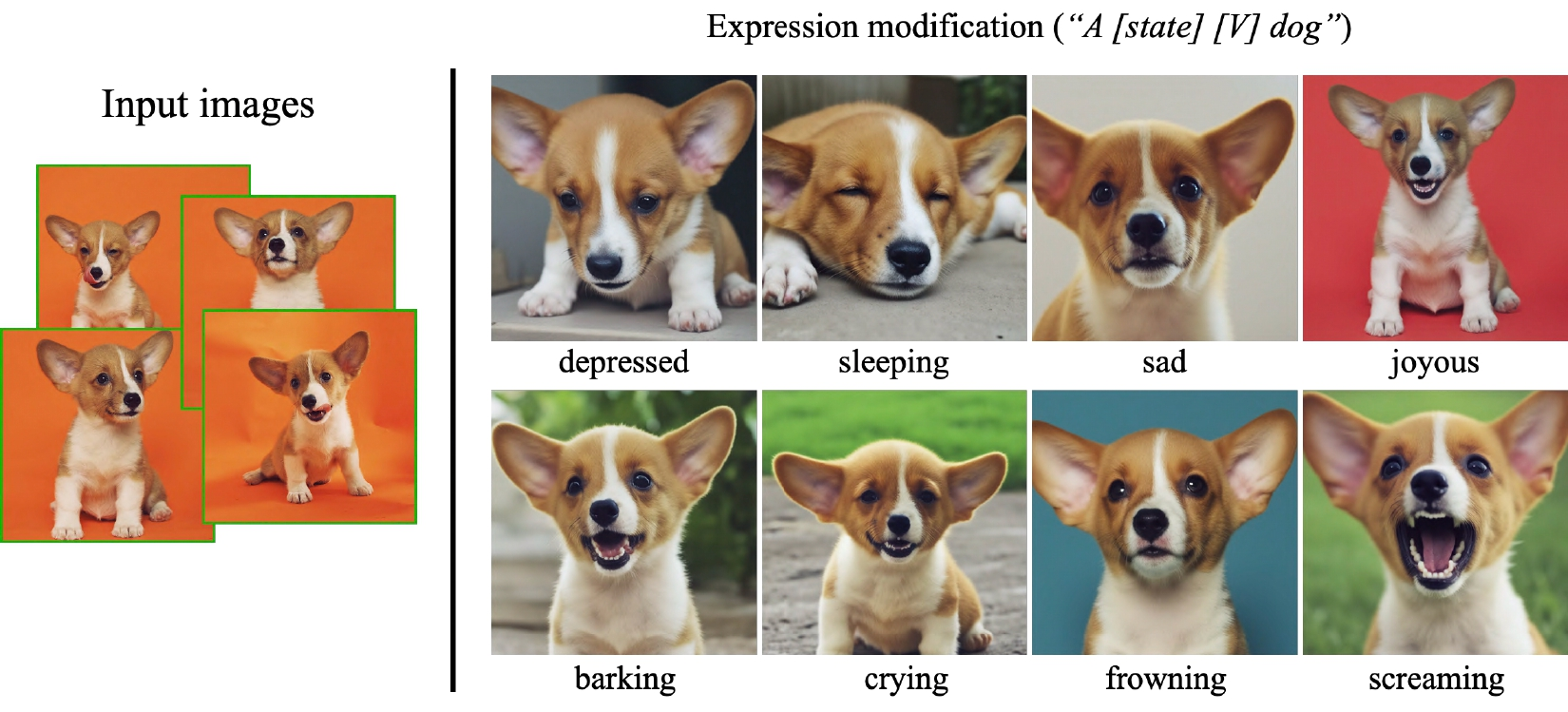

Expression manipulation

The technique used by DreamBooth AI enables the creation of updated photographs of the subject with altered facial expressions that are not included in the subject’s first collection of images.

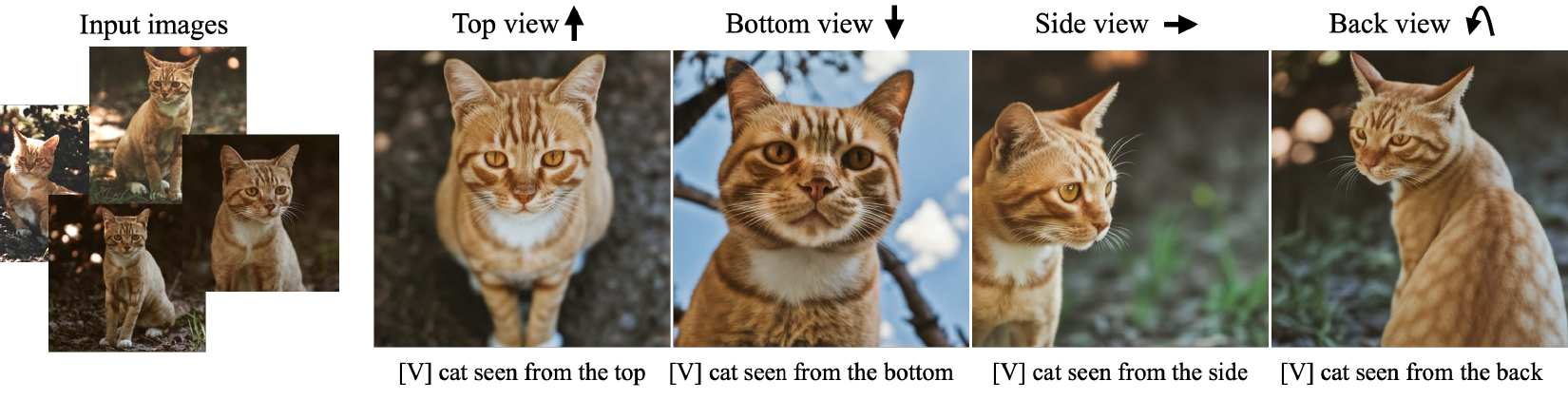

Novel view synthesis

DreamBooth AI can portray the subject from a variety of novel viewpoints. For example, it can generate new images of the same cat, complete with consistent and intricate fur patterns, from different camera angles.

Even though the model has only seen four frontal photos of the cat, it can draw on its knowledge of the class prior to generate these new perspectives, despite never having seen this particular cat from the side, from below, or from above.

Accessorization

DreamBooth AI’s capacity to accessorize subjects is a fascinating property that results from the generation model’s strong compositional prior.

For example, the model is prompted with a sentence of the form “a [V] [class noun] wearing [accessory]”. This makes it possible to attach various items to a subject such as a dog with aesthetically pleasing results.

Property modification

Subject instance characteristics are modifiable by DreamBooth AI.

For instance, a color adjective can be included in a prompt of the form “a [color adjective] [V] [class noun]”. Doing so can produce new, differently colored instances of the subject.
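The prompt patterns quoted in the application sections above can be collected into a small helper. This is only an illustrative sketch: the function name, the dictionary, and the recontextualization template are assumptions made for this example, and the identifier `sks` is borrowed from the Stable Diffusion section of this article.

```python
# Hypothetical helper: the templates mirror the prompt patterns quoted in this
# article. The names and the recontextualization template are illustrative.
PROMPT_TEMPLATES = {
    "recontextualization": "a {v} {cls} {detail}",
    "art_rendition": "a painting of a {v} {cls} in the style of {detail}",
    "accessorization": "a {v} {cls} wearing {detail}",
    "property_modification": "a {detail} {v} {cls}",
}


def build_prompt(task: str, identifier: str, class_noun: str, detail: str) -> str:
    """Fill one template with the unique identifier, class noun, and a detail."""
    if task not in PROMPT_TEMPLATES:
        raise ValueError(f"unknown task: {task!r}")
    return PROMPT_TEMPLATES[task].format(v=identifier, cls=class_noun, detail=detail)


if __name__ == "__main__":
    print(build_prompt("accessorization", "sks", "dog", "a witch hat"))
    print(build_prompt("property_modification", "sks", "dog", "purple"))
```

Every template keeps the identifier and the class noun next to each other, which is the one constraint the article’s prompts all share.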

These features also describe how to use DreamBooth AI, although there are a few requirements.

How to use DreamBooth AI?

The DreamBooth method takes as input a small number of images of a subject (typically 3–5 are sufficient), for example a specific dog, together with the name of the class it belongs to (for example, “dog”). It then outputs a fine-tuned, “personalized” text-to-image model that encodes a unique identifier referring to the subject. At inference, this unique identifier can be inserted into various sentences to synthesize the subject in different contexts.

Fine-tuning a text-to-image diffusion model on three to five photographs of a subject takes two steps:

- Fine-tune the low-resolution text-to-image model on the input photographs paired with a text prompt that contains a unique identifier and the name of the class the subject belongs to (for example, “a picture of a [T] dog”). In parallel, a class-specific prior preservation loss is applied. It takes advantage of the model’s semantic prior on the class and encourages the model to keep producing varied examples of the subject’s class by including the class name in the text prompt (for example, “a picture of a dog”).

- Fine-tune the super-resolution components on pairs of low-resolution and high-resolution images taken from the input image set, which helps preserve high fidelity to the subject.
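The first step combines two objectives, which can be sketched in plain Python. This is a minimal sketch, not the authors’ implementation: it assumes that both terms are mean squared errors and that they are combined as a weighted sum. The function names and the `prior_weight` parameter are hypothetical.

```python
from typing import Sequence


def mse(prediction: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equally sized sequences."""
    if len(prediction) != len(target) or len(prediction) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(prediction, target)) / len(prediction)


def combined_loss(
    subject_pred: Sequence[float],
    subject_target: Sequence[float],
    class_pred: Sequence[float],
    class_target: Sequence[float],
    prior_weight: float = 1.0,  # assumed weighting factor, not from the article
) -> float:
    """Subject term plus a weighted class-prior term (assumed weighted sum)."""
    # Term for the subject photos, prompted with identifier + class name.
    subject_term = mse(subject_pred, subject_target)
    # Term for generic class images, prompted with the class name only.
    prior_term = mse(class_pred, class_target)
    return subject_term + prior_weight * prior_term


if __name__ == "__main__":
    print(combined_loss([1.0, 2.0], [0.0, 0.0], [1.0], [0.0], prior_weight=0.5))
```

Under these assumptions, a larger `prior_weight` puts more emphasis on keeping the class diverse, while a smaller one puts more emphasis on fitting the subject photos.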

The original DreamBooth was built on Imagen’s text-to-image model. However, Imagen’s model and pre-trained weights are not publicly available. DreamBooth on Stable Diffusion instead lets users fine-tune an openly available text-to-image model with a few samples.

DreamBooth Stable Diffusion: How to use DreamBooth AI on Stable Diffusion?

Follow these steps to use DreamBooth AI on Stable Diffusion:

- Set up your LDM environment following the directions in the Textual Inversion repository or the original Stable Diffusion repository.

- To fine-tune a Stable Diffusion model, obtain the pre-trained Stable Diffusion weights by following the instructions in the Stable Diffusion repository. The weights can be downloaded from HuggingFace.

- As Dreambooth’s fine-tuning technique requires, prepare a set of photos for regularization.

- You can train by executing the following command:

    python main.py --base configs/stable-diffusion/v1-finetune_unfrozen.yaml \
        -t \
        --actual_resume /path/to/original/stable-diffusion/sd-v1-4-full-ema.ckpt \
        -n <job name> \
        --gpus 0, \
        --data_root /root/to/training/images \
        --reg_data_root /root/to/regularization/images \
        --class_word <xxx>

Generation:

After training, personalized samples can be obtained by running the following command:

    python scripts/stable_txt2img.py --ddim_eta 0.0 \
        --n_samples 8 \
        --n_iter 1 \
        --scale 10.0 \
        --ddim_steps 100 \
        --ckpt /path/to/saved/checkpoint/from/training \
        --prompt "photo of a sks <class>"

Here, sks is the identifier (replace it with your own choice if you changed it), and <class> is the class word that was passed as --class_word during training.
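If you generate images for several subjects, it can help to assemble the generation command programmatically. The sketch below only reuses the script path and flags quoted above. The function name `generation_command` is hypothetical, and the code builds the argument list without running it.

```python
import shlex
from typing import List


def generation_command(checkpoint: str, identifier: str, class_word: str) -> List[str]:
    """Build the argument list for the generation command quoted above."""
    # The prompt follows the "photo of a sks <class>" pattern from the article.
    prompt = f"photo of a {identifier} {class_word}"
    return [
        "python", "scripts/stable_txt2img.py",
        "--ddim_eta", "0.0",
        "--n_samples", "8",
        "--n_iter", "1",
        "--scale", "10.0",
        "--ddim_steps", "100",
        "--ckpt", checkpoint,
        "--prompt", prompt,
    ]


if __name__ == "__main__":
    argv = generation_command("/path/to/saved/checkpoint/from/training", "sks", "dog")
    # shlex.join quotes the prompt so the line can be pasted into a shell.
    print(shlex.join(argv))
```

Passing the list to `subprocess.run` from the repository root would avoid shell quoting issues, assuming the environment from the previous steps is already active.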

Check out the DreamBooth Stable Diffusion GitHub page for detailed information.

DreamBooth AI limitations

These are the DreamBooth AI limitations:

- Language drift

- Overfitting

- Preservation loss

Let’s take a closer look at them.

Language drift

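Language drift occurs when fine-tuning on a handful of images leads the model to associate the class noun with the specific subject and gradually lose its ability to generate other members of that class. In practice, the prompt becomes a barrier to producing detailed variations of the subject: DreamBooth can alter the subject’s context, but prompts that try to alter the subject itself can give inconsistent results.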

Overfitting

Another problem is that the output images can overfit to the input images. If there are not enough input photographs, the subject may fail to appear or may become entangled with the context of the submitted images. This also happens when the prompt asks for an unusual context.

Preservation loss

Other restrictions include the inability to synthesize images of rarer or more complicated subjects, as well as inconsistent subject fidelity, which leads to hallucinated changes and discontinuous features. The generated subject also frequently carries over elements of the context from the input photographs.

DreamBooth societal impact

The goal of the DreamBooth project is to give users a useful tool for synthesizing personal subjects (animals, objects) in various circumstances. It allows the user to reconstruct their desired subjects better, while general text-to-image algorithms may be biased towards certain features when synthesizing images from text.

On the other hand, malicious actors could use such images to mislead viewers. This is a widespread problem that also affects other generative models and content manipulation techniques.

Conclusion

Most text-to-image models render outputs from a single text input, relying on models with millions of parameters. DreamBooth makes personalization easier and more accessible by requiring only three to five photographs of the subject together with a text prompt that describes the desired context.

The trained model can then reuse the physical characteristics of the subject learned from the photos to recreate it in other contexts and from other angles while preserving the subject’s distinguishing features.

The majority of text-to-image models rely on particular keywords and may favor particular features when displaying images. DreamBooth users can create photorealistic outputs by imagining their preferred subject in a novel setting or situation. So, stop waiting now. Try it today!

Bonus: Wombo AI art

The WOMBO Dream app was recently created by the Canadian artificial intelligence company WOMBO AI.

The app, available in most app stores and in web browsers, uses AI to combine word prompts with a chosen art style and produce stunning, entirely unique paintings in seconds. If you want to learn more, we have already explained how to use Wombo Dream. Show your imagination!