Data replication, also known as database replication, is the process of copying data so that information stays consistent across all data resources, ideally in near real time. Think of database replication as a net that keeps your information from slipping through the cracks and disappearing. Data rarely stays constant; it changes all the time. Through an ongoing process, data from a primary database is continuously copied to a replica, even if that replica is on the opposite side of the world.

A common goal of data replication is to reduce latency, in demanding setups down to a few milliseconds or less. We have all pressed the refresh button on a website and waited what seems like an eternity (seconds) to see our information updated. Latency reduces a user’s productivity. The objective is near-real-time delivery; whatever the use case, zero time lag is the new ideal.

What is data replication?

Data replication is the process of creating numerous copies of a piece of data and storing them at various locations to improve accessibility across a network, provide fault tolerance, and serve as a backup copy. Data replication is similar to data mirroring in that it can be used on both servers and individual computers. The same system, on-site and off-site servers, and cloud-based hosts can store data duplicates.

Modern database solutions frequently rely on built-in features or third-party tools to replicate data. Microsoft SQL Server and Oracle Database both provide data replication out of the box, but some older technologies might not come with this feature by default.

Data replication in distributed database

Data replication is the process of making several copies of data. These copies, also known as replicas, are then kept in several places for backup, fault tolerance, and better overall network accessibility. The replicated data might be kept on local and remote servers, on cloud-based hosts, or even entirely within the same system.

Data replication in a distributed database is the process of distributing data from a source server to other servers while keeping the data updated and in sync with the source so that users can get the data they need without interfering with the work of others.

What do you mean by data replication?

For instance, if you need to recover from a system outage, your standby instance should be on your local area network (LAN). For essential database applications, you can then replicate data synchronously over the LAN from the primary instance to the secondary instance. Because the standby is in sync with your active instance and “hot,” it is prepared to take over immediately if the primary fails. This arrangement is known as high availability (HA).

To be prepared for a disaster, make sure your secondary instance is not located at the same site as your primary instance. This means placing your backup instance in a cloud environment connected by a WAN, or at a location far from the first instance. Data replication over a WAN is asynchronous so that it does not adversely affect throughput. As a result, updates reach standby instances later than they reach the active instance, which delays the recovery process.
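The difference between the two modes can be sketched in a few lines of Python. This is a minimal in-memory illustration, not a real replication protocol: the `Primary` and `Replica` classes and the `ship_pending` method are hypothetical names invented for the example, and the "network" is just a method call.

```python
from collections import deque


class Replica:
    """A standby instance that simply stores whatever it is sent."""

    def __init__(self):
        self.data = {}

    def apply(self, key, value):
        self.data[key] = value


class Primary:
    """Hypothetical primary with one synchronous and one asynchronous standby."""

    def __init__(self, sync_replica, async_replica):
        self.data = {}
        self.sync_replica = sync_replica
        self.async_replica = async_replica
        self.pending = deque()  # changes not yet shipped over the WAN

    def write(self, key, value):
        self.data[key] = value
        # Synchronous: the write only returns once the LAN standby has it.
        self.sync_replica.apply(key, value)
        # Asynchronous: queue the change and return without waiting.
        self.pending.append((key, value))

    def ship_pending(self):
        # Runs later (for example on a timer); until then the WAN standby lags.
        while self.pending:
            self.async_replica.apply(*self.pending.popleft())


lan_standby = Replica()
wan_standby = Replica()
primary = Primary(lan_standby, wan_standby)
primary.write("balance", 100)
```

Right after `write` returns, `lan_standby` already holds the new value while `wan_standby` is still empty; it only catches up once `primary.ship_pending()` runs. That gap is the replication lag the paragraph above describes.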

A research paper titled “Efficient privacy-preserving data replication in fog-enabled IoT” describes how cloud computing depends on network performance:

“According to research, 41.6 billion Internet of Things (IoT) devices will be generating 79.4 zettabytes of data by the year 2025. A high volume of data generated by IoT devices is processed and stored in cloud computing. Cloud computing has a strong dependency on network performance, bandwidth, and response time for IoT devices’ data processing and storage. Data access and processing can be a bottleneck due to long turnaround delays and high demand for network bandwidth in remote cloud systems.”

What is the purpose of data replication?

There are five reasons to replicate your data to the cloud:

- As we described previously, cloud replication keeps your data off-site and away from the business’s site. Although a significant disaster, such as a fire, flood, storm, etc., can destroy your primary instance, your secondary instance is secure in the cloud. It can be utilized to restore any lost data and applications.

- Replicating data to the cloud is less expensive than doing so in your own data center. You may eliminate the expenses of running a second data center, such as hardware, upkeep, and support fees.

- For smaller firms, replicating data to the cloud can be safer, especially if you don’t have security expertise on staff. Cloud providers invest heavily in network and physical security that few small businesses can match.

- Replicating data to the cloud provides on-demand scalability. You don’t have to spend money on more hardware to maintain your secondary instance if your business expands, or let that hardware lie idle if the business contracts. You don’t have any long-term contracts either.

- You have a wide range of geographic options for replicating data to the cloud, including having a cloud instance in the next city, across the country, or another country, depending on your business’s requirements.

How do you replicate a database?

Database replication can be a one-time event or a continuous process. It involves every data source in a company’s distributed infrastructure. The organization’s distributed management system replicates the data and distributes it evenly among all the sources.

A distributed database management system, or DDBMS, is the system that oversees the distributed database that results from database replication. A DDBMS generally makes sure that any alterations, additions, or deletions made to the data in one place are automatically reflected in the data kept at all the other locations.

In the traditional database replication scenario, one or more applications link a primary storage location with a secondary location, which is often off-site. The primary and secondary storage locations are most frequently individual source databases, such as Oracle, MySQL, Microsoft SQL Server, and MongoDB, or data warehouses that combine data from these sources and provide storage and analytics services on larger amounts of data. Data warehouses are frequently hosted in the cloud.

What are the types of data replication?

There are three main types of data replication.

Data replication types

Data replication can be categorized into three main types: transactional, snapshot, and merge replication.

Transactional replication

Transactional replication automatically distributes frequent data changes amongst servers. Changes are replicated from the publisher to the subscriber almost instantly. It captures each stage of the transaction and the sequence in which the changes occur, rather than just copying the outcome.

For instance, in the case of ATM transactions, the replication from the publisher to the subscriber includes all of the individual transactions made between the start and end balances. The fact that data changes at the publisher are duplicated at the subscriber but not the other way around is another important aspect of transactional replication. By default, data updates don’t take place at the subscriber level.
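The ATM example can be sketched as follows. This is a toy model rather than any vendor’s implementation: `Publisher`, `Subscriber`, and `pull` are hypothetical names, and the log is a plain Python list. The point it illustrates is that the subscriber replays every change in order instead of copying only the final balance.

```python
class Publisher:
    """Source of truth; records every change in the order it happened."""

    def __init__(self, balance):
        self.balance = balance
        self.log = []  # ordered (sequence_number, delta) pairs

    def transact(self, delta):
        self.balance += delta
        self.log.append((len(self.log) + 1, delta))


class Subscriber:
    """Read-only copy that replays the publisher's log in sequence."""

    def __init__(self, balance):
        self.balance = balance
        self.history = []
        self.last_applied = 0  # sequence number of the last replayed change

    def pull(self, publisher):
        # Sequence numbers start at 1, so slicing at last_applied skips
        # exactly the entries that were already replayed.
        for seq, delta in publisher.log[self.last_applied:]:
            self.balance += delta
            self.history.append(delta)
            self.last_applied = seq


atm = Publisher(balance=500)
copy_of_atm = Subscriber(balance=500)

atm.transact(-40)   # withdrawal
atm.transact(+100)  # deposit
atm.transact(-60)   # withdrawal
copy_of_atm.pull(atm)
```

Here the start and end balances are both 500, so copying only the outcome would show no activity at all, while `copy_of_atm.history` still contains the three individual transactions. Changes flow one way only: nothing in the sketch writes from the subscriber back to the publisher.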

Snapshot replication

Snapshot replication synchronizes data between the publisher and subscriber as it exists at a specific moment. Chunks of data are transferred from the publisher to the subscriber in a single transaction. Updates happen less often than in transactional replication. A snapshot is, however, needed to create a baseline state on both servers before transactional replication can begin. Snapshot replication tracks neither the order of data changes nor the individual transactions between servers.

Data that changes over time is synchronized via this process. For instance, many businesses clone information from a cloud CRM to a local database for reporting purposes, such as accounts, contacts, and opportunities. Depending on how frequently the data changes, this might be done once every 15 minutes, once every hour, or once every day. Instead of taking a complete snapshot at each replication period, the replication process may recognize when data has changed in the publisher and duplicate only the changes.
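A point-in-time copy can be illustrated with a short sketch. The table name and rows below are made up for the example, and `snapshot` is a hypothetical helper; a real system would copy database tables rather than Python dictionaries.

```python
import copy


def snapshot(publisher):
    """Return a point-in-time copy of every table at the publisher."""
    return copy.deepcopy(publisher)


publisher = {"accounts": [{"id": 1, "name": "Acme"}]}
subscriber = snapshot(publisher)

# A change made after the snapshot is not visible at the subscriber...
publisher["accounts"].append({"id": 2, "name": "Globex"})
rows_before_refresh = len(subscriber["accounts"])

# ...until the next scheduled snapshot replaces the old copy.
subscriber = snapshot(publisher)
rows_after_refresh = len(subscriber["accounts"])
```

Between snapshots the subscriber is stale by design, which is why the refresh interval (every 15 minutes, every hour, every day) should follow how often the data changes.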

Merge replication

Merge replication is a little more complicated than the other types. The initial synchronization from the publisher uses snapshot replication. After that, however, data modifications can take place at both the publisher and the subscriber. The merging agent, installed on all servers, then receives the updated data and uses conflict resolution algorithms to update and distribute it.

For instance, if a worker is online and edits a document stored on a cloud server (the publisher) from their laptop or phone (the subscriber), transactional replication is enough, because each change is saved almost instantly. If the document is instead downloaded from the cloud server and edited offline on the laptop or phone, the copies diverge, because the data was changed at the subscriber’s end. Once the device is back online, the changes pass through a merging agent, which compares the two versions and uses a conflict resolution procedure to update the document at the publisher.

Merge replication is utilized in a variety of situations where a user does not always have direct access to the publisher, such as with mobile users who may go offline while the data is being updated. It would also be utilized if many subscribers had access to, updated, and synced the same data with the publisher or other subscribers at different periods. It might also be utilized when numerous subscribers are simultaneously updating the same publication data in pieces.
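One simple conflict resolution policy is "last writer wins," sketched below. This is an assumption chosen for illustration; real merge agents support other policies (for example, publisher priority or custom resolvers). The `merge` function and the `(value, modified_at)` row layout are hypothetical.

```python
def merge(publisher, subscriber):
    """Merge two copies where each key maps to (value, modified_at).

    Assumed policy: the most recently modified version of a key wins.
    On a tie, the publisher's version is kept.
    """
    merged = {}
    for key in publisher.keys() | subscriber.keys():
        # Publisher is listed first so max() prefers it on equal timestamps.
        candidates = [side[key] for side in (publisher, subscriber) if key in side]
        merged[key] = max(candidates, key=lambda row: row[1])
    return merged


# The document as stored in the cloud, and the copy edited offline.
cloud_copy = {"title": ("Q3 report", 10), "body": ("draft", 10)}
laptop_copy = {
    "title": ("Q3 report", 10),
    "body": ("edited offline", 12),
    "notes": ("added offline", 11),
}

merged = merge(cloud_copy, laptop_copy)
```

After the merge, `body` carries the offline edit because its timestamp is newer, `notes` is added because it exists on only one side, and `title` is unchanged.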

What is the data replication strategy?

Most database systems track every change from the very beginning and record it in what is known as a log file or changelog. Each log file is a collection of log messages, each containing information such as the time, the user, the change, its cascade effects, and how the change was made. The database gives each message a distinct position ID and stores the messages in chronological order by ID.
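As a rough picture of such a changelog, the sketch below models log messages with a position ID, time, user, and change. The `LogEntry` and `Changelog` names and the field layout are assumptions for illustration; real database logs are binary formats with many more fields.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class LogEntry:
    position: int    # distinct, increasing position ID
    time: datetime   # when the change was recorded
    user: str        # who made the change
    change: str      # what was changed


class Changelog:
    """Append-only list of entries kept in position order."""

    def __init__(self):
        self._entries = []

    def append(self, user, change):
        entry = LogEntry(
            position=len(self._entries) + 1,
            time=datetime.now(timezone.utc),
            user=user,
            change=change,
        )
        self._entries.append(entry)
        return entry.position

    def since(self, position):
        """Return every entry recorded after the given position ID."""
        return self._entries[position:]


log = Changelog()
log.append("alice", "INSERT INTO orders VALUES (1, 'open')")
log.append("bob", "UPDATE orders SET status = 'paid' WHERE id = 1")
log.append("alice", "DELETE FROM orders WHERE id = 1")
```

A replica that remembers the last position it applied can call `log.since(last_position)` to fetch only the changes it has not yet seen.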

What are the three data replication strategies?

Although businesses may use many methods for replicating data, the following are the most typical replication strategies:

Full-table replication

Full-table replication copies every row in a table on each run, including new, updated, and unchanged data. Because the destination is rebuilt from the source each time, this strategy also reflects hard-deleted rows, and it works for tables that have no replication key.

Key-based incremental replication

Key-based incremental replication captures only the data that has changed since the last update. It relies on a replication key: a column, such as a last-modified timestamp or an incrementing ID, whose value tells the replication process which rows are new or updated. This method suits databases that hold records on distinct elements and where recent changes matter more than historical values.
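A minimal sketch of key-based incremental replication, assuming each row has an `id` and an `updated_at` column that serves as the replication key. The function name, column names, and bookmark convention are illustrative assumptions, not a specific tool’s API.

```python
def incremental_sync(source_rows, target, bookmark):
    """Copy rows whose replication key is greater than the bookmark.

    Returns the new bookmark: the highest key value seen so far.
    """
    new_bookmark = bookmark
    for row in source_rows:
        if row["updated_at"] > bookmark:
            target[row["id"]] = dict(row)  # insert or overwrite by primary key
            new_bookmark = max(new_bookmark, row["updated_at"])
    return new_bookmark


source = [
    {"id": 1, "name": "Ada", "updated_at": 5},
    {"id": 2, "name": "Bob", "updated_at": 8},
]
target = {}

# First run copies everything, because the bookmark starts at 0.
bookmark = incremental_sync(source, target, bookmark=0)

# Row 1 is updated and row 2 is hard-deleted at the source.
source[0] = {"id": 1, "name": "Ada L.", "updated_at": 9}
del source[1]

# Second run only picks up the row whose key passed the bookmark.
bookmark = incremental_sync(source, target, bookmark)
```

After the second run the update to row 1 has been replicated, but row 2 is still present in `target`: a deleted row has no key value to compare, so this strategy cannot see hard deletes. That is the trade-off against full-table replication.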

Log-based incremental replication

Log-based incremental replication reads the changes that the database records in its log file or changelog and applies them to the replica. It requires a database that exposes such a log, for example MySQL (binary log), PostgreSQL (write-ahead log), and MongoDB (oplog).

Database replication schemes

For database replication, the following replication schemes are employed:

Full replication

Full replication entails replicating the entire database across all nodes in the distributed system. This scheme improves global performance and data availability while maximizing data redundancy.

Partial replication

Partial replication duplicates only specific portions of a database, chosen according to how important the data is at each site. As a result, the number of replicas of a given portion can be anywhere between one and the total number of nodes in the distributed system.

No replication

With no replication, each fragment is stored on exactly one node of the distributed system. Because there is only one copy of each fragment, this scheme is the easiest to keep synchronized and the fastest to execute.
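The three schemes differ only in how many copies of each fragment exist. The sketch below makes that concrete with a hypothetical `place_fragments` helper that spreads copies round-robin across nodes. Round-robin is an assumption made for simplicity; as noted above, real partial replication places copies according to how important the data is at each site.

```python
def place_fragments(fragments, nodes, copies):
    """Assign each fragment to `copies` distinct nodes, round-robin.

    copies == 1          -> no replication
    1 < copies < nodes   -> partial replication
    copies == len(nodes) -> full replication
    """
    if not 1 <= copies <= len(nodes):
        raise ValueError("copies must be between 1 and the number of nodes")
    placement = {node: [] for node in nodes}
    for i, fragment in enumerate(fragments):
        for k in range(copies):
            # Consecutive offsets guarantee the copies land on distinct nodes.
            node = nodes[(i + k) % len(nodes)]
            placement[node].append(fragment)
    return placement


nodes = ["n1", "n2", "n3"]
fragments = ["A", "B", "C"]

no_replication = place_fragments(fragments, nodes, copies=1)
partial = place_fragments(fragments, nodes, copies=2)
full = place_fragments(fragments, nodes, copies=3)
```

With three nodes, `no_replication` puts one fragment on each node, `partial` puts two fragments on each node, and `full` puts all three fragments everywhere.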

What are some advantages and disadvantages of data replication?

Data replication enables extensive data sharing among systems and divides the network burden among multisite systems by making data accessible on several hosts or data centers.

Data replication advantages

Data replication can provide consistent access to data and increases the number of users who can access that data concurrently. Consolidating databases and updating replica databases with partial data removes unnecessary redundancy. Databases also become faster to access.

- Reliability: A different site can be used to access the data if one system is unavailable due to malfunctioning hardware, a virus attack, or another issue.

- Better network performance: Having the same data in various places might reduce data access latency since the data is retrieved closer to the point where the transaction is being executed.

- Enhanced support for data analytics: Replicating data to a data warehouse enables distributed analytics teams to collaborate on shared business intelligence projects.

- Performance improvements for test systems: Data replication makes it easier to distribute and synchronize data for test systems that require quick data accessibility.

Data replication disadvantages

Maintaining data replication requires large amounts of storage space and equipment. Replication is expensive, and the infrastructure needed to preserve data consistency is complicated to maintain. It also exposes additional software components to security and privacy flaws.

Although replication has many advantages, organizations should weigh them against its disadvantages. Limited resources are the key barrier to maintaining consistent data across an organization:

- Costs: It costs more to store and process data when copies are kept in several places.

- Time: A team within the organization must commit time to setting up and maintaining a data replication system.

- Network load: Keeping data copies consistent requires new processes and increases network traffic.

Data replication tools

You may need data replication for a variety of reasons. You might need to migrate applications to the cloud or be looking for a hybrid cloud solution. The right approach depends on whether you need replication for real-time analysis or for synchronization. Understanding why you want to replicate in the first place is the first step.

Database replication technologies frequently provide a variety of replication functions, as well as other ancillary functionality. Your needs and expectations for the tool must be written down. It might depend on how many sources and targets are involved, how much data you’ll be dealing with, etc.

You must choose the best mix of features for your circumstances. Cost, features, and accessibility may all influence your choice of database replication tools. A business should invest within its means: the objective is to find the best database replication solution for the job within your allocated budget.

These are some of the best data replication tools:

Rubrik

Rubrik is a solution for managing and backing up data in the cloud that provides quick backups, archiving, immediate recovery, analytics, and copy management. It provides streamlined backups and incorporates cutting-edge data center technologies. Thanks to an intuitive user interface, you can easily assign tasks to any user group. Integrating different clusters into a single dashboard, which some use cases require, has some limitations.

SharePlex

SharePlex is another database replication tool that replicates in real time. It is very flexible and works with many different databases. A message queuing mechanism makes data transport fast and highly scalable. Both the tool’s change data capture process and its monitoring services have some shortcomings.

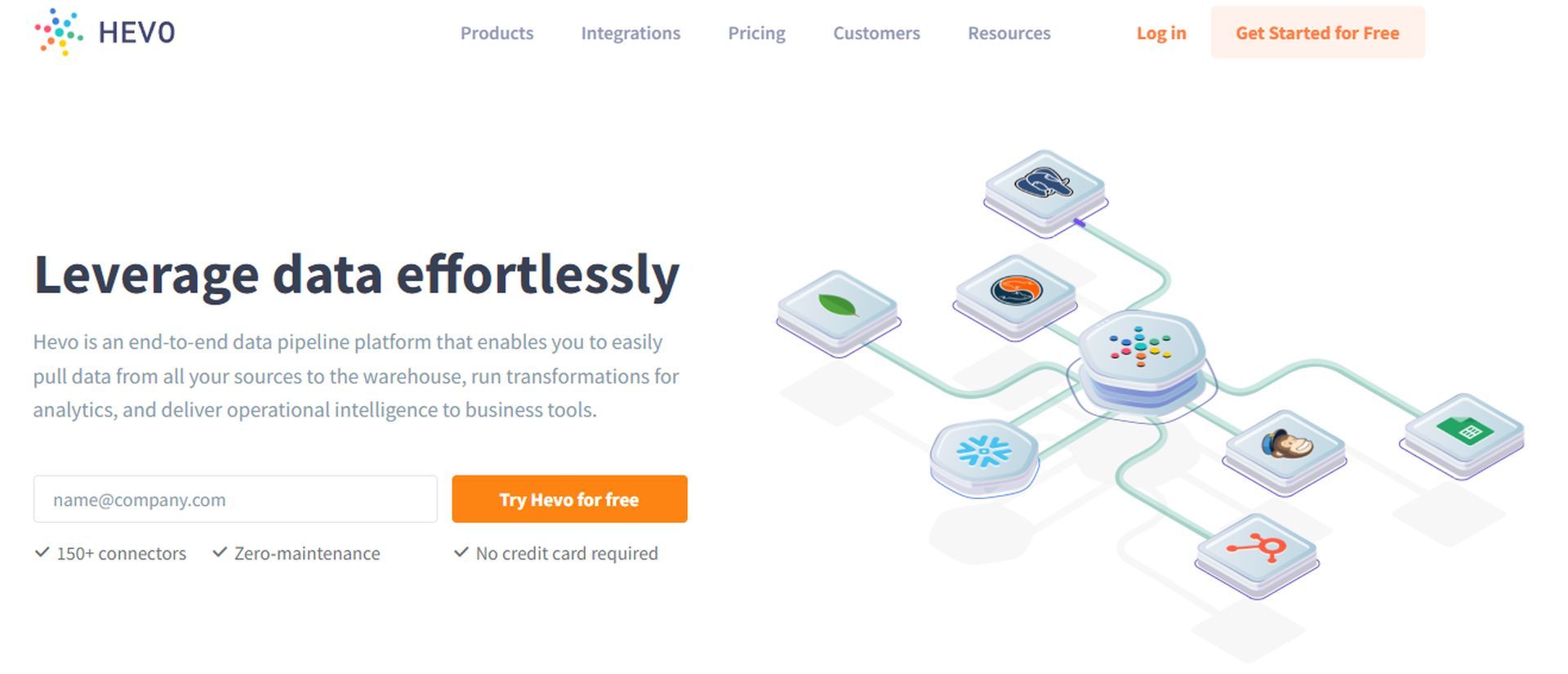

Hevo Data

Data teams are essential to driving data-driven decisions as firms’ capacity to collect data grows exponentially. Even so, they find it difficult to combine the disparate data in their warehouse to create a single source of truth. Data integration is a headache because of faulty pipelines, data quality problems, glitches, errors, and a lack of control and visibility over the data flow.

Hevo’s Data Pipeline Platform is used by 1000+ data teams to quickly and seamlessly combine data from more than 150 sources. With Hevo’s fault-tolerant architecture, billions of data events from sources as diverse as SaaS apps, databases, file storage, and streaming sources may be duplicated in almost real-time.

Key takeaways

- Data replication supports advanced analytics by synchronizing cloud-based reporting and by facilitating data migration from many sources into data stores, such as data warehouses or data lakes, that feed business intelligence and machine learning.

- Users can retrieve data from the servers nearest to them and experience lower latency because data is stored in various locations.

- Data replication enables businesses to distribute traffic over several servers, improving server performance and reducing the strain on individual servers.

- Data replication offers effective disaster recovery and data protection. A business can lose millions of dollars for each hour that a critical data source is unavailable.

- Depending on the use case and the current data architecture, businesses can employ a variety of data replication approaches.

- A data replication setup can be expensive and time-consuming to invest in. It is nevertheless crucial for businesses that wish to use data for a variety of analytical and business use cases, both to obtain a competitive edge and to protect their data from downtime and data loss.

FAQ

What is the difference between replication and backup?

For many companies that must maintain long-term data for compliance reasons, backup remains the go-to option.

Data replication, by contrast, focuses on business continuity: keeping mission-critical and customer-facing applications running without interruption after a disaster.

What is data replication in DBMS?

The technique of storing data across multiple sites or nodes is known as data replication. It helps increase the availability of data. It simply entails copying data from a database on one server to another so that every user can get the same information without any discrepancies. As a result, users of a distributed database can access the data relevant to their tasks without interfering with the work of others.

Data replication includes the continuous copying of transactions to keep the replica up to date and in sync with the source. Replication makes data available at several locations, but a given relation is only required to reside at one location.

What is data replication in SQL?

A group of technologies known as replication is used to distribute and replicate data and database objects from one database to another, then synchronize data between databases to ensure consistency. Replication allows you to send data through local and wide area networks, dial-up connections, wireless connections, and the Internet to many locations as well as distant or mobile users.

What is data replication in AWS?

The AWS Schema Conversion Tool (AWS SCT) facilitates heterogeneous database migrations by automatically converting the source database schema. AWS SCT also converts the majority of custom code, including views and functions, into a format appropriate for the target database.

Heterogeneous database replication involves two steps. First, use AWS SCT to convert the source database schema (for example, from SQL Server) to a format compatible with the target database (in this case, PostgreSQL). Then use AWS Database Migration Service (AWS DMS) to replicate data between the source and the target.

Conclusion

Instances of database replication defined as master-slave configurations in the past are now more commonly described as master-replica, leader-follower, primary-secondary, and server-client configurations.

With the development of virtual machines and distributed cloud computing, replication techniques originally focused on relational database management systems have been broadened to cover non-relational database types. Here too, different non-relational databases, such as Redis and MongoDB, use different replication techniques.

While remote office database replication may have been the canonical example of replication for many years, horizontally scaling distributed database configurations, both on-premises and on cloud computing platforms, have emerged as drivers of replication activity. Relational databases like IBM Db2, Microsoft SQL Server, Sybase, MySQL, and PostgreSQL all have different replication specifications.

Data replication design always involves striking a balance between system performance and data consistency. At least three methods exist for database replication. In snapshot replication, data from one server is simply copied to another server or to a different database on the same server. In merge replication, data from two or more databases is merged into one database. In transactional replication, user systems receive a full initial copy of the database and then periodic updates as data changes.