- Enterprises continue to struggle to acquire sufficient, clean data to support their AI and machine learning initiatives, according to Appen’s State of AI and Machine Learning study, which was published this week.

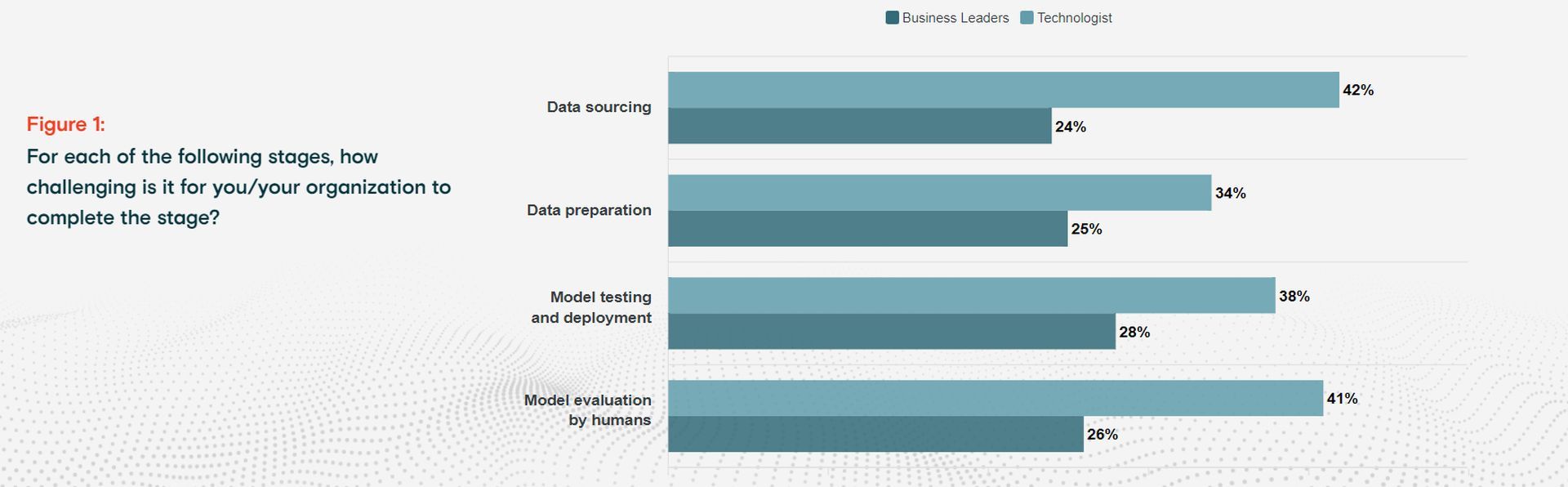

- Of the four stages of AI (data sourcing, data preparation, model training and deployment, and human-guided model evaluation), data sourcing is the most resource-intensive, time-consuming, and challenging, according to Appen’s survey of 504 business leaders and engineers.

- Data management remains the main challenge for AI, according to Appen.

- Data management is cited by 41% of respondents who work in the AI loop as their biggest challenge.

- Additionally, the survey found that 93% of organizations strongly or somewhat agree that all AI initiatives should include ethics as their “foundation.”

Data is the cornerstone of machine learning. Without it, no AI project can be built. But businesses are struggling with data sourcing, mostly because of cost. Analytics is no longer reserved for large, wealthy corporations; it is now widely used, and businesses benefit from it in a variety of ways. With good data, organizations can set baselines, benchmarks, and targets to keep moving forward.

Data sourcing covers over 30% of an organization’s AI budget

According to Appen’s State of AI and Machine Learning report released this week, enterprises still have difficulty obtaining adequate, clean data to support their AI and machine learning endeavors.

According to Appen’s survey of 504 business leaders and engineers, of the four steps of AI—data sourcing, data preparation, model training and deployment, and human-guided model evaluation—data sourcing is the most resource-intensive, time-consuming, and difficult stage.

Data sourcing takes up 34% of an organization’s typical AI budget, compared to 24% for data preparation, 24% for model testing and deployment, and 15% for model evaluation, says Appen’s survey. The study was conducted by the Harris Poll and included IT decision makers, business leaders, and managers from the US, UK, Ireland, and Germany.

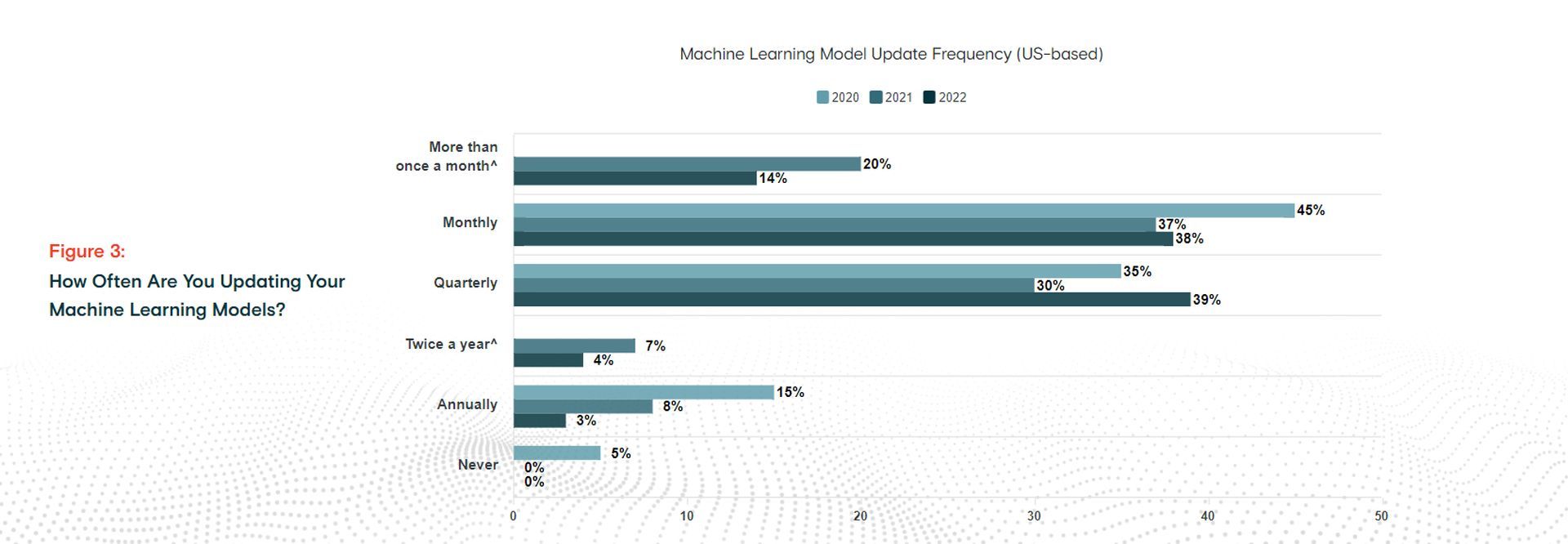

In terms of time, data sourcing takes up around 26% of an organization’s AI effort, compared to 24% for data preparation, 23% for model testing and review, and 17% for model deployment. Finally, 42% of engineers regard data sourcing as the most difficult stage of the AI lifecycle, compared with 41% for model review, 38% for model testing and deployment, and 34% for data preparation.

Despite the difficulties, organizations are managing. According to Appen, four out of five (81%) survey respondents feel that they have access to enough data to support their AI initiatives. One possible key to that success: the overwhelming majority (88%) are augmenting their data by using third-party providers of AI training data (such as Appen).

However, the accuracy of that data is in doubt. Only 20% of survey respondents reported data accuracy rates higher than 80%, according to Appen’s research, and only 6%, or roughly one in twenty, said their data accuracy is 90% or higher. In other words, for about 80% of firms, at least one in five pieces of data is inaccurate.
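The one-in-five figure follows directly from the accuracy rates quoted above. As a quick check, the short sketch below converts an accuracy rate into the share of records that are wrong; the helper name `error_share` is made up for illustration and does not come from Appen’s report.

```python
from fractions import Fraction

def error_share(accuracy_percent):
    """Return the fraction of records that are wrong at a given accuracy rate."""
    return 1 - Fraction(accuracy_percent, 100)

# An 80% accuracy rate leaves one record in five wrong.
print(error_share(80))  # 1/5

# A 90% accuracy rate leaves one record in ten wrong.
print(error_share(90))  # 1/10
```

Any firm at or below 80% accuracy therefore has at least one inaccurate record in every five.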

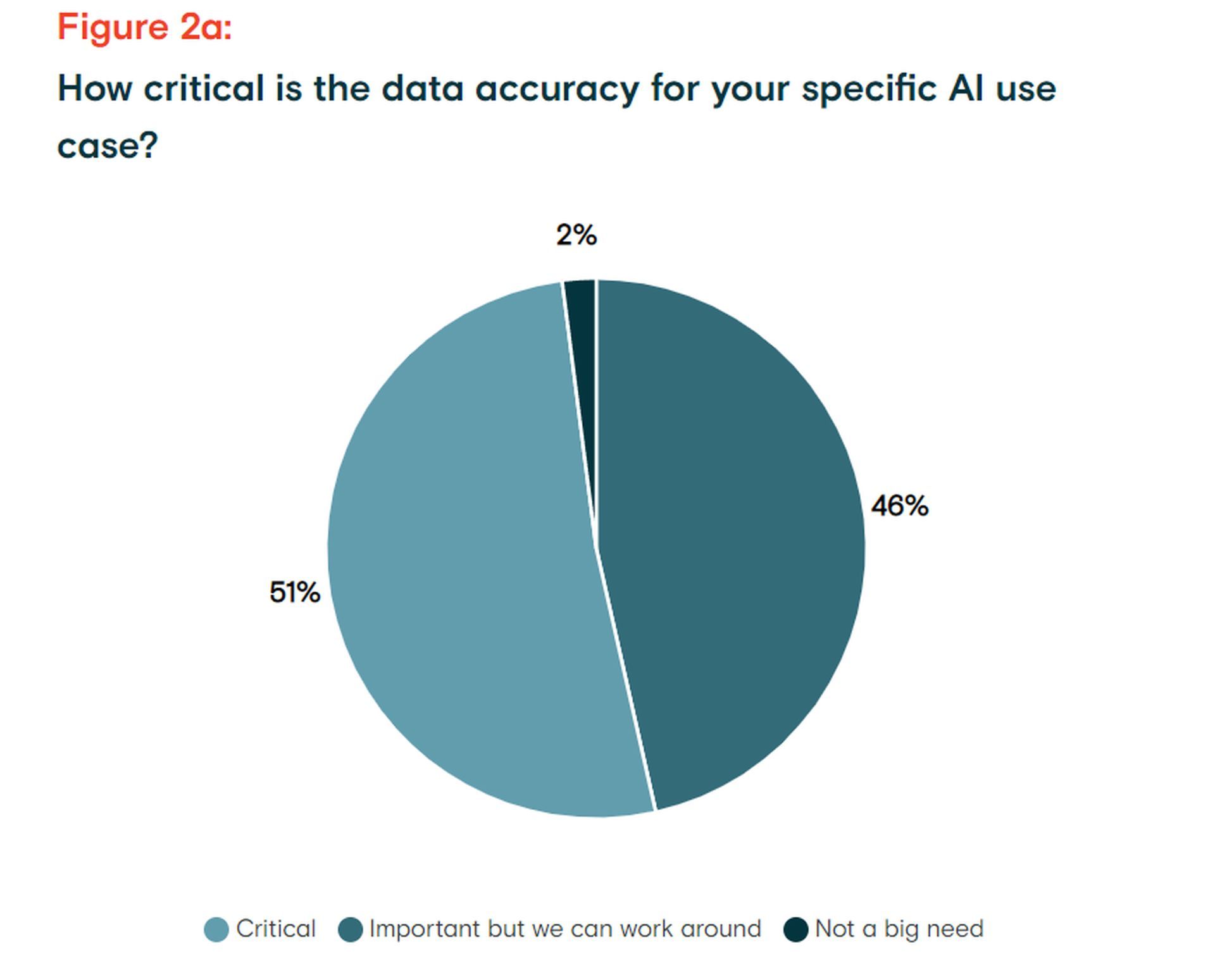

With that in mind, it is perhaps not surprising that, according to Appen’s survey, nearly half (46%) of respondents agree that data accuracy is important, “but we can work around it.” Only 2% of respondents disagree that there is a critical need for data accuracy, while 51% agree.

Wilson Pang, Appen’s chief technology officer, seems to view data quality differently than the 48% of his clients who do not consider accuracy critical.

“Data accuracy is critical to the success of AI and ML models, as qualitatively rich data yields better model outputs and consistent processing and decision-making,” Pang says in the report. “For good results, datasets must be accurate, comprehensive, and scalable.”

With the rise of deep learning and data-centric AI, the success of AI now depends more on effective data sourcing, collection, management, and labeling than on sound data science and machine learning models. This is especially true with today’s transfer learning techniques, where AI practitioners lop off the top of a sizable pre-trained language or computer vision model and retrain only some of the layers with their own data.
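As a rough illustration of the transfer learning idea, the sketch below keeps a stand-in for the pre-trained layers frozen and trains only a new output layer on a handful of labeled points. It is a toy in plain Python, not how Appen or any particular framework does it: the names `FROZEN_WEIGHTS`, `frozen_features`, `train_head`, and `predict`, the tiny dataset, and the learning rate are all assumptions made for illustration.

```python
import math

# Hypothetical stand-in for pre-trained layers: fixed weights that are never updated.
FROZEN_WEIGHTS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

def frozen_features(x):
    """Run an input through the frozen layer (a fixed tanh layer)."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]

def train_head(samples, labels, epochs=100, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    head = [0.0] * len(FROZEN_WEIGHTS)
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            h = frozen_features(x)
            z = sum(w * hi for w, hi in zip(head, h)) + bias
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of class 1
            err = p - y                      # gradient of the log loss w.r.t. z
            head = [w - lr * err * hi for w, hi in zip(head, h)]
            bias -= lr * err
    return head, bias

def predict(x, head, bias):
    """Classify an input using the frozen features and the trained head."""
    h = frozen_features(x)
    z = sum(w * hi for w, hi in zip(head, h)) + bias
    return 1 if z > 0 else 0

# Toy "own data": the label is 1 when the two coordinates sum to a positive number.
SAMPLES = [(1.0, 1.0), (2.0, 0.5), (0.5, 1.5), (-1.0, -1.0), (-2.0, -0.5), (-0.5, -1.5)]
LABELS = [1, 1, 1, 0, 0, 0]

head, bias = train_head(SAMPLES, LABELS)
print([predict(x, head, bias) for x in SAMPLES])
```

Because only the small head is fitted, the outcome depends almost entirely on the labeled examples supplied, which is why data quality matters so much in this setting.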

Rich data can also aid in preventing unintentional bias from entering AI models and, in general, preventing AI from producing undesirable results. Ilia Shifrin, senior director of AI specialists at Appen, claims that this is especially true of large language models.

“With the rise of large language models (LLM) trained on multilingual web crawl data, companies are facing yet another challenge. These models oftentimes exhibit undesirable behavior due to the abundance of toxic language, as well as racial, gender, and religious biases in the training corpora,” said Shifrin.

Although there are several solutions (modifying training regimens, filtering training data and model outputs, and learning from human input and testing), Shifrin argues that additional research is required to establish a good benchmark for “human-centric LLM” procedures and model evaluation standards.

Data management continues to be the top obstacle for AI, according to Appen: 41% of those working in the AI loop name it as their biggest challenge. Lack of data ranked fourth, cited as the biggest barrier to AI success by 30% of respondents.

But how are businesses dealing with data sourcing?

There is some positive news: businesses are spending less time organizing and maintaining their data. According to Appen, that work took up a little more than 47% of their time this year, as opposed to 53% the previous year.

“With a large majority of respondents using external data providers, it can be inferred that by outsourcing data sourcing and preparation, data scientists are saving the time needed to properly manage, clean, and label their data,” the company said.

But perhaps firms shouldn’t be scaling back their data sourcing and preparation processes, given the relatively high prevalence of inaccuracies in the data (whether internal or external). Building and maintaining an AI process involves several competing needs, and Appen notes that hiring qualified data specialists is another top one. Even so, businesses should keep pressing their teams to prioritize data quality until sufficient progress in data management has been made.

In addition, the poll revealed that 93% of businesses strongly or somewhat concur that ethical AI should serve as the “basis” for all AI initiatives. According to Mark Brayan, CEO of Appen, that is a promising beginning, but there is still work to be done.

“The problem is, many are facing the challenges of trying to build great AI with poor datasets, and it’s creating a significant roadblock to reaching their goals,” explained Brayan.

According to Appen’s analysis, internal, custom-collected data continues to make up the largest share of enterprises’ data sets used for AI, accounting for 38% to 42% of the data. Surprisingly, synthetic data made a big showing, accounting for 24% to 38% of the data in organizations, while pre-labeled data, often from a data service provider, accounted for 23% to 31%.

Synthetic data in particular, which 97% of respondents to Appen’s survey said they use “in developing inclusive training data sets,” has the potential to reduce bias in sensitive AI projects.

Some key points from the report suggest that:

- 77% of organizations retrain their models monthly or quarterly;

- 55% of US organizations claim they’re ahead of competitors versus 44% in Europe;

- 42% of organizations report “widespread” AI rollouts versus 51% in the 2021 State of AI report;

- 7% of organizations report having an AI budget over $5 million, compared to 9% last year.

Read Appen’s State of AI and Machine Learning report here.