Explanation techniques that help users understand and trust machine-learning models often describe how much specific features used in the model contribute to its prediction. For instance, a doctor may want to know how much a patient’s heart rate data influences a model that predicts the patient’s risk of developing cardiac disease.

But if those features are themselves hard for the end user to understand, does the explanation technique actually help?
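For context, the simplest case of the feature-contribution explanations described above is a linear model, where each feature’s share of a prediction can be computed directly. The sketch below assumes such a model; the feature names, weights, and baseline patient are hypothetical, and widely used techniques such as SHAP extend this idea to non-linear models.

```python
# Hypothetical linear risk model: every weight, baseline value, and feature
# name below is invented for illustration, not taken from a real clinical model.
WEIGHTS = {"mean_heart_rate": 0.03, "age_years": 0.02, "prior_surgeries": 0.4}
BASELINE = {"mean_heart_rate": 75.0, "age_years": 60.0, "prior_surgeries": 0.0}


def contributions(patient: dict[str, float]) -> dict[str, float]:
    """Return each feature's additive contribution to the risk score,
    measured relative to a reference (baseline) patient.

    For a linear model score = sum(w_i * x_i) + b, the difference from the
    baseline score splits exactly into w_i * (x_i - baseline_i) per feature.
    """
    return {
        name: WEIGHTS[name] * (patient[name] - BASELINE[name])
        for name in WEIGHTS
    }


patient = {"mean_heart_rate": 95.0, "age_years": 70.0, "prior_surgeries": 1.0}
# List features from largest to smallest absolute contribution.
for name, value in sorted(contributions(patient).items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>16}: {value:+.2f}")
```

Whatever the method, the output is only as useful as the feature names in it: a contribution of +0.60 for `mean_heart_rate` helps a doctor only if it is clear what that feature measures and how it was computed.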

A taxonomy is created to improve the interpretability of ML features

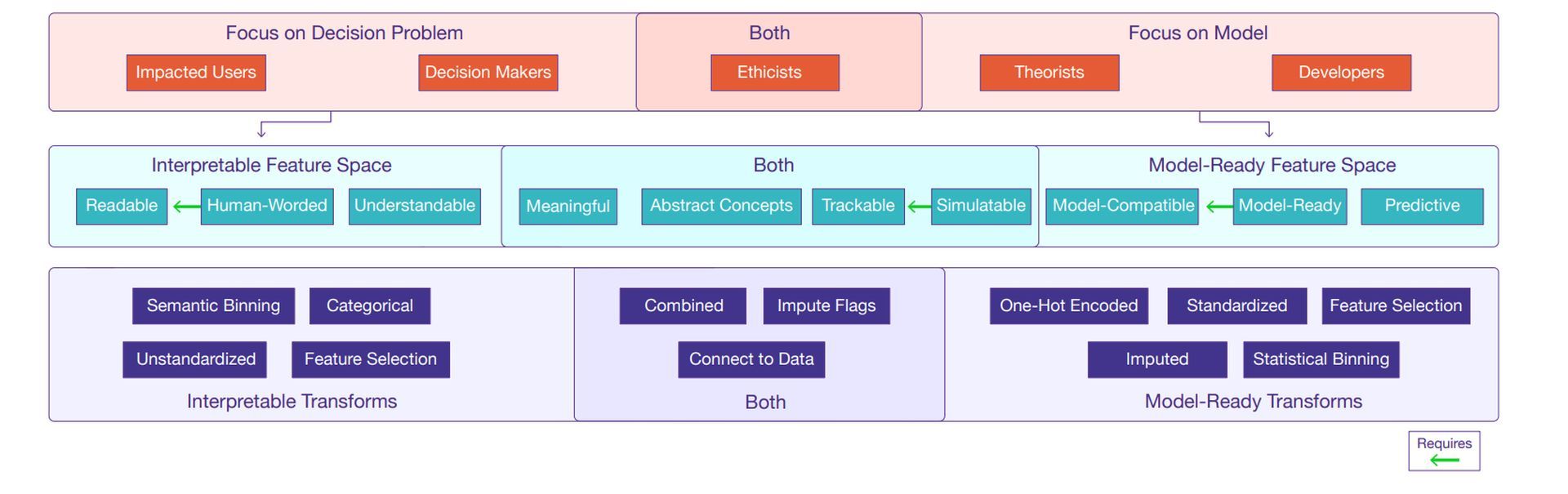

To help decision-makers make better use of the results of machine-learning algorithms, MIT researchers are working to make features more interpretable. Drawing on years of fieldwork, they developed a taxonomy to help developers craft features that their target audience will find easier to understand.

“We found that out in the real world, even though we were using state-of-the-art ways of explaining machine-learning models, there is still a lot of confusion stemming from the features, not from the model itself,” says Alexandra Zytek, an electrical engineering and computer science PhD student and lead author of a paper introducing the taxonomy.

To create the taxonomy, the researchers identified the properties that make features interpretable for five types of users, ranging from artificial intelligence experts to people affected by a machine-learning model’s prediction. They also offer guidance on how model creators can transform features into formats that laypeople can more easily understand.

They hope their work will encourage model developers to consider interpretable features from the start, rather than working backward and focusing on explainability after the fact.

Zytek’s co-authors on the paper are Dongyu Liu, a postdoc; Laure Berti-Équille, a visiting professor at MIT and research director at IRD; and Kalyan Veeramachaneni, a principal research scientist at the Laboratory for Information and Decision Systems (LIDS) and head of the Data to AI group. They are joined by Ignacio Arnaldo, a principal data scientist at Corelight. The research appears in the June edition of the peer-reviewed Explorations Newsletter of the Association for Computing Machinery Special Interest Group on Knowledge Discovery and Data Mining.

Background of the study

Features are the input variables fed to machine-learning models, and they are often drawn from the columns of a dataset. According to Veeramachaneni, data scientists typically select and hand-craft the model’s features, focusing mainly on whether those features improve model accuracy rather than on whether a decision-maker can understand them.

For several years, he and his team have worked with decision-makers to address machine-learning usability issues. These domain experts, most of whom lack machine-learning expertise, often do not trust models because they do not understand the features that influence predictions.

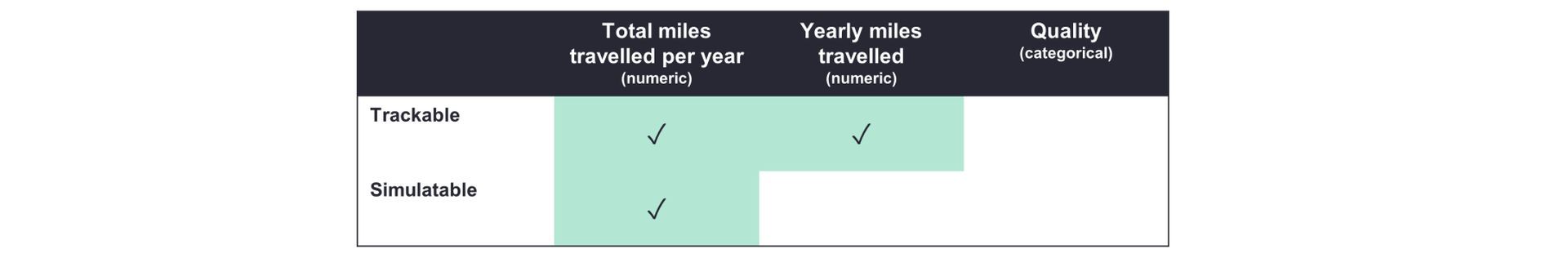

In one project, they worked with physicians in a hospital intensive care unit (ICU) who used machine learning to predict the risk that a patient would face complications after cardiac surgery. Some features were presented as aggregated values, such as the trend of a patient’s heart rate over time. Although features defined this way were “model ready” (the model could process the data), the physicians did not understand how they were computed. According to Liu, the physicians would rather see how these aggregated features relate to the original measurements, so they could spot anomalies in a patient’s heart rate.
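The contrast Liu describes can be sketched as follows: compute the aggregated, model-ready features (a mean and a linear trend) but keep the raw readings they came from alongside them, so each aggregate can be traced back. This is a minimal illustration assuming evenly spaced readings in beats per minute; the function and field names are hypothetical, and the study does not say how its heart rate trend was calculated.

```python
from statistics import mean


def linear_slope(values: list[float]) -> float:
    """Least-squares slope of the values against their index (0, 1, 2, ...),
    i.e. the average change per reading."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two readings to compute a trend")
    xs = range(n)
    x_bar = (n - 1) / 2
    y_bar = mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


def heart_rate_features(readings: list[float]) -> dict:
    """Model-ready aggregates plus the raw readings they were computed from,
    so a clinician can check each aggregate against the measurements."""
    return {
        "hr_mean": mean(readings),
        "hr_trend_per_reading": linear_slope(readings),
        "hr_raw": list(readings),  # kept for display, not fed to the model
    }


readings = [72.0, 74.0, 79.0, 85.0, 90.0]  # hypothetical hourly readings (bpm)
features = heart_rate_features(readings)
print(features["hr_mean"], round(features["hr_trend_per_reading"], 2), features["hr_raw"])
```

A single trend value hides whether the rise was steady or sudden; showing `hr_raw` next to it lets a physician see that directly.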

A group of learning scientists, by contrast, preferred features that were aggregated. Instead of a feature like “number of posts a student made on discussion forums,” they would rather have related features grouped together and labeled with terms they recognized, like “participation.”
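One way to produce the kind of grouped, human-labeled feature the learning scientists asked for is to roll several low-level counts up into a single named score. The sketch below assumes a simple average of counts scaled by class-wide maxima; the feature names, the groups, and the scaling scheme are all hypothetical, not the researchers’ method.

```python
# Hypothetical low-level features for one student.
raw_features = {
    "forum_posts": 12,
    "forum_replies": 30,
    "videos_watched": 18,
    "quiz_attempts": 5,
}

# Hypothetical grouping: which low-level features roll up into each
# human-named concept.
GROUPS = {
    "participation": ["forum_posts", "forum_replies"],
    "engagement with course material": ["videos_watched", "quiz_attempts"],
}

# Hypothetical class-wide maxima used for scaling each count to 0-1.
MAX_VALUES = {"forum_posts": 20, "forum_replies": 40, "videos_watched": 24, "quiz_attempts": 10}


def group_features(
    features: dict[str, float],
    groups: dict[str, list[str]],
    max_values: dict[str, float],
) -> dict[str, float]:
    """Combine each group's features into one 0-1 score: divide each count by
    its maximum, then average the scaled values within the group."""
    scores = {}
    for label, names in groups.items():
        scaled = [features[n] / max_values[n] for n in names]
        scores[label] = sum(scaled) / len(scaled)
    return scores


scores = group_features(raw_features, GROUPS, MAX_VALUES)
print(scores)
```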

“With interpretability, one size doesn’t fit all. When you go from area to area, there are different needs. And interpretability itself has many levels,” explained Veeramachaneni.

The idea that one size does not fit all is the cornerstone of the researchers’ taxonomy. The taxonomy defines properties that can make features more or less interpretable for different decision-makers and outlines which properties are likely to matter most to specific users.

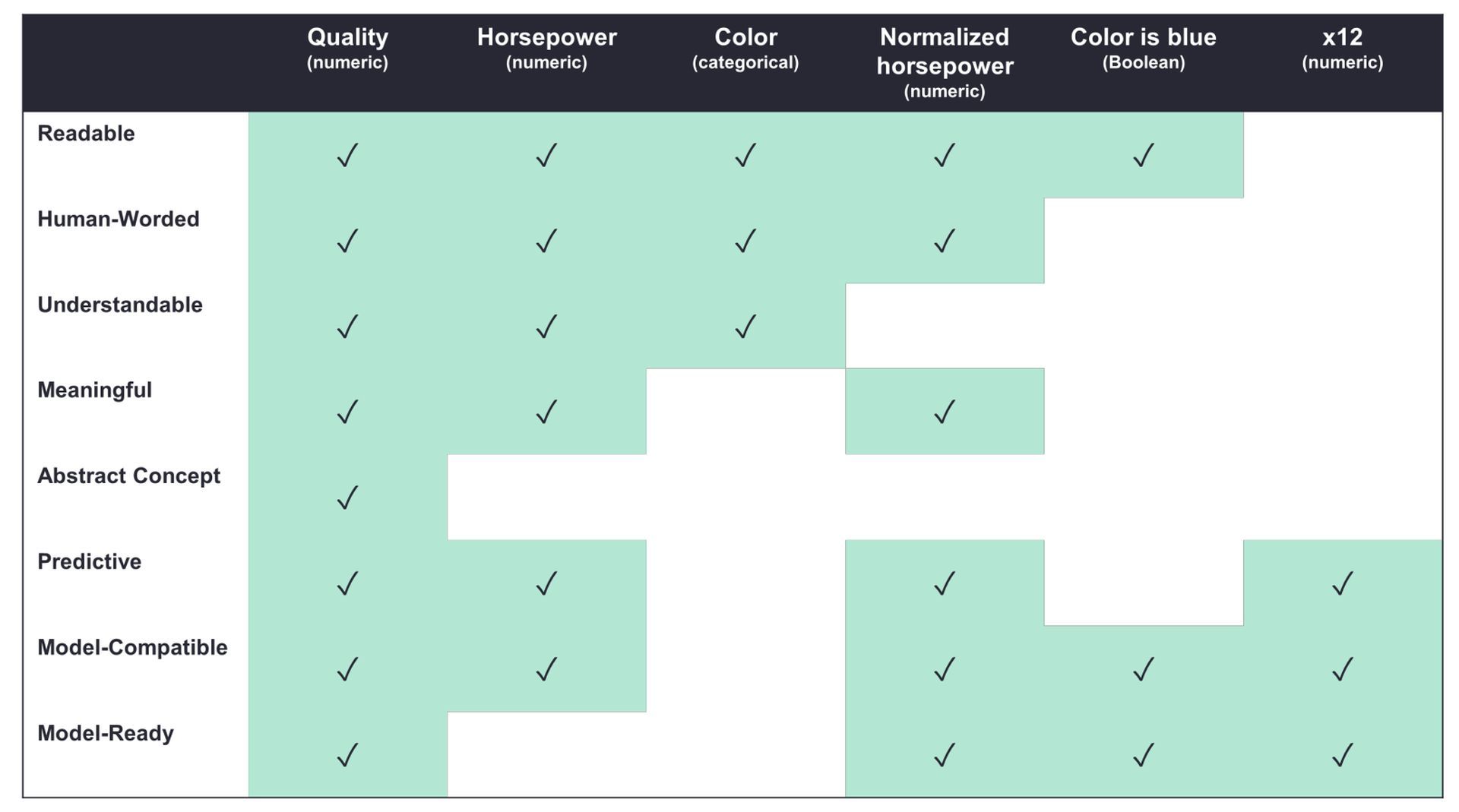

For instance, machine-learning developers might prioritize features that are model-compatible and predictive, since such features are expected to improve the model’s performance.

Decision-makers with no machine-learning experience, however, might be better served by features that are human-worded, meaning they are described in language that is natural for users.
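To make the idea concrete, the sketch below records a few properties per feature and filters features by what a given user type prioritizes. It is illustrative only: it uses just the three properties named above (model-compatible, predictive, human-worded) and the two user types mentioned, the example features are hypothetical, and the researchers’ actual taxonomy defines more properties and user types than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    # Flags for the three properties named in the text; the full taxonomy
    # defines more than these.
    model_compatible: bool
    predictive: bool
    human_worded: bool


# Hypothetical assignment of priorities to the two user types mentioned above.
PRIORITIES = {
    "ml_developer": ("model_compatible", "predictive"),
    "lay_decision_maker": ("human_worded",),
}


def suited_to(features: list[Feature], user_type: str) -> list[str]:
    """Names of features that have every property the user type prioritizes."""
    wanted = PRIORITIES[user_type]
    return [f.name for f in features if all(getattr(f, p) for p in wanted)]


features = [
    # Encoded column: ready for the model, opaque to a lay reader.
    Feature("age_bin_onehot_3", model_compatible=True, predictive=True, human_worded=False),
    # Plain-language category: readable, but must be encoded before modeling.
    Feature("age group (infant, toddler, child, teen)",
            model_compatible=False, predictive=True, human_worded=True),
]
print(suited_to(features, "ml_developer"))
print(suited_to(features, "lay_decision_maker"))
```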

“The taxonomy says, if you are making interpretable features, to what level are they interpretable? You may not need all levels, depending on the type of domain experts you are working with,” said Zytek.

Interpretability of ML features for professionals

To make features easier for a particular audience to understand, the researchers also provide feature engineering strategies that developers can use.

During feature engineering, data scientists transform data so that machine-learning models can process it, using techniques such as aggregating data or normalizing values. Most models also cannot handle categorical data unless it is first converted to a numerical code. These transformations are often nearly impossible for laypeople to unpack.
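Two of the most common transformations of this kind are z-score normalization of a numeric feature and one-hot encoding of a categorical one. This minimal sketch, with hypothetical data and helper names, shows both the model-ready form and how each step can be reversed to recover values a person can read.

```python
from statistics import mean, pstdev


def zscore(values: list[float]) -> tuple[list[float], float, float]:
    """Normalize values to mean 0 and standard deviation 1.
    Returns the scaled values plus the mean and std needed to undo it."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        raise ValueError("cannot normalize values with zero spread")
    return [(v - mu) / sigma for v in values], mu, sigma


def undo_zscore(z: float, mu: float, sigma: float) -> float:
    """Map a normalized value back to its original units."""
    return z * sigma + mu


def one_hot(value: str, categories: list[str]) -> list[int]:
    """Encode a category as a vector with a single 1 (one-hot encoding)."""
    return [1 if value == c else 0 for c in categories]


def undo_one_hot(vector: list[int], categories: list[str]) -> str:
    """Recover the category name from a one-hot vector."""
    return categories[vector.index(1)]


heart_rates = [60.0, 70.0, 80.0, 90.0, 100.0]  # hypothetical readings (bpm)
scaled, mu, sigma = zscore(heart_rates)
print(round(scaled[-1], 3))                # model-ready, opaque to a lay reader
print(undo_zscore(scaled[-1], mu, sigma))  # back in beats per minute

CATEGORIES = ["emergency", "elective", "urgent"]  # hypothetical admission types
encoded = one_hot("urgent", CATEGORIES)
print(encoded, undo_one_hot(encoded, CATEGORIES))
```

Reversing a transformation requires keeping its parameters (here `mu`, `sigma`, and the category order), which is why the helpers return or accept them explicitly.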

According to Zytek, creating interpretable features may require undoing some of that encoding. For example, a common feature engineering technique splits data into spans that each cover the same number of years. To make these features more interpretable, one could instead group age ranges using human terms, such as infant, toddler, child, and teen. Or, as Liu points out, an interpretable feature might be the raw pulse rate data rather than a processed feature such as average pulse rate.
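The two age encodings described above can be compared side by side. In this minimal sketch, the 5-year bin width and the life-stage cutoffs are assumptions (the article names the groups but not their boundaries), and the "adult" fallback is a hypothetical addition for ages past the teen years.

```python
def equal_width_bin(age_years: float, width: int = 5) -> str:
    """Typical engineered feature: bins that each span the same number of years."""
    start = int(age_years // width) * width
    return f"{start}-{start + width - 1}"


# Hypothetical cutoffs as (exclusive upper bound in years, label).
LIFE_STAGES = [(1, "infant"), (4, "toddler"), (13, "child"), (20, "teen")]


def life_stage(age_years: float) -> str:
    """Interpretable alternative: group ages using everyday terms."""
    for upper, label in LIFE_STAGES:
        if age_years < upper:
            return label
    return "adult"  # hypothetical fallback, not one of the article's groups


for age in [0.5, 2, 9, 16]:
    print(age, equal_width_bin(age), life_stage(age))
```

With 5-year bins, a 6-month-old and a 2-year-old land in the same `0-4` bin, while the life-stage grouping separates them into categories a caseworker or parent would recognize.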

“In a lot of domains, the tradeoff between interpretable features and model accuracy is actually very small. When we were working with child welfare screeners, for example, we retrained the model using only features that met our definitions for interpretability, and the performance decrease was almost negligible,” explained Zytek.

Building on this work, the researchers are developing a system that lets model developers handle complicated feature transformations more efficiently and produce human-centered explanations for machine-learning models.

The new system will also convert algorithms designed to explain model-ready datasets into formats that decision-makers can understand, making it possible to improve the interpretability of ML features for everyone.
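The article does not describe how the system performs this translation. One common approach, sketched here as an assumption rather than the researchers’ design, is to record which original feature each model-ready column was derived from and then sum the explanation scores of those columns back into the original feature. Summing keeps the explanation additive (the grouped scores still add up to the same total), which is why it is often used with methods such as SHAP. All column names and scores below are hypothetical.

```python
from collections import defaultdict


def aggregate_attributions(
    column_attributions: dict[str, float],
    column_to_feature: dict[str, str],
) -> dict[str, float]:
    """Sum the attributions of model-ready columns into the original
    features they were derived from."""
    totals: dict[str, float] = defaultdict(float)
    for column, value in column_attributions.items():
        # Columns with no recorded source are reported under their own name.
        totals[column_to_feature.get(column, column)] += value
    return dict(totals)


# Hypothetical attributions for one prediction, computed on model-ready columns.
column_attributions = {
    "admission_emergency": 0.10,
    "admission_elective": -0.02,
    "admission_urgent": 0.05,
    "hr_zscore": 0.30,
}
# Record of which original feature each engineered column came from.
column_to_feature = {
    "admission_emergency": "admission type",
    "admission_elective": "admission type",
    "admission_urgent": "admission type",
    "hr_zscore": "heart rate",
}
print(aggregate_attributions(column_attributions, column_to_feature))
```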