Machine learning (ML) can assist human decision-makers in high-stakes situations. For example, an ML model might predict which law school applicants are most likely to pass the bar exam, helping an admissions officer decide which students to admit. Other work has suggested that ML systems can detect deadly earthquakes quickly.

MIT researchers examined explanation methods

These models typically have millions of parameters, so how they reach their conclusions is nearly impossible for researchers to fully comprehend, let alone an admissions officer with no machine-learning expertise. Researchers sometimes use explanation methods that mimic a larger model by generating simple approximations of its predictions. These approximations are considerably easier to understand and help users decide whether to trust the model’s predictions.
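To make the idea of a simple approximation concrete, here is a minimal sketch of a surrogate explanation. It is not the method used in the study: `black_box` is an invented scoring rule standing in for a complex model, and `fit_stump` is a hypothetical helper that searches for the single-feature threshold rule that best reproduces the black box's outputs.

```python
import math

def black_box(x):
    """Stand-in for a complex model: returns 1 (pass) or 0 (fail)."""
    # Hypothetical non-linear scoring rule, invented purely for illustration.
    score = 0.8 * x[0] + 0.3 * math.sin(3 * x[1]) + 0.1 * x[0] * x[1]
    return 1 if score > 0.5 else 0

def fit_stump(points, labels):
    """Return (agreement, feature, threshold) for the best one-feature rule."""
    best = None
    for feature in range(len(points[0])):
        for threshold in sorted({p[feature] for p in points}):
            preds = [1 if p[feature] > threshold else 0 for p in points]
            agreement = sum(a == b for a, b in zip(preds, labels)) / len(labels)
            if best is None or agreement > best[0]:
                best = (agreement, feature, threshold)
    return best

# A small grid of inputs in [0, 1] x [0, 1].
grid = [(i / 10, j / 10) for i in range(11) for j in range(11)]
# The surrogate is fit to the black box's outputs, not to ground-truth labels.
targets = [black_box(p) for p in grid]

agreement, feature, threshold = fit_stump(grid, targets)
print(f"surrogate rule: predict 1 if x[{feature}] > {threshold}")
print(f"agreement with black box: {agreement:.1%}")
```

The one-line rule is easy to read, but it does not agree with the black box everywhere, which is exactly the kind of approximation error the rest of the article is concerned with.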

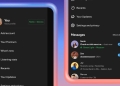

But are these explanation methods fair? If an explanation method produces better approximations for men than for women, or for white people than for Black people, it may lead users to trust the model’s predictions for some people but not for others.

MIT researchers took a close look at some widely used explanation methods. They found that the approximation quality of these explanations can vary considerably across subgroups and is often significantly lower for minority subgroups.

In practice, this means that if the approximation quality is lower for female applicants than for male applicants, the explanations will match the model’s predictions less closely for women than for men.

Once the researchers saw how widespread these gaps are, they tried several techniques to level the playing field. They were able to narrow some gaps but could not eliminate them.

“What this means in the real world is that people might incorrectly trust predictions more for some subgroups than for others. So, improving explanation methods is important, but communicating the details of these models to end-users is equally important. These gaps exist, so users may want to adjust their expectations as to what they are getting when they use these explanations,” explained Aparna Balagopalan, the lead author of the study and a graduate student in the Healthy ML group of the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL).

Balagopalan wrote the paper with CSAIL graduate students Haoran Zhang and Kimia Hamidieh; CSAIL postdoc Thomas Hartvigsen; Frank Rudzicz, an associate professor of computer science at the University of Toronto; and senior author Marzyeh Ghassemi, an assistant professor at MIT and head of the Healthy ML Group. The work will be presented at the ACM Conference on Fairness, Accountability, and Transparency.

Can we fully trust ML models?

Simplified explanation methods approximate the predictions of a more complicated machine-learning model in a way that humans can comprehend. An effective explanation method maximizes a property known as fidelity, which measures how well its approximations match the larger model’s predictions.
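One simple way to quantify fidelity, sketched below, is the fraction of instances on which the explanation model and the larger model make the same prediction. This agreement rate is an assumption chosen for illustration; the study may use other fidelity measures, and the prediction lists are made up.

```python
def fidelity(model_preds, explainer_preds):
    """Fraction of instances where the explanation model agrees with the larger model."""
    if len(model_preds) != len(explainer_preds):
        raise ValueError("prediction lists must have the same length")
    if not model_preds:
        raise ValueError("need at least one prediction")
    matches = sum(m == e for m, e in zip(model_preds, explainer_preds))
    return matches / len(model_preds)

# Made-up predictions for eight applicants (1 = pass, 0 = fail).
model_preds     = [1, 0, 1, 1, 0, 1, 0, 0]
explainer_preds = [1, 0, 1, 0, 0, 1, 1, 0]

overall = fidelity(model_preds, explainer_preds)
print(overall)  # 6 of 8 predictions agree -> 0.75
```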

Instead of focusing on average fidelity for the overall explanation model, the MIT researchers studied fidelity for specific subgroups of people in the dataset. In a dataset with men and women, for example, fidelity should be very similar for each group, and both groups should have fidelity close to that of the overall explanation model.

“When you are just looking at the average fidelity across all instances, you might be missing out on artifacts that could exist in the explanation model,” said Balagopalan.

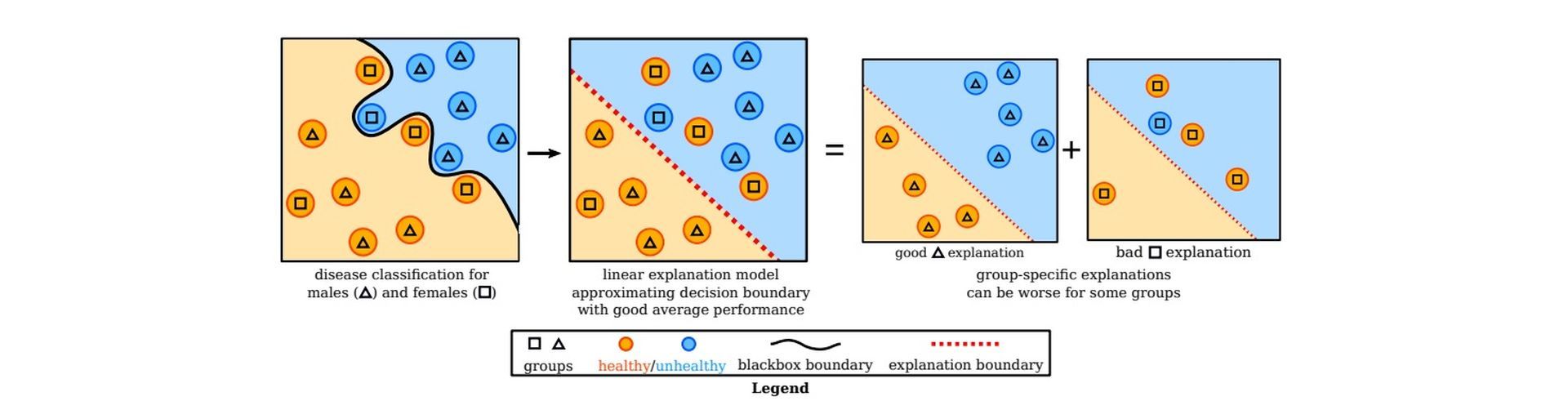

The researchers created two fidelity-gap metrics to measure the differences in fidelity between subgroups. The first is the difference between the overall explanation model’s fidelity and the fidelity for the worst-performing subgroup. The second computes the absolute difference in fidelity between every possible pair of subgroups and then averages the results.
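The two metrics can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' code: fidelity is taken to be a plain agreement rate, the function names are hypothetical, and the predictions and group labels are made up.

```python
from itertools import combinations

def subgroup_fidelities(model_preds, explainer_preds, groups):
    """Agreement rate between explainer and model, computed per subgroup."""
    by_group = {}
    for m, e, g in zip(model_preds, explainer_preds, groups):
        by_group.setdefault(g, []).append(m == e)
    return {g: sum(hits) / len(hits) for g, hits in by_group.items()}

def worst_case_gap(overall_fidelity, per_group):
    """Overall fidelity minus the fidelity of the worst-performing subgroup."""
    return overall_fidelity - min(per_group.values())

def mean_pairwise_gap(per_group):
    """Average absolute fidelity difference over all pairs of subgroups."""
    pairs = list(combinations(per_group.values(), 2))
    return sum(abs(a - b) for a, b in pairs) / len(pairs)

# Made-up predictions for ten people in three subgroups.
model_preds     = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
explainer_preds = [1, 0, 1, 1, 0, 1, 1, 0, 1, 0]
groups          = ["A", "A", "A", "A", "B", "B", "B", "B", "C", "C"]

per_group = subgroup_fidelities(model_preds, explainer_preds, groups)
overall = sum(m == e for m, e in zip(model_preds, explainer_preds)) / len(model_preds)

print(per_group)                            # A: 1.0, B: 0.75, C: 0.5
print(round(worst_case_gap(overall, per_group), 3))   # 0.8 - 0.5 = 0.3
print(round(mean_pairwise_gap(per_group), 3))         # (0.25 + 0.5 + 0.25) / 3 = 0.333
```

In this toy data the overall fidelity of 0.8 looks respectable, yet subgroup C is matched only half the time, which is the kind of disparity an average hides.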

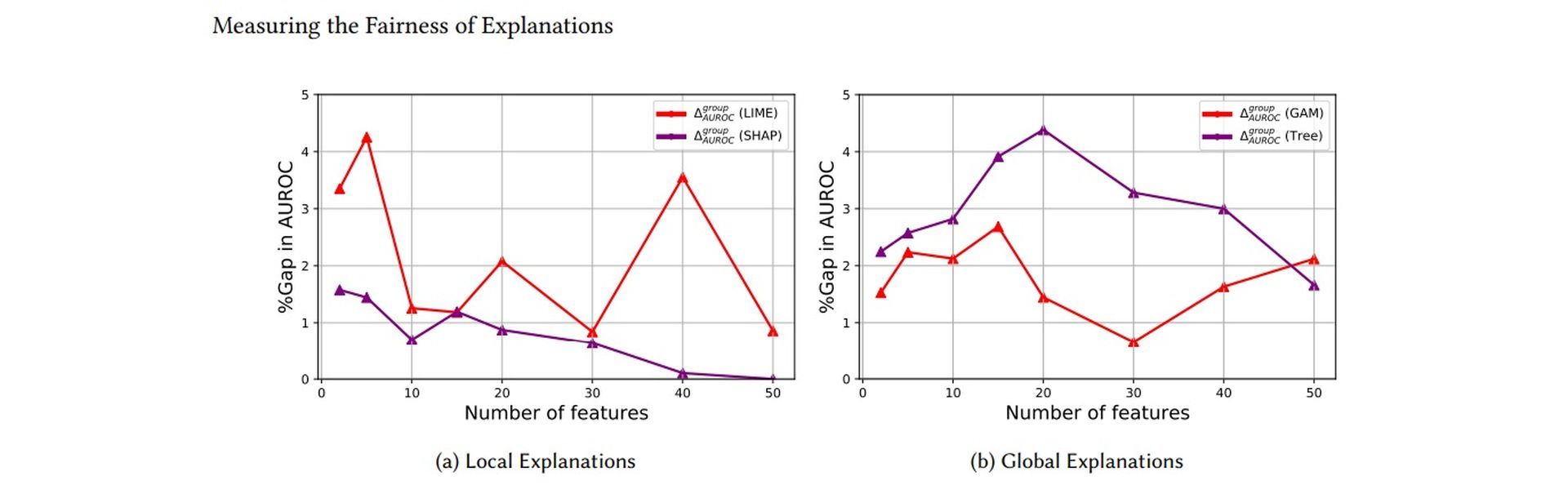

To search for fidelity gaps, they used two types of explanation methods trained on four real-world datasets for high-stakes situations, such as predicting whether a patient dies in the ICU, whether a defendant reoffends, or whether a law school applicant will pass the bar exam. Each dataset contained protected attributes, such as the sex and race of each individual. Protected attributes are characteristics that may not be used in decisions, often because of laws or organizational policies. Their exact definition can vary depending on the decision setting.

The researchers found clear fidelity gaps for all datasets and explanation methods. In some cases, fidelity for disadvantaged groups was considerably lower, with one example showing a gap of 21%. The law school dataset had a fidelity gap of 7% between race subgroups, meaning the approximations for some subgroups were wrong 7% more often on average. If there are 10,000 applicants from these subgroups in the dataset, for example, a significant portion could be wrongly rejected.
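A quick back-of-the-envelope calculation shows what a 7-point gap means in absolute numbers. Everything except the 7-point gap and the 10,000 total is a hypothetical assumption: the even split into two subgroups and the 90% and 83% fidelity values are invented for illustration, and a mismatch between explanation and model is not necessarily a wrongful rejection.

```python
# Hypothetical setup: 10,000 applicants split evenly across two subgroups.
applicants_per_group = 5_000

# Hypothetical fidelity values (in percent) that differ by 7 points.
fidelity_pct = {"group_a": 90, "group_b": 83}

# Number of applicants per group whose explanation disagrees with the model.
mismatches = {
    group: applicants_per_group * (100 - pct) // 100
    for group, pct in fidelity_pct.items()
}
extra = mismatches["group_b"] - mismatches["group_a"]

print(mismatches)  # {'group_a': 500, 'group_b': 850}
print(extra)       # 350 more mismatched explanations for group_b
```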

“I was surprised by how pervasive these fidelity gaps are in all the datasets we evaluated. It is hard to overemphasize how commonly explanations are used as a ‘fix’ for black-box machine-learning models. In this paper, we are showing that the explanation methods themselves are imperfect approximations that may be worse for some subgroups,” explained Ghassemi.

The researchers then tried several machine-learning techniques to close the fidelity gaps. They trained the explanation methods to identify regions of a dataset that might be prone to low fidelity and then focus more on those samples. They also experimented with balanced datasets that contained an equal number of samples from every subgroup.
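As a rough sketch of the balancing idea, the snippet below oversamples smaller subgroups until every subgroup has as many rows as the largest one. The article does not say how the researchers built their balanced datasets, so random oversampling, the `balance_by_group` helper, and the toy rows are all assumptions.

```python
import random
from collections import Counter, defaultdict

def balance_by_group(rows, group_key, seed=0):
    """Oversample smaller subgroups so every subgroup has the same number of rows."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    by_group = defaultdict(list)
    for row in rows:
        by_group[row[group_key]].append(row)
    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Draw extra rows with replacement until the group reaches the target size.
        balanced.extend(rng.choices(members, k=target - len(members)))
    rng.shuffle(balanced)
    return balanced

# Toy dataset: six rows from subgroup "A", two from subgroup "B".
rows = [{"group": "A", "score": s} for s in (0.2, 0.4, 0.5, 0.7, 0.8, 0.9)]
rows += [{"group": "B", "score": s} for s in (0.3, 0.6)]

balanced = balance_by_group(rows, "group")
counts = Counter(row["group"] for row in balanced)
print(counts["A"], counts["B"])  # 6 6
```

An explanation model trained on `balanced` sees each subgroup equally often, though as the article notes below, this kind of rebalancing narrowed the gaps without removing them.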

These training methods reduced some fidelity gaps, but they didn’t eliminate them. The researchers then modified the explanation methods to explore why fidelity gaps arise in the first place. Their analysis revealed that an explanation model may indirectly use protected group information, such as sex or race, that it can learn from the dataset even when group labels are hidden, and that this can cause fidelity gaps.

The researchers want to dig deeper into this problem and have started a new research project to do so. They also plan to study the consequences of fidelity gaps in real-world decision-making. Balagopalan is pleased that concurrent work by an independent lab has arrived at similar findings, which underscores how important it is to understand this issue thoroughly.

“Choose the explanation model carefully. But even more importantly, think carefully about the goals of using an explanation model and who it eventually affects,” added Balagopalan. Other research has shown that undetectable backdoors can be planted in ML models, another reason why addressing these issues matters for everyone’s well-being and security.