Some days are so wild that I wish I could clone myself to keep up with everything. Well, you and I weren’t the only ones thinking about this. Artificial intelligence, which is notoriously disrupting the world nowadays, was born from the idea of cloning human beings mentally instead of physically. And the result is tremendous… From the timely collection of our garbage to challenging and reversing a bad decision that would otherwise lead a company to disaster a decade later, artificial intelligence adds tremendous power to humanity across a spectrum wide enough, ironically, to push the limits of my feeble mortal mind.

Precursors of artificial intelligence

Long, long before artificial intelligence came along, and I don’t mean a mere few centuries here, humanity started to dream of smart things as smart as the smartest creature we know, of course, ourselves. You are invited to join me in exploring the precursors of artificial intelligence and the origins of the mythical, fictional, and speculative ideas that fueled our obsession with adding the adjective “smart” to almost everything we get our hands on.

RoboGod: Talos the Unerring

As with many things, the first to think and dream about artificial intelligence were, unsurprisingly, the Greeks. The oldest trace of artificial intelligence in the known pages of history comes from Greek mythology: Talos, an intelligent automaton with an “artificial will” of its own.

According to myth, Talos, a bronze giant, was built with, ahem, a blacksmith hammer, by Hephaestus to protect the beautiful island of Crete. Apparently, it was very different back then. This smart robot would guard the island’s perimeter and throw giant rocks at the invaders. Myth or not, Talos, the first artificial intelligence, was defeated by being unplugged: someone pulled the plug at his ankle. And we are still using wired technologies in 2022. I blame Mr. Cook. Sir, come on already!

Mythology and tech geeks can explore another piece of Greek mythology’s AI fiction, and without a doubt the most stimulating one, in the 10th book of Ovid’s Metamorphoses.

In another time and part of Ancient Greece, Euclid and Aristotle did not know at the time that the next generations would use their ideas to create synthetic things with their own will. We’ll come back to this later.

Middle Age lab-grown life

The Greeks imagined it and wrote it down. By the 9th century, humans had developed different and extremely complex ideas about what they were doing in the world. Islamic alchemist Jabir ibn Hayyan worked on creating synthetic life in the laboratory, including a human, and published his work in a highly esoteric code for his students. Jabir ibn Hayyan’s book was so cryptic that it still gives up none of its secrets today. One might wish Paracelsus, the father of toxicology, had done the same with his book “Of the Nature of Things,” where he openly shares his jaw-dropping ideas on artificial life creation.

Goethe alchemically imagined an artificial intelligence in a flask and wrote one of the best tragedies in the world, Faust. Homunculus, the mixture of synthetic and organic life, spent its entire existence striving to be born into a complete human body. However, when the transformation starts, the flask breaks and it dies. Thanks, Goethe.

Following Goethe, other ideas about artificial intelligence and thinking machines began to be explored in fiction, with Mary Shelley’s Frankenstein or Karel Čapek’s R.U.R and speculations like Samuel Butler’s Darwin Among the Machines and Edgar Allan Poe’s Maelzel’s Chess Player. Since then, AI has become a regular topic of science fiction, sometimes as an inspiration to technology and sometimes as a cause for concern.

Romantic automation

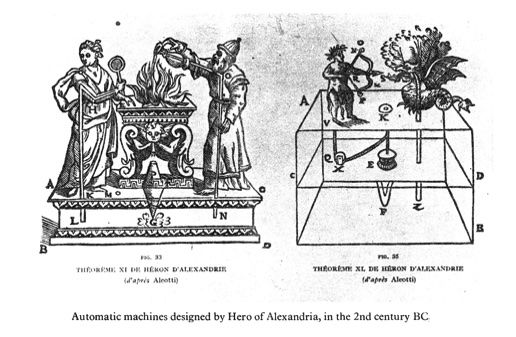

Automata, also known as automatons, were humanity’s first step toward real automation. An automaton is a machine designed to execute a set of operations automatically or in response to predetermined instructions.

In the past, almost every advanced civilization crafted realistic humanoids. The earliest known automata were the sacred statues of ancient Egypt and Greece. It was said that these figures were endowed with real minds that could reason and feel.

During the early modern period, such legendary automata were said to be able to answer questions put to them. Roger Bacon, the late medieval alchemist and scholar who had acquired a legendary reputation as a wizard, is said to have fashioned a brazen head. The Norse myth of the Head of Mímir is a similar case. Mímir, according to legend, was renowned for his intellect and wisdom and was beheaded during the Æsir-Vanir War. After taking the head, Odin “embalmed” it with herbs and spoke incantations over it, ensuring that Mímir’s head could still speak counsel to Odin.

And then, we finally started to ask the right questions!

Artificial intelligence is built on the idea that human thought can be mechanized. For centuries, philosophers have studied formal reasoning. Structured methods of deductive reasoning were developed by Chinese, Indian, and Greek scholars during the first millennium BC. Their ideas were developed over the centuries by thinkers such as Aristotle, who gave a formal analysis of the syllogism; Euclid, whose Elements was a model of formal reasoning; and al-Khwārizmī, who developed algebra and whose name gave us the word “algorithm.”

Ramon Llull, a Spanish philosopher who lived in the 13th and early 14th centuries, designed several logical machines intended to produce knowledge through logic. He described them as mechanical entities that could combine basic and undeniable truths through simple logical operations and, in doing so, produce all possible knowledge. Gottfried Leibniz was greatly influenced by Llull’s thinking and developed it further in his own work.
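To get a feel for what “combining basic truths mechanically” might look like, here is a minimal Python sketch. It is not a reconstruction of Llull’s actual devices: the list of terms and the `combine_terms` helper are made up for illustration, and the “logical operation” is simply pairing terms in every possible way.

```python
from itertools import combinations

# Illustrative starting concepts (an assumption, not Llull's real list).
BASIC_TERMS = ["goodness", "greatness", "power", "wisdom"]


def combine_terms(terms, size=2):
    """Mechanically enumerate every unordered combination of `size` terms."""
    return [" + ".join(combo) for combo in combinations(terms, size)]


if __name__ == "__main__":
    for statement in combine_terms(BASIC_TERMS):
        print(statement)
```

The point of the sketch is only that the enumeration needs no insight at all: given the starting terms, a machine can grind out every combination, which is the spirit of the idea Leibniz later picked up.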

In the 17th century, Leibniz, Thomas Hobbes, and René Descartes investigated whether all rational thinking might be made as systematic as algebra or geometry. Hobbes famously held that reason is nothing but reckoning, that is, calculation. Leibniz conceived a universal language of reasoning, the Characteristica Universalis, which would in theory reduce any argument to arithmetic.

The Scottish philosopher David Hume probed the nature of human reasoning in A Treatise of Human Nature (1739-40), and in 1854 the English mathematician George Boole published “An Investigation of the Laws of Thought,” which recast logic as a kind of algebra.

The 20th century witnessed developments in mathematical logic that were crucial to artificial intelligence. The groundwork had been laid by works such as Boole’s The Laws of Thought and Frege’s Begriffsschrift. Building on it, Russell and Whitehead developed a rigorous treatment of the foundations of mathematics in their masterpiece, the Principia Mathematica, published in three volumes between 1910 and 1913.

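Russell and Whitehead’s success prompted David Hilbert to challenge the mathematicians of the 1920s and 1930s with a fundamental question: can all of mathematical reasoning be formalized? The answers came from Kurt Gödel’s incompleteness proof, Alan Turing’s machine, and Alonzo Church’s lambda calculus, and they were surprising in two ways. First, they demonstrated that there are limits to what mathematical logic can accomplish. But more significantly, their work suggested that, within these limits, any form of mathematical reasoning could be mechanized.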

The Church-Turing thesis, which grew out of work published by Alonzo Church and Alan Turing in 1936, implied that a mechanical device manipulating symbols as basic as 0 and 1 could imitate any conceivable process of mathematical deduction. The key insight was the Turing machine, a simple theoretical device that captured the essence of abstract symbol manipulation. This invention would encourage many scientists to consider whether thinking machines were possible.
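To make “a device manipulating symbols as basic as 0 and 1” a little more concrete, here is a minimal Python sketch of a one-tape Turing machine. Everything in it is an illustrative assumption rather than anything from Turing’s paper: the function name `run_turing_machine`, the `"start"` and `"halt"` state names, the `_` blank symbol, and the small rule table `INCREMENT`, which adds one to a binary number.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Run a one-tape Turing machine until it enters the 'halt' state.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". Returns the tape contents without blanks
    at either end.
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Hypothetical rule table: walk to the right end of the number,
# then add one while carrying leftwards.
INCREMENT = {
    ("start", "0"): ("0", "R", "start"),
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("_", "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),
    ("carry", "0"): ("1", "L", "halt"),
    ("carry", "_"): ("1", "L", "halt"),
}

if __name__ == "__main__":
    print(run_turing_machine(INCREMENT, "1011"))
```

The machine never “understands” arithmetic. It only looks up the current state and symbol, writes a symbol, and moves one cell, yet the result is a correct binary increment. That gap between dumb steps and meaningful results is what made the idea so suggestive.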

The birth of computers (and their minds)

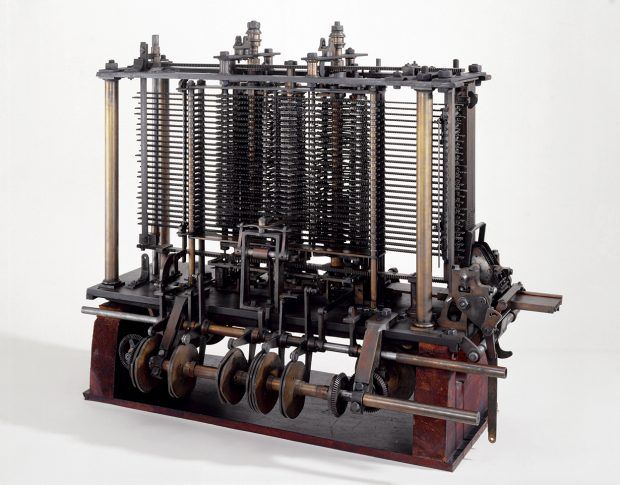

Calculating machines have been built and improved by various mathematicians throughout history, including the philosopher Gottfried Leibniz. The Analytical Engine devised by Charles Babbage in the 19th century was an exciting idea, but it never made it past the prototyping phase.

In the notes she published in 1843 alongside her translation of a memoir on the Analytical Engine, Ada Lovelace speculated that the machine might compose “elaborate and scientific pieces of music.” Percy Ludgate, a clerk for a corn merchant in Dublin, Ireland, independently designed a programmable mechanical computer, which he described in 1909.

Following in the footsteps of Charles Babbage, Leonardo Torres y Quevedo described in his Essays on Automatics (1913) a Babbage-style calculating machine that used floating-point number representations, and he demonstrated a prototype in 1920. Torres y Quevedo also created El Ajedrecista, an autonomous chess-playing machine, in 1912.

This was the first mechanical chess machine built in modern times. It played an endgame with only three chess pieces, automatically moving a white king and rook to checkmate a human opponent’s black king.

Vannevar Bush’s paper Instrumental Analysis (1936) detailed using existing IBM punch-card machines to construct Babbage’s design. He began the Rapid Arithmetical Machine project in 1936 to study the difficulties of constructing an electronic digital computer.

The first modern computers were the large machines built during World War II, such as the Z3, Colossus (a code-breaking machine), and ENIAC. ENIAC in particular was built on the theoretical foundation established by Alan Turing and expanded by John von Neumann.

Finally, in the 1940s and 1950s, a few scientists from several disciplines, such as mathematics, psychology, engineering, economics, and political science, began to consider the possibility of building an artificial mind. In 1956, AI research was established as an academic discipline.

Now we know AI’s fantastical background. Our next topic will explore the increasingly obvious fundamental differences of opinion and vision about future AI technology. And after all these shenanigans, would you believe that some of the brightest minds of our time think that the ideal route for artificial intelligence might not be replicating the human mind? And these differences are not limited to the theoretical ideas: Many contemporary schools of thought are accomplishing concrete scientific progress to make future AI what they think will be most beneficial to humanity.