This week, Nvidia laid out its strategy for the next generation of computing. At the company's GTC event, CEO Jensen Huang revealed a variety of technologies that he claims will power the next wave of AI and virtual worlds.

Even though Nvidia’s GPU Technology Conference, more popularly known as GTC, was held virtually this year, it still offered exciting news. The main highlight was a keynote by Nvidia CEO Jensen Huang. So what did Nvidia announce, and how does it relate to data science?

At Nvidia GTC 2022, the company revealed its next-generation Hopper GPU architecture and the Hopper-based H100 GPU; the Grace CPU Superchip, its first data center CPU; and, of course, new Nvidia Omniverse services.

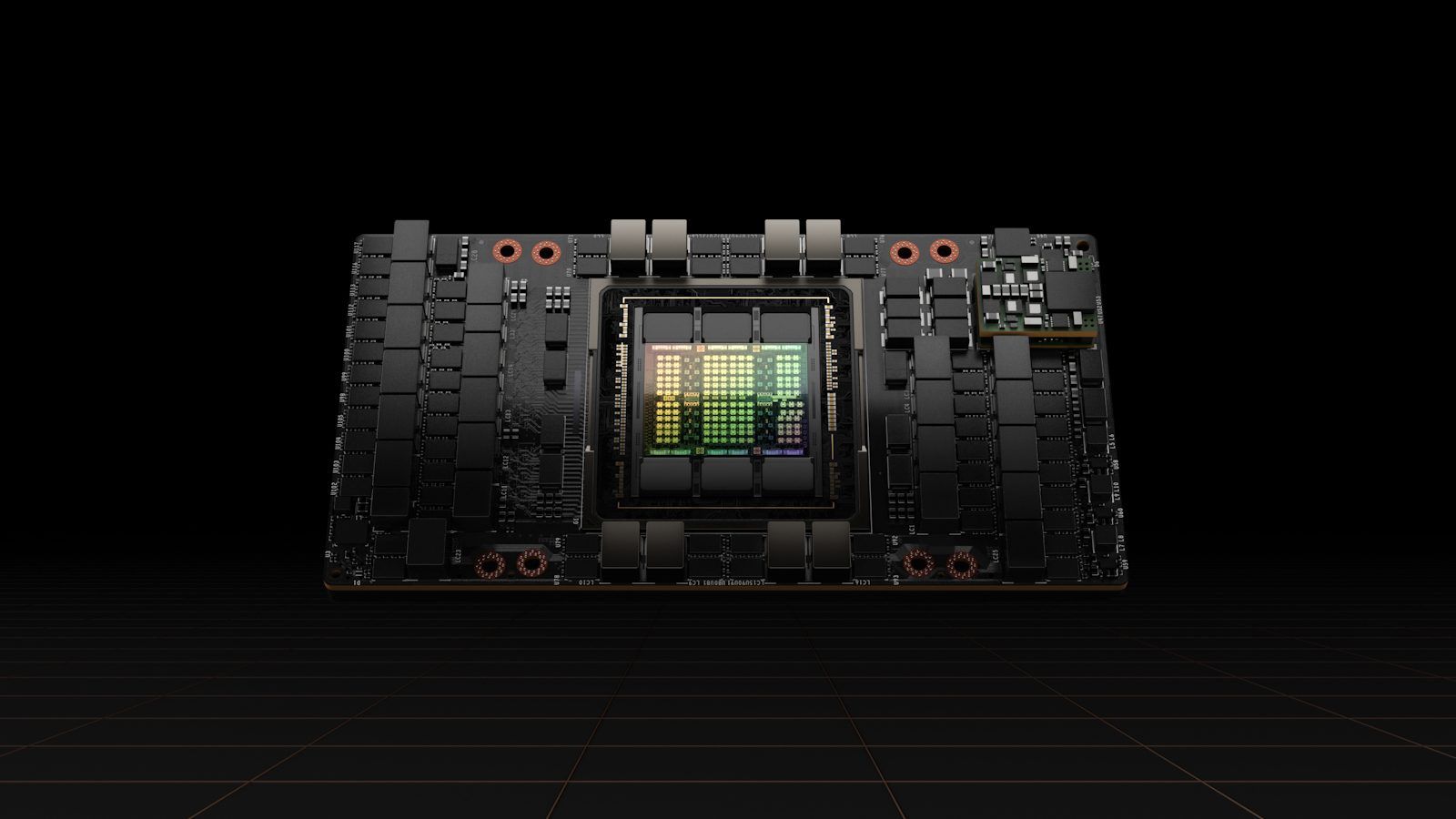

Nvidia H100 “Hopper GPU”

Nvidia is launching a slew of new and enhanced Hopper features, but the most important may be its emphasis on transformer models, which have become the machine learning technique of choice for many applications and power models such as GPT-3 and BERT.

The new Transformer Engine in the H100 promises to speed up training of these models by up to six times. And because the architecture incorporates Nvidia’s NVLink Switch technology for linking many nodes, large server clusters built on these chips should be able to scale to enormous networks with less overhead.

The Transformer Engine relies on Hopper’s new Tensor Cores, which dynamically switch between 8-bit (FP8) and 16-bit precision to speed up computation while maintaining accuracy.
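Hopper’s Tensor Cores add support for an 8-bit floating-point (FP8) format, and the Transformer Engine chooses between FP8 and 16-bit calculations layer by layer. Nvidia’s real scaling logic lives in its hardware and libraries; the following is only a hypothetical, pure-Python illustration of why scaling matters when squeezing values into 8 bits. It simulates rounding to the E4M3 variant of FP8 (4 exponent bits, 3 mantissa bits, largest finite value 448) and compares casting tiny values directly versus after a per-tensor scale factor. The helper names `round_to_e4m3` and `fp8_scaled_roundtrip` are invented for this example.

```python
import math

# Largest finite magnitude of the FP8 E4M3 format (4 exponent bits, 3 mantissa bits).
E4M3_MAX = 448.0


def round_to_e4m3(x: float) -> float:
    """Simulate rounding a float to the nearest FP8 E4M3 value.

    Magnitudes beyond the format's range saturate to +/-E4M3_MAX.
    """
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    mag = min(abs(x), E4M3_MAX)
    # frexp returns mag = m * 2**e with 0.5 <= m < 1, so floor(log2(mag)) == e - 1.
    _, e = math.frexp(mag)
    exp = max(e - 1, -6)        # -6 is the smallest normal exponent; below it values are subnormal
    quantum = 2.0 ** (exp - 3)  # spacing between neighbouring values with 3 mantissa bits
    return sign * min(round(mag / quantum) * quantum, E4M3_MAX)


def fp8_scaled_roundtrip(values):
    """Scale a tensor so its largest magnitude maps to E4M3_MAX, cast to FP8, then unscale."""
    amax = max(abs(v) for v in values)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(v * scale) / scale for v in values]


if __name__ == "__main__":
    tiny = [0.001, 0.002, -0.0015]
    # Direct cast: every value collapses onto the coarse subnormal step 2**-9.
    print([round_to_e4m3(v) for v in tiny])  # [0.001953125, 0.001953125, -0.001953125]
    # Scaled cast: each value comes back within about 6% of the original.
    print(fp8_scaled_roundtrip(tiny))
```

Production FP8 training, as described in Nvidia’s Transformer Engine documentation, picks scale factors from a history of recent maximum values; this sketch simply computes the scale from the current tensor.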

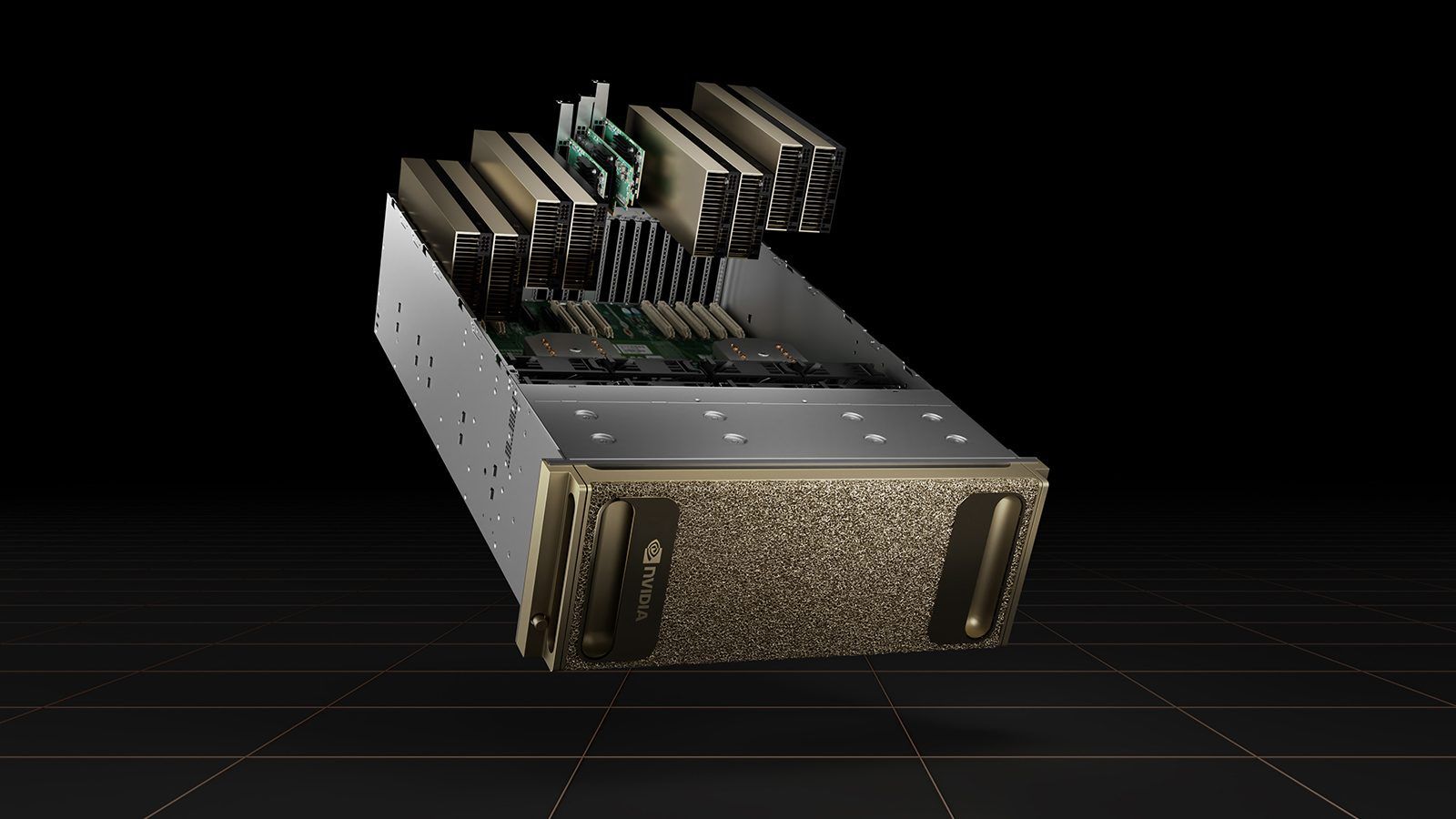

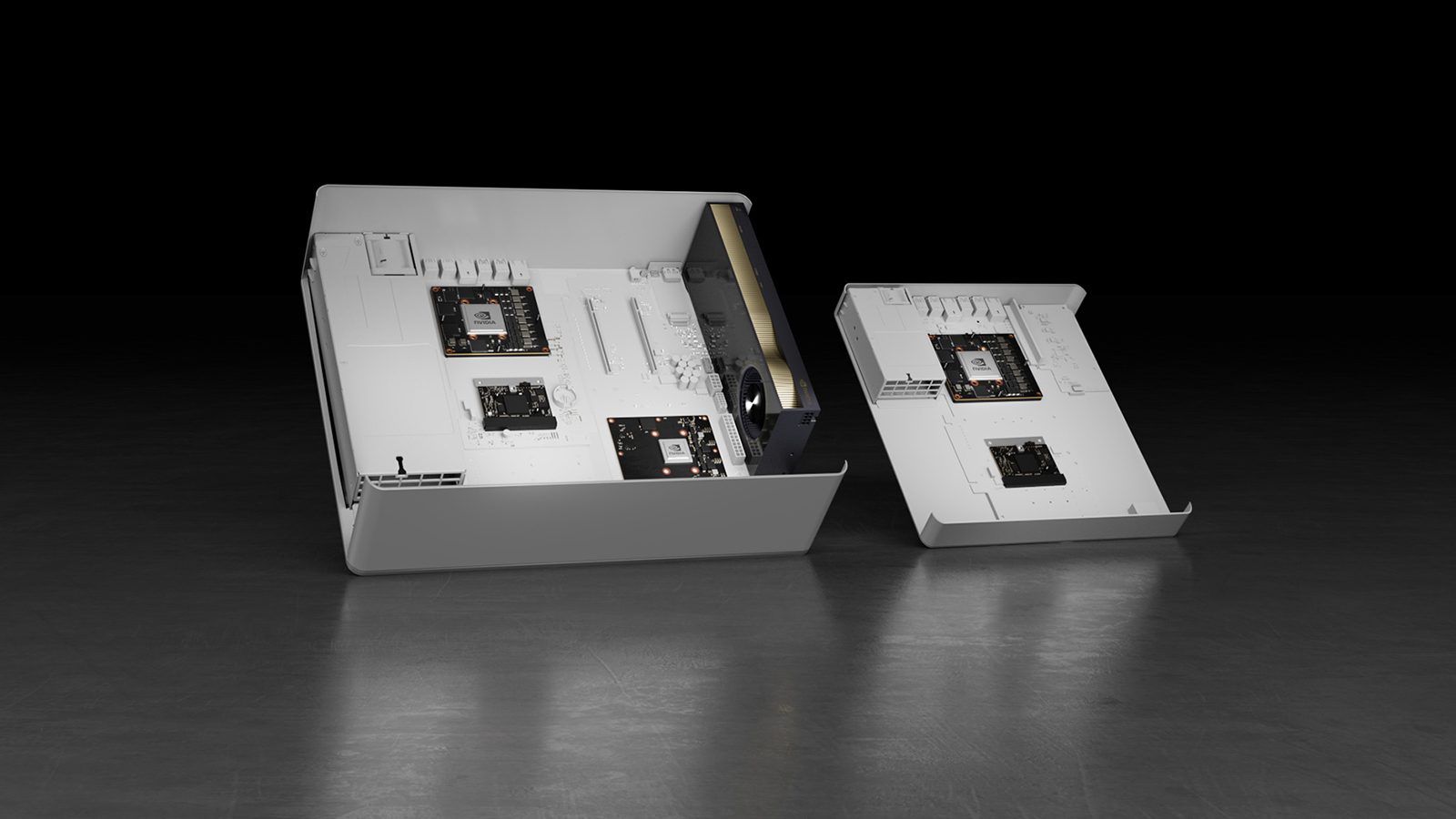

Nvidia Grace CPU

The Grace CPU Superchip is Nvidia’s first foray into the data center CPU market. According to Nvidia, the Arm-based chip will have a staggering 144 cores and one terabyte per second of memory bandwidth. It combines two Grace CPUs connected via Nvidia’s NVLink-C2C interconnect, an approach comparable to Apple’s M1 Ultra architecture.

Powered by fast LPDDR5X memory, the new CPU is expected to arrive in the first half of 2023, and Nvidia claims it will deliver twice the performance of previous servers. Nvidia estimates the chip will score 740 on the SPECrate®2017_int_base benchmark, which would put it in competition with high-end AMD and Intel data center processors.

The firm is collaborating with “leading HPC, supercomputing, hyper-scale and cloud customers,” implying that these systems will be accessible on a cloud provider near you.

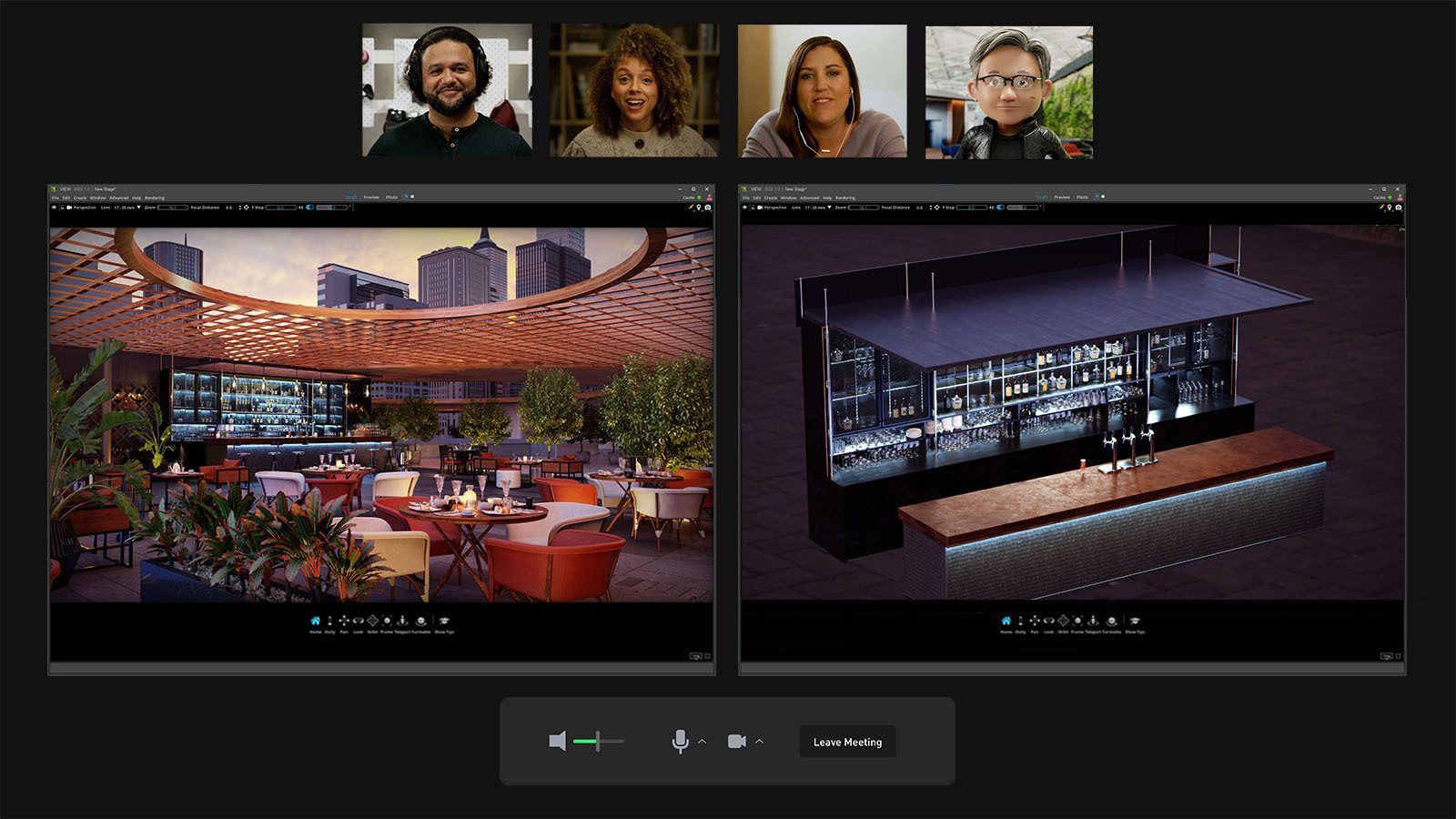

What is Nvidia Omniverse?

At GTC 2022, Nvidia is releasing Omniverse Cloud, a suite of cloud services that allows artists, creators, architects, and developers to collaborate on 3D design and simulation from any device.

Omniverse Cloud’s portfolio includes Nucleus Cloud, a one-click-to-collaborate sharing tool that lets artists access and edit enormous 3D scenes from anywhere without transferring large data files. View is an app for non-technical users that streams full Omniverse scenes using Nvidia GeForce Now technology, powered by Nvidia RTX GPUs in the cloud.

The other app, Create, lets technical designers, artists, and creators build 3D worlds in real time. Together, these services let users stream, create, view, and collaborate with other Omniverse customers from any device.

GPU Accelerated Data Science with RAPIDS

These new hardware and cloud service initiatives are aimed at handling big data. “Big data” refers to data that arrives in greater variety, in larger volumes, and at higher velocity, a trio of characteristics known as the three Vs.

Big data is a larger, more complex set of data, often from new sources. Traditional data processing software cannot handle such massive amounts of information, yet putting that data to work may solve problems you couldn’t address before. Nvidia’s answer is RAPIDS, which was at the heart of the data science sessions at GTC 2022.

What is RAPIDS?

At GTC 2022, there were 131 data-related sessions, and the first one was Fundamentals of Accelerated Data Science, which explained what RAPIDS is.

RAPIDS is a collection of open-source GPU code libraries that can be used to develop end-to-end data science and analytics pipelines. By speeding up those pipelines, RAPIDS helps organizations build more productive workflows.

RAPIDS, a GPU-only suite of open-source libraries, sits at the heart of Nvidia’s data processing story, and Nvidia arguably makes some of the best hardware to run it on. It speeds up machine learning algorithms without incurring data serialization costs, and it supports multi-GPU deployments for end-to-end data science pipelines on large data sets.

How does RAPIDS work?

RAPIDS uses GPU acceleration to speed up entire data science and analytics pipelines. At its core is a GPU-optimized DataFrame library, cuDF, which aids in database and machine learning application development.

RAPIDS exposes Python APIs built on optimized CUDA C++ primitives, so you can write familiar Python code and run it entirely on GPUs. It includes libraries for executing every stage of a data science pipeline on GPUs.
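cuDF, the RAPIDS DataFrame library, deliberately mirrors much of the pandas API. As a minimal sketch, assuming RAPIDS is installed on a machine with a supported NVIDIA GPU, the code below builds a small DataFrame, derives a column, filters rows, and aggregates; if `cudf` cannot be imported, it falls back to pandas so the same logic still runs on a CPU. The sales data and the function name `total_sales_by_store` are hypothetical.

```python
# Sketch: the same DataFrame code running on a GPU with cuDF, or on a CPU with pandas.
try:
    import cudf as xdf  # RAPIDS GPU DataFrame library (needs an NVIDIA GPU)
except ImportError:
    import pandas as xdf  # CPU fallback so the example runs anywhere

# Hypothetical sales records used only for illustration.
SAMPLE = {
    "store": ["A", "B", "A", "C", "B"],
    "units": [2, 1, 0, 5, 3],
    "price": [10.0, 4.0, 7.0, 1.5, 4.0],
}


def total_sales_by_store(records: dict) -> dict:
    """Derive a column, filter rows, and aggregate: a typical data-prep step."""
    df = xdf.DataFrame(records)
    df["revenue"] = df["units"] * df["price"]  # element-wise column arithmetic
    df = df[df["units"] > 0]  # boolean-mask filtering drops empty orders
    totals = df.groupby("store")["revenue"].sum().sort_index()
    if hasattr(totals, "to_pandas"):  # cuDF results live in GPU memory; copy to host
        totals = totals.to_pandas()
    return totals.to_dict()


if __name__ == "__main__":
    print(total_sales_by_store(SAMPLE))
```

Because cuDF follows pandas naming for operations such as `groupby`, `sum`, and boolean indexing, moving a pipeline like this to the GPU is often mostly a change of import, though cuDF does not cover every pandas feature.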

Relation between RAPIDS and data science

RAPIDS aims to reduce the time it takes to prepare data, often a significant barrier in data science. By speeding up data transfer, it helps you build more dynamic and exploratory workflows.

RAPIDS advantages

RAPIDS brings several advantages into the mix:

- Integration: Build a GPU-accelerated data science toolchain that is easy to maintain.

- Scale: Scale from a single GPU to multi-GPU configurations and multi-node clusters.

- Accuracy: Rapidly build, test, and iterate on machine learning models to improve their accuracy.

- Speed: Increase data science productivity and reduce training time.

- Open source: Customizable, extensible, and interoperable open-source software, supported by NVIDIA and built on the Apache Arrow platform.

With the support of RAPIDS, the new GPU and CPU introduced at GTC 2022 should make a significant contribution to data processing. Meanwhile, Omniverse Cloud promises access to GPU-powered 3D collaboration from almost any device.

GTC 2022 was a virtual conference that offered over 900 sessions, including keynote addresses, technical tutorials, panel discussions, and roundtable talks with industry experts. Despite the lack of an in-person audience and the chance to mingle with a diverse array of attendees from the private and public sectors, the event delivered plenty of information. Even if you missed the live event, many sessions, such as Jensen Huang’s keynote, are archived for later viewing.

Nvidia’s ecosystem continues to expand and scale at breakneck speed, as GTC 2022 showed. This article outlined some of the new announcements and how they connect to data science. Do you think these improvements are enough to move data science forward?