Traditional databases historically haven’t been fast enough to act on real-time data, forcing developers to go to great effort to write applications that capture and process fast data. Some developers have turned to tools like Storm or Spark Streaming; others have patched together a set of open source projects in the style of the Lambda Architecture. These complex, multi-tier systems often require the use of a dozen or more servers.

What we’ve learned developing the world’s fastest in-memory database is that OLTP at scale starts to look a lot like a streaming application. Whether you view your problem as OLTP with real-time analysis or as stream processing, VoltDB is the only system that combines ingestion, speed, robustness, and strong consistency to make developing and supporting real-time apps easier than ever.

In our latest release, VoltDB v5.0, we provide – in one system – a unified Fast Data/Big Data pipeline. On the streaming front end, VoltDB connects to message queue tools like Kafka. On the back end, VoltDB efficiently exports streams of data to Hadoop/HDFS, or to another OLAP system of choice for historical archiving. In between, VoltDB v5.0 provides high-velocity, transactional ingestion of data and events; real-time analytics on windows of streaming data; and low-latency, per-event decision-making: the ability to react and apply business decisions to individual events.

Challenging Lambda

VoltDB is an ideal alternative to the Lambda Architecture’s speed layer. It offers horizontal scaling and high per-machine throughput. It can easily ingest and process millions of tuples per second with redundancy, while using fewer resources than alternative solutions. VoltDB requires an order of magnitude fewer nodes to achieve the scale and speed of the Lambda speed layer. As a result, clusters are substantially smaller, which makes them cheaper to build and run, and easier to manage.

VoltDB also offers strong consistency and a strong development model, and it can be queried directly using industry-standard SQL. These features enable developers to focus on business logic when building distributed systems at scale. If you can express your logic in single-threaded Java code and SQL, with VoltDB you can scale that logic to millions of operations per second.

Finally, VoltDB is operationally simpler than competing solutions. Within a VoltDB cluster, all nodes are the same; there are no leader nodes, no agreement nodes; there are no tiers or processing graphs. If a node fails, it can be replaced with any available hardware or cloud instance and that new node will assume the role of the failed node. Furthermore, by integrating processing and state, a Fast Data solution based on VoltDB requires fewer total systems to monitor and manage.

A Speed Layer Example

As part of the work that went into VoltDB v5.0, our developers prepared several sample applications that illustrate the power and flexibility of the in-memory, scale-out NewSQL database for developing fast data applications. One application in particular, the ‘Unique Devices’ example, demonstrates, in approximately 30 lines of code, how to handle volumes of fast streaming data while maintaining state and data consistency, and achieving the benefits of real-time analytics and near real-time decisions.

The VoltDB ‘Unique Devices’ example represents a typical Lambda speed layer application. This isn’t a contrived sample – it is based on a real-world application hosted by Twitter that is designed to help mobile developers understand how many people are using their app. Every time an end-user uses a smartphone app, a message is sent with an app identifier and a unique device id. This happens 800,000 times per second over thousands of apps. App developers pay to see how many unique users have used their app each day, with per-day history available for some amount of time going back.

This Twitter system was built using the Lambda Architecture. In the speed layer, Kafka was used for ingestion, Storm for processing, Cassandra for state, and ZooKeeper for distributed agreement. In the batch layer, tuples were loaded in batches into S3, then processed with Cascading and Amazon Elastic MapReduce. To reduce processing and storage load on the system, the HyperLogLog cardinality estimation algorithm was used as well.

Replicating these requirements using VoltDB involves simplifying the architecture considerably. The VoltDB Unique Devices sample application has several components:

The Sample Data Generator

Generating fake but plausible data is often the hardest part of a sample app. Our generator produces (ApplicationID, DeviceID) tuples with non-linear distributions. This component is also easily changed to support more or fewer applications and devices, or different distributions. View the code on GitHub.
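To make the idea of a non-linear distribution concrete, here is a minimal sketch in plain Java. It is not the generator shipped with the example: the class name `SkewedTupleGenerator`, its parameters, and the choice of squaring and cubing a uniform random value are all assumptions made for illustration.

```java
import java.util.Random;

/**
 * Hypothetical generator of (applicationId, deviceId) tuples.
 * Not the generator from the VoltDB example; the skew functions are assumptions.
 */
final class SkewedTupleGenerator {
    private final Random rng;
    private final int appCount;
    private final long devicesPerApp;

    SkewedTupleGenerator(long seed, int appCount, long devicesPerApp) {
        this.rng = new Random(seed);
        this.appCount = appCount;
        this.devicesPerApp = devicesPerApp;
    }

    /** Returns a two-element array: {applicationId, deviceId}. */
    long[] next() {
        // Squaring a uniform value in [0, 1) concentrates results near 0,
        // so low-numbered applications receive most of the traffic.
        double u = rng.nextDouble();
        long appId = (long) (u * u * appCount);

        // Cubing skews device ids even harder, so a minority of devices
        // accounts for many repeat messages.
        double v = rng.nextDouble();
        long deviceId = (long) (v * v * v * devicesPerApp);

        return new long[] { appId, deviceId };
    }
}
```

Changing the number of applications or devices is a constructor argument here, and swapping in a different distribution only touches the two lines that transform `u` and `v`.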

The Client Code

The client code takes generated data and feeds it to VoltDB. The code looks like most VoltDB sample apps: it offers some configuration logic, some performance monitoring, and the connection code. View the code on GitHub.

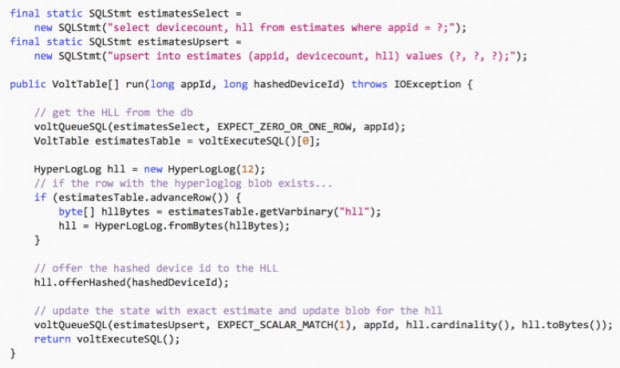

The Ingest Logic

This is the key part of the application, and it’s what separates VoltDB from other solutions. The idea was to build a stored procedure to handle the incoming ApplicationID and DeviceID tuples, and then write to the relational state any updated estimate for the ApplicationID given.

First we found a HyperLogLog implementation in Java on GitHub. Then we simply wrote down the straightforward logic to accomplish the task, trying a few variations and using the performance monitoring in the client code to pick the best choice. The bulk of the logic is about 30 new lines of code (see below), which can easily scale to over a million ops/sec with full fault tolerance on a cluster of fewer than 10 commodity nodes. This is a significant win over alternative stacks. View the code on GitHub.
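For readers who want to see the shape of this logic without a VoltDB installation, the following is a rough, self-contained approximation in plain Java. It is not the code from the example. The class names are hypothetical, `HyperLogLogSketch` is a minimal textbook HyperLogLog rather than the library the authors used, and in-memory maps stand in for the relational state that a real stored procedure would read and write.

```java
import java.util.HashMap;
import java.util.Map;

/** Minimal textbook HyperLogLog with 2^12 registers (an assumption, not the library used). */
final class HyperLogLogSketch {
    private static final int P = 12;       // index bits
    private static final int M = 1 << P;   // number of registers
    private final byte[] registers = new byte[M];

    /** SplitMix64 finalizer, used here as a 64-bit hash for long keys. */
    private static long mix64(long x) {
        long z = x + 0x9e3779b97f4a7c15L;
        z = (z ^ (z >>> 30)) * 0xbf58476d1ce4e5b9L;
        z = (z ^ (z >>> 27)) * 0x94d049bb133111ebL;
        return z ^ (z >>> 31);
    }

    /** Adds a value; returns true if a register changed (the estimate may have moved). */
    boolean offer(long value) {
        long h = mix64(value);
        int idx = (int) (h >>> (64 - P));
        // Rank = position of the first 1 bit in the remaining 64 - P bits.
        int rank = Math.min(64 - P, Long.numberOfLeadingZeros(h << P)) + 1;
        if (rank > registers[idx]) {
            registers[idx] = (byte) rank;
            return true;
        }
        return false;
    }

    long estimate() {
        double sum = 0.0;
        int zeros = 0;
        for (byte r : registers) {
            sum += 1.0 / (1L << r);
            if (r == 0) zeros++;
        }
        double alpha = 0.7213 / (1.0 + 1.079 / M);
        double raw = alpha * M * M / sum;
        // Small-range correction: fall back to linear counting.
        if (raw <= 2.5 * M && zeros > 0) {
            raw = M * Math.log((double) M / zeros);
        }
        return Math.round(raw);
    }
}

/** Hypothetical stand-in for the ingest procedure; maps replace database tables. */
final class CountDeviceEstimate {
    private final Map<Long, HyperLogLogSketch> sketches = new HashMap<>();
    final Map<Long, Long> estimates = new HashMap<>(); // appId -> current estimate

    /** Handles one (appId, deviceId) tuple and returns the current estimate for the app. */
    long run(long appId, long deviceId) {
        HyperLogLogSketch hll = sketches.computeIfAbsent(appId, k -> new HyperLogLogSketch());
        // Only rewrite the stored estimate when the sketch actually changed.
        if (hll.offer(deviceId)) {
            estimates.put(appId, hll.estimate());
        }
        return estimates.getOrDefault(appId, 0L);
    }
}
```

With 4,096 registers the expected relative error of the estimate is roughly 1.04 / sqrt(4096), or about 1.6%. Because this sketch runs single-threaded against its own state, the read-modify-write in `run` needs no locking, which mirrors the single-threaded Java plus SQL model described earlier.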

Web Dashboard

Finally, the Unique Devices app includes an HTML file with accompanying JavaScript source to query VoltDB using SQL over HTTP, displaying query results and statistics in a browser. The provided dashboard is very simple; it shows ingestion throughput and a top-ten list of most popular apps. Still, because the VoltDB ingestion logic hides most implementation complexity – such as the use of HyperLogLog – and boils the processing down to relational tuples, the top-ten query is something any SQL beginner could write. View the code on GitHub.

It took a little more than a day to build the app and the data generator; some time was lost adding some optimizations to the HyperLogLog library used. It was not hard to build the app with VoltDB.

Example in hand, we then decided to push our luck. We created versions that don’t use a cardinality estimator, but use exact counts. As expected, performance is good when the data set is small but degrades as it grows, unlike the HyperLogLog version. We also created a hybrid version that keeps exact counts until 1,000 devices have been seen, then switches to the estimating version. You can really see the power of strong consistency when it’s so trivial to add what would be a complex feature in another stack. Furthermore, changing this behavior required changing only one file, the ingestion code; the client code and dashboard code are unaffected. Both modifications are bundled with the example.
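The hybrid approach can be sketched as follows. This is an illustration under stated assumptions, not the bundled code: the `CardinalityEstimator` interface and `HybridDeviceCounter` class are hypothetical names, and feeding the estimator from the first event (so no replay is needed at the switch) is a design choice made for this sketch.

```java
import java.util.HashSet;
import java.util.Set;

/** Hypothetical abstraction over any estimator, such as a HyperLogLog. */
interface CardinalityEstimator {
    void offer(long value);
    long estimate();
}

/** Exact counts up to EXACT_LIMIT distinct devices, estimates after that. */
final class HybridDeviceCounter {
    static final int EXACT_LIMIT = 1_000;

    private final CardinalityEstimator estimator;
    private Set<Long> exact = new HashSet<>(); // set to null once we switch

    HybridDeviceCounter(CardinalityEstimator estimator) {
        this.estimator = estimator;
    }

    void offer(long deviceId) {
        // Always feed the estimator so it is already warm when we switch.
        estimator.offer(deviceId);
        if (exact != null) {
            exact.add(deviceId);
            if (exact.size() > EXACT_LIMIT) {
                exact = null; // drop the exact set and rely on the estimator
            }
        }
    }

    boolean isExact() {
        return exact != null;
    }

    long count() {
        return exact != null ? exact.size() : estimator.estimate();
    }
}
```

The switch is a single state change inside one method. When every call runs as one consistent, isolated unit of work, no other caller can observe the counter halfway between the exact and estimating modes.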

Finally, we made one more modification. We added a history table, and logic in the ingestion code that would check on each call if a time period had rolled over. If so, the code would copy the current estimate into a historical record and then reset the active estimate data. This allows the app to store daily or hourly history within VoltDB. We didn’t include this code in the example, as we decided too many options and the additional schema might muddy the message, but it’s quite doable to add.
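Since that code is not part of the example, here is one way the rollover check could look, as a sketch only. `RollingDeviceCounter` is a hypothetical name, a list stands in for the history table, and an exact `HashSet` stands in for the estimator state to keep the block short.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical per-application counter that archives its count at each period boundary. */
final class RollingDeviceCounter {
    private final long periodMillis;
    private long currentPeriod = -1L;               // -1 means no event seen yet
    private Set<Long> active = new HashSet<>();     // stand-in for estimator state
    final List<long[]> history = new ArrayList<>(); // rows of {periodIndex, count}

    RollingDeviceCounter(long periodMillis) {
        this.periodMillis = periodMillis;
    }

    /** Handles one device id; nowMillis is assumed to be non-negative. */
    void offer(long deviceId, long nowMillis) {
        long period = nowMillis / periodMillis;
        if (currentPeriod < 0) {
            currentPeriod = period;
        } else if (period > currentPeriod) {
            // The period rolled over: archive the old count, then reset.
            history.add(new long[] { currentPeriod, active.size() });
            active = new HashSet<>();
            currentPeriod = period;
        }
        // Events stamped with an older period are counted in the current one.
        active.add(deviceId);
    }

    long currentCount() {
        return active.size();
    }
}
```

A period of 86,400,000 milliseconds would give daily history and 3,600,000 would give hourly history. Periods in which no events arrive produce no history row in this sketch.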

VoltDB isn’t just a good platform for static Fast Data apps. The strong consistency, developer-friendly interfaces and standard SQL access make VoltDB apps really easy to enhance and evolve over time. It’s not just easier to start: it’s more nimble when it’s finished.

![John Hugg](https://dataconomy.com/wp-content/uploads/2015/03/J.Hugg13-150x150.jpg) About the author: John Hugg is the Founding Software Engineer for VoltDB. VoltDB provides a fully durable, in-memory relational database that combines high-velocity data ingestion and real-time data analytics and decisioning to enable organizations to unleash a new generation of big data applications that deliver unprecedented business value.

Photo credit: xTom