Apple is positioning the new M5-powered MacBook Pro as a far more capable machine for running and experimenting with large language models, thanks to upgrades to both its MLX framework and the GPU Neural Accelerators built into the chip. For researchers and developers who increasingly prefer to work directly on Apple silicon hardware, the company is pitching the M5 line as a meaningful step forward in on-device inference performance, especially for LLMs and other workloads dominated by matrix operations.

At the center of this effort is MLX, Apple’s open-source array framework designed specifically for its unified memory architecture. MLX provides a NumPy-like interface for numerical computing, supports both training and inference for neural networks, and lets developers move seamlessly between CPU and GPU execution without shuttling data across different memory pools. It works across all Apple silicon systems, but the latest macOS beta unlocks a new layer of acceleration by tapping into the dedicated matrix-multiply units inside the M5’s GPU. These Neural Accelerators are exposed through TensorOps in Metal 4 and give MLX access to performance Apple argues is crucial for workloads dominated by large tensor multiplications.

On top of MLX sits MLX LM, a package for text generation and fine-tuning that supports most language models hosted on Hugging Face. Users can install it via pip, start chat sessions from the terminal, and quantize models directly on-device. Quantization is a core feature: converting a 7B-parameter Mistral model to 4-bit takes only seconds and dramatically shrinks its memory requirements, so the model stays usable on consumer machines.
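To make the idea of 4-bit quantization concrete, here is a minimal sketch of generic group-wise affine quantization in plain Python. It is an illustration only, not MLX's implementation: the function names, the eight-value group, and the sample weights are all hypothetical. Each group of weights is stored as small integer codes plus one scale and one offset.

```python
def quantize_group(weights, bits=4):
    """Map a group of floats to integer codes in [0, 2**bits - 1].

    Illustrative affine scheme: one scale and one offset per group.
    """
    levels = (1 << bits) - 1          # 15 for 4-bit codes
    lo, hi = min(weights), max(weights)
    # Guard against a constant group, where hi == lo.
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((w - lo) / scale) for w in weights]
    return codes, scale, lo


def dequantize_group(codes, scale, lo):
    """Recover approximate floats from the codes, scale, and offset."""
    return [c * scale + lo for c in codes]


# Hypothetical sample weights, chosen only for demonstration.
group = [-0.42, -0.10, 0.03, 0.27, 0.55, -0.31, 0.18, 0.00]

codes, scale, lo = quantize_group(group)
restored = dequantize_group(codes, scale, lo)

# Rounding to the nearest code bounds the error at half a step.
max_error = max(abs(a - b) for a, b in zip(group, restored))
print("codes:", codes)
print("max abs error:", max_error, "(half step:", scale / 2, ")")
```

The trade-off is visible in the last line: every weight drops from 16 bits to 4 bits plus a small share of the per-group scale and offset, at the cost of a reconstruction error no larger than half a quantization step.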

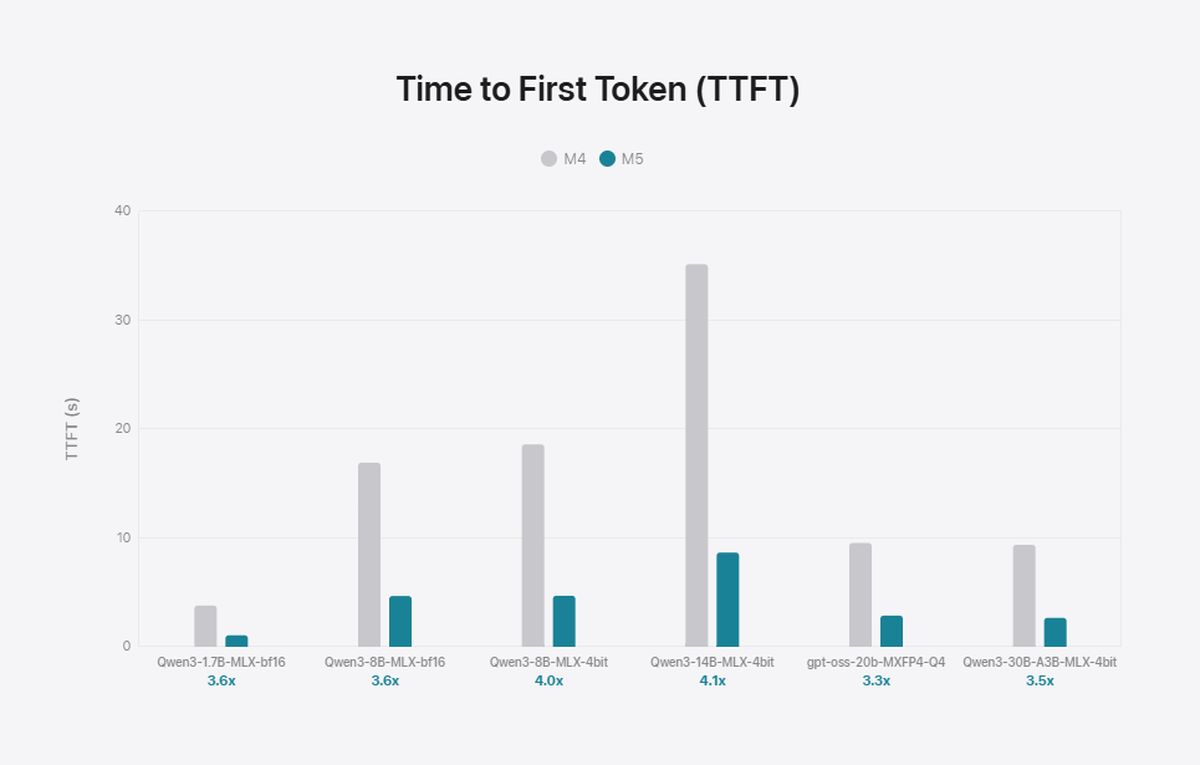

To showcase the M5’s gains, Apple benchmarked several models: Qwen 1.7B and 8B in BF16, 4-bit quantized Qwen 8B and 14B, and two mixture-of-experts (MoE) architectures, Qwen 30B (3B active parameters) and GPT-OSS 20B (MXFP4). The results focus on time to first token (TTFT) and on generation speed when producing 128 additional tokens from a 4,096-token prompt.

The M5’s Neural Accelerators markedly improve TTFT, cutting the wait to under 10 seconds for a dense 14B model and to under 3 seconds for a 30B MoE. Apple reports TTFT speedups between 3.3x and 4x compared with the previous M4 generation. Subsequent token generation, which is limited by memory bandwidth rather than compute, sees smaller but consistent gains of roughly 19–27%, in line with the M5’s 28% increase in bandwidth (153GB/s versus 120GB/s on M4).
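The bandwidth argument can be checked with a back-of-the-envelope calculation. The sketch below is a first-order estimate, not Apple's methodology: it assumes every active weight is read from memory once per generated token and ignores the KV cache, activations, and any caching effects. The function names are hypothetical; the bandwidth figures are the ones quoted above.

```python
M4_BANDWIDTH_GBPS = 120.0  # GB/s, as quoted for M4
M5_BANDWIDTH_GBPS = 153.0  # GB/s, as quoted for M5


def bandwidth_gain(new_gbps, old_gbps):
    """Relative increase in memory bandwidth (0.275 means 27.5%)."""
    return new_gbps / old_gbps - 1.0


def decode_ceiling_tokens_per_s(bandwidth_gbps, active_params_billion, bits_per_weight):
    """Rough upper bound on decode speed for a bandwidth-bound model.

    Assumption: each active weight is read exactly once per token.
    """
    gb_read_per_token = active_params_billion * bits_per_weight / 8.0
    return bandwidth_gbps / gb_read_per_token


gain = bandwidth_gain(M5_BANDWIDTH_GBPS, M4_BANDWIDTH_GBPS)

# Dense 8B model in BF16: 16 bits per weight, all 8B weights active.
dense_m4 = decode_ceiling_tokens_per_s(M4_BANDWIDTH_GBPS, 8.0, 16)
dense_m5 = decode_ceiling_tokens_per_s(M5_BANDWIDTH_GBPS, 8.0, 16)

print(f"bandwidth gain: {gain:.1%}")
print(f"8B BF16 decode ceiling: M4 {dense_m4:.2f} tok/s, M5 {dense_m5:.2f} tok/s")
```

Under these assumptions the decode ceiling scales exactly with bandwidth, so the best-case generation speedup is 27.5%. That is why the reported 19–27% gains sit just below the bandwidth increase while TTFT, which is compute-bound, improves by a much larger factor.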

The tests also highlight how much model capacity fits comfortably into unified memory. A 24GB MacBook Pro can host an 8B model in BF16 or a 30B MoE at 4-bit with headroom to spare, keeping total usage under 18GB in both cases.
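A quick weight-size estimate shows why both configurations land under the 18GB figure. This is a rough sketch under stated assumptions: it counts only the model weights at a nominal bit width, ignores quantization metadata (scales and offsets), the KV cache, and runtime overhead, and uses round parameter counts. The helper name and the dictionary are hypothetical.

```python
def weight_footprint_gb(params_billion, bits_per_weight):
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8.0


REPORTED_USAGE_LIMIT_GB = 18.0   # total usage reported as staying under this
SYSTEM_MEMORY_GB = 24.0          # unified memory of the MacBook Pro in question

# A MoE model must keep all experts resident, so the full 30B counts here
# even though only about 3B parameters are active per token.
configs = {
    "8B dense, BF16": (8.0, 16),
    "30B MoE, 4-bit": (30.0, 4),
}

footprints = {
    name: weight_footprint_gb(params, bits)
    for name, (params, bits) in configs.items()
}

for name, gb in footprints.items():
    left_for_rest = REPORTED_USAGE_LIMIT_GB - gb
    print(f"{name}: ~{gb:.1f} GB of weights, "
          f"~{left_for_rest:.1f} GB left under the {REPORTED_USAGE_LIMIT_GB:.0f} GB figure")
```

The estimate gives about 16GB for the dense model and about 15GB for the MoE, which leaves a few gigabytes for the KV cache and runtime within the reported total, and roughly 6GB of the 24GB machine free for everything else.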

Apple says the same accelerator advantages extend beyond language models. For example, generating a 1024×1024 image with FLUX-dev-4bit (12B parameters) runs more than 3.8x faster on an M5 than on an M4. As MLX continues to add features and broaden model support, the company is betting that more of the ML research community will treat Apple silicon not just as a development environment but as a viable inference and experimentation platform.