In the modern enterprise, data is the lifeblood of innovation, driving everything from operational efficiency to strategic decision-making. Yet, the process of transforming raw data into actionable insights is often bottlenecked by complex, time-consuming, and resource-intensive data engineering workflows. From understanding business requirements to designing data pipelines, developing Extract, Transform, Load (ETL) code, and orchestrating data flows, each step demands significant human effort and expertise. As organizations strive for ever-faster insights and pervasive AI integration, the traditional data engineering paradigm is struggling to keep pace.

This article proposes a transformative approach: using agentic solutions, in which interconnected AI agents work collaboratively, to automate the entire data engineering lifecycle. Moving beyond simple scripting or single AI models, agentic systems can interpret complex requirements, generate code, execute tasks, and adapt to feedback, mimicking the decision-making and problem-solving capabilities of human engineers. This shift promises not only to accelerate data delivery but also to democratize data access, reduce operational costs, and free skilled data professionals to focus on higher-value strategic initiatives. We will walk through the typical data engineering process, define where agents can intervene, outline a practical implementation strategy, discuss scaling considerations and human feedback mechanisms, and acknowledge current limitations. Together, these form a playbook for ML practitioners, architects, engineers, and product managers building forward-looking ML platforms aligned with their organizational goals.

Typical data engineering process in an organization

A typical data engineering journey within a large organization involves several distinct stages, each with its own set of challenges:

- Requirements analysis: This initial phase involves understanding business needs, identifying relevant data sources, defining data quality rules, and outlining the desired output. It often requires extensive communication between business stakeholders, data analysts, and data engineers.

  Challenge: Ambiguous requirements and the difficulty of translating business language into technical specifications.

- Design & architecture: Data engineers then translate requirements into a technical design. This includes creating data flow diagrams, defining source-to-target mappings, selecting appropriate technologies (e.g., Kafka, Spark, Snowflake), and planning for scalability and resilience.

  Challenge: Manual diagramming, ensuring optimal performance and cost-efficiency, and keeping designs up to date as requirements evolve.

- ETL/ELT development: This is the core coding phase, in which data engineers write scripts (SQL, Python, Scala) to extract data from sources, transform it according to business rules (cleaning, aggregation, joining), and load it into target data warehouses or data lakes.

  Challenge: Time-consuming manual coding and debugging, and ensuring data quality and lineage.

- Validation: Developed pipelines are rigorously tested against various data scenarios, including edge cases and high volumes, to ensure accuracy, completeness, and performance.

  Challenge: Creating comprehensive test cases and manually validating results against expected outputs.

- Deployment & orchestration: Once validated, pipelines are deployed to production environments and scheduled for execution using orchestration tools (e.g., Apache Airflow, Databricks Workflows).

  Challenge: Complex deployment processes, managing dependencies, and ensuring robust error handling.

- Execution & monitoring: Once deployed and orchestrated, the scheduled jobs run to extract, process, and load data, while monitoring and alerting track the health of the data pipeline.

  Challenge: Identifying issues proactively, managing accumulated technical debt, and optimizing pipelines manually.

This entire process, while critical, is characterized by repetitive tasks, a high potential for human error, and a significant demand for specialized skills.

Scope of agents in data engineering automation

Agentic solutions can automate much of the data engineering workflow by handling repetitive, rule-based, and interpretive tasks. An agent is an autonomous system that can understand its environment, plan, and act to achieve goals. When multiple agents work together, they collaborate through an orchestration layer, with each agent focusing on a specialized task.
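The definition above (understand the environment, plan, act toward a goal) can be illustrated with a small loop. This is a minimal sketch, not any framework's API: the `Agent` class, the rule-based `toy_planner` (which stands in for what would usually be an LLM call), and the two toy tools are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Tool = Callable[[dict], dict]  # a tool takes the current state and returns an updated state


@dataclass
class Agent:
    """Toy agent: observe the state, plan the next action, act with a tool, repeat."""
    name: str
    goal: str
    tools: Dict[str, Tool]
    # Chooses the next tool to call, or None once the goal is met.
    # In a real system this would typically wrap an LLM call.
    planner: Callable[[str, dict], Optional[str]]
    history: List[str] = field(default_factory=list)

    def run(self, state: dict, max_steps: int = 10) -> dict:
        for _ in range(max_steps):
            action = self.planner(self.goal, state)  # plan from the current observation
            if action is None:                        # planner judges the goal achieved
                return state
            state = self.tools[action](state)         # act, producing a new state
            self.history.append(action)
        raise RuntimeError(f"{self.name} did not reach its goal within {max_steps} steps")


def toy_planner(goal: str, state: dict) -> Optional[str]:
    """Rule-based stand-in for an LLM planner."""
    if "rows" not in state:
        return "extract"
    if not state.get("loaded"):
        return "load"
    return None


etl_agent = Agent(
    name="toy-etl-agent",
    goal="load customer rows into the target table",
    tools={
        "extract": lambda s: {**s, "rows": [{"customer_id": 1}, {"customer_id": 2}]},
        "load": lambda s: {**s, "loaded": True},
    },
    planner=toy_planner,
)
final_state = etl_agent.run({})
print(etl_agent.history)  # ['extract', 'load']
```

Re-planning after every action, instead of fixing the whole plan upfront, lets the agent react to what its tools actually returned; the `max_steps` cap keeps a confused planner from looping forever.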

How agentic data engineering can be implemented

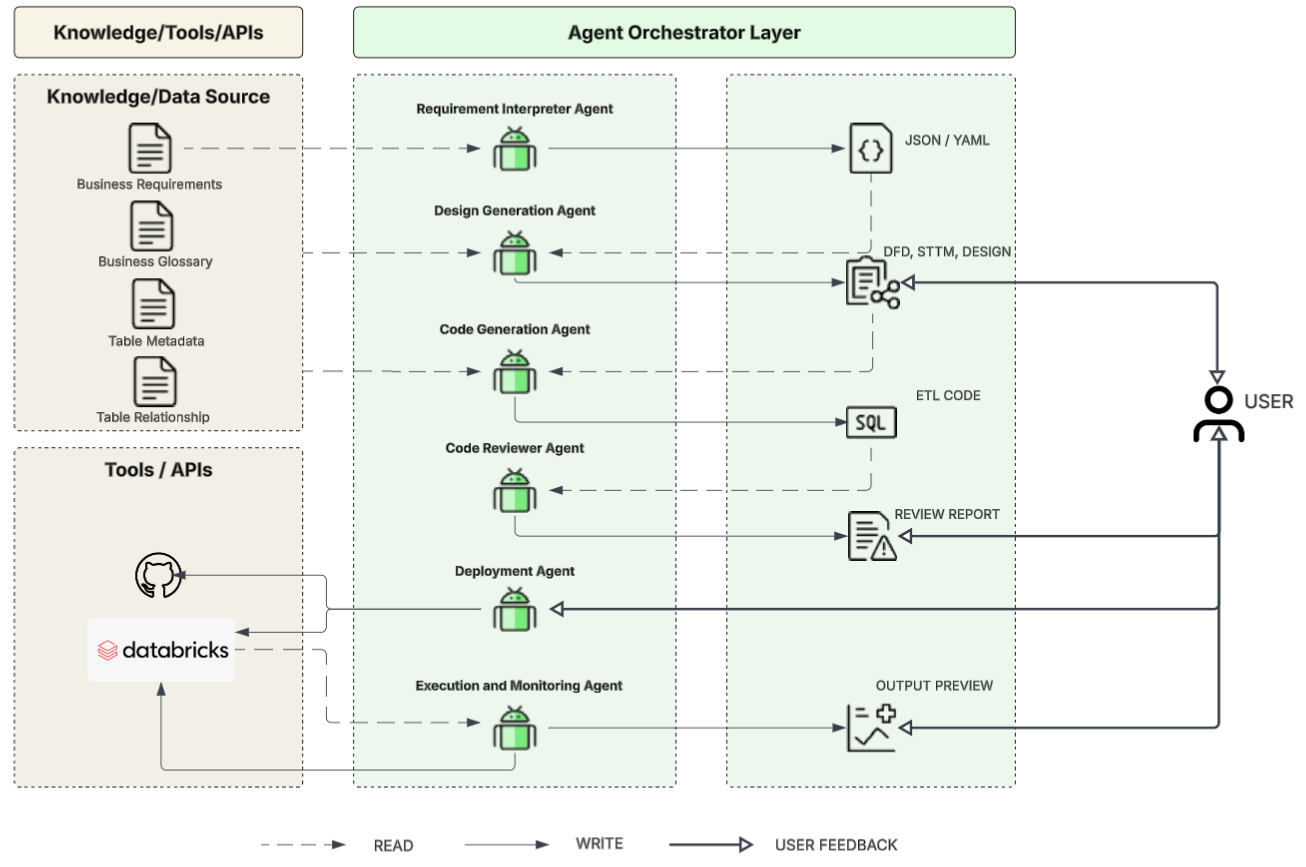

Implementing an agentic data engineering solution involves orchestrating multiple specialized AI agents, each responsible for a specific step in the workflow, with human oversight at critical junctures.

The core of this implementation relies on:

Orchestration layer: A central component (e.g., using frameworks like LangChain, CrewAI, or even custom Python orchestration) that manages the flow between agents, handles communication, and integrates human feedback.

Specialized agents: These agents act autonomously but collaborate toward a shared objective: delivering a validated, production-ready data pipeline.

| Stage | Specialized agent | Role |
| --- | --- | --- |
| Requirements | Requirements interpreter agent | Reads and interprets natural-language requirements, extracts the relevant information, and maps it into a structured representation (YAML or JSON). |
| Design | Design generation agent | Generates data flow diagrams, schemas, source-to-target mappings, and technical specifications. |
| Development | Code generation agent | Produces ETL code (SQL, Python/PySpark). |
| Validation | Code reviewer agent | Analyzes generated code for best practices, performance, and potential errors. |
| Deployment & orchestration | Deployment agent | Manages code commits to GitHub, pipeline definitions in orchestration tools, and deployment. |
| Execution & monitoring | Execution and monitoring agent | Triggers jobs on Databricks, AWS Glue, or Snowflake, monitors execution, and alerts on anomalies. |
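The orchestration layer and the stage-to-agent mapping in the table can be sketched in plain Python, in the spirit of the "custom Python orchestration" option. Everything here is hypothetical: `Stage`, `run_pipeline`, the stub agents, and the `review` callback (which in practice might post to Slack or Teams and wait for a response) are illustrations, not LangChain or CrewAI APIs.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

AgentFn = Callable[[dict], dict]
ReviewFn = Callable[[str, dict], Tuple[bool, str]]  # returns (approved?, reviewer feedback)


class ApprovalRejected(Exception):
    """Raised when a human keeps rejecting a stage's output."""


@dataclass
class Stage:
    name: str
    agent: AgentFn
    needs_approval: bool = False  # human-in-the-loop checkpoint after this stage


def run_pipeline(stages: List[Stage], context: dict, review: ReviewFn, max_attempts: int = 3) -> dict:
    """Run agents in order on a shared context; retry a rejected stage with the feedback attached."""
    for stage in stages:
        for _ in range(max_attempts):
            context = stage.agent(context)
            if not stage.needs_approval:
                break
            approved, feedback = review(stage.name, context)
            if approved:
                break
            context = {**context, "feedback": feedback}  # the agent sees the comments on retry
        else:  # loop finished without a break: every attempt was rejected
            raise ApprovalRejected(f"stage '{stage.name}' rejected {max_attempts} times")
    return context


# Stub agents standing in for the specialized agents; each adds its artifact to the context.
CHURN_STAGES = [
    Stage("requirements", lambda c: {**c, "spec": {"target": "churn_feature_table"}}),
    Stage("design", lambda c: {**c, "design_version": c.get("design_version", 0) + 1},
          needs_approval=True),
    Stage("code", lambda c: {**c, "code": "-- generated ETL"}, needs_approval=True),
    Stage("deploy", lambda c: {**c, "deployed": True}),
]
```

Passing one shared context dictionary between stages keeps each agent independent of the others, and the retry loop is where human feedback re-enters the workflow.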

Worked example

Let’s consider a simple, real-world use case.

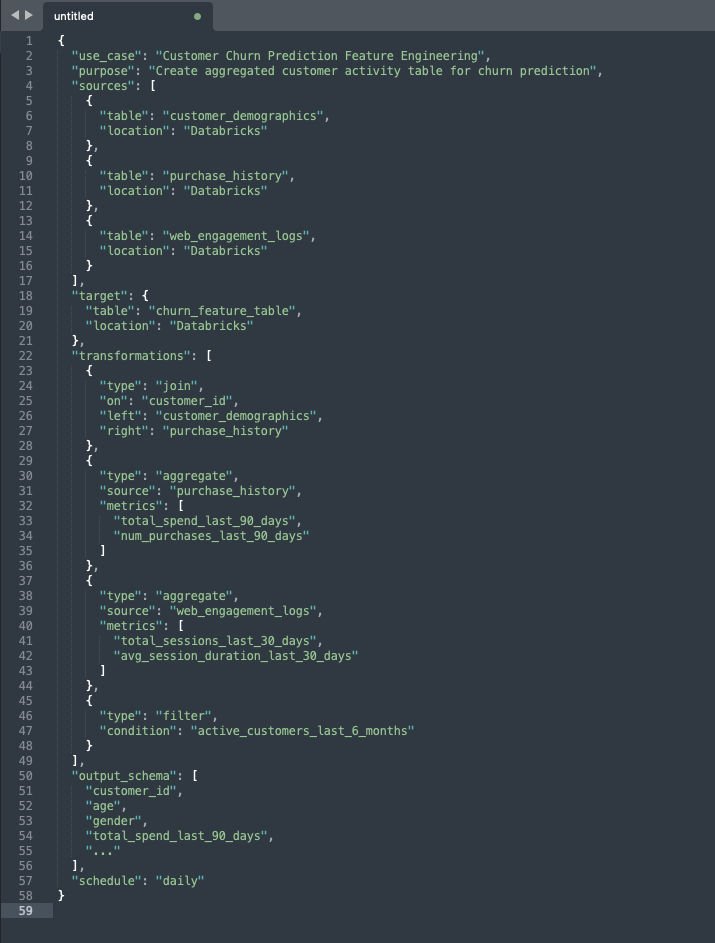

Use case: “Customer Churn Prediction Feature Engineering,” in which we need to build an aggregated customer activity table for churn prediction by joining customer demographics with recent purchase history and web engagement data.

The process begins when a data scientist or product manager updates a Confluence page detailing the new feature engineering requirement. This document specifies:

- Source tables: customer_demographics (Databricks), purchase_history (Databricks), web_engagement_logs (Databricks).

- Target table: churn_feature_table (Databricks).

- Transformations:

  - Join customer_demographics with purchase_history on customer_id.
  - Aggregate purchase_history to get total_spend_last_90_days, num_purchases_last_90_days.
  - Aggregate web_engagement_logs to get total_sessions_last_30_days, avg_session_duration_last_30_days.
  - Filter for active customers in the last 6 months.

- Output schema: customer_id, age, gender, total_spend_last_90_days, num_purchases_last_90_days, total_sessions_last_30_days, avg_session_duration_last_30_days.

- Frequency: Daily

These feature engineering requirements could also be derived by an LLM agent (i.e., Requirements Interpreter Agent) directly from plain English business inputs. For now, however, let’s proceed with the well-defined requirements we have.

- Requirements interpreter agent

This agent, often based on a powerful LLM (e.g., GPT-4, Llama), continuously monitors specified Confluence spaces. Upon detecting a new or updated requirement, it reads the document, extracts key entities (source tables, transformations, output schema, frequency), and translates them into a structured intermediate representation (e.g., YAML or JSON).
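For the churn use case, the interpreter's internal output might look like the structure below, built here as a Python dictionary and serialized to JSON. The field names are hypothetical rather than a standard schema, and `validate_spec` is an illustrative guard against an LLM omitting a required section before the design agent receives it.

```python
import json

# Hypothetical structured representation of the churn requirements.
churn_spec = {
    "use_case": "customer_churn_feature_engineering",
    "platform": "databricks",
    "sources": ["customer_demographics", "purchase_history", "web_engagement_logs"],
    "target": "churn_feature_table",
    "transformations": [
        {"type": "join", "left": "customer_demographics", "right": "purchase_history",
         "on": "customer_id"},
        {"type": "aggregate", "source": "purchase_history", "window_days": 90,
         "metrics": ["total_spend_last_90_days", "num_purchases_last_90_days"]},
        {"type": "aggregate", "source": "web_engagement_logs", "window_days": 30,
         "metrics": ["total_sessions_last_30_days", "avg_session_duration_last_30_days"]},
        {"type": "filter", "condition": "customer active in the last 6 months"},
    ],
    "output_schema": [
        "customer_id", "age", "gender",
        "total_spend_last_90_days", "num_purchases_last_90_days",
        "total_sessions_last_30_days", "avg_session_duration_last_30_days",
    ],
    "frequency": "daily",
}

REQUIRED_KEYS = {"sources", "target", "transformations", "output_schema", "frequency"}


def validate_spec(spec: dict) -> dict:
    """Reject LLM output that is missing required sections before it reaches the next agent."""
    missing = REQUIRED_KEYS - set(spec)
    if missing:
        raise ValueError(f"spec is missing required keys: {sorted(missing)}")
    return spec


handoff = json.dumps(validate_spec(churn_spec), indent=2)  # JSON passed to the design agent
```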

- Design generation agent

This agent receives the structured requirements. It interacts with a data catalog (e.g., Unity Catalog in Databricks, Collibra) to understand existing table schemas, data types, and potential lineage. It then generates:

- A data flow diagram (e.g., Mermaid.js or Graphviz code that can be rendered).
- A source-to-target mapping document.
- A high-level technical design document outlining the chosen approach (e.g., PySpark for complex transformations).

Human feedback: The generated design is presented to a human data architect or product manager for approval. This can be via a collaboration tool (e.g., Slack, Teams) or a custom UI.

“Design Generation Agent proposes the following data flow for churn_feature_table. Please review: [link to diagram/document]. Approve/Reject?”
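The data flow diagram can be emitted as Mermaid text, which GitHub Markdown and many documentation tools can render. This sketch assumes the simplest possible topology (every source feeds one transformation step that writes the target); `render_mermaid` and its label text are hypothetical, and a real design agent would derive the graph from the structured requirements and catalog lineage.

```python
def render_mermaid(sources: list, target: str, transform_label: str) -> str:
    """Render a left-to-right Mermaid flowchart in which every source feeds one transform step."""
    lines = ["flowchart LR"]
    lines.append(f'    transform["{transform_label}"]')  # declare the node once, with its label
    for source in sources:
        lines.append(f"    {source} --> transform")
    lines.append(f"    transform --> {target}")
    return "\n".join(lines)


diagram = render_mermaid(
    sources=["customer_demographics", "purchase_history", "web_engagement_logs"],
    target="churn_feature_table",
    transform_label="join, aggregate, filter (PySpark)",
)
print(diagram)
```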

- Code generation agent

Upon design approval, this agent uses the detailed design and the source schema information from the data catalog to generate the ETL code. For Databricks, this would typically be PySpark or SQL.
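The code this agent produces might resemble the Spark SQL below. To keep the example deterministic, a plain Python template stands in for the LLM. The column names `amount`, `purchase_date`, `session_start`, `session_duration_sec`, and `last_active_date` are assumptions (the requirements do not name them), as is reading "active in the last 6 months" as a filter on `last_active_date`.

```python
def render_churn_sql(
    target: str = "churn_feature_table",
    demographics: str = "customer_demographics",
    purchases: str = "purchase_history",
    web_logs: str = "web_engagement_logs",
) -> str:
    """Template standing in for LLM-generated Spark SQL; source column names are assumptions."""
    return f"""CREATE OR REPLACE TABLE {target} AS
WITH purchases_90d AS (
  SELECT customer_id,
         SUM(amount) AS total_spend_last_90_days,
         COUNT(*) AS num_purchases_last_90_days
  FROM {purchases}
  WHERE purchase_date >= date_sub(current_date(), 90)
  GROUP BY customer_id
),
sessions_30d AS (
  SELECT customer_id,
         COUNT(*) AS total_sessions_last_30_days,
         AVG(session_duration_sec) AS avg_session_duration_last_30_days
  FROM {web_logs}
  WHERE session_start >= date_sub(current_date(), 30)
  GROUP BY customer_id
)
SELECT d.customer_id,
       d.age,
       d.gender,
       COALESCE(p.total_spend_last_90_days, 0) AS total_spend_last_90_days,
       COALESCE(p.num_purchases_last_90_days, 0) AS num_purchases_last_90_days,
       COALESCE(s.total_sessions_last_30_days, 0) AS total_sessions_last_30_days,
       s.avg_session_duration_last_30_days
FROM {demographics} AS d
LEFT JOIN purchases_90d AS p ON d.customer_id = p.customer_id
LEFT JOIN sessions_30d AS s ON d.customer_id = s.customer_id
WHERE d.last_active_date >= add_months(current_date(), -6)
"""
```

In a Databricks notebook, the statement could be run with `spark.sql(render_churn_sql())`. The left joins keep active customers who had no purchases or sessions in the window and fill their counts with zero; whether such customers belong in the table is exactly the kind of question the human reviewer should settle.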

- Code reviewer agent

This agent (e.g., integrated with static code analysis tools like SonarQube, or another LLM fine-tuned for code quality) reviews the generated code for:

- Adherence to coding standards and best practices.
- Performance optimizations (e.g., efficient joins, partitioning).
- Potential data quality issues or missed edge cases.
- Security vulnerabilities.

Human feedback: The generated code and the review report are presented to a human data engineer for final approval.

“Code Generation Agent has created the PySpark ETL for churn_feature_table. Review its quality report and approve/reject the code for publishing to GitHub: [link to code/report].”
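Part of this review can be deterministic. The sketch below uses Python's standard `ast` module to flag two example patterns in generated PySpark scripts: calls that pull a whole DataFrame onto the driver (`.collect()`, `.toPandas()`) and string literals assigned to credential-like variable names. The rules and the `review_pyspark_source` function are illustrative, not SonarQube's; an LLM reviewer would add judgment on top of checks like these.

```python
import ast
from dataclasses import dataclass
from typing import List


@dataclass
class Finding:
    line: int
    rule: str
    message: str


# DataFrame methods that materialize every row on the driver node.
DRIVER_HEAVY_METHODS = {"collect", "toPandas"}
# Variable-name fragments that suggest a credential.
SECRET_HINTS = ("password", "secret", "token", "api_key")


def review_pyspark_source(source: str) -> List[Finding]:
    """Flag a few risky patterns in generated PySpark code (rules are illustrative)."""
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return [Finding(exc.lineno or 0, "syntax", exc.msg)]

    findings = []
    for node in ast.walk(tree):
        # df.collect() / df.toPandas(): pulls the whole dataset to the driver.
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute):
            if node.func.attr in DRIVER_HEAVY_METHODS:
                findings.append(Finding(node.lineno, "performance",
                                        f".{node.func.attr}() loads the full dataset onto the driver"))
        # name = "literal string" where the name looks like a credential.
        if (isinstance(node, ast.Assign) and isinstance(node.value, ast.Constant)
                and isinstance(node.value.value, str)):
            for target in node.targets:
                if isinstance(target, ast.Name) and any(h in target.id.lower() for h in SECRET_HINTS):
                    findings.append(Finding(node.lineno, "security",
                                            f"possible hard-coded secret in '{target.id}'"))
    return sorted(findings, key=lambda f: f.line)


generated = 'db_password = "hunter2"\nrows = df.collect()\nout = df.groupBy("customer_id").count()\n'
for finding in review_pyspark_source(generated):
    print(finding.line, finding.rule, finding.message)
```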

- Deployment agent

Upon code approval, this agent:

- Publishes the code to GitHub: Creates a new branch, commits the PySpark notebook or script, and opens a pull request (PR).
- Integrates with orchestration (Databricks Workflows/Airflow): Creates or updates the Databricks Workflow definition, specifying the notebook path, schedule, and necessary cluster configurations.

- Execution & monitoring agent

This agent:

- Triggers the initial run of the Databricks Workflow.
- Monitors its execution status (success/failure), duration, and resource utilization.
- If the run succeeds, validates the schema and a sample of the data in data.churn_feature_table.

Human feedback: The execution results and a sample of the output data are presented for final verification.

“The churn_feature_table ETL job has completed successfully. Here is a preview of the output data and schema. Confirm accuracy: [link to Databricks table preview/report].”
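For the orchestration step, the deployment agent could emit a job definition for the Databricks Jobs API 2.1 (`POST /api/2.1/jobs/create`). The sketch below only builds the request body; the repository URL, notebook path, task key, cluster settings, and the 02:00 UTC run time are hypothetical values, the GitHub branch-and-PR step is omitted, and the field names should be checked against the current Jobs API reference before use.

```python
import json
from typing import Any, Dict

# Quartz cron fields: second minute hour day-of-month month day-of-week.
# The 02:00 run time is an assumption; the requirements only say "Daily".
FREQUENCY_TO_CRON = {
    "daily": "0 0 2 * * ?",
    "hourly": "0 0 * * * ?",
}


def build_job_payload(job_name: str, task_key: str, notebook_path: str,
                      git_url: str, frequency: str, branch: str = "main") -> Dict[str, Any]:
    """Request body for POST /api/2.1/jobs/create: one notebook task run from a Git repo."""
    return {
        "name": job_name,
        "git_source": {"git_url": git_url, "git_provider": "gitHub", "git_branch": branch},
        "job_clusters": [{
            "job_cluster_key": "etl_cluster",
            "new_cluster": {
                "spark_version": "14.3.x-scala2.12",  # example runtime version
                "node_type_id": "i3.xlarge",          # example AWS instance type
                "num_workers": 2,
            },
        }],
        "tasks": [{
            "task_key": task_key,
            "job_cluster_key": "etl_cluster",
            "notebook_task": {"notebook_path": notebook_path, "source": "GIT"},
        }],
        "schedule": {
            "quartz_cron_expression": FREQUENCY_TO_CRON[frequency.lower()],  # KeyError if unsupported
            "timezone_id": "UTC",
            "pause_status": "UNPAUSED",
        },
    }


payload = build_job_payload(
    job_name="churn_feature_table_daily",
    task_key="build_churn_features",
    notebook_path="pipelines/churn_feature_table",            # hypothetical, relative to repo root
    git_url="https://github.com/example-org/data-pipelines",  # hypothetical repository
    frequency="Daily",
)
request_body = json.dumps(payload, indent=2)
```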

Below is a high-level diagram depicting the agent orchestrator and the specialized agents working together.

This iterative process, with human touchpoints, ensures both automation efficiency and quality control.
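The execution and monitoring agent's post-run check (schema plus a sanity check on the data) could start as simply as the function below. In PySpark, `actual_columns` would come from `df.columns` and `row_count` from `df.count()`. The expected column list mirrors the output schema in the requirements, while the median-based row-count check and its 50% threshold are assumptions chosen for illustration.

```python
from statistics import median
from typing import List, Sequence

EXPECTED_COLUMNS = [
    "customer_id", "age", "gender",
    "total_spend_last_90_days", "num_purchases_last_90_days",
    "total_sessions_last_30_days", "avg_session_duration_last_30_days",
]


def validate_run_output(
    actual_columns: Sequence[str],
    row_count: int,
    recent_row_counts: Sequence[int],
    max_relative_change: float = 0.5,
) -> List[str]:
    """Return human-readable issues; an empty list means the run passed these checks."""
    issues = []
    missing = [c for c in EXPECTED_COLUMNS if c not in actual_columns]
    unexpected = [c for c in actual_columns if c not in EXPECTED_COLUMNS]
    if missing:
        issues.append(f"missing columns: {missing}")
    if unexpected:
        issues.append(f"unexpected columns: {unexpected}")
    if row_count == 0:
        issues.append("output table is empty")
    elif recent_row_counts:
        # Compare against the median of recent runs, which resists a single outlier run.
        baseline = median(recent_row_counts)
        if baseline > 0 and abs(row_count - baseline) / baseline > max_relative_change:
            issues.append(
                f"row count {row_count} differs from the recent median {baseline} "
                f"by more than {max_relative_change:.0%}"
            )
    return issues
```

Any non-empty result would be attached to the human verification message rather than silently failing the run.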


Scaling plan

Scaling an agentic data engineering platform involves several dimensions:

- Orchestration scalability: The central orchestration layer must be able to manage hundreds or thousands of concurrent agent workflows. This requires a robust, distributed architecture, potentially using Kubernetes for agent deployment and a message queue (e.g., Kafka) for inter-agent communication.

- Agent specialization: As complexity grows, agents can become more specialized (e.g., a “SQL Optimizer Agent,” a “Schema Evolution Agent,” a “Data Quality Rule Agent”). This modularity enhances scalability and maintainability.

- LLM management: Efficiently manage access to various LLMs (cloud-based APIs, self-hosted models), including rate limiting, cost optimization, and model versioning. For privacy-sensitive data, consider fine-tuning smaller, open-source models (e.g., Llama 3) for specific tasks and hosting them internally.

- Integration with enterprise systems: Develop robust connectors and APIs for seamless integration with:

  - Data catalogs (e.g., Databricks Unity Catalog, Alation, Collibra) for metadata, schemas, and lineage.
  - Version control systems (e.g., GitHub Enterprise, GitLab) for code management.
  - CI/CD pipelines for automated testing and deployment.
  - Monitoring and alerting systems (e.g., Splunk, Datadog) for operational insights.
  - Human collaboration tools (e.g., Slack, Microsoft Teams) for feedback loops.

- Compute & storage: Ensure the underlying data processing infrastructure (e.g., Databricks clusters, Snowflake warehouses) can scale dynamically to handle agent-generated job demands.
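Rate limiting per model is one concrete piece of LLM management. Below is a standard token-bucket limiter; the model names and budgets in `LIMITS` and the `route` helper are hypothetical, and the injectable `clock` exists so the limiter can be tested without sleeping.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Token-bucket limiter: bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock  # injectable time source, e.g. a fake clock in tests
        self.tokens = capacity
        self.last = clock()

    def try_acquire(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last call, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Hypothetical per-model budgets that a shared LLM gateway would check before every call.
LIMITS: Dict[str, TokenBucket] = {
    "hosted-large-model": TokenBucket(capacity=5, rate=0.5),     # about 30 requests/minute
    "internal-small-model": TokenBucket(capacity=20, rate=5.0),  # about 300 requests/minute
}


def route(model: str) -> bool:
    """Return True if a request to `model` may be sent now."""
    return LIMITS[model].try_acquire()
```

A bucket allows short bursts (up to `capacity`) while holding the long-run rate to `rate` requests per second; when `route` returns False, the caller can queue the request or fall back to another model.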

Human feedback

Human feedback is not merely an optional step but a critical component of the agentic data engineering pipeline, ensuring safety, accuracy, and alignment with evolving business context. This feedback mechanism serves multiple purposes:

- Correction & refinement: Humans can correct errors or suggest improvements in agent-generated designs or code. This is particularly crucial in the early stages of agent development.

- Validation & trust: Final human approval points (e.g., for design, code deployment, and output data validation) build trust in the automated system and mitigate the risks of “black box” decisions.

- Context & nuance: Agents may struggle with implicit business context, regulatory changes, or nuanced interpretations of requirements. Human engineers provide this critical layer of understanding.

- Learning & improvement: Human feedback (approvals, rejections, suggestions) can be used to continuously fine-tune and improve the underlying LLMs and agent logic, making the system smarter over time. This can involve reinforcement learning from human feedback (RLHF) or supervised fine-tuning.

Feedback mechanisms should be integrated into existing workflows (e.g., pull request comments, chat notifications) to minimize disruption for human engineers.
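To feed approvals and rejections back into fine-tuning, each review can be recorded as a structured event. This sketch, with hypothetical field names, keeps every event as a JSONL audit log and derives prompt/completion pairs for supervised fine-tuning: approved outputs are used as-is, and rejected outputs are used only when the reviewer supplied a correction.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, List, Optional


@dataclass
class FeedbackEvent:
    stage: str                              # e.g. "design" or "code"
    prompt: str                             # input the agent received
    output: str                             # what the agent produced
    approved: bool
    reviewer_comment: Optional[str] = None
    corrected_output: Optional[str] = None  # the reviewer's fix, if they supplied one


def to_sft_examples(events: List[FeedbackEvent]) -> List[Dict[str, str]]:
    """Build prompt/completion pairs: approved outputs as-is, rejected ones only when corrected."""
    examples = []
    for event in events:
        if event.approved:
            examples.append({"prompt": event.prompt, "completion": event.output})
        elif event.corrected_output:
            examples.append({"prompt": event.prompt, "completion": event.corrected_output})
    return examples


def to_jsonl(records: List[dict]) -> str:
    """One JSON object per line, a common format for fine-tuning datasets and audit logs."""
    return "\n".join(json.dumps(record) for record in records)


events = [
    FeedbackEvent("code", "generate churn ETL", "SELECT customer_id FROM purchase_history",
                  approved=True),
    FeedbackEvent("design", "design churn flow", "v1", approved=False,
                  reviewer_comment="missing web logs", corrected_output="v2"),
    FeedbackEvent("design", "design churn flow", "v1", approved=False, reviewer_comment="unclear"),
]
audit_log = to_jsonl([asdict(e) for e in events])  # keep every event for traceability
training_set = to_jsonl(to_sft_examples(events))   # keep only usable training pairs
```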

Limitations

Despite their immense potential, agentic data engineering solutions face several limitations:

- Accuracy & hallucination: LLMs can “hallucinate” – generate plausible but incorrect information. This risk is higher with complex, ambiguous requirements or when generating highly specific code. Rigorous testing and human review are essential.

- Context window & complexity: While improving, LLMs have limitations on the amount of context they can process. Very large, highly complex data engineering projects with numerous interdependencies might exceed an agent’s current ability to reason comprehensively.

- Cost & compute: Running powerful LLMs for code generation and reasoning can be computationally expensive. Optimizing agent interactions and prompt engineering is crucial for cost control.

- Data privacy & security: Agents interacting with sensitive data require robust security controls, including access management, data anonymization, and adherence to privacy regulations (e.g., GDPR, CCPA).

- Debugging & explainability: When agents generate incorrect code or designs, debugging the “agent’s thought process” can be challenging. Improving the explainability of agent decisions is an active area of research.

- Rapidly evolving technologies: The AI landscape is changing rapidly. Keeping agentic systems updated with the latest LLMs, frameworks, and best practices will be a continuous effort.

- Human adoption & trust: Overcoming skepticism from data engineers and analysts who might feel threatened or doubt the reliability of automated systems will require strong change management, demonstrable successes, and transparent feedback loops.
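One inexpensive guard against hallucinated identifiers is to check every table and column an agent references against the data catalog before code is generated or run. The `find_unknown_references` function and the catalog snapshot below are hypothetical; in Databricks, a snapshot like this could be populated from Unity Catalog's `information_schema` column metadata.

```python
from typing import Dict, Iterable, List, Set


def find_unknown_references(
    referenced: Dict[str, Iterable[str]],
    catalog: Dict[str, Set[str]],
) -> List[str]:
    """List tables and columns an agent referenced that do not exist in the catalog snapshot."""
    problems = []
    for table, columns in referenced.items():
        if table not in catalog:
            problems.append(f"unknown table: {table}")
            continue  # skip column checks for a table that does not exist
        for column in columns:
            if column not in catalog[table]:
                problems.append(f"unknown column: {table}.{column}")
    return problems


# Hypothetical catalog snapshot.
CATALOG = {
    "customer_demographics": {"customer_id", "age", "gender", "last_active_date"},
    "purchase_history": {"customer_id", "amount", "purchase_date"},
}

# References extracted from a generated design: one invented column and one invented table.
print(find_unknown_references(
    {"purchase_history": ["customer_id", "purchase_amt"], "web_logs": ["customer_id"]},
    CATALOG,
))
# ['unknown column: purchase_history.purchase_amt', 'unknown table: web_logs']
```

A non-empty result can send the artifact back to the generating agent with the list attached, before a human reviewer ever sees it.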

Case studies from organizations and enterprises

While fully autonomous, end-to-end agentic data engineering is still emerging, several leading organizations are pioneering elements of this vision:

- Databricks: Databricks is investing heavily in AI capabilities for its platform, including AI-driven SQL generation, cataloging, and optimization features. Its “Lakehouse AI” strategy aims to embed AI throughout the data lifecycle and simplify engineering tasks. For example, its SQL AI functionality lets users generate SQL queries from natural language descriptions, a step toward agentic code generation.

- Microsoft (Copilot for Azure Data Factory): Microsoft is integrating Copilot into its Azure Data Factory (ADF) for natural language-to-pipeline generation. Users can describe the data integration task they want to achieve (e.g., “copy data from SQL Server to Azure Data Lake and transform it”), and Copilot will generate the ADF pipeline components.

- Google Cloud (Vertex AI): Google Cloud offers services such as “Duet AI” (since rebranded as Gemini for Google Cloud), which assists developers with code generation and summarization and is applicable to data pipeline development. Google’s broader strategy involves using AI to simplify cloud operations, including data management.

- FinTech and tech startups: Numerous smaller firms and startups are building specialized AI agents for specific data tasks, such as automated schema mapping, data quality anomaly detection, or dynamic data synthesis for testing. These often use LLMs to interpret data dictionaries and user needs. While they are not always full agentic data engineering systems, they represent components that could be orchestrated in a larger agentic system.

These examples highlight a growing trend where AI is being used to augment and automate complex data-related tasks, paving the way for the vision of fully autonomous data engineering.

Conclusion

Autonomous data engineering powered by interconnected AI agents can transform how organizations manage data by automating interpretation, design, coding, and orchestration. This brings greater speed, efficiency, and scalability, though challenges around accuracy, cost, and human oversight remain. With rapid advances in LLMs and agentic frameworks, self-optimizing data pipelines are becoming a strategic reality. The path forward requires strong platforms, governance, and human-AI collaboration, marking a shift toward a new era of enterprise data management.