Paris-based artificial intelligence startup Mistral AI has announced the open-source release of its lightweight AI model, Mistral Small 3.1, which the company claims surpasses similar models created by OpenAI and Google LLC. This release is poised to intensify competition in the development of cost-effective large language models.

Mistral AI unveils game-changing lightweight model: Mistral Small 3.1

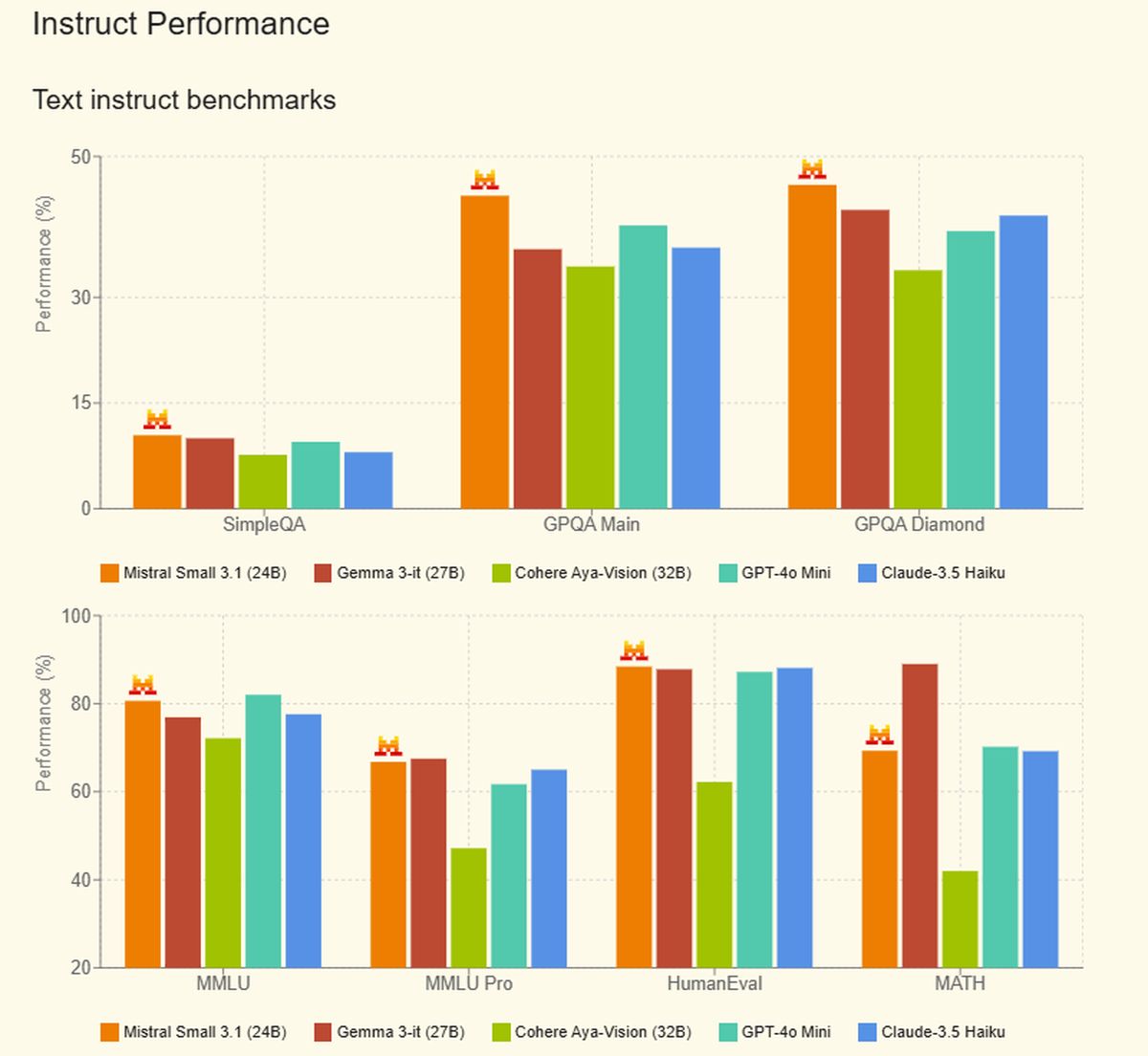

Mistral Small 3.1 processes both text and images with just 24 billion parameters, far fewer than many leading models, yet it remains competitive on performance. Compared with its predecessor, Mistral Small 3, the new model offers improved text performance, better multimodal understanding, and an expanded context window of up to 128,000 tokens.

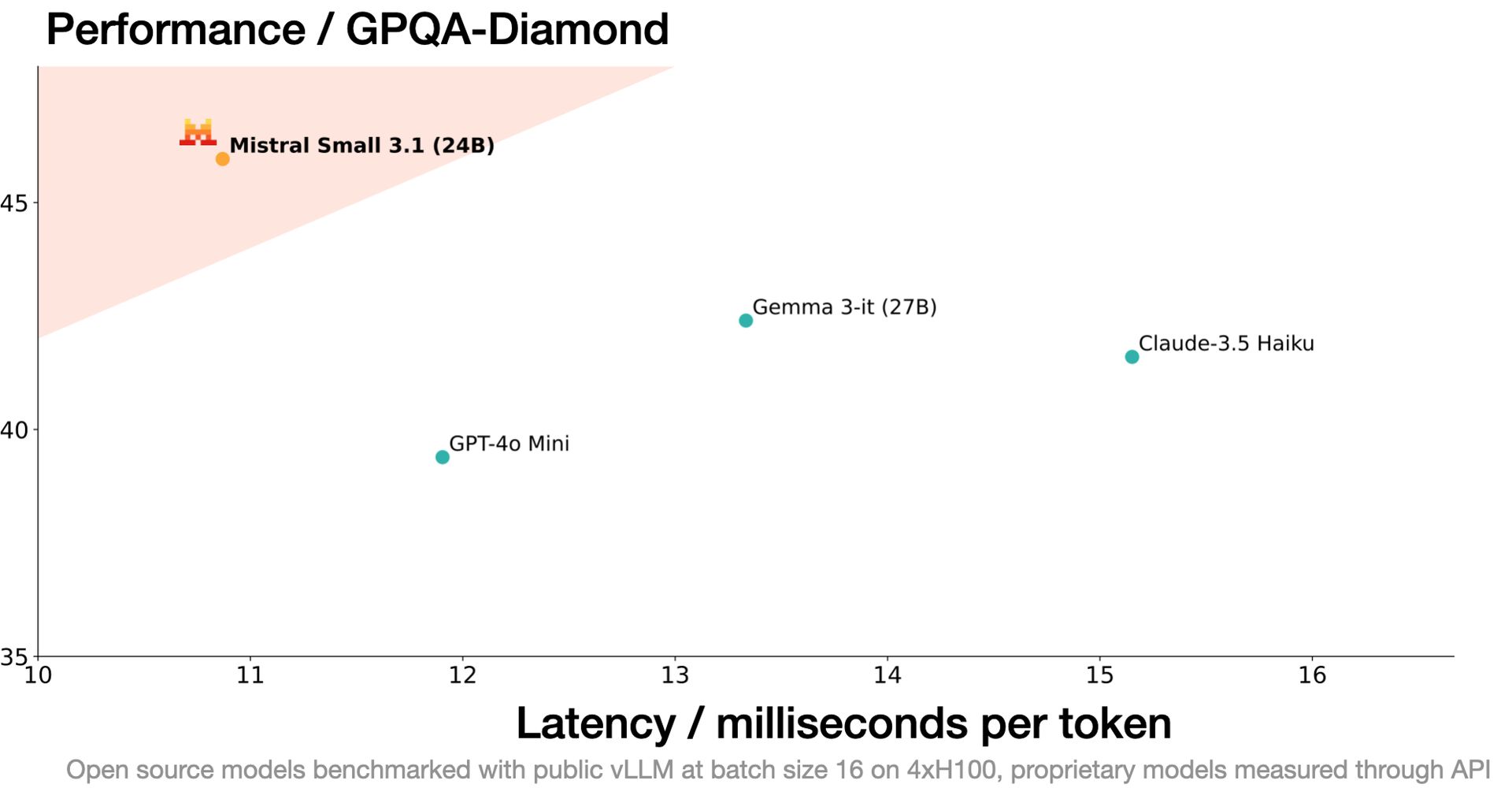

The model generates roughly 150 tokens per second, which suits applications that need rapid responses. Mistral AI focuses on algorithmic improvements and training optimization rather than simply adding more computing power, as many competitors do. This approach improves the performance of smaller model architectures and makes advanced AI more accessible: Mistral Small 3.1 can run on a single RTX 4090 GPU or a Mac laptop with 32GB of RAM.
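The hardware claim can be sanity-checked with simple arithmetic. The sketch below is a back-of-envelope estimate, not a Mistral figure: it assumes weight memory equals parameters × bits per parameter ÷ 8, treats 1 GB as 10^9 bytes, and adds a hypothetical flat 2 GB for the KV cache and activations (the real amount grows with context length). It also converts the quoted 150 tokens per second into wall-clock time.

```python
# Back-of-envelope memory and latency estimates for a 24B-parameter model.
# Assumptions (not from Mistral): weights dominate memory, 1 GB = 1e9 bytes,
# and KV cache/activations are covered by a flat, hypothetical overhead.

PARAMS = 24e9            # parameter count cited for Mistral Small 3.1
TOKENS_PER_SECOND = 150  # throughput figure cited in the article


def weight_memory_gb(params: float, bits_per_param: int) -> float:
    """Memory needed just to hold the weights, in GB (1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


def fits(params: float, bits_per_param: int, device_gb: float,
         overhead_gb: float = 2.0) -> bool:
    """True if the weights plus an assumed overhead fit in the device's memory."""
    return weight_memory_gb(params, bits_per_param) + overhead_gb <= device_gb


def generation_seconds(num_tokens: int,
                       tokens_per_second: float = TOKENS_PER_SECOND) -> float:
    """Time to stream num_tokens at a constant decoding rate."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    for bits in (16, 8, 4):
        gb = weight_memory_gb(PARAMS, bits)
        print(f"{bits:>2}-bit weights: {gb:5.1f} GB | "
              f"RTX 4090 (24 GB): {fits(PARAMS, bits, 24)} | "
              f"32 GB Mac: {fits(PARAMS, bits, 32)}")
    print(f"1,000 tokens at {TOKENS_PER_SECOND} tok/s: "
          f"{generation_seconds(1000):.1f} s")
```

Under these assumptions, 16-bit weights (about 48 GB) fit neither device, which suggests that running the model on a single 24 GB RTX 4090 relies on quantizing the weights to around 4 bits. A 32 GB Mac also has room for 8-bit weights, though the operating system and other apps share that memory.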

Founded in 2023 by former researchers from Google DeepMind and Meta Platforms, Mistral AI has quickly become Europe’s leading AI company, raising over $1.04 billion at a valuation of around $6 billion, far below OpenAI’s reported valuation of $80 billion. Mistral Small 3.1 follows a series of recent releases, including the Arabic-focused model Saba and Mistral OCR, which uses optical character recognition to convert PDF documents into Markdown files.


The company’s diverse portfolio includes Mistral Large 2, a multimodal model called Pixtral, a code-generation model named Codestral, and models optimized for edge devices known as Les Ministraux. Mistral’s commitment to open source sets it apart in an industry dominated by proprietary models. This strategy has already enabled the community to build reasoning models on top of the earlier Mistral Small 3, showing how collaboration can speed up AI development.

Open-sourcing its models lets Mistral draw on the wider AI community for research and development, although it can make generating revenue harder; as a result, the company is turning to specialized services and enterprise applications. Mistral Small 3.1 is positioned as a significant technical achievement, reinforcing the case that powerful AI models can come in smaller, more efficient packages.

Mistral Small 3.1 is available for download through Hugging Face and can be accessed via Mistral’s API or Google Cloud’s Vertex AI platform. In the coming weeks, it will also be available through Nvidia’s NIM microservices and Microsoft’s Azure AI Foundry.
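For developers taking the API route, a request might look like the sketch below, which uses only Python’s standard library. The endpoint URL, the `mistral-small-latest` model alias, and the text/image message schema are assumptions based on Mistral’s chat completions API rather than details from this announcement, so check them against the current documentation. `MISTRAL_API_KEY` is a hypothetical environment variable name.

```python
import json
import os
import urllib.request
from typing import Optional

# Assumed values: verify the endpoint and model name against Mistral's current API docs.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-small-latest"  # assumed alias pointing at the latest Mistral Small


def build_request(prompt: str, api_key: str,
                  image_url: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a chat completion request with optional image input."""
    content = [{"type": "text", "text": prompt}]
    if image_url is not None:
        # Assumed image chunk format for multimodal input.
        content.append({"type": "image_url", "image_url": image_url})
    payload = {"model": MODEL, "messages": [{"role": "user", "content": content}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first reply's text (requires network access)."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("MISTRAL_API_KEY")  # hypothetical variable name
    if key:
        req = build_request("Summarize this chart in one sentence.", key,
                            image_url="https://example.com/chart.png")
        print(send(req))
    else:
        print("Set MISTRAL_API_KEY to send a real request.")
```

Building the request separately from sending it keeps the payload easy to inspect or test without network access.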

Released under the Apache 2.0 license, Mistral Small 3.1 is designed to handle a range of generative AI tasks, including instruction following, conversational assistance, image understanding, and function calling. With support for rapid responses and domain-specific fine-tuning, it is tailored for enterprise and consumer applications that require multimodal understanding.

According to Mistral, the model outperforms comparable proprietary models on a range of benchmarks. Both Mistral Small 3.1 Base and Mistral Small 3.1 Instruct are available for download on Hugging Face, and a developer playground offering API access is available starting today. Enterprises that need optimized inference infrastructure can arrange it by contacting the company directly.

Featured image credit: Mistral