Large language models (LLMs) are getting smarter, but they're also hitting a wall: handling long pieces of text is slow and computationally expensive. Traditional attention mechanisms, the component that lets a model relate each token to the rest of its context, scale poorly as inputs grow, making long-context models costly to train and run.

Now, researchers from DeepSeek-AI and Peking University have introduced an approach called Native Sparse Attention (NSA). The method promises to make AI models significantly faster, cheaper, and more efficient, all while maintaining the same level of reasoning capability as traditional approaches.

Why AI’s attention problem needs a fix

Imagine reading a book where you have to keep every sentence in mind at all times. That's how Full Attention mechanisms work in AI: every token is compared against every other token in the context. Because the number of comparisons grows quadratically with context length, the approach becomes slow and computationally heavy once inputs reach thousands of words.
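To make the scaling concrete, here is a minimal sketch that counts how many query-key comparisons Full Attention performs for a given context length. It assumes a causal (decoder-style) model and a single attention head; the function name is illustrative and not taken from the study.

```python
def attention_score_count(seq_len: int) -> int:
    """Query-key scores computed by causal Full Attention for one head.

    Token i attends to tokens 0..i, so the total is n * (n + 1) / 2.
    """
    return seq_len * (seq_len + 1) // 2


# Doubling the context length roughly quadruples the work.
for n in (1_000, 8_000, 64_000):
    print(f"{n:>6} tokens -> {attention_score_count(n):,} scores")
```

The count is per head and per layer, so the real cost is this figure multiplied by both.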

To address this, researchers have explored Sparse Attention, which processes only the most important information instead of everything. However, existing sparse methods have major weaknesses:

- They’re hard to train from scratch, often requiring models to first learn with Full Attention before switching to a sparse approach.

- They don’t fully optimize for modern hardware, meaning theoretical speed improvements don’t always translate to real-world efficiency.

How NSA changes the game

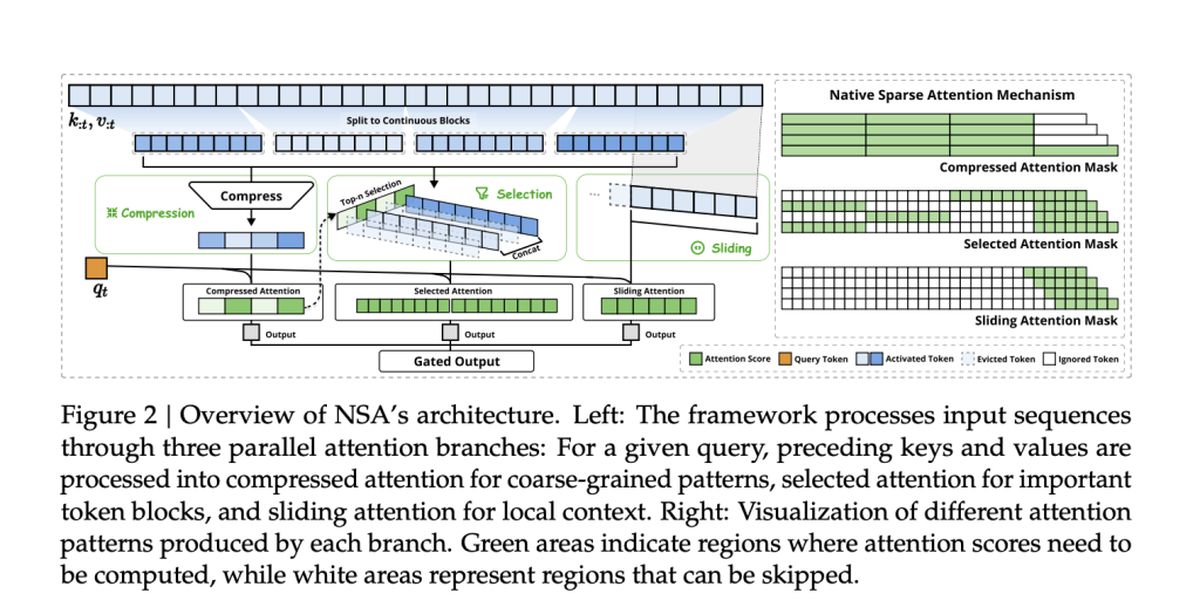

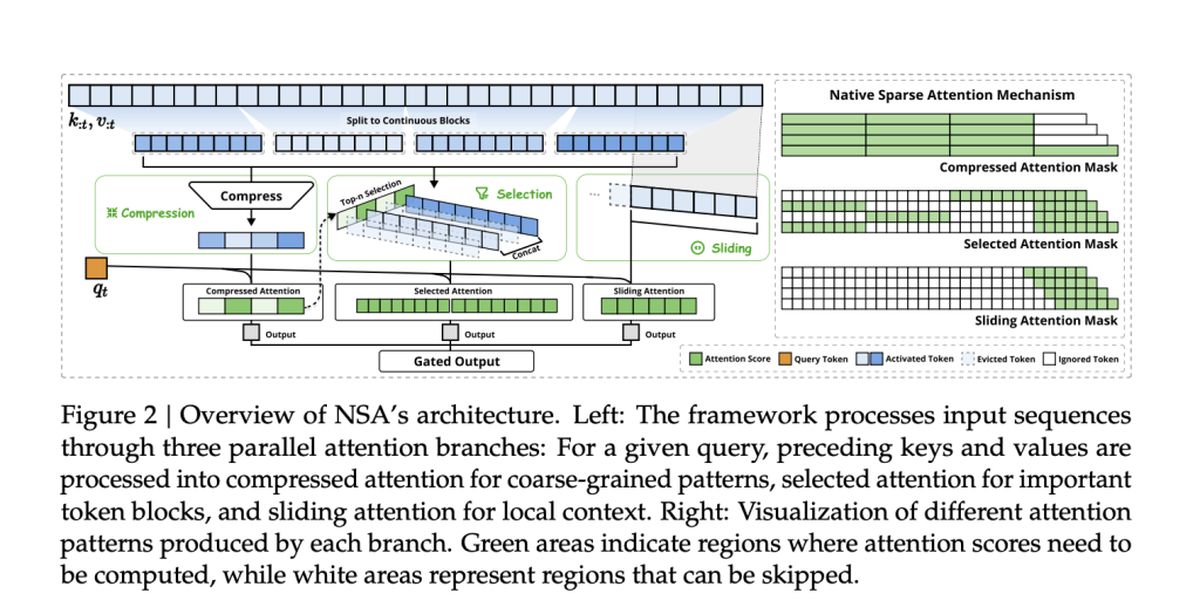

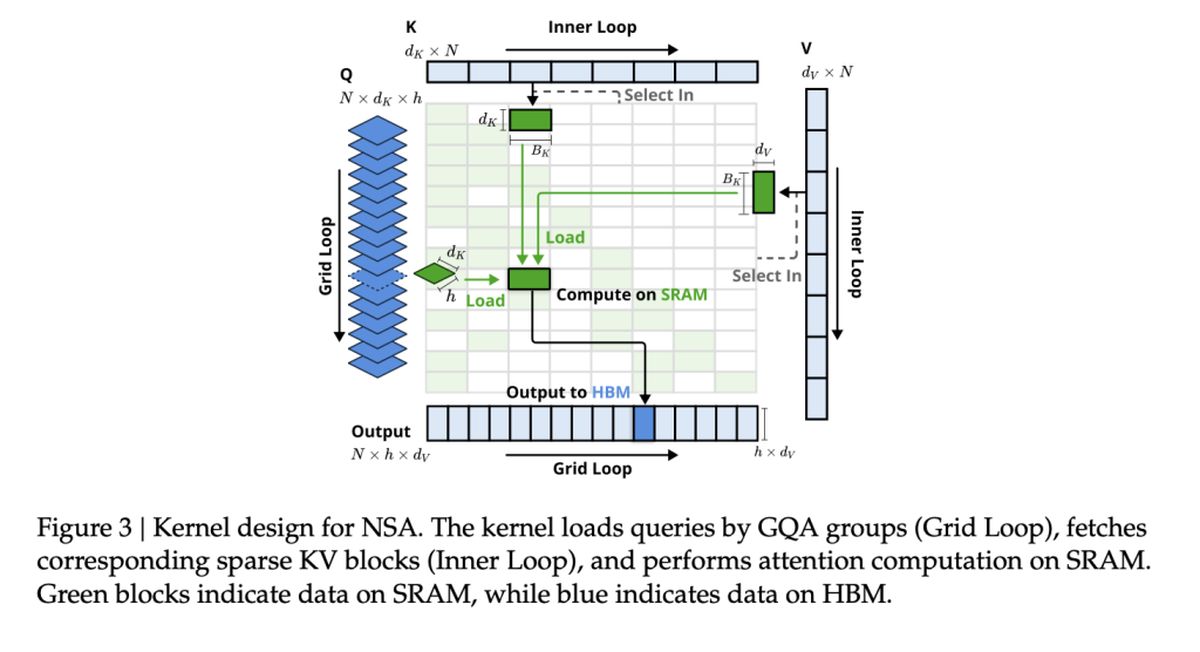

The team behind NSA, including Jingyang Yuan, Huazuo Gao, Damai Dai, and their colleagues, took a fresh approach. Their method natively integrates sparsity from the start, rather than applying it as an afterthought.

NSA achieves this with two key innovations:

- Hardware-aligned efficiency: NSA is built to maximize GPU performance, avoiding memory bottlenecks and ensuring real-world speedups.

- End-to-end trainability: Unlike previous sparse methods, NSA is fully trainable from scratch, reducing training costs without losing accuracy.

Speed and accuracy: the NSA advantage

So, how does NSA stack up against traditional Full Attention models? According to the study, NSA achieves up to 11× faster decoding on 64k-length sequences while still matching, or even outperforming, Full Attention on key benchmarks.

Some of the biggest wins include:

- Faster processing: NSA speeds up AI’s ability to handle long documents, codebases, and multi-turn conversations.

- Better reasoning: Despite being “sparse,” NSA models match or exceed Full Attention models in chain-of-thought reasoning tasks.

- Lower costs: By reducing computation without sacrificing performance, NSA could make advanced AI more affordable to train and deploy.

Existing sparse attention methods

Many existing sparse attention mechanisms attempt to reduce computational overhead by selectively pruning tokens or optimizing memory access. However, they often fall short in practical implementation, either because they introduce non-trainable components or fail to align with modern GPU architectures.

For example:

- ClusterKV and MagicPIG rely on discrete clustering or hashing techniques, which disrupt gradient flow and hinder model training.

- H2O and MInference apply sparsity only during specific stages of inference, limiting speed improvements across the full pipeline.

- Quest and InfLLM use blockwise selection methods, but their heuristic-based scoring often results in lower recall rates.
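The blockwise selection idea mentioned above can be sketched in a few lines. This is a toy illustration, not the scoring used by Quest, InfLLM, or NSA: it assumes each block is scored by the dot product between the query and the block's mean key, keeps the `top_k` highest-scoring blocks, and runs ordinary softmax attention over only those tokens. All function and parameter names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def blockwise_sparse_attention(query, keys, values, block_size, top_k):
    """Attend only to tokens in the top_k highest-scoring key blocks."""
    # Split token indices into contiguous blocks.
    blocks = [list(range(start, min(start + block_size, len(keys))))
              for start in range(0, len(keys), block_size)]

    # Heuristic block score (an assumption): query . mean key of the block.
    def block_score(token_ids):
        mean_key = [sum(keys[i][d] for i in token_ids) / len(token_ids)
                    for d in range(len(query))]
        return dot(query, mean_key)

    ranked = sorted(range(len(blocks)),
                    key=lambda b: block_score(blocks[b]), reverse=True)
    selected = [i for b in sorted(ranked[:top_k]) for i in blocks[b]]

    # Standard scaled softmax attention, restricted to the selected tokens.
    scale = 1.0 / math.sqrt(len(query))
    logits = [dot(query, keys[i]) * scale for i in selected]
    peak = max(logits)
    weights = [math.exp(x - peak) for x in logits]
    total = sum(weights)
    output = [sum(w * values[i][d] for w, i in zip(weights, selected)) / total
              for d in range(len(values[0]))]
    return output, selected


keys = [[0.0, 1.0]] * 4 + [[5.0, 0.0]] * 2 + [[0.0, 0.0]] * 2
values = [[float(i)] for i in range(8)]
output, used = blockwise_sparse_attention([1.0, 0.0], keys, values,
                                          block_size=2, top_k=1)
print(used, output)
```

Note that the top-k step is a discrete choice: a block is either kept or dropped. A heuristic score can therefore miss relevant tokens, and the selection itself passes no gradient back to the scoring.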

NSA addresses these limitations by integrating sparsity natively—ensuring efficiency in both training and inference while preserving model accuracy. This means no post-hoc approximations or trade-offs between speed and reasoning capability.

NSA’s performance on real-world tasks

To validate NSA’s effectiveness, researchers tested it across a range of AI tasks, comparing its performance with traditional Full Attention models and state-of-the-art sparse attention methods. The results highlight NSA’s ability to match or surpass Full Attention models while significantly reducing computational costs.

General benchmark performance

NSA demonstrated strong accuracy across knowledge, reasoning, and coding benchmarks, including:

- MMLU & CMMLU: Matching Full Attention in knowledge-based tasks

- GSM8K & MATH: Outperforming Full Attention in complex reasoning

- HumanEval & MBPP: Delivering solid coding performance

Long-context understanding

NSA excels at handling long-context sequences in benchmarks like LongBench. In tasks requiring deep contextual memory, NSA maintained:

- High recall in retrieval tasks (Needle-in-a-Haystack, document QA)

- Stable accuracy in multi-hop question answering (HotpotQA, 2WikiMultihopQA) and long-document summarization (GovReport)

Real-world speed gains

The hardware-aligned optimizations in NSA lead to:

- Up to 9× faster forward computation for 64k-length sequences

- Up to 6× faster backward computation during training compared to Full Attention models

- Reduced memory bandwidth consumption, making large-scale AI applications more feasible
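A back-of-envelope calculation shows why reading fewer cached keys and values matters during decoding, where each new token must load the entries it attends to. The sketch below compares Full Attention, which loads the whole context, with a sparse scheme that loads at most a fixed token budget. The budget value is a hypothetical placeholder, not a figure from the study, and the ratio is an upper bound on speedup that ignores all other costs.

```python
def decode_memory_ratio(context_len: int, sparse_budget: int) -> float:
    """Ratio of KV tokens loaded per decoding step: full vs. sparse.

    Full Attention loads every cached token; the sparse scheme (assumed)
    loads at most `sparse_budget` tokens regardless of context length.
    """
    full_tokens = context_len
    sparse_tokens = min(context_len, sparse_budget)
    return full_tokens / sparse_tokens


# Hypothetical budget of 4,096 tokens per step.
for n in (8_192, 32_768, 65_536):
    print(f"{n:>6} tokens -> {decode_memory_ratio(n, 4_096):.1f}x fewer KV loads")
```

Because the budget stays fixed while the context grows, the advantage widens with longer sequences, which matches the article's emphasis on long-context workloads.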