OpenAI may soon release Operator, an AI tool capable of taking control of a user’s PC and performing actions on their behalf. Software engineer Tibor Blaho, who has a track record of accurately leaking upcoming AI products, claims to have found evidence supporting this development.

OpenAI plans January launch for AI tool Operator

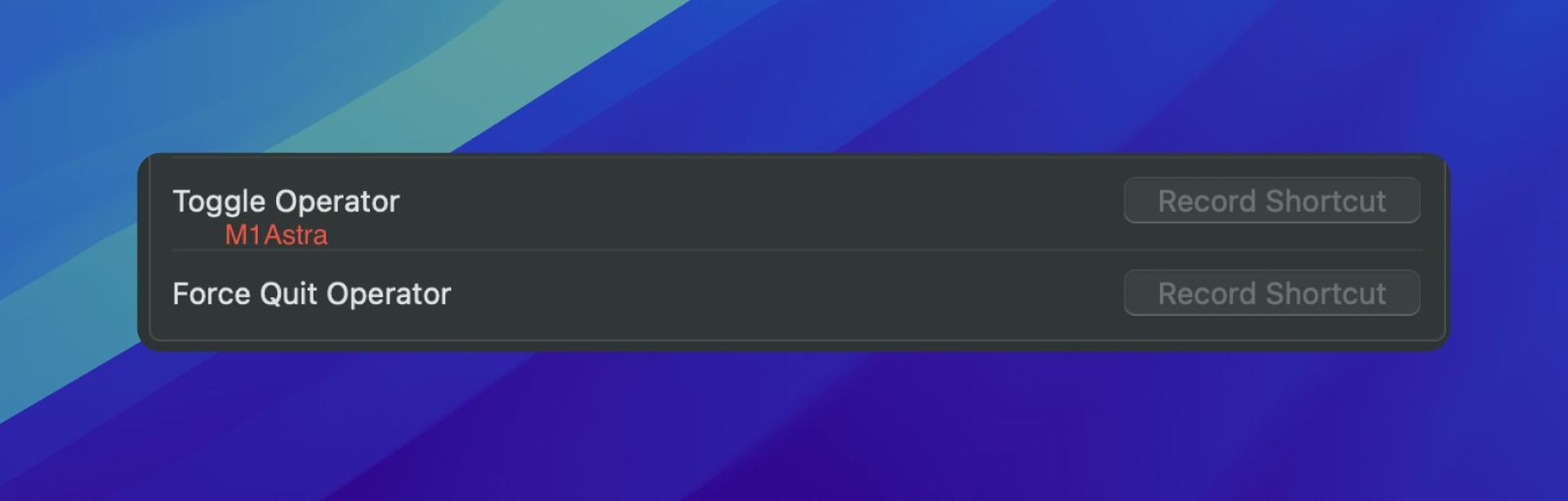

OpenAI is reportedly aiming for a January launch of Operator. Blaho’s recent discoveries include hidden options in OpenAI’s ChatGPT client for macOS that allow users to define shortcuts to “Toggle Operator” and “Force Quit Operator.” Furthermore, Blaho notes that OpenAI has added references to Operator on its website, although these references are not yet publicly visible.


According to Blaho, the website also contains unpublished tables comparing Operator’s performance with that of other computer-using AI systems. If the numbers are accurate, they suggest that Operator’s reliability varies considerably by task. For instance, on OSWorld, a benchmark that simulates a real computer environment, the “OpenAI Computer Use Agent (CUA)” scored 38.1%, better than Anthropic’s model but well below the 72.4% achieved by humans. The OpenAI CUA surpasses human performance on WebVoyager, which assesses an AI’s web navigation skills, but falls short of human-level scores on another benchmark, WebArena.
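To put the OSWorld figures in perspective, the gap can be worked out directly. The short Python sketch below uses only the two scores quoted above; the function name `gap_to_human` and the dictionary layout are illustrative choices, not anything taken from the leaked tables.

```python
# OSWorld scores as quoted from the leaked table (percent of tasks completed).
OSWORLD_SCORES = {"OpenAI CUA": 38.1, "Human": 72.4}


def gap_to_human(scores, agent, baseline="Human"):
    """Return (percentage-point gap, agent score as a fraction of the baseline)."""
    agent_score = scores[agent]
    human_score = scores[baseline]
    return human_score - agent_score, agent_score / human_score


points, ratio = gap_to_human(OSWORLD_SCORES, "OpenAI CUA")
print(f"Gap: {points:.1f} points; CUA reaches {ratio:.0%} of the human score")
# prints "Gap: 34.3 points; CUA reaches 53% of the human score"
```

In other words, if the leaked numbers hold, the agent completes roughly half as many OSWorld tasks as a human does.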

Operator appears to struggle with tasks that are typically easy for humans. In tests requiring it to sign up for a cloud provider and launch a virtual machine, Operator succeeded 60% of the time. It managed to create a Bitcoin wallet only 10% of the time, according to the leaked benchmarks.

OpenAI is entering the AI agent space at a time when competitors like Anthropic and Google are also advancing in this area. Analytics firm MarketsandMarkets projects that the market for AI agents could reach $47.1 billion by 2030. Although AI agents remain at a primitive stage of development, some experts have raised concerns about their safety, especially if the technology improves rapidly.

One leaked chart indicates that Operator performs well in certain safety evaluations, particularly tests of whether it can be made to engage in illicit activities or search for sensitive personal data. Safety testing has reportedly contributed to Operator’s lengthy development cycle. OpenAI co-founder Wojciech Zaremba criticized Anthropic’s recent agent release for lacking safety measures, and suggested that OpenAI would face backlash if it made a similar release.

AI researchers and former staff have criticized OpenAI for allegedly prioritizing the rapid productization of its technology over safety.

Image credit: Tibor Blaho