Text-to-video generation has changed dramatically in just a few years, growing into a tool with genuinely futuristic capabilities. Individuals create content for their personal pages, influencers use it for self-promotion, and companies apply it to everything from advertising and educational materials to virtual training and film production. Most text-to-video systems are built on diffusion transformers, the current state of the art in video generation; this architecture underpins services such as Luma and Kling. That status, however, was only cemented in 2024, when the first diffusion transformers for video gained market adoption.

The turning point came when OpenAI unveiled SORA, showcasing strikingly realistic shots that were almost indistinguishable from real footage. OpenAI showed that a diffusion transformer could successfully generate video content. This validated the potential of the technology and set off an industry-wide trend: by now, approximately 90% of current models are based on diffusion transformers.

Diffusion is a fascinating process that deserves a closer look. Let's examine how diffusion works, which of its challenges transformers address, and why they play such a significant role in text-to-video generation.

What is the diffusion process in GenAI?

At the heart of text-to-video and text-to-image generation lies the process of diffusion. Inspired by the physical phenomenon where substances gradually mix — like ink diffusing in water — diffusion models in machine learning involve a two-step process: adding noise to data and then learning to remove it.

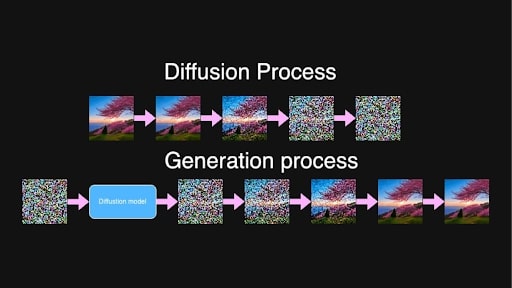

During training, the model takes images or sequences of video frames and progressively adds noise over many steps until the original content is no longer recognizable. In effect, it turns the input into pure noise.

Diffusion and Generation processes for “Beautiful blowing sakura tree placed on the hill during sunrise” prompt
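To make the noising step concrete, here is a minimal sketch assuming the widely used DDPM formulation with a linear variance schedule. The schedule values (1,000 steps, per-step variances from 0.0001 to 0.02) are common defaults chosen for illustration, not the settings of any particular video model, and the function names are our own. A convenient property of this formulation is that you can jump straight to any step t without simulating the steps before it.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances; these values are common DDPM defaults (assumed here)."""
    return [beta_start + (beta_end - beta_start) * i / (num_steps - 1) for i in range(num_steps)]

def alpha_bars(betas):
    """Cumulative product of (1 - beta_t): the fraction of the original signal left at step t."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def add_noise(x0, t, abar, rng):
    """Jump directly to step t: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps, eps ~ N(0, 1)."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(abar[t]) * x + math.sqrt(1.0 - abar[t]) * e for x, e in zip(x0, eps)]
    return xt, eps

abar = alpha_bars(linear_beta_schedule(1000))
x0 = [0.5, -0.2, 0.9, 0.1]  # stand-in for a few pixel (or latent) values
for t in (0, 250, 999):
    xt, _ = add_noise(x0, t, abar, random.Random(0))
    print(t, round(abar[t], 4), [round(v, 2) for v in xt])  # signal share falls toward 0
```

By the last step almost none of the original signal remains, which corresponds to the "pure noise" end of the process.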


When generating new content, the process runs in reverse. To make that possible, the model is trained to predict and remove noise one increment at a time: at each training step it sees a sample at a randomly chosen intermediate noise level (between some step t and the next, t+1) and learns to undo that increment. Over a long training process, the model observes every stage of the progression from pure noise to almost clean images, so it becomes able to identify and reduce noise at practically any level.
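A single training example can be sketched as follows, assuming the common DDPM-style "noise prediction" objective and the same linear schedule as a typical default. Most implementations noise the clean sample directly to a randomly chosen level t using the closed form of the forward process, then ask the network to recover the noise that was added. `zero_model` is a hypothetical placeholder for the real network.

```python
import math
import random

def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal fraction per step for an assumed linear beta schedule."""
    abar, prod = [], 1.0
    for i in range(num_steps):
        prod *= 1.0 - (beta_start + (beta_end - beta_start) * i / (num_steps - 1))
        abar.append(prod)
    return abar

def training_loss(model, x0, abar, rng):
    """One DDPM-style training example: pick a random step t, noise x0, ask the model for the noise."""
    t = rng.randrange(len(abar))                 # random intermediate noise level
    eps = [rng.gauss(0.0, 1.0) for _ in x0]      # the noise actually added
    xt = [math.sqrt(abar[t]) * x + math.sqrt(1.0 - abar[t]) * e for x, e in zip(x0, eps)]
    eps_pred = model(xt, t)                      # the network's guess of that noise
    return sum((p - e) ** 2 for p, e in zip(eps_pred, eps)) / len(x0)  # mean squared error

def zero_model(xt, t):
    """Hypothetical untrained placeholder: always predicts zero noise."""
    return [0.0] * len(xt)

abar = make_alpha_bars()
loss = training_loss(zero_model, [0.5, -0.2, 0.9, 0.1], abar, random.Random(0))
```

Training repeats this over many samples and random values of t, adjusting the network's weights to drive the loss down; that repetition is what lets one model handle every noise level.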

From random, pure noise, the model, guided by the input text, iteratively creates video frames that are coherent and match the textual description. High-quality, detailed video content is a result of this very gradual process.
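The generation loop can be sketched the same way. This is DDPM ancestral sampling under the same assumed linear schedule; production video systems typically use faster samplers and a learned network that receives a text embedding. To keep the loop checkable, `toy_oracle` is a hypothetical stand-in whose conditioning input is simply the clean target vector, so it can compute the exact noise and the loop should land on that target.

```python
import math
import random

def schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Assumed linear beta schedule, with alphas and cumulative alpha-bars."""
    betas = [beta_start + (beta_end - beta_start) * i / (num_steps - 1) for i in range(num_steps)]
    alphas = [1.0 - b for b in betas]
    abar, prod = [], 1.0
    for a in alphas:
        prod *= a
        abar.append(prod)
    return betas, alphas, abar

BETAS, ALPHAS, ABAR = schedule()

def sample(model, cond, size, rng):
    """DDPM ancestral sampling: start from pure noise and remove it one step at a time."""
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]  # pure noise
    for t in reversed(range(len(BETAS))):
        eps = model(x, t, cond)  # predicted noise, guided by the conditioning signal
        coef = BETAS[t] / math.sqrt(1.0 - ABAR[t])
        mean = [(xi - coef * ei) / math.sqrt(ALPHAS[t]) for xi, ei in zip(x, eps)]
        if t > 0:  # inject fresh noise on every step except the final one
            x = [m + math.sqrt(BETAS[t]) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x

def toy_oracle(x, t, cond):
    """Hypothetical stand-in for a trained, text-conditioned network. Here `cond` is the
    clean target itself, so the exact noise in x can be computed in closed form."""
    return [(xi - math.sqrt(ABAR[t]) * c) / math.sqrt(1.0 - ABAR[t]) for xi, c in zip(x, cond)]

result = sample(toy_oracle, cond=[0.5, -0.2, 0.9], size=3, rng=random.Random(0))
```

With a real model, `cond` would carry the encoded prompt, and the quality of the final frames depends on how well the network's noise predictions match the text at every step.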

Latent diffusion is what makes this computationally feasible. Instead of working directly with high-resolution images or videos, an encoder first compresses the data into a lower-dimensional latent space.

This significantly reduces the amount of data the model needs to process, speeding up generation with little loss in quality. After the diffusion process has refined the latent representation, a decoder transforms it back into full-resolution images or video.
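A quick back-of-the-envelope calculation shows why this matters. The compression factors below (8 times per spatial side, 4 times in time, 16 latent channels) are illustrative assumptions for a hypothetical video autoencoder; real autoencoders vary.

```python
import math

def num_values(frames, height, width, channels):
    """Total number of scalars in a video tensor."""
    return frames * height * width * channels

def latent_shape(frames, height, width, spatial=8, temporal=4, latent_channels=16):
    """Hypothetical video autoencoder: shrink time by `temporal`, each spatial side by `spatial`."""
    return (math.ceil(frames / temporal), height // spatial, width // spatial, latent_channels)

pixels = num_values(100, 512, 512, 3)              # 10 s at 10 fps, 512x512 RGB
latents = num_values(*latent_shape(100, 512, 512))  # same clip in the assumed latent space
compression = pixels / latents
```

Under these assumptions, a 10-second, 10 fps clip at 512×512 shrinks from 78,643,200 values to 1,638,400, a 48-fold reduction before the diffusion model even starts.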

The issue with video generation

Unlike a single image, a video requires objects and characters to remain stable throughout, without unexpected shifts or changes in appearance. We have all seen the wonders generative AI is capable of, but an occasional missing arm or garbled facial expression is still well within the norm. In video, however, the stakes are higher: consistency is paramount for the result to feel fluid.

So, if a character appears in the first frame wearing a specific outfit, that outfit must look identical in each subsequent frame. Any change in the character’s appearance, or any “morphing” of objects in the background, breaks the continuity and makes the video feel unnatural or even eerie.

Image provided by the author

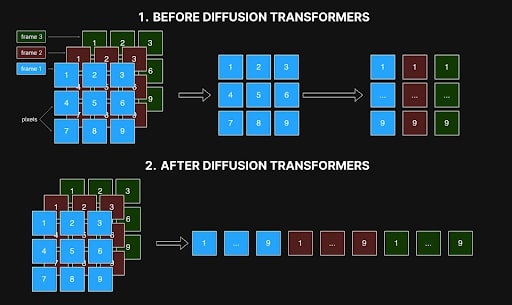

Early methods approached video generation by processing frames individually: each pixel in one frame referenced only its corresponding pixel in other frames. This frame-by-frame approach often produced inconsistencies, because it couldn't capture the spatial and temporal relationships between frames that are essential for smooth transitions and realistic motion. Artifacts such as shifting colors, fluctuating shapes, or misaligned features result from this lack of coherence and diminish the overall quality of the video.

Image provided by the author


The biggest obstacle to solving this was computational demand, and with it, cost. A 10-second video at 10 frames per second has 100 frames, and complexity does not grow linearly with that number. Keeping the frames coherent means processing them jointly, so every part of every frame has to be compared with every other part; the number of such comparisons grows with the square of the sequence length. One hundred times as many tokens therefore means roughly 100² = 10,000 times more comparison work than for a single frame, with correspondingly larger demands on memory, processing time, and computational resources, often exceeding practical limits. As you can imagine, the luxury of experimenting with this process was available to only a select few in the industry.
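The 10,000-fold figure follows directly from that quadratic growth. The sketch below assumes a hypothetical 1,024 tokens per frame; the ratio itself does not depend on that choice.

```python
def attention_pairs(num_tokens):
    """Full self-attention scores every token against every token: n * n pairs."""
    return num_tokens * num_tokens

fps, seconds = 10, 10
frames = fps * seconds            # 100 frames
tokens_per_frame = 1024           # hypothetical, e.g. a 32x32 grid of latent patches

single_frame = attention_pairs(tokens_per_frame)
whole_clip = attention_pairs(frames * tokens_per_frame)

attention_ratio = whole_clip // single_frame  # quadratic growth: 100 ** 2
per_token_ratio = frames                      # per-token layers (e.g. feed-forward) grow only linearly
print(f"attention: {attention_ratio:,}x, per-token layers: {per_token_ratio}x")
```

Note that only the all-pairs comparison step grows quadratically; parts of the network that process each token independently grow 100-fold, in line with the frame count.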

This is what made OpenAI’s release of SORA so significant: they demonstrated that diffusion transformers could indeed handle video generation despite the immense complexity of the task.

How diffusion transformers solved the self-consistency problem in video generation

The emergence of diffusion transformers tackled several problems at once: they enabled the generation of videos of arbitrary resolution and length while achieving high self-consistency. This is largely due to two properties: they can work with long sequences of arbitrary length, as long as those sequences fit into memory, and they rely on the self-attention mechanism.
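One way to see why sequence length, rather than a fixed frame size, is what matters: a transformer consumes a flat list of tokens, so a video of any duration or resolution can be cut into "spacetime patches" and fed in. OpenAI's SORA technical report describes this idea, but the patch sizes, the dummy input, and the function names below are our own illustration. The input is assumed to be a latent video stored as nested lists `[T][H][W][C]` whose dimensions are divisible by the patch sizes.

```python
def patchify(video, pt=2, ph=2, pw=2):
    """Cut a video [T][H][W][C] into non-overlapping spacetime patches and flatten
    each patch into one token. Assumes T, H, W are divisible by the patch sizes."""
    T, H, W = len(video), len(video[0]), len(video[0][0])
    tokens = []
    for t0 in range(0, T, pt):
        for y0 in range(0, H, ph):
            for x0 in range(0, W, pw):
                token = [c
                         for t in range(t0, t0 + pt)
                         for y in range(y0, y0 + ph)
                         for x in range(x0, x0 + pw)
                         for c in video[t][y][x]]
                tokens.append(token)
    return tokens

def make_video(T, H, W, C=4):
    """Dummy latent video filled with zeros; only its shape matters here."""
    return [[[[0.0] * C for _ in range(W)] for _ in range(H)] for _ in range(T)]

short_clip = patchify(make_video(T=4, H=8, W=8))     # (4/2) * (8/2) * (8/2) = 32 tokens
longer_clip = patchify(make_video(T=8, H=8, W=16))   # (8/2) * (8/2) * (16/2) = 128 tokens
```

Both clips become plain token sequences of the same token width; a longer or wider video simply yields more tokens, which is why memory, not architecture, becomes the limit.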

In artificial intelligence, self-attention is a mechanism that computes attention weights between elements in a sequence, determining how much each element should be influenced by others. It enables each element in a sequence to consider all other elements simultaneously and allows models to focus on relevant parts of the input data when generating output, capturing dependencies across both space and time.
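That definition maps onto a few lines of code. Below is a minimal single-head, scaled dot-product self-attention in plain Python, following the standard Transformer formulation; real models add multiple heads, learned projection matrices, and optimized tensor libraries, all omitted here. In a video diffusion transformer, the rows of `x` would be the tokens from all frames at once.

```python
import math

def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]

def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention over x (n tokens by d features)."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = 1.0 / math.sqrt(len(k[0]))
    # scores[i][j]: how strongly token i attends to token j, computed for every pair at once
    scores = [[scale * sum(a * b for a, b in zip(q_row, k_row)) for k_row in k] for q_row in q]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v), weights

identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = self_attention(tokens, identity, identity, identity)
```

Each row of `weights` says how much every other element influences a given element, and each output row is the correspondingly weighted mix of all value vectors.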

In video generation, this means that every pixel in every frame (in practice, every latent patch) can relate to every other pixel across all frames. This interconnectedness helps objects and characters remain consistent from the beginning of the video to the end: if a character appears in one frame, self-attention helps keep that character's appearance unchanged in all subsequent frames.

Earlier models did incorporate a form of self-attention within a convolutional network, but that structure limited their ability to achieve the consistency and coherence now possible with diffusion transformers.

With simultaneous spatio-temporal attention, however, a diffusion transformer can load data from different frames at once and analyze them as a single, unified sequence. As shown in the figure below, previous methods processed interactions within each frame and linked each pixel only with its corresponding position in other frames (Figure 1). This restricted view hindered their ability to capture the spatial and temporal relationships essential for smooth, realistic motion. With diffusion transformers, everything is processed jointly (Figure 2).

Spatio-temporal interaction in diffusion networks before and after transformers. Image provided by the author
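The difference between the two patterns can be expressed as which token pairs may interact in a single attention layer. This is a simplified model of the figure's two cases: the "factorized" rule (same frame, or same position in another frame) stands in for the earlier approach, and "joint" is full spatio-temporal attention. Tokens are assumed to be numbered frame by frame, and the function names are hypothetical.

```python
def can_attend(i, j, tokens_per_frame, mode):
    """Whether token i may attend to token j in one layer under the given pattern."""
    fi, pi = divmod(i, tokens_per_frame)  # frame index, position within the frame
    fj, pj = divmod(j, tokens_per_frame)
    if mode == "joint":
        return True                       # every token sees every token in every frame
    if mode == "factorized":
        return fi == fj or pi == pj       # same frame, or same position in another frame
    raise ValueError(f"unknown mode: {mode}")

def allowed_pairs(num_frames, tokens_per_frame, mode):
    """Count the (query, key) pairs that may interact."""
    n = num_frames * tokens_per_frame
    return sum(can_attend(i, j, tokens_per_frame, mode) for i in range(n) for j in range(n))

factorized = allowed_pairs(4, 16, "factorized")
joint = allowed_pairs(4, 16, "joint")
```

Under the factorized rule, a token in frame 0 can never directly see a neighboring position in frame 1, which is exactly the kind of link needed to track an object that moves between frames. Joint attention allows it, at the quadratic cost discussed earlier.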


This holistic processing maintains stable details across frames, ensuring that scenes do not morph unexpectedly and turn into an incoherent mess of a final product. Diffusion transformers can also handle sequences of arbitrary length and resolution, provided they fit into memory. With this advancement, the generation of longer videos is feasible without sacrificing consistency or quality, addressing challenges that previous convolution-based methods could not overcome.

The arrival of diffusion transformers reshaped text-to-video generation, enabling the production of high-quality, self-consistent videos of arbitrary length and resolution. Self-attention within transformers is a key component in addressing challenges such as maintaining frame consistency and handling complex spatial and temporal relationships. OpenAI's release of SORA demonstrated this capability and set a new standard for the industry: approximately 90% of advanced text-to-video systems are now based on diffusion transformers, with major players like Luma, Kling, and Runway Gen-3 leading the market.

Despite these breathtaking advances, diffusion transformers remain very resource-intensive: for a 100-frame clip, attending across all frames takes on the order of 10,000 times the comparison work of a single image, so training high-quality models is still a very costly undertaking. Nevertheless, the open-source community has taken significant steps to make this technology more accessible. Projects like Open-SORA and Open-SORA-Plan, as well as other initiatives such as Mira Video Generation, Cog, and Cog-2, have opened new possibilities for developers and researchers to experiment and innovate. Backed by companies and academic institutions, these open-source projects give hope for continued progress and greater accessibility in video generation, benefiting not only large corporations but also independent creators and enthusiasts keen to experiment. As with other community-driven efforts, this points toward a future in which video generation is democratized and this powerful technology is open to many more creatives to explore.