The Allen Institute for AI (Ai2) has released Molmo, a family of open-source multimodal models that challenges the dominance of proprietary AI systems. With strong image recognition and actionable insights, Molmo gives developers, researchers, and startups an advanced yet easy-to-use tool for building AI applications. The launch signals an important shift in the AI landscape, narrowing the gap between open-source and proprietary models and widening access to leading AI technology.

Molmo delivers an exceptional degree of image understanding, correctly reading a wide variety of visual data, from everyday objects to complex charts and menus. Unlike most AI models, Molmo goes beyond perception: it lets users interact with virtual and real environments through pointing and a range of spatial actions. This capability marks a breakthrough, paving the way for sophisticated AI agents, robotics, and many other applications that depend on a granular understanding of both visual and contextual data.

Efficiency and accessibility are central to Molmo’s development strategy. Molmo’s capabilities come from a dataset of fewer than one million images, in stark contrast to the billions of images processed by other models such as GPT-4V and Google’s Gemini. This approach makes Molmo highly efficient in its use of computational resources while yielding a model that is as powerful as the most effective proprietary systems, with fewer hallucinations and faster training.

Making Molmo fully open-source is part of Ai2’s larger strategic effort to democratize AI development. By providing access to Molmo’s language and vision training data, model weights, and source code, Ai2 enables a diverse array of users, from startups to academic laboratories, to innovate and advance AI technology without heavy investment or vast computing power.

Matt Deitke, Researcher at the Allen Institute for AI, said, “Molmo is an incredible AI model with exceptional visual understanding, which pushes the frontier of AI development by introducing a paradigm for AI to interact with the world through pointing. The model’s performance is driven by a remarkably high-quality curated dataset to teach AI to understand images through text. The training is so much faster, cheaper, and simpler than what’s done today, such that the open release of how it is built will empower the entire AI community, from startups to academic labs, to work at the frontier of AI development.”

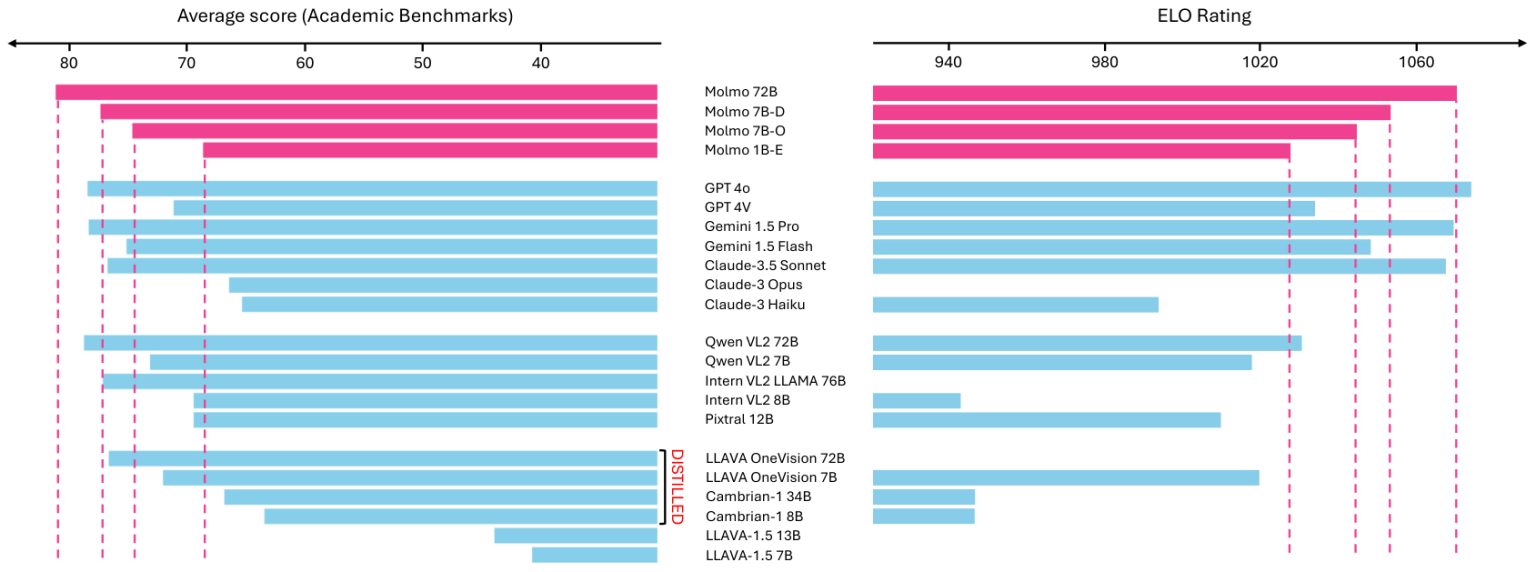

According to internal evaluations, Molmo’s largest model, with 72 billion parameters, surpassed OpenAI’s GPT-4V and other leading competitors on several benchmarks. The smallest Molmo model, with only one billion parameters, is small enough to run on a mobile device while outperforming models with ten times as many parameters. The models are publicly available to explore and try.