Introducing Google’s Gemma 2 2B, a compact yet powerful AI model with 2 billion parameters. Despite its size, it outperforms all GPT-3.5 models on the LMSYS Chatbot Arena leaderboard.

In this article, we’ll dive into what makes Gemma 2 2B stand out, from its impressive features to its versatile deployment options. We’ll see how it stacks up against larger models and explore its role in Google’s latest AI advancements, including the new ShieldGemma and Gemma Scope tools.

Gemma 2 2B: Everything you need to know about Google’s new lightweight yet powerful model

Google Gemma 2 2B is a compact AI language model designed to handle tasks such as chatting, generating text, and answering questions, all with just 2 billion parameters. Despite its small size, it performs well across these tasks. Here is how.

Gemma 2 2B is built with 2 billion parameters and trained using distillation, a technique in which a smaller model learns from the outputs of a larger one. This compresses much of the larger model’s knowledge into a far more efficient package. As a result, it performs well in conversational AI, where it surpasses some larger models.
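To make the idea of distillation concrete, here is a minimal sketch of a common textbook formulation: the student is trained to match the teacher's temperature-softened probability distribution by minimizing a KL divergence. The article does not say which objective Google used, so the loss, the temperature value, and the toy logits below are all assumptions for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw logits to probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical logits over a 4-token vocabulary at one position.
teacher = [4.0, 1.0, 0.5, -2.0]
student = [2.5, 1.5, 0.0, -1.0]

print(f"loss with a mismatched student: {distillation_loss(teacher, student):.4f}")
print(f"loss at a perfect match:        {distillation_loss(teacher, teacher):.4f}")
```

The loss shrinks toward zero as the student's distribution approaches the teacher's, which is the sense in which the smaller model "learns from" the larger one.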

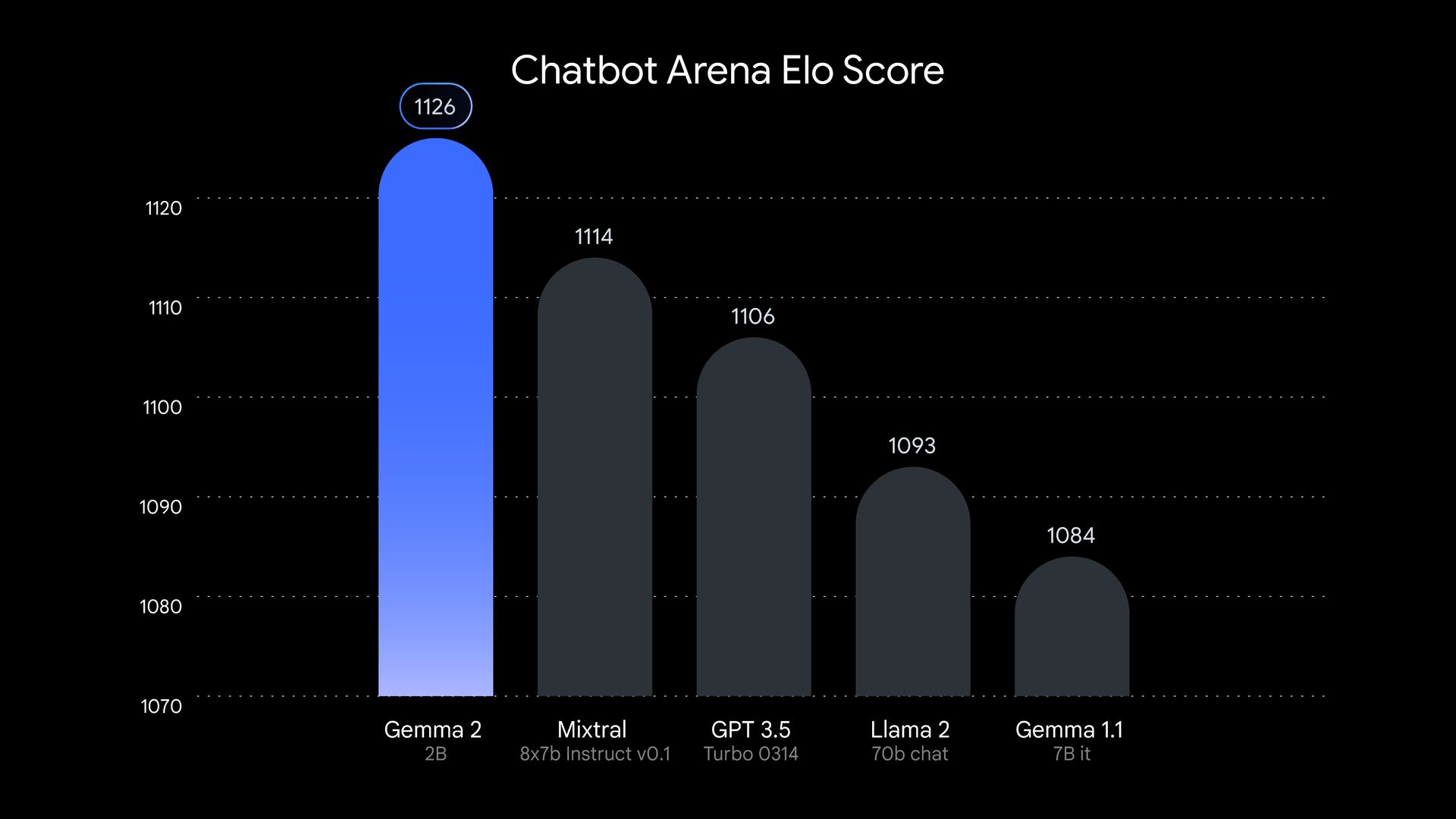

On the LMSYS Chatbot Arena leaderboard, Gemma 2 2B has outperformed all GPT-3.5 models, showcasing its strength in conversational tasks. It also handles text generation, question answering, summarization, and more.

Gemma 2 2B is designed for flexible deployment across various hardware configurations:

- Edge devices: Suitable for small, portable devices with limited computational power.

- Laptops and PCs: Can run efficiently on consumer-grade hardware, making it accessible to a wide range of users.

- Cloud platforms: Optimized for robust cloud infrastructures like Vertex AI and Google Kubernetes Engine (GKE).

The NVIDIA TensorRT-LLM library further optimizes the model for fast, efficient inference, whether in data centers, on local workstations, or on edge devices. Gemma 2 2B also integrates with frameworks and libraries such as Keras, JAX, Hugging Face, NVIDIA NeMo, Ollama, Gemma.cpp, and soon, MediaPipe.

Gemma 2 2B is available under commercially friendly terms, making it suitable for both research and commercial applications. It is also small enough to run on the free tier of T4 GPUs in Google Colab, which makes it easy for developers to experiment and innovate.
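A quick back-of-the-envelope calculation shows why a model of this size fits on a T4. The sketch below uses the 2 billion parameter figure quoted in this article and assumes a T4 with 16 GB of memory, which the article does not state. It counts weights only; activations and the attention cache need extra room on top.

```python
def weight_memory_gib(num_params, bytes_per_param):
    """Memory needed to hold the weights alone, in GiB."""
    return num_params * bytes_per_param / 2**30


NUM_PARAMS = 2_000_000_000  # parameter count as quoted in this article
T4_MEMORY_GIB = 16          # assumption: T4 memory size, used as a rough ceiling

for name, nbytes in [("float32", 4), ("16-bit float", 2), ("int8", 1)]:
    needed = weight_memory_gib(NUM_PARAMS, nbytes)
    fits = needed < T4_MEMORY_GIB
    print(f"{name:>12}: {needed:5.2f} GiB of weights, fits on T4: {fits}")
```

At 16-bit precision the weights come to roughly 3.7 GiB, which leaves most of the assumed 16 GB free for everything else.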

Where to use Gemma 2 2B?

Gemma 2 2B is versatile, with applications ranging from:

- Conversational agents: Building chatbots and virtual assistants.

- Content creation: Generating human-like text for various purposes.

- Customer support: Automating responses and assisting with inquiries.

- Educational tools: Providing explanations and answers in educational settings.

Gemma 2 2B stands out as a small yet powerful model, balancing efficiency, performance, and accessibility for everyday tasks.

How to use Gemma 2 2B

To start using Gemma 2 2B, download its model weights from a hosting platform such as Kaggle, Hugging Face, or Vertex AI Model Garden.

These platforms provide easy access to the model, allowing you to integrate it into your projects. Next, set up your environment. Gemma 2 2B runs on a range of hardware setups and is optimized with the NVIDIA TensorRT-LLM library for NVIDIA hardware such as RTX GPUs and Jetson modules.

It’s time to integrate the model. Gemma 2 2B supports multiple frameworks. Choose a framework that suits your needs and load the model weights into your application. This step allows you to use the model’s capabilities, such as generating text, answering questions, or engaging in conversations.
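As one possible illustration of this step, the sketch below loads the model through Hugging Face Transformers and generates a reply. It assumes the `torch`, `transformers`, and `accelerate` packages are installed, that the instruction-tuned checkpoint is published under the ID `google/gemma-2-2b-it`, and that you have accepted the model's license on Hugging Face and are logged in. The helper names `build_messages` and `generate_reply` are made up for this example.

```python
def build_messages(prompt):
    """Wrap a user prompt in the message format that chat templates expect."""
    return [{"role": "user", "content": prompt}]


def generate_reply(prompt, model_id="google/gemma-2-2b-it", max_new_tokens=128):
    """Load the model and return its reply to a single user prompt."""
    # Imported lazily so build_messages works without these packages installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16,  # 16-bit weights to reduce memory use
        device_map="auto",           # needs accelerate; picks GPU if available
    )

    # Format the conversation with the model's own chat template.
    inputs = tokenizer.apply_chat_template(
        build_messages(prompt),
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens so only the newly generated text is returned.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)
```

Calling `generate_reply("Summarize what a sparse autoencoder does.")` downloads the weights on first use, so expect the first call to take a while. In a real application you would load the tokenizer and model once and reuse them across requests rather than reloading on every call.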

Now, you can deploy Gemma 2 2B in various environments, depending on your requirements:

- Local Workstations: For development and testing.

- Cloud and Data Centers: For high-scale deployments.

- Edge Devices: For real-time applications on low-power hardware.

The model’s flexibility allows it to be used in a variety of scenarios, from simple desktop applications to complex cloud-based systems. Gemma 2 2B’s open nature makes it easy to experiment with and customize. You can fine-tune it on specific datasets to improve its performance in particular domains or applications.

Gemma 2 2B is not the only AI announcement by Google

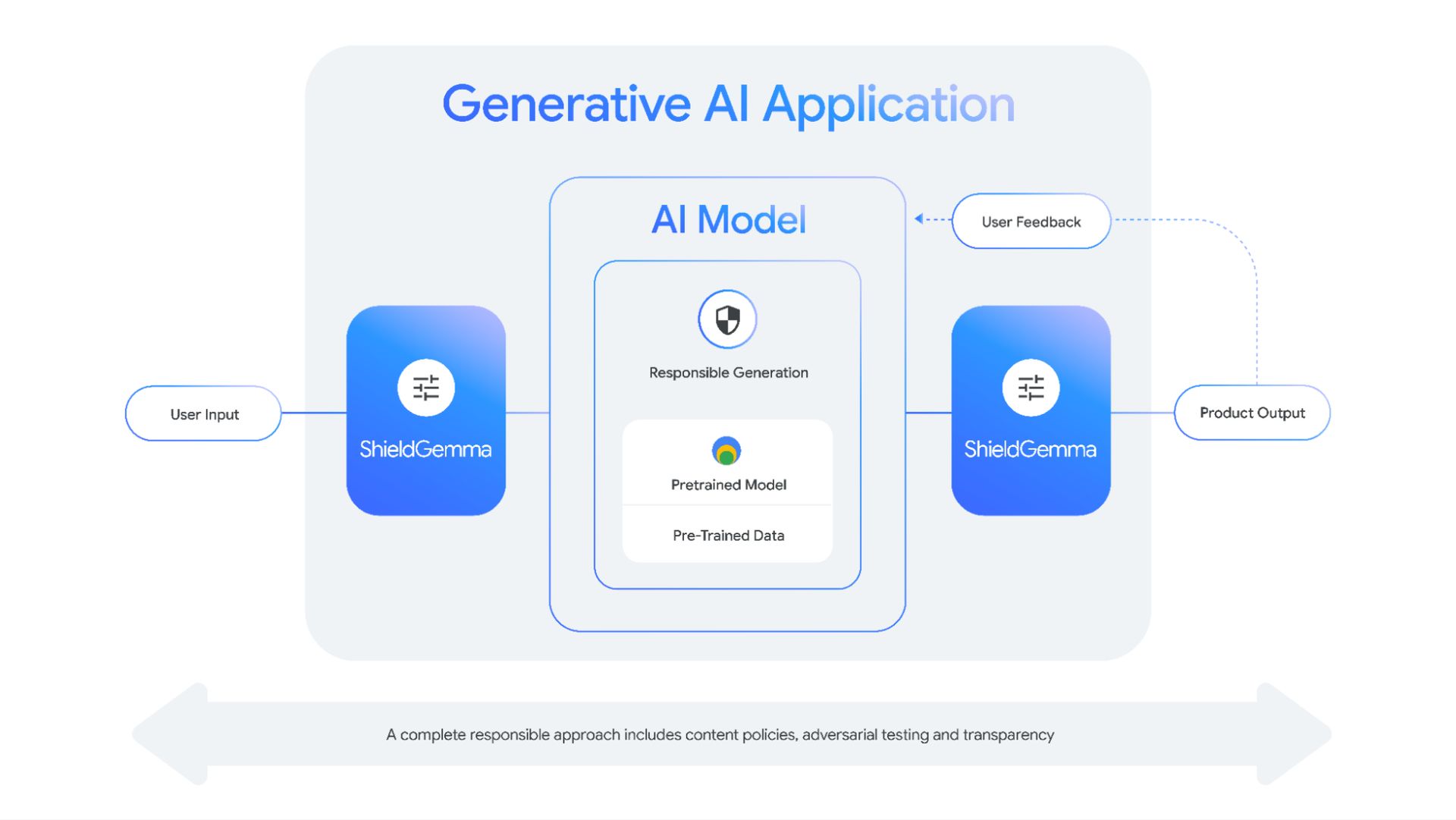

In addition to the release of Gemma 2 2B, Google has unveiled two other significant AI innovations: ShieldGemma and Gemma Scope. These tools enhance the responsible and transparent use of AI, providing a comprehensive suite for safer and more interpretable AI applications.

ShieldGemma

ShieldGemma is a suite of safety classifiers designed to protect users from harmful content, such as hate speech, harassment, sexually explicit material, and dangerous content. Built on the Gemma 2 foundation, it offers various model sizes for different applications, ensuring flexible and efficient content moderation. ShieldGemma’s open nature encourages collaboration and helps set industry safety standards.
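One common way to get a score out of a generative safety classifier is to ask it whether a piece of text violates a policy and then compare the model's logits for a "Yes" answer and a "No" answer. The sketch below shows only that final scoring step and assumes this pattern applies; it does not call ShieldGemma, and the logit values and the 0.5 threshold are hypothetical.

```python
import math


def violation_probability(yes_logit, no_logit):
    """Softmax over just the 'Yes' and 'No' logits; returns P(Yes)."""
    peak = max(yes_logit, no_logit)  # subtract the max for numerical stability
    yes = math.exp(yes_logit - peak)
    no = math.exp(no_logit - peak)
    return yes / (yes + no)


def should_block(yes_logit, no_logit, threshold=0.5):
    """Flag content when the violation probability reaches the threshold."""
    return violation_probability(yes_logit, no_logit) >= threshold


# Hypothetical logits for two inputs: one clearly flagged, one clearly safe.
print(should_block(yes_logit=3.0, no_logit=-1.0))
print(should_block(yes_logit=-2.0, no_logit=2.0))
```

Raising the threshold trades missed violations for fewer false alarms, so the right value depends on the application.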

Gemma Scope

Gemma Scope provides deep insights into the decision-making processes of Gemma 2 models using open sparse autoencoders (SAEs). This tool helps researchers and developers understand how AI models process information and make predictions. With over 400 SAEs available, Gemma Scope offers a detailed view of AI behavior, promoting accountability and reliability in AI systems.
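For readers unfamiliar with sparse autoencoders, the following toy sketch shows the basic forward pass: a model activation is encoded into a wider vector in which only a few features are non-zero, then decoded back into an approximation of the original. The JumpReLU activation is used here on the assumption that it matches the Gemma Scope release; the dimensions, weights, and thresholds are invented for illustration and are far smaller than anything real.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def jump_relu(values, thresholds):
    """Keep a value only where it exceeds its threshold; zero it otherwise."""
    return [v if v > t else 0.0 for v, t in zip(values, thresholds)]


def sae_forward(x, w_enc, b_enc, thresholds, w_dec, b_dec):
    """Encode an activation into sparse features, then reconstruct it."""
    pre = [a + b for a, b in zip(matvec(w_enc, x), b_enc)]
    features = jump_relu(pre, thresholds)
    recon = [a + b for a, b in zip(matvec(w_dec, features), b_dec)]
    return features, recon


# Toy setup: a 2-dimensional activation and 4 candidate features.
w_enc = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
b_enc = [0.0, 0.0, 0.0, 0.0]
thresholds = [0.1, 0.1, 0.1, 0.1]
w_dec = [[1.0, 0.0, -1.0, 0.0], [0.0, 1.0, 0.0, -1.0]]
b_dec = [0.0, 0.0]

features, recon = sae_forward([0.8, -0.3], w_enc, b_enc, thresholds, w_dec, b_dec)
print("active features:", [i for i, f in enumerate(features) if f != 0.0])
print("reconstruction: ", recon)
```

Only two of the four features fire for this input, and inspecting which features activate on which inputs is what makes the model's internal representation easier to interpret.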

Together, Gemma 2 2B, ShieldGemma, and Gemma Scope create a comprehensive ecosystem that balances performance, safety, and transparency. These tools equip the AI community with the resources needed to build innovative, responsible, and inclusive AI applications.