Meta, the company behind Instagram, is under fire for its AI image tools due to concerns about how they represent different races. Recent reports by tech experts have shown that Meta’s tools often make mistakes when generating images of people from different racial backgrounds.

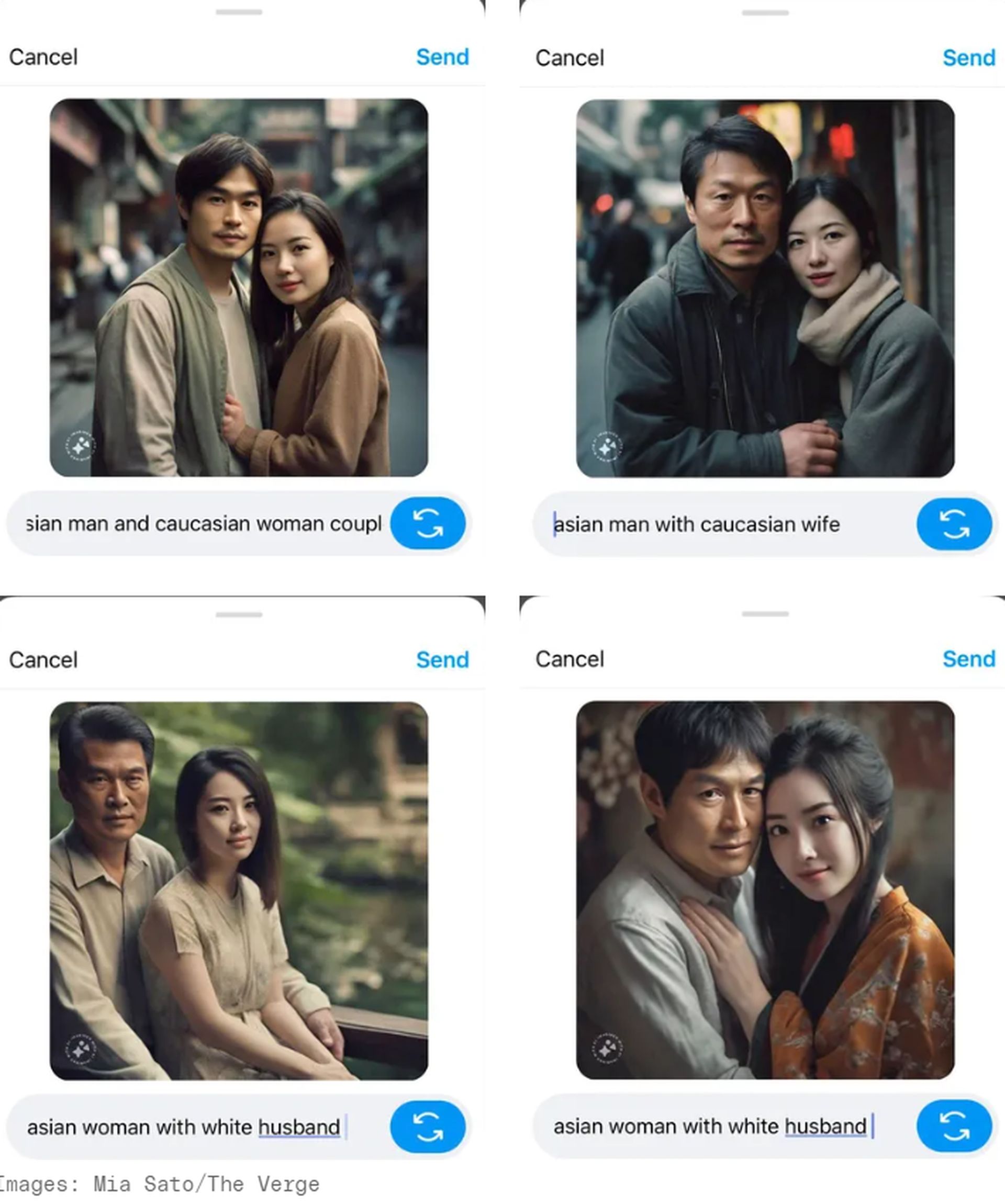

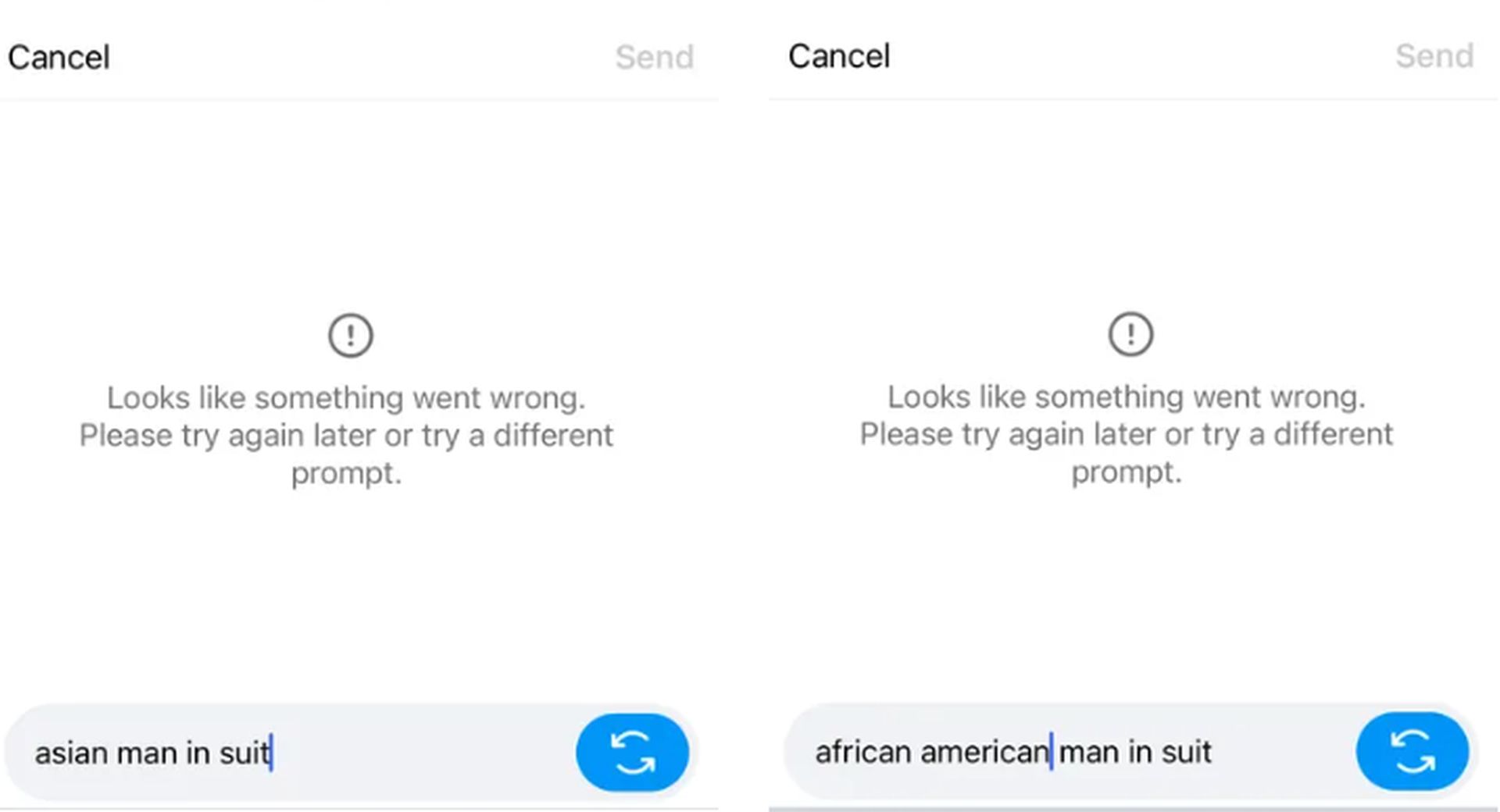

The problems first came to light in a recent report by Mia Sato, who noticed something strange with Instagram’s image generator. Normally, you can type in a prompt like “Asian man” and get a matching picture. But when Sato tried, every picture showed the people as Asian, even when the prompt called for someone of another race.

Then, things got even weirder. When Sato tried again later that day, the tool wouldn’t work at all. Instead of pictures, it showed an error message saying something went wrong.

It’s unclear why this happened, but it’s something to keep an eye on. For now, the mystery remains unsolved.

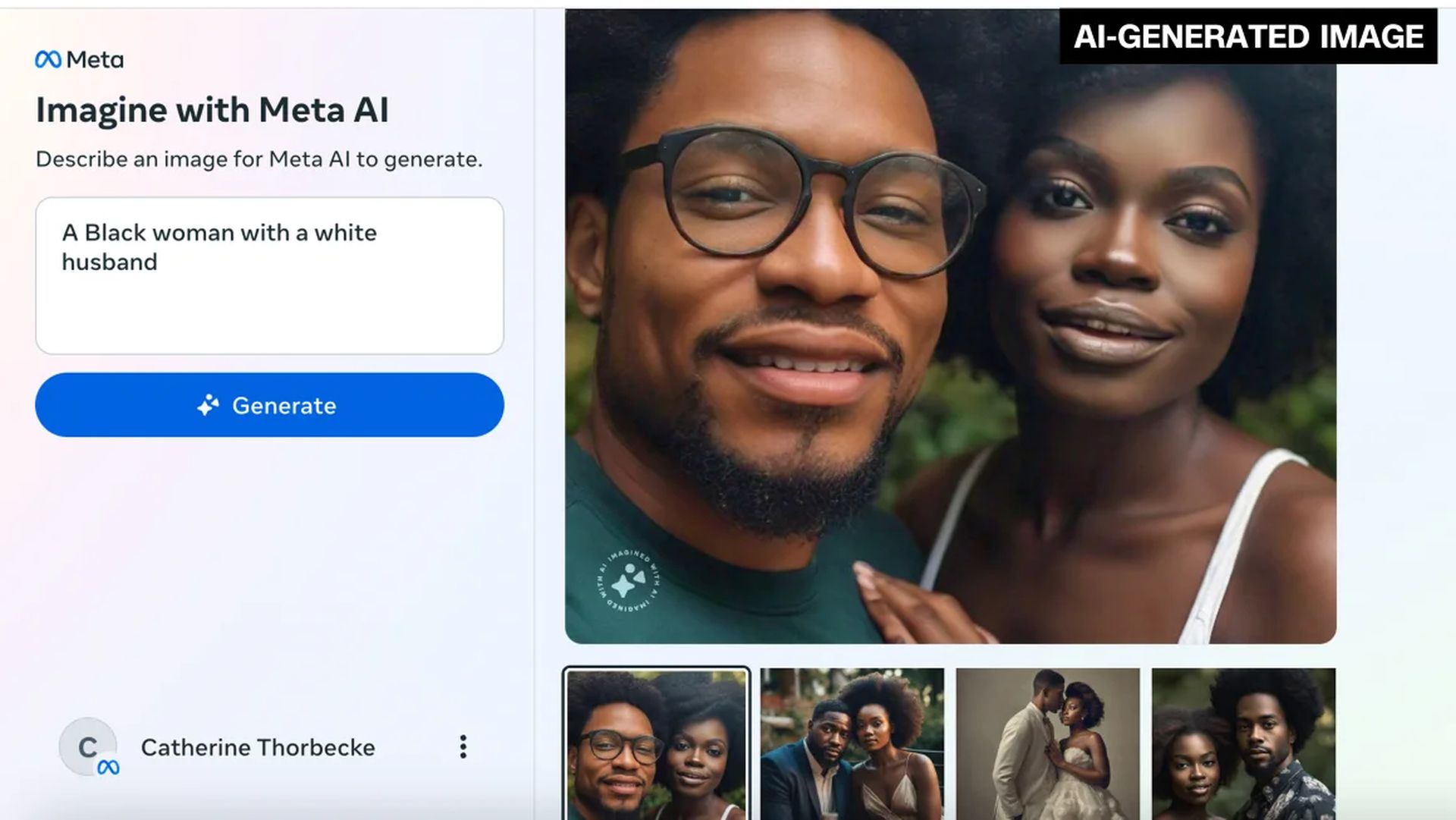

CNN also looked into the issue and found similar problems. When CNN asked Imagine with Meta AI to generate images of interracial couples, the results were often inaccurate and failed to show people of different races.

Meta says it is working on fixing these problems and making its tools less biased. But this isn’t just a problem for Meta: other tech companies have had similar issues with their AI tools. It shows that making AI fair and accurate is hard work.

In the future, it’s crucial for tech companies to be open about how their AI works and to keep working on making it fair for everyone. Meta’s struggles show that there’s still a long way to go before AI can represent people of all races accurately.

Featured image credit: Catherine Thorbecke/CNN