Do you think machines need to understand us better? That’s where the Empathic Voice Interface (EVI) comes in. With it, Hume AI is developing a new way to talk to computers.

Just last week, Hume AI got a big boost when it raised $50 million in funding. This means they have a lot of money to make EVI even better and bring it to more people. But what exactly is EVI, and why is it so special? Let’s find out!

What is EVI?

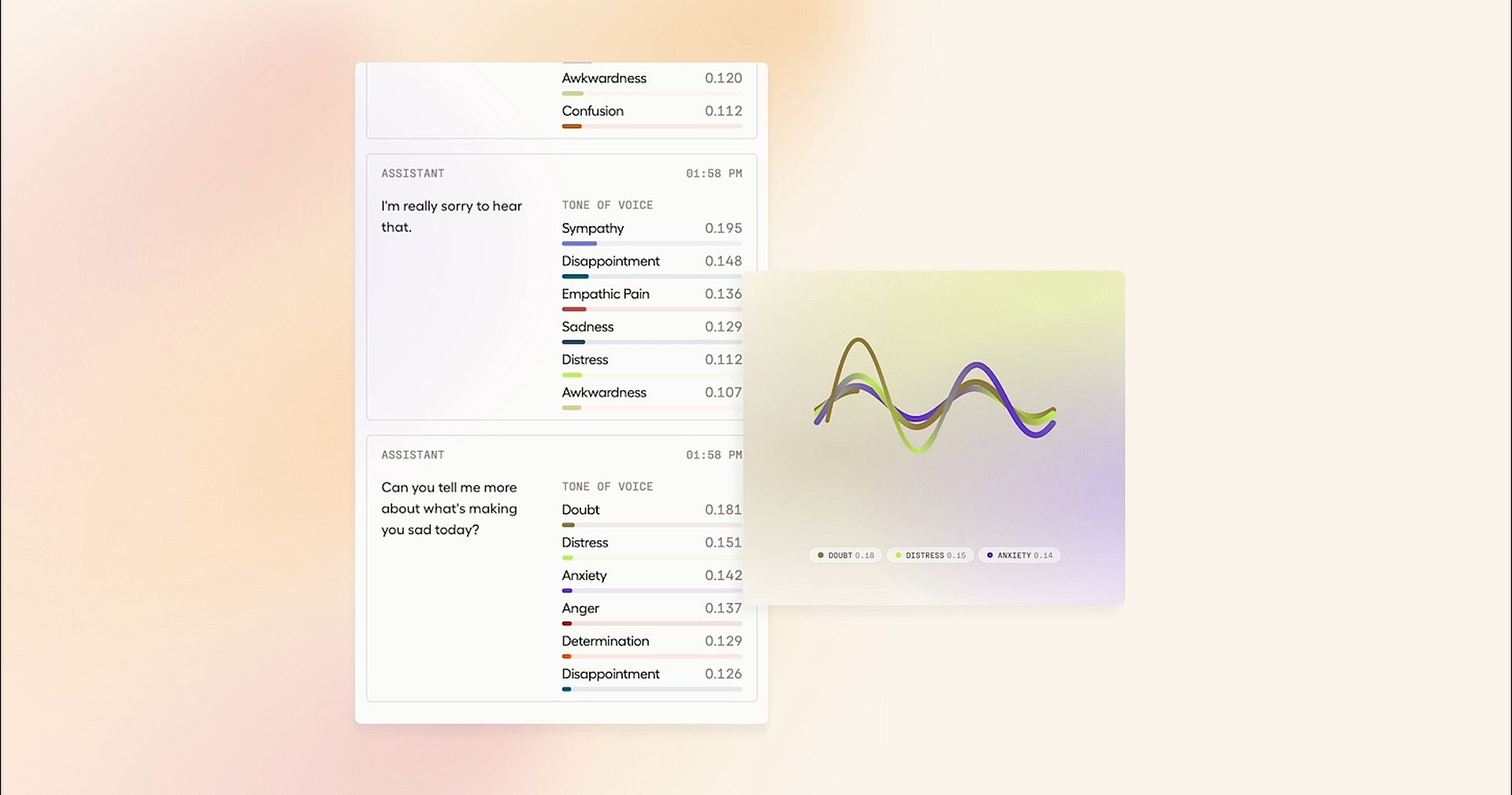

Empathic Voice Interface (EVI) is an API made by Hume AI. Its special skill is picking up on how people feel by listening to their voices. Whether you sound happy, sad, or anything in between, EVI is designed to notice, and it responds with words that match your feelings, like a friend would.

Meet Hume’s Empathic Voice Interface (EVI), the first conversational AI with emotional intelligence. pic.twitter.com/aAK5lIsegl

— Hume (@hume_ai) March 27, 2024

Designed to streamline communication and improve the user experience, EVI combines transcription, large language models (LLMs), and text-to-speech (TTS) behind a single API. Here are its key features:

- End-of-turn detection: No more awkward pauses! It can tell when you’ve finished speaking, so there’s no overlap.

- Interruptibility: Just like a good listener, it stops talking when you interrupt and starts listening to you.

- Responsive to expression: It can pick up on how you’re feeling by the way you talk, not just what you say.

- Expressive text-to-speech (TTS): When it responds, it sounds natural and expressive, just like a real person.

- Alignment with your application: It learns from your interactions to make sure it’s giving you the best experience possible.
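To make the first two features more concrete, here is a minimal sketch of turn-taking logic. It is not Hume AI's implementation: the `TurnTracker` class, the energy threshold, and the frame count are all hypothetical, and it assumes audio arrives as a stream of per-frame energy values.

```python
from dataclasses import dataclass


@dataclass
class TurnTracker:
    """Toy turn-taking tracker (hypothetical, not Hume AI's API)."""

    silence_threshold: float = 0.02  # assumed: energy below this counts as silence
    end_of_turn_frames: int = 5      # assumed: silent frames needed to end a turn
    silent_run: int = 0
    user_speaking: bool = False

    def update(self, frame_energy: float, assistant_speaking: bool) -> str:
        """Consume one audio frame and return the resulting event."""
        if frame_energy >= self.silence_threshold:
            # The user is making sound: reset the silence counter.
            self.silent_run = 0
            self.user_speaking = True
            # Speech while the assistant is talking counts as an interruption.
            return "interrupt" if assistant_speaking else "listening"
        if self.user_speaking:
            # Silence after speech: count it toward the end of the turn.
            self.silent_run += 1
            if self.silent_run >= self.end_of_turn_frames:
                self.user_speaking = False
                self.silent_run = 0
                return "end_of_turn"
            return "listening"
        return "idle"
```

An application would stop its own audio playback on `"interrupt"` and start generating a reply on `"end_of_turn"`. A production system would likely rely on more than raw energy, such as the content and prosody of the speech.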

Because EVI can tell when you’re done speaking and yields when you interrupt it, chatting with it feels closer to talking with a real person. That opens up plenty of uses: it can make customer service more pleasant, support people when they’re feeling down, or be a friendly companion for those who need someone to talk to.

As Hume AI gets ready to share EVI with everyone, it’s exciting to think about how it might change the way we use computers. With EVI, technology isn’t just smart—it’s starting to understand us better, making our interactions feel more personal and caring, thanks to empathic large language models (eLLM).

What is an eLLM?

An eLLM, or empathic large language model, is a sophisticated computational framework that integrates advanced natural language processing techniques with emotion recognition algorithms. Unlike traditional language models, eLLMs are designed to detect and interpret emotional cues within human language data, enabling AI systems to generate responses that are not only syntactically and semantically coherent but also emotionally attuned to the user’s input.

By leveraging sentiment analysis modules, emotion recognition algorithms, and contextual understanding mechanisms, eLLMs empower AI technologies to engage users in more empathetic and responsive dialogue. This capability enhances human-computer interaction, fostering deeper levels of engagement and rapport.
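One simple way to picture this idea is to pass detected emotion scores to a language model as part of its prompt. The sketch below is only an illustration under that assumption: the emotion labels, the `TONE_HINTS` table, and both functions are hypothetical and do not reflect how Hume AI's eLLM works internally.

```python
# Hypothetical mapping from a detected emotion to a tone instruction.
TONE_HINTS = {
    "sadness": "Respond gently and offer reassurance.",
    "joy": "Match the user's upbeat energy.",
    "anger": "Stay calm, acknowledge the frustration, and be concise.",
}


def dominant_emotion(scores: dict[str, float]) -> str:
    """Return the emotion label with the highest score."""
    return max(scores, key=scores.get)


def build_prompt(user_text: str, scores: dict[str, float]) -> str:
    """Prefix the user's words with a tone hint derived from emotion scores."""
    emotion = dominant_emotion(scores)
    # Fall back to a neutral instruction for emotions without a specific hint.
    hint = TONE_HINTS.get(emotion, "Respond in a neutral, friendly tone.")
    return f"[User sounds {emotion}] {hint}\nUser: {user_text}"


if __name__ == "__main__":
    print(build_prompt("I lost my keys again.", {"joy": 0.1, "sadness": 0.7}))
```

The resulting string would then be sent to whatever language model the application uses, so the reply can reflect both what was said and how it was said.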

How to use EVI?

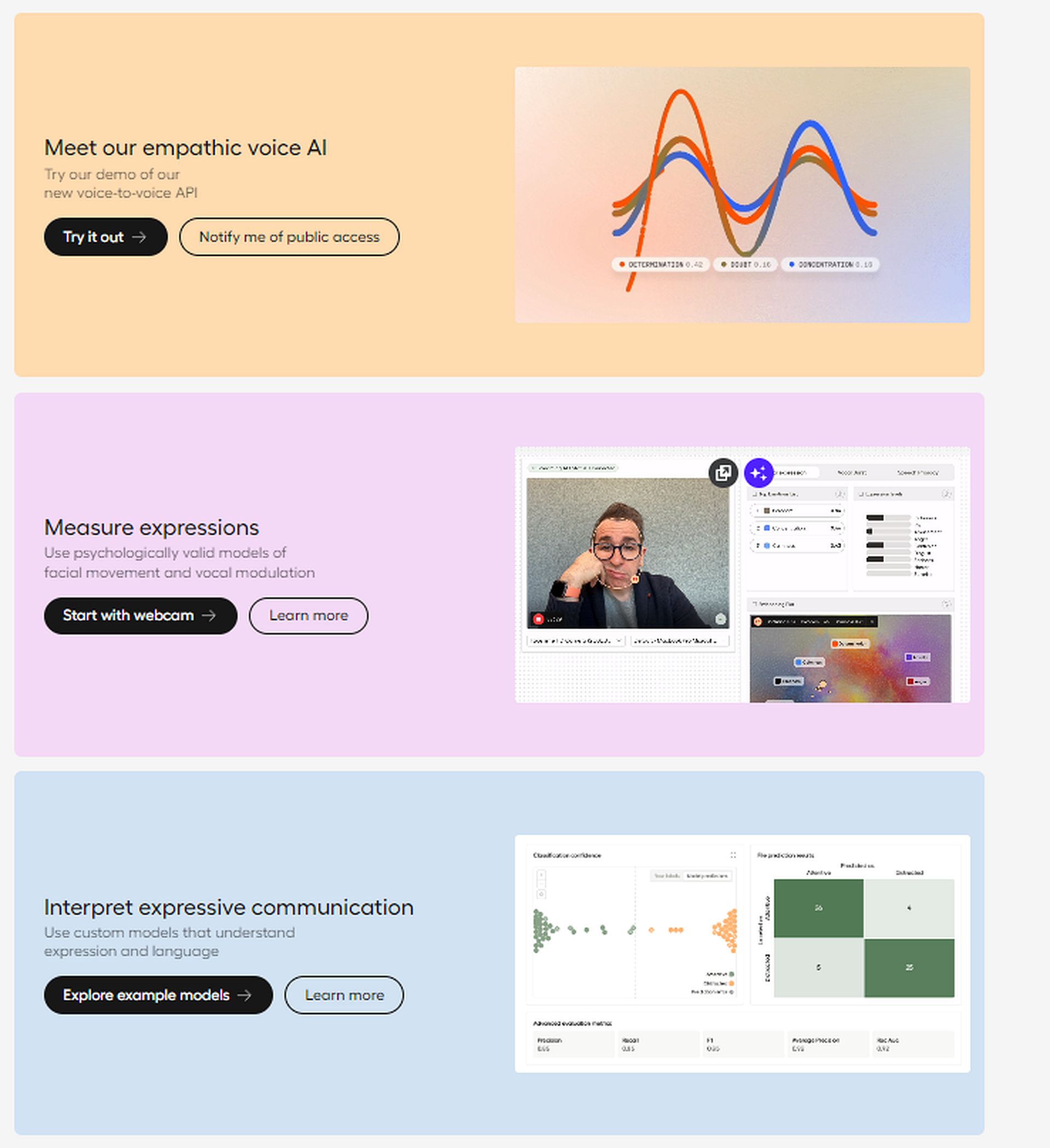

EVI is not publicly available yet. However, you can explore the playground, where you can live-stream through your webcam, upload multimedia files, or run live text analysis.

- Visit Hume AI and log in or sign up.

- Choose which EVI option you want to try.

- Follow the on-screen instructions.

That’s it! If you want to get the most out of EVI, see Hume AI’s official guide.

Featured image credit: Hume AI