With its new 1-bit LLM technology, Microsoft may have just cracked the code for creating powerful AI behind chatbots and language tools that can fit in your pocket, run lightning fast, and help save the planet.

Okay, ditch the planet part, but it is still a really big deal!

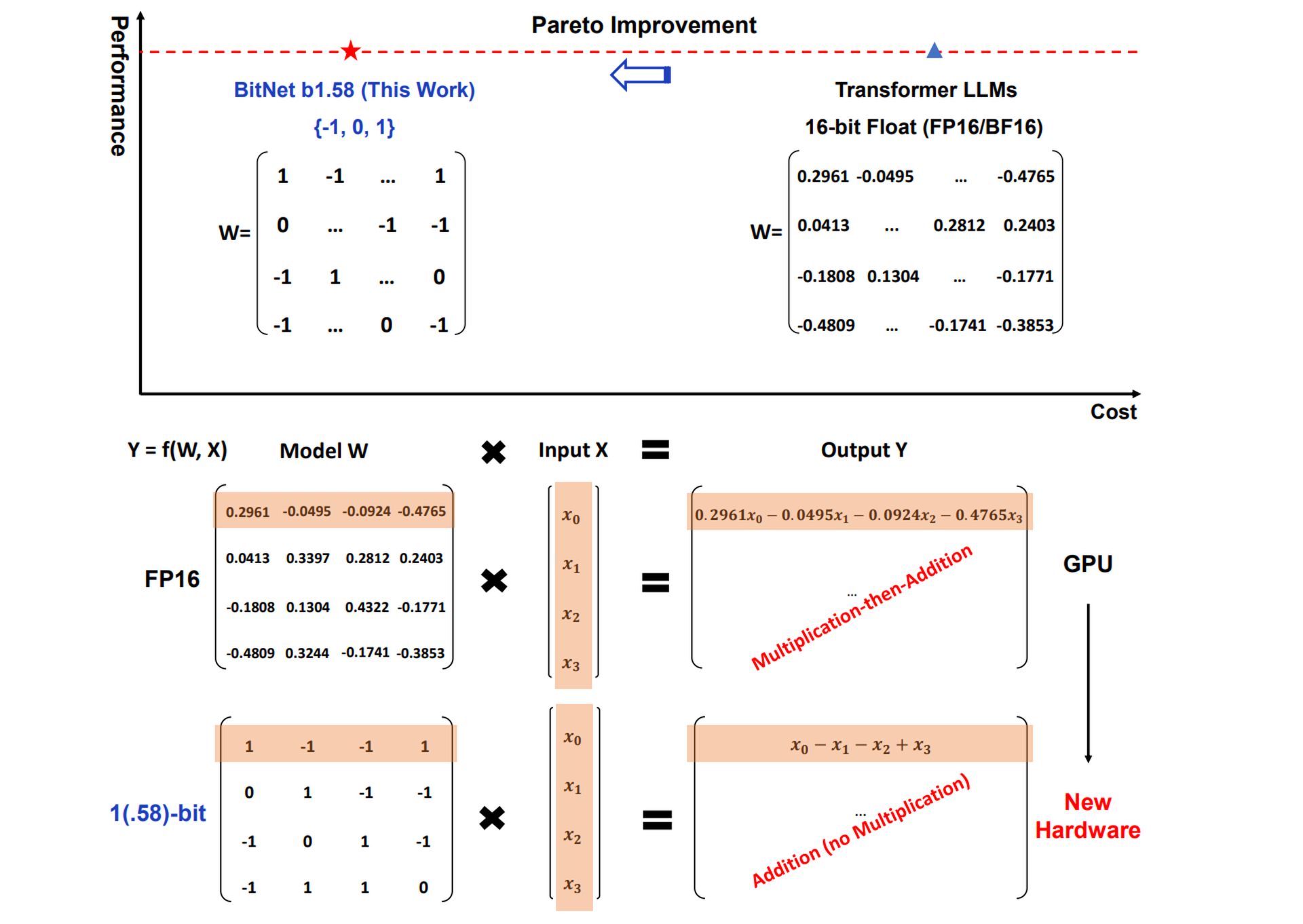

Traditional LLMs, the powerful AI models behind tools like ChatGPT and Gemini, typically use 16-bit or even 32-bit floating-point numbers to represent the model’s parameters or weights. These weights determine how the model processes information. Microsoft’s 1-bit LLM takes a radically different approach by quantizing (reducing the precision of) these weights down to just 1.58 bits.

With a 1-bit LLM, each weight can only take on one of three values: -1, 0, or 1.
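Why "1.58"? A weight restricted to three possible values carries log2(3) ≈ 1.585 bits of information. The back-of-the-envelope calculation below uses that figure to compare weight storage for a hypothetical 7-billion-parameter model in three formats: FP16, an ideal ternary encoding, and a simple 2-bit packing. It counts weights only and ignores embeddings, activations and any other runtime overhead, so treat the numbers as rough.

```python
import math

# Information content of one ternary weight (-1, 0, or +1)
BITS_PER_TERNARY_WEIGHT = math.log2(3)  # about 1.585

def weight_storage_gb(num_params: int, bits_per_weight: float) -> float:
    """Storage needed for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

# Hypothetical 7B-parameter model, weights only
NUM_PARAMS = 7_000_000_000

fp16_gb = weight_storage_gb(NUM_PARAMS, 16)
ternary_ideal_gb = weight_storage_gb(NUM_PARAMS, BITS_PER_TERNARY_WEIGHT)
ternary_2bit_gb = weight_storage_gb(NUM_PARAMS, 2)  # simple packing: 2 bits per weight

print(f"bits per ternary weight: {BITS_PER_TERNARY_WEIGHT:.3f}")
print(f"FP16 weights:            {fp16_gb:.2f} GB")
print(f"ternary (ideal):         {ternary_ideal_gb:.2f} GB")
print(f"ternary (2-bit packing): {ternary_2bit_gb:.2f} GB")
```

It reports 14 GB for FP16 weights versus roughly 1.39 GB for an ideal ternary encoding and 1.75 GB with 2-bit packing. That is about 8 to 10 times smaller.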

This might seem drastically limiting, but it leads to remarkable advantages.

What’s the fuss about 1-Bit LLMs?

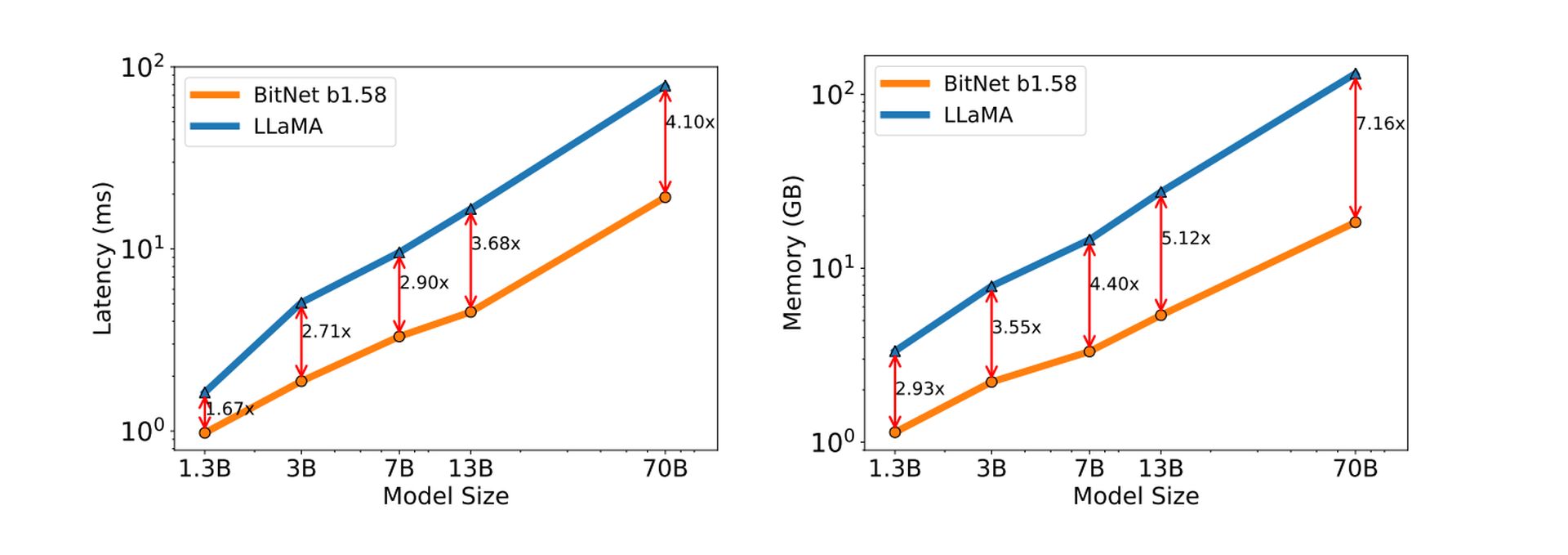

The reduced resource requirements of 1-bit LLMs could enable AI applications on a wider range of devices, even those with limited memory or computational power. This could lead to more widespread adoption of AI across various industries.

Smaller brains mean AI can run on smaller devices: your phone, your smartwatch, you name it.

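The simplified representation of weights in a 1-bit LLM translates to faster inference, which is the process of generating text, translating languages, or performing other language-related tasks. Every weight is -1, 0, or +1, so multiplying an input by a weight becomes adding it, subtracting it, or skipping it. The costly matrix multiplications therefore reduce largely to additions and subtractions.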

Simpler calculations mean the AI thinks and responds way faster.
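Here is what "simpler calculations" looks like in practice. With ternary weights, a matrix-vector product needs no multiplications at all. The plain-Python sketch below shows the idea with a hypothetical `ternary_matvec` helper and cross-checks it against an ordinary multiply-accumulate. Production kernels work on packed integers and are far more involved, so this illustrates the principle, not an optimized implementation.

```python
from typing import List

def ternary_matvec(weights: List[List[int]], x: List[float]) -> List[float]:
    """Matrix-vector product for a ternary weight matrix, using no multiplications.

    Each weight must be -1, 0, or +1: +1 adds the input, -1 subtracts it,
    and 0 skips it entirely.
    """
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi
            elif w == -1:
                acc -= xi
            elif w != 0:
                raise ValueError(f"non-ternary weight: {w}")
        out.append(acc)
    return out

W = [
    [1, 0, -1, 1],
    [0, -1, 1, 0],
]
x = [0.5, 2.0, -1.5, 3.0]

y = ternary_matvec(W, x)
# Cross-check against an ordinary multiply-accumulate
y_ref = [sum(w * xi for w, xi in zip(row, x)) for row in W]
print(y, y_ref)
```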

The computational efficiency of 1-bit LLMs also leads to lower energy consumption, making them more environmentally friendly and cost-effective to operate.

Less computing power equals less energy used. This is a major win for environmentally conscious tech and a meaningful step toward greener AI.

Apart from all that, the unique computational characteristics of 1-bit LLMs open up possibilities for designing specialized hardware optimized for their operations, potentially leading to even further advancements in performance and efficiency.

Meet Microsoft’s BitNet LLM

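Microsoft’s implementation of this technology is called BitNet b1.58. It builds on the earlier BitNet, whose weights were truly binary (-1 or +1). The additional 0 value is a crucial element. According to the researchers, it gives the model explicit support for feature filtering, which strengthens its modeling capability.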
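A toy example shows why that 0 matters. The sketch below compares one neuron's output using its full-precision weights, a binary {-1, +1} version (simply the sign of each weight), and a ternary {-1, 0, +1} version. All values are made up. The ternary row is what rounding after dividing by the mean absolute weight gives for these values. Scale factors are left out because they do not change the qualitative picture.

```python
from typing import List

def dot(weights: List[float], x: List[float]) -> float:
    """Weighted sum of the inputs: the core operation of a single neuron."""
    return sum(w * v for w, v in zip(weights, x))

# Made-up full-precision weights of one neuron: two strong connections
# (first and last) and two that barely matter (middle).
full_precision = [0.9, -0.02, 0.01, -0.8]

# Binary {-1, +1}: every weight keeps a full-strength vote (here, its sign).
binary = [1, -1, 1, -1]

# Ternary {-1, 0, +1}: the near-zero weights become 0 and drop out.
ternary = [1, 0, 0, -1]

# An input whose middle features are large but should be mostly ignored
x = [1.0, 4.0, -3.0, 1.0]

for name, w in [("full precision", full_precision), ("binary", binary), ("ternary", ternary)]:
    print(f"{name:>14}: {dot(w, x):+.2f}")
```

The binary neuron's output is dominated by the two features that barely matter. The ternary neuron switches those connections off and stays close to the full-precision result.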

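BitNet b1.58 demonstrates remarkable results despite the severe quantization. In the paper’s experiments, it matches a full-precision (FP16) LLaMA model of the same size in perplexity and end-task performance starting at 3 billion parameters. It also uses considerably less memory and runs faster.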

Breaking the 16-bit barrier

As mentioned before, traditional LLMs typically use 16-bit floating-point values (FP16) to represent weights within the model. This approach offers high precision, but it is memory-intensive and computationally expensive. BitNet b1.58 throws a wrench in this paradigm by adopting a 1.58-bit ternary representation for weights.

This means each weight can take on only three distinct values:

- -1: Represents a negative influence on the model’s output

- 0: Represents no influence on the output

- +1: Represents a positive influence on the output

Mapping weights efficiently

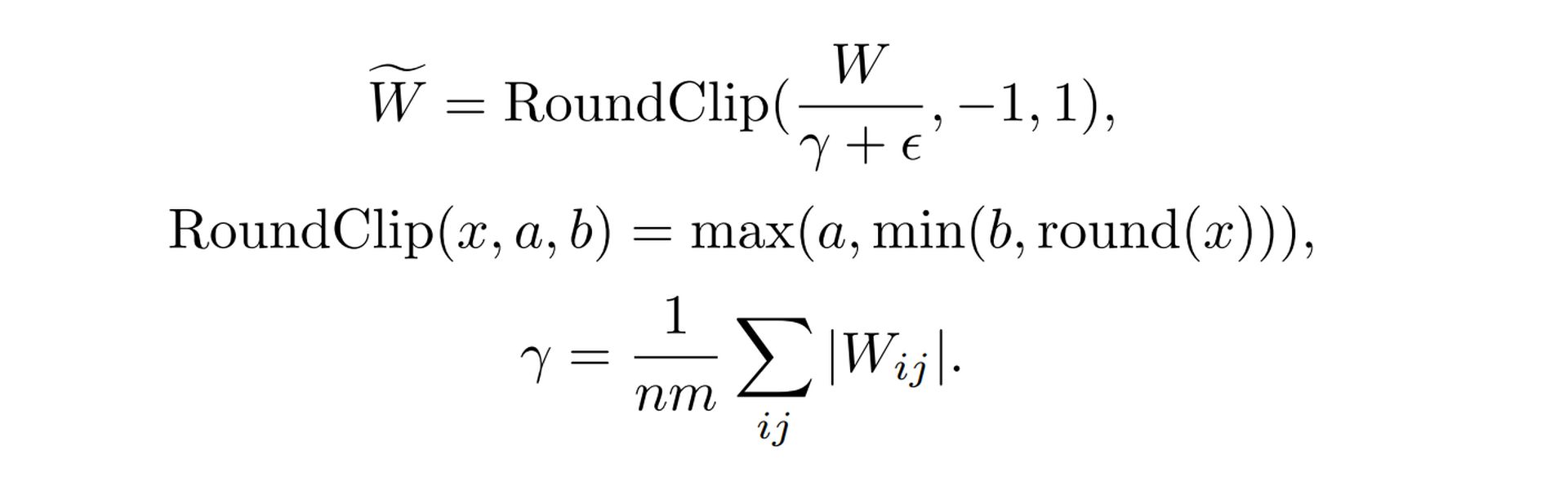

Transitioning from a continuous (FP16) to a discrete (ternary) weight space requires careful consideration. BitNet b1.58 employs a special quantization function to achieve this mapping effectively. This function takes the original FP16 weight values and applies a specific algorithm to determine the closest corresponding ternary value (-1, 0, or +1). The key here is to minimize the performance degradation caused by this conversion.

Here’s a simplified breakdown of the function:

- Scaling: The function first divides the entire weight matrix by its average absolute value (the "absmean"). This normalizes the weights so that their average magnitude becomes 1, which makes rounding to -1, 0, or +1 meaningful

- Rounding: Each scaled value is then rounded to the nearest integer and clipped to the range [-1, 1], so every weight lands on -1, 0, or +1
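The two steps above can be sketched in a few lines of plain Python. This is a minimal, unoptimized illustration that assumes a single scale γ for the whole matrix and a small ε to avoid division by zero. The function names are hypothetical and not taken from Microsoft’s code. The sketch also returns γ, so the ternary matrix can be mapped back to approximate weight values.

```python
from typing import List, Tuple

Matrix = List[List[float]]

def absmean_quantize(w: Matrix, eps: float = 1e-5) -> Tuple[List[List[int]], float]:
    """Quantize a weight matrix to ternary values {-1, 0, +1}.

    1. Scaling: divide every weight by gamma, the mean absolute value of the matrix.
    2. Rounding: round to the nearest integer, then clip into [-1, 1].
    Returns the ternary matrix and gamma, which can rescale outputs later.
    """
    n_elems = sum(len(row) for row in w)
    gamma = sum(abs(v) for row in w for v in row) / n_elems
    # Note: Python's round() sends exact .5 ties to the nearest even integer.
    q = [[max(-1, min(1, round(v / (gamma + eps)))) for v in row] for row in w]
    return q, gamma

def dequantize(q: List[List[int]], gamma: float) -> Matrix:
    """Approximate reconstruction of the original weights: gamma * ternary value."""
    return [[gamma * v for v in row] for row in q]

W = [
    [0.42, -0.07, 0.91, -0.33],
    [-0.05, 0.18, -0.76, 0.02],
]
Q, gamma = absmean_quantize(W)
print("gamma:", round(gamma, 4))
print("ternary weights:", Q)
print("reconstruction:", dequantize(Q, gamma))
```

For this example matrix, γ is 0.3425 and the ternary matrix comes out as [[1, 0, 1, -1], [0, 1, -1, 0]]. Small weights such as -0.07 and 0.02 collapse to 0, while large ones such as 0.91 are clipped to +1.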

See the detailed formula in Microsoft’s 1-bit LLM research paper.
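For reference, the absmean quantization function described in the paper can be written as follows. Here W is an n × m weight matrix, γ is its mean absolute value, and ε is a small constant that avoids division by zero:

```latex
\widetilde{W} = \operatorname{RoundClip}\!\left(\frac{W}{\gamma + \epsilon},\, -1,\, 1\right),
\qquad
\operatorname{RoundClip}(x, a, b) = \max\bigl(a, \min(b, \operatorname{round}(x))\bigr),
\qquad
\gamma = \frac{1}{nm} \sum_{ij} \lvert W_{ij} \rvert
```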

Activation scaling

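Activations, the intermediate values passed between layers, are also quantized in BitNet b1.58 during both training and inference. Following the original BitNet, activations are represented with 8 bits using per-token absmax scaling. Each token’s activation vector is divided by its largest absolute value and scaled into the symmetric range [-Q_b, Q_b], where Q_b = 2^7 = 128. Unlike the original BitNet, activations are not shifted into [0, Q_b] before non-linear functions, so no zero point is needed.

According to the paper, this choice has two advantages:

- Minimal performance cost: Scaling every activation into the same symmetric range has a negligible effect on model quality in the researchers’ experiments

- Simplification: Dropping the zero point makes the scheme simpler to implement and to optimize at the system level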
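The activation side can be sketched the same way. The plain-Python example below works through a small, BitLinear-style layer in four steps:

1. It quantizes one token’s activation vector to 8-bit integers with absmax scaling.
2. It ternarizes a small weight matrix with absmean scaling.
3. It accumulates the product using integers only.
4. It rescales the result.

The helper names are hypothetical. The sketch also assumes the common int8 convention of scaling by 127 / max|x| and clipping to [-128, 127], so the paper’s exact constants may differ slightly.

```python
from typing import List, Tuple

# Assumption: common int8 convention (scale by 127 / max|x|, clip to [-128, 127])
INT8_MAX = 127

def absmean_ternary(w: List[List[float]], eps: float = 1e-5) -> Tuple[List[List[int]], float]:
    """Ternary weights {-1, 0, +1} plus gamma, the mean absolute weight."""
    gamma = sum(abs(v) for row in w for v in row) / sum(len(row) for row in w)
    q = [[max(-1, min(1, round(v / (gamma + eps)))) for v in row] for row in w]
    return q, gamma

def absmax_int8(x: List[float], eps: float = 1e-5) -> Tuple[List[int], float]:
    """Per-token symmetric 8-bit quantization; no zero point is needed."""
    scale = INT8_MAX / (max(abs(v) for v in x) + eps)
    q = [max(-INT8_MAX - 1, min(INT8_MAX, round(v * scale))) for v in x]
    return q, scale

def bitlinear_forward(w: List[List[float]], x: List[float]) -> List[float]:
    """Approximate w @ x with ternary weights and 8-bit activations."""
    qw, gamma = absmean_ternary(w)
    qx, scale = absmax_int8(x)
    # Integer-only accumulation: with ternary weights this is just adds and subtracts
    acc = [sum(wi * xi for wi, xi in zip(row, qx)) for row in qw]
    # Rescale by gamma (ignoring the tiny eps) and undo the activation scale
    return [a * gamma / scale for a in acc]

W = [
    [0.42, -0.07, 0.91, -0.33],
    [-0.05, 0.18, -0.76, 0.02],
]
x = [1.0, -2.0, 0.5, 3.0]  # one token's activation vector

qx, scale = absmax_int8(x)
print("int8 activations:", qx)
print("BitLinear-style output:", bitlinear_forward(W, x))
```

On a tiny, untrained matrix like this, the result is only a coarse approximation of the full-precision product. The point is that the expensive inner loop touches nothing but small integers, and a single rescale at the end restores the original range.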

Open-source compatibility

The LLM research community thrives on open-source collaboration. To facilitate integration with existing frameworks, BitNet b1.58 adopts components similar to those found in the popular LLaMA model architecture. This includes elements like:

- RMSNorm: A normalization technique for stabilizing the training process

- SwiGLU: A gated activation function used in the feed-forward layers

- Rotary Embeddings: A method for encoding each token’s position within the sequence

- Removal of biases: Simplifying the model architecture
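Two of these components are compact enough to sketch. Below is a plain-Python RMSNorm and a SwiGLU feed-forward block of the form w2 · (SiLU(w1 · x) ⊙ (w3 · x)), with no bias terms anywhere. The names `w1`, `w2` and `w3` follow a common convention and are assumptions here, as are all the weight values. In BitNet b1.58 the projection matrices would themselves be quantized, which this sketch leaves out.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]

def rms_norm(x: Vector, gain: Vector, eps: float = 1e-6) -> Vector:
    """RMSNorm: divide by the root mean square of x (no mean subtraction), then apply a learned gain."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [g * v / rms for g, v in zip(gain, x)]

def matvec(m: Matrix, x: Vector) -> Vector:
    """Bias-free linear layer: each row of m produces one output."""
    return [sum(w * v for w, v in zip(row, x)) for row in m]

def silu(z: float) -> float:
    """SiLU (swish): z * sigmoid(z), with sigmoid written via tanh to avoid overflow."""
    return z * 0.5 * (1.0 + math.tanh(0.5 * z))

def swiglu_ffn(x: Vector, w1: Matrix, w3: Matrix, w2: Matrix) -> Vector:
    """Gated feed-forward block: w2 @ (silu(w1 @ x) * (w3 @ x))."""
    gate = [silu(v) for v in matvec(w1, x)]
    up = matvec(w3, x)
    return matvec(w2, [g * u for g, u in zip(gate, up)])

# Hidden size 3, feed-forward size 2; all weights are made-up values
x = [1.0, -2.0, 3.0]
h = rms_norm(x, gain=[1.0, 1.0, 1.0])

w1 = [[0.5, 0.0, 0.5], [0.0, 1.0, 0.0]]    # gate projection
w3 = [[1.0, 1.0, 0.0], [0.0, 0.0, 1.0]]    # up projection
w2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # down projection back to size 3

y = swiglu_ffn(h, w1, w3, w2)
print("RMSNorm output:", h)
print("SwiGLU output:", y)
```

RMSNorm skips the mean subtraction that LayerNorm performs, so the normalized vector has a mean square of 1 but not necessarily a mean of 0.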

By incorporating these LLaMA-like components, BitNet b1.58 can be integrated with minimal effort into popular open-source LLM software such as Hugging Face, vLLM, and llama.cpp. This lowers the barrier to adoption by the research community.

Featured image credit: Freepik.