Artificial intelligence is now a core part of how organizations protect their applications. This article explores how AI is reshaping security through smarter authentication, real-time threat detection, and adaptive access control. It examines the challenges that led to this shift, including alert fatigue, delayed response times, and increasingly sophisticated attacks. Real-world examples from JPMorgan Chase and the Mayo Clinic show how AI is being applied in practice. The article also looks ahead to trends like explainable AI, federated threat sharing, and self-patching systems. The message is clear: intelligent security is no longer optional. It is part of the foundation.

As of late 2023, one reality has cemented itself in our industry: the way we secure applications needs a serious upgrade. The volume, velocity, and sophistication of modern cyber threats have outgrown the static defenses of the past.

As someone deeply involved in both software engineering and security operations, I’ve seen how fast things have changed this year and how AI has become the driving force behind that change.

This shift wasn’t just about improving efficiency. It was a response to a growing security crisis. Legacy systems were buckling under the strain of nonstop alerts, delayed patching, and attackers using AI to automate and escalate threats.

Artificial intelligence moved from supporting defensive efforts to taking a central role. It powered smarter authentication, real-time vulnerability scanning, adaptive access control, and early steps toward self-healing applications. These capabilities signaled a deeper shift in how we now build, deploy, and protect digital systems.

Let’s walk through what happened, what worked, and what’s next.

The challenges that forced innovation

Before AI took hold in 2023, many organizations were already struggling to keep pace with evolving threats, legacy infrastructure, and outdated security workflows. The pressure to modernize was mounting fast, and the cracks were starting to show.

1. Manual threat detection was breaking down

Security Operations Centers (SOCs) often triage more than 20,000 alerts each week, and research suggests that about 83% of SOC alerts are noise. At that rate, roughly 16,600 of every 20,000 weekly alerts are false positives. Analysts spend up to one-third of their day chasing dead ends, while real threats quietly slip through.

This volume overload leads to alert fatigue, where analysts become desensitized and either miss high-priority signals or burn out entirely. The result is not just slower response times. It also creates serious gaps in security.

This isn’t a localized issue. According to Flashpoint’s 2022 Financial Threat Landscape report, the finance and insurance sector experienced 566 data breaches globally, exposing more than 254 million records.

In the United States, financial services remained one of the most targeted industries, underscoring how traditional detection methods were already struggling to keep pace. These breaches highlight a deeper problem: legacy systems were no longer equipped to handle the volume or complexity of threats facing the industry.

2. Slow response times put data at risk

Even in relatively mature environments, traditional systems couldn’t keep up. In organizations deploying software updates daily, waiting hours, or worse, days, for vulnerability scans or patch approvals wasn’t just inconvenient. It was dangerous. Attackers could begin scanning for a newly disclosed vulnerability within minutes of its announcement, and every hour of delay widened the window for exploitation.

Worse still, the average time to detect a breach in some industries remained over 200 days—a figure that’s as frustrating as it is alarming.

3. Attackers were getting smarter, faster

The tipping point came when attackers began using AI themselves. Generative AI and machine learning gave them the ability to craft phishing emails that were difficult to distinguish from human-written messages, automate code obfuscation, and simulate user behavior to avoid detection.

Rules-based systems could not compete with adaptive, learning-driven threats. The traditional model was simply outmatched, and defenders needed a new approach. It had to scale with the threat.

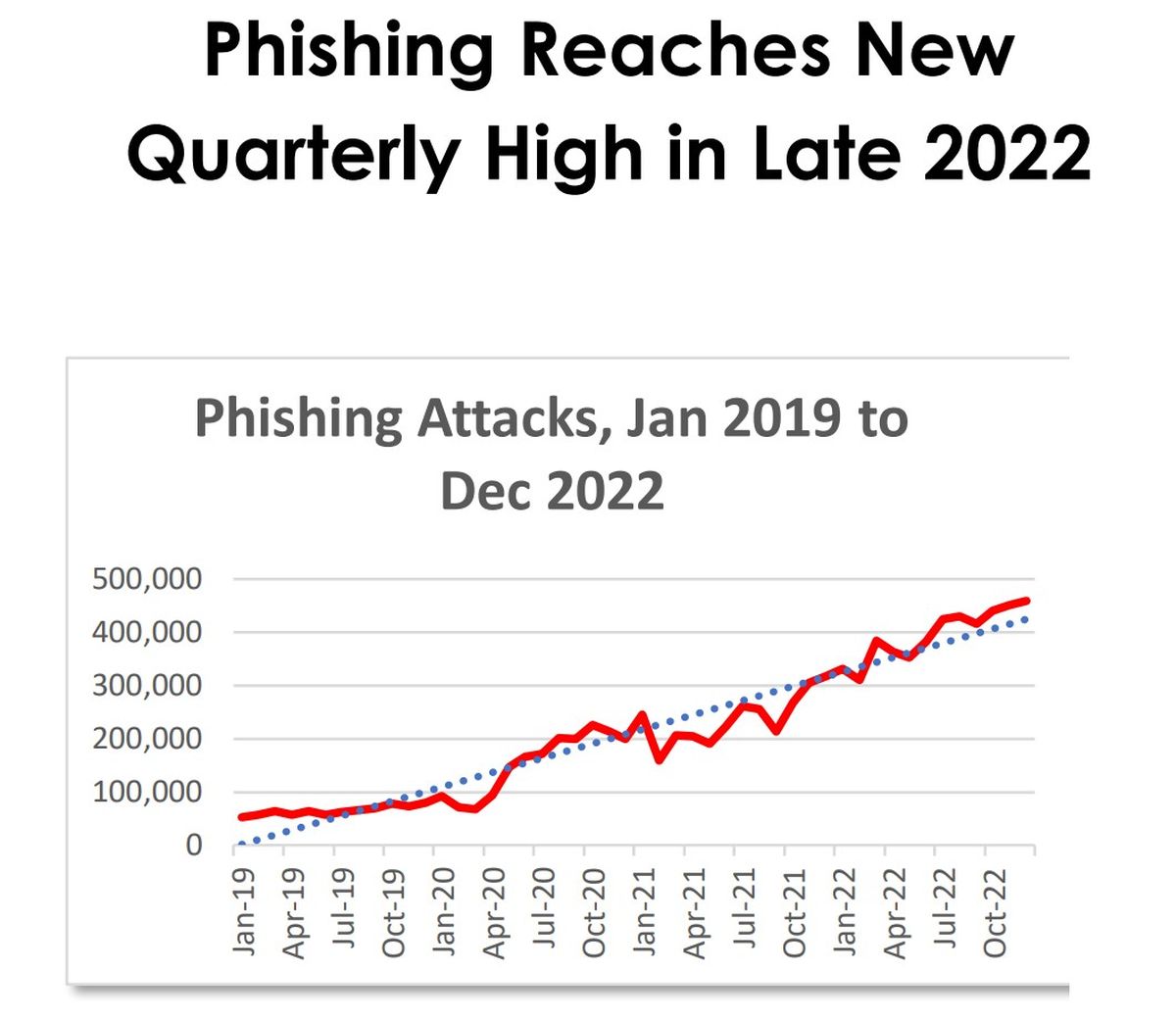

The warning signs were clear well before 2023. The Anti-Phishing Working Group (APWG) reported a record 4.7 million phishing attacks globally in 2022, making it the worst year ever recorded for phishing. Around the same time, Kaspersky’s systems blocked over 500 million attempts to access phishing websites—more than double the number from the previous year.

These attacks were becoming more automated and personalized, often aided by AI-generated content that could slip past traditional detection tools. As a result, they were harder to spot and more effective at breaching defenses.

Security teams are now facing adversaries operating at machine speed. To keep up, organizations must respond with intelligent, adaptive systems. Anything less puts critical systems at risk.

Bridging the gap

These three challenges—volume, latency, and sophistication—pushed many security teams to a breaking point. In 2023, it became clear that incremental upgrades were no longer enough. Artificial intelligence wasn’t a future consideration anymore. It was a present necessity.

The breakthroughs that mattered

2023 wasn’t the first year AI was used in security. But it was the year it became table stakes.

Here are the three areas where AI made the biggest difference in securing applications: authentication, vulnerability management, and digital rights enforcement.

To illustrate the shift clearly, here’s how traditional approaches compare to the AI-enhanced systems we saw adopted across industries in 2023:

Before vs. after AI: Key security capabilities

| Security Area | Traditional Approach | AI-Enhanced Approach |
|---|---|---|
| Authentication | Static credentials, rule-based MFA | Contextual risk scoring, behavior-based access decisions |
| Vulnerability Scanning | Periodic scans, post-deployment patching | Continuous real-time code analysis and early intervention |
| Digital Rights Management | Static encryption, manual access policies | Adaptive controls based on user behavior and device data |
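To make the authentication row more concrete, here is a minimal sketch of contextual risk scoring. It assumes a handful of hypothetical sign-in signals (unknown device, unfamiliar country, impossible travel speed, off-hours login) with hand-picked weights and thresholds. A production system would learn these from historical data rather than hard-code them, so treat the numbers as placeholders.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up_mfa"  # ask for an additional factor
    DENY = "deny"


@dataclass
class LoginContext:
    """Hypothetical contextual signals collected at sign-in time."""
    known_device: bool       # device fingerprint seen before for this user
    usual_country: bool      # geolocation matches the user's history
    travel_speed_kmh: float  # implied speed since the previous login
    hour_of_day: int         # 0-23 in the user's usual time zone


# Illustrative weights (they sum to 1.0); a real system would learn them from data.
WEIGHTS = {"new_device": 0.35, "new_country": 0.25, "impossible_travel": 0.30, "off_hours": 0.10}


def risk_score(ctx: LoginContext) -> float:
    """Sum the weights of every risk signal present; higher means riskier."""
    score = 0.0
    if not ctx.known_device:
        score += WEIGHTS["new_device"]
    if not ctx.usual_country:
        score += WEIGHTS["new_country"]
    if ctx.travel_speed_kmh > 1000:  # faster than a commercial flight
        score += WEIGHTS["impossible_travel"]
    if ctx.hour_of_day < 6 or ctx.hour_of_day >= 22:
        score += WEIGHTS["off_hours"]
    return score


def decide(ctx: LoginContext, step_up_at: float = 0.3, deny_at: float = 0.7) -> Decision:
    """Map the risk score to allow, step-up MFA, or deny."""
    score = risk_score(ctx)
    if score >= deny_at:
        return Decision.DENY
    if score >= step_up_at:
        return Decision.STEP_UP
    return Decision.ALLOW


if __name__ == "__main__":
    office = LoginContext(known_device=True, usual_country=True, travel_speed_kmh=0.0, hour_of_day=10)
    new_laptop = LoginContext(known_device=False, usual_country=True, travel_speed_kmh=0.0, hour_of_day=10)
    suspicious = LoginContext(known_device=False, usual_country=False, travel_speed_kmh=5000.0, hour_of_day=3)
    for ctx in (office, new_laptop, suspicious):
        print(decide(ctx).value)  # allow, step_up_mfa, deny
```

Unlike a fixed MFA rule that prompts every user the same way, the score lets routine sign-ins pass quietly and reserves friction, or an outright block, for unusual ones.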

Each of these transformations was more than a technical upgrade. They reshaped how organizations think about trust, risk, and operational efficiency.

Real-world case studies: Where AI made an impact

To understand how AI reshaped application security in 2023, it’s worth looking at how leading organizations put these technologies into action.

JPMorgan Chase: Using AI to detect insider fraud

In 2023, JPMorgan Chase advanced its fraud detection capabilities by integrating large language models (LLMs) into its cybersecurity workflows. These models are being trained to analyze unstructured data—such as emails, chat logs, and transaction histories—for patterns that could indicate insider threats or fraudulent behavior.

This marked a notable shift away from traditional rules-based systems, enabling the bank to extract meaning from context and identify anomalies earlier and more precisely.

According to American Banker, JPMorgan is moving beyond business rules and decision trees by applying LLMs to detect fraud-related activity in internal communications. Similarly, Bank Automation News reports that these models are being layered onto existing machine learning systems to enhance real-time detection and reduce manual triage.

While specific results have not been publicly disclosed, the initiative underscores a growing trend in financial services: using AI not just to investigate fraud after the fact, but to proactively detect it before damage is done.

Mayo Clinic and Google Cloud: Healthcare meets explainability

In 2023, Mayo Clinic partnered with Google Cloud to deploy generative AI tools aimed at improving how clinicians access and interpret complex patient data. Using Google’s Enterprise Search in Generative AI App Builder, they enabled faster, more intuitive retrieval of insights across medical records, imaging, genomics, and lab results.

Mayo Clinic CIO Cris Ross put it simply in the 2023 press release:

“Google Cloud’s tools have the potential to unlock sources of information that typically aren’t searchable in a conventional manner… Accessing insights more quickly and easily could drive more cures, create more connections with patients, and transform healthcare.”

While the rollout focused on search and workflow efficiency, it underscored a broader push for explainable, compliant AI systems in healthcare settings.

The next frontier: What’s coming in 2024 and beyond

As promising as 2023 has been, we’re just scratching the surface. Here’s where I see AI and application security heading next:

1. Explainable AI (XAI) will be mandated

The draft EU AI Act and similar regulatory frameworks are already pushing vendors to show how AI systems make decisions, especially when access or enforcement is involved. In application security, this means AI can’t just say “no.” It has to say “why.”

We’ll see more platforms offering dashboards, traceable logs, and transparent model explanations as default features.
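One straightforward way for an access decision to say “why” is to use a model whose score decomposes into per-feature contributions. The sketch below assumes a hypothetical logistic-regression risk model with hand-set weights and feature names; it returns the decision together with the features that drove it and emits the result as a JSON line suitable for a traceable audit log.

```python
import json
import math
from datetime import datetime, timezone

# Hypothetical logistic-regression weights for an access-risk model.
WEIGHTS = {
    "failed_logins_last_hour": 0.8,
    "new_device": 1.2,
    "privileged_resource": 0.9,
    "vpn_exit_node": 0.6,
}
BIAS = -3.0


def explain_decision(user: str, features: dict[str, float], threshold: float = 0.5) -> dict:
    """Score an access request and return the decision together with its reasons."""
    # In a linear model, each feature adds weight * value to the logit,
    # so these contributions fully account for the score (plus the bias).
    contributions = {name: w * features.get(name, 0.0) for name, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    risk = 1.0 / (1.0 + math.exp(-logit))
    # List the features that pushed toward denial, strongest first.
    reasons = sorted(
        ((name, round(c, 3)) for name, c in contributions.items() if c > 0),
        key=lambda item: item[1],
        reverse=True,
    )
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "decision": "deny" if risk >= threshold else "allow",
        "risk": round(risk, 3),
        "reasons": reasons,
    }


if __name__ == "__main__":
    record = explain_decision(
        "alice", {"failed_logins_last_hour": 3, "new_device": 1, "privileged_resource": 1}
    )
    # One JSON object per line gives an append-only, traceable audit log.
    print(json.dumps(record))
```

For non-linear models, attribution methods such as SHAP can play the same role; the shape of the audit record stays the same.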

2. Federated threat learning will go mainstream

Most threat intelligence sharing today is slow and manual. Federated learning changes that.

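This method lets organizations contribute to a shared AI model without sending raw data. Each participant trains a local model on its private logs and shares only the resulting model updates, such as weights, which a central server aggregates into the shared model. It’s crowdsourced cyber defense with far less privacy risk, although shared updates can still leak some information without safeguards such as secure aggregation.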

I’ve seen early deployments in financial services and healthcare, and the upside is huge: faster pattern recognition, earlier alerts, and stronger defenses against zero-day threats.
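Federated Averaging (FedAvg) is one widely used way to combine those local models. The sketch below assumes a toy logistic-regression detector and synthetic, hypothetical “private logs” for three organizations; only weight vectors cross organizational boundaries, and the server averages them in proportion to each participant’s sample count. Real deployments would add secure aggregation and often differential privacy on top.

```python
import math
import random

Vector = list[float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def local_train(weights: Vector, data: list[tuple[Vector, int]], lr: float = 0.1, epochs: int = 20) -> Vector:
    """Run logistic-regression gradient descent on one organization's private data."""
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x))) - y
            for i, xi in enumerate(x):
                grad[i] += err * xi
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad)]
    return w  # only these weights leave the organization, never `data`


def federated_average(updates: list[tuple[Vector, int]]) -> Vector:
    """FedAvg: average client weights, weighted by each client's sample count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


def make_private_logs(n: int, seed: int) -> list[tuple[Vector, int]]:
    """Hypothetical data: feature 1 is a threat signal, feature 0 is a bias term."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        malicious = rng.random() < 0.3
        signal = rng.gauss(2.0 if malicious else -1.0, 1.0)
        rows.append(([1.0, signal], int(malicious)))
    return rows


if __name__ == "__main__":
    clients = [make_private_logs(n, seed) for n, seed in [(200, 1), (50, 2), (120, 3)]]
    global_w = [0.0, 0.0]
    for _ in range(10):  # federated rounds
        updates = [(local_train(global_w, data), len(data)) for data in clients]
        global_w = federated_average(updates)
    print("global weights:", [round(w, 3) for w in global_w])
```

Weighting by sample count keeps a small participant from pulling the shared model as hard as a large one, while still letting its local patterns contribute.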

3. AI-generated red teams

Red teaming has long been the gold standard for testing defenses. In 2024, I expect generative AI to automate much of this.

Already, platforms like MITRE Caldera simulate real-world attacks using automated agents. Soon, we’ll see commercial offerings that let teams generate adaptive, evolving attack scenarios continuously. This means constant pressure testing, with AI acting as both the attacker and the analyst.

4. Self-patching applications

Imagine a system that detects a vulnerability, writes a patch, tests it in a sandbox, and deploys it, all on its own.

We’re getting closer. Security tools like Microsoft Security Copilot and container-native scanners are evolving toward this capability. While still limited, I expect to see autonomous patching roll out in closed environments—especially for microservices, containers, and edge devices—within the next 12 to 18 months.
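The detect, patch, test, and deploy loop can be sketched as a gated pipeline that fails closed: if any stage cannot produce or validate a patch, the finding goes to a human instead of to production. Everything here is hypothetical. `Finding`, `remediate`, and `bump_dependency` are illustrative names, the advisory IDs are placeholders, and the “patch” is a conservative dependency pin standing in for AI-generated code.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Finding:
    """A hypothetical vulnerability finding from a scanner."""
    component: str
    advisory_id: str
    fixed_version: Optional[str]  # None when no upstream fix exists yet


@dataclass
class RemediationResult:
    finding: Finding
    action: str = "escalated"  # becomes "deployed" only if every gate passes
    notes: list[str] = field(default_factory=list)


def remediate(
    finding: Finding,
    propose_patch: Callable[[Finding], Optional[str]],
    run_sandbox_tests: Callable[[str], bool],
    deploy: Callable[[str], None],
) -> RemediationResult:
    """Detect -> patch -> sandbox test -> deploy, failing closed to a human at each gate."""
    result = RemediationResult(finding)
    patch = propose_patch(finding)
    if patch is None:
        result.notes.append("no candidate patch; escalating to on-call engineer")
        return result
    if not run_sandbox_tests(patch):
        result.notes.append("sandbox tests failed; patch discarded")
        return result
    deploy(patch)
    result.action = "deployed"
    result.notes.append(f"deployed {patch}")
    return result


def bump_dependency(finding: Finding) -> Optional[str]:
    """Hypothetical patch generator: pin the component to its fixed version."""
    if finding.fixed_version is None:
        return None
    return f"{finding.component}=={finding.fixed_version}"


if __name__ == "__main__":
    deployed: list[str] = []
    findings = [
        Finding("libfoo", "ADV-1", "2.4.1"),  # placeholder IDs, not real advisories
        Finding("libbar", "ADV-2", None),
    ]
    for f in findings:
        # Stand-in test runner: a real pipeline would run the suite in an isolated sandbox.
        outcome = remediate(f, bump_dependency, lambda patch: True, deployed.append)
        print(f.component, outcome.action, outcome.notes)
```

Passing the patch generator, test runner, and deploy step in as callables keeps the gating logic testable on its own, and it allows a simple version bump to be swapped for a model-generated fix later without loosening the gates.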

Intelligence isn’t optional; it’s infrastructure

If you asked me two years ago whether AI would be central to application security, I would’ve said it had promise. Today, I’d say it’s a requirement.

AI is no longer just helping us do security better. It is changing what secure even means. It filters the noise, highlights what matters, and frees human analysts to focus on strategy instead of sorting through false positives. It is shifting us from reactive to predictive, from fragmented to focused.

Of course, we still need controls. We need transparency, explainability, and human oversight. But for the first time in years, defenders are not outmatched. We now have tools that learn. Tools that scale. Tools that adapt.

This is not just a step forward. It is a full reset.

As we move into 2024, one thing is clear. Intelligence is no longer optional. It is infrastructure.

About the author

Shikha Gupta is a senior software engineer at Amazon and a patent-holding inventor specializing in distributed system security, tokenization, and data privacy architecture. She holds multiple U.S. patents and earned her M.S. in Computer Science from the University of Southern California. Her work bridges academic research and enterprise-scale privacy systems, with a growing focus on sustainable and secure infrastructure.