Stability AI has unveiled FreeWilly, a pair of new large language models (LLMs) trained on “synthetic” data. The models were developed by Stability AI’s CarperAI lab and are now publicly available.

Stability AI, the company behind the Stable Diffusion image generation AI, unveiled the two new LLMs on Friday. The company was founded by former UK hedge funder Emad Mostaque, who has been accused of inflating his resume. The models are based on Meta’s open-source LLaMA and LLaMA 2 models, respectively, but were trained on a completely new, smaller dataset that includes synthetic data.

Both models excel at solving complicated problems in specialist fields like law and mathematics, and at handling subtle linguistic details.

The FreeWillys were published by Stability’s subsidiary CarperAI under a “non-commercial license,” which prohibits their usage for profit-making, enterprise, or business objectives. Instead, they are intended to further research and promote open access in the AI community.

What is FreeWilly?

In partnership with AI development firm CarperAI, Stability AI debuted FreeWilly1 and its successor FreeWilly2 on July 21, 2023. FreeWilly1 is built on Meta’s large-scale language model LLaMA 65B and was tuned via supervised fine-tuning (SFT) on synthetically created datasets. FreeWilly2, on the other hand, is built on LLaMA 2 70B.

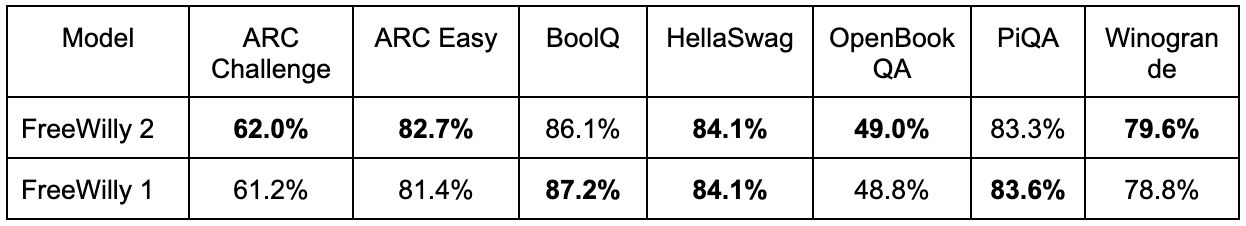

According to reports, FreeWilly2 performs on par with GPT-3.5 on several tasks. In internal benchmark tests carried out by Stability AI researchers, FreeWilly2 reached 86.4% accuracy on “HellaSwag,” a natural language inference task that evaluates common sense, ahead of ChatGPT with GPT-3.5, which reached 85.5%.

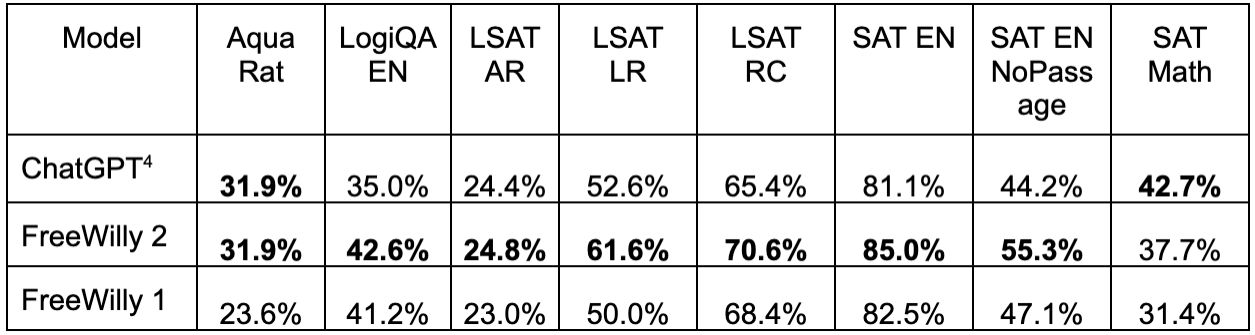

On “AGIEval,” a benchmark for large language models, FreeWilly2 outperformed GPT-3.5 on most tasks. The exception was “SAT Math,” the mathematics portion of the US college entrance test.

Stability AI also emphasizes that the FreeWilly models were released with care, following a thorough risk assessment by a specialized internal team. The company invites outside feedback to improve its safety protocols.


“FreeWilly1 and FreeWilly2 set a new standard in the field of open access Large Language Models. They both significantly advance research, enhance natural language understanding and enable complex tasks. We are excited about the endless possibilities that these models will bring to the AI community and the new applications they will inspire,” Stability AI said in its announcement.

https://twitter.com/carperai/status/1682479222579765248?s=20

Researchers used the “Orca” training process on FreeWilly

The models’ names are puns on “Orca,” an AI training process from Microsoft researchers that enables “smaller” models (those exposed to less data) to perform as well as large foundation models trained on larger datasets. (This is not a reference to the orcas that IRL sank a boat.)

Specifically, FreeWilly1 and FreeWilly2 were trained on 600,000 data points, just 10% of the size of the original Orca dataset, using instructions drawn from four datasets produced by Enrico Shippole. As a result, training them was significantly cheaper and more environmentally friendly (using less energy and leaving a smaller carbon footprint) than training the original Orca model and the majority of leading LLMs. The models still delivered excellent performance, sometimes matching or even surpassing ChatGPT with GPT-3.5.
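To put the dataset figures in perspective, here is a quick back-of-the-envelope calculation in Python. It uses only the two numbers quoted above (600,000 data points and a 10% share); the function and variable names are illustrative, and the result is an implied estimate, not an official figure from Microsoft or Stability AI.

```python
def implied_full_size(subset_examples: int, subset_percent: int) -> int:
    """Return the full dataset size implied by a subset and its share in percent."""
    if subset_percent <= 0:
        raise ValueError("subset_percent must be positive")
    # Integer arithmetic avoids floating-point rounding surprises.
    return subset_examples * 100 // subset_percent


freewilly_examples = 600_000  # data points quoted in the article
share_of_orca_percent = 10    # "just 10%" of the original Orca dataset

orca_examples = implied_full_size(freewilly_examples, share_of_orca_percent)
print(f"Implied original Orca dataset size: {orca_examples:,} examples")
```

In other words, if both quoted figures are accurate, the original Orca dataset would contain roughly six million examples.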

To evaluate these models, the researchers used EleutherAI’s lm-eval-harness, to which they added AGIEval. The results demonstrate that both FreeWilly models do exceptionally well in complex reasoning, language subtlety recognition, and problem-solving in specialist fields like law and mathematics.

In the team’s view, the two models advance natural language understanding and open up possibilities that were previously impractical. The researchers say they look forward to seeing the novel applications these models will inspire across artificial intelligence.

Featured image credit: Stability AI