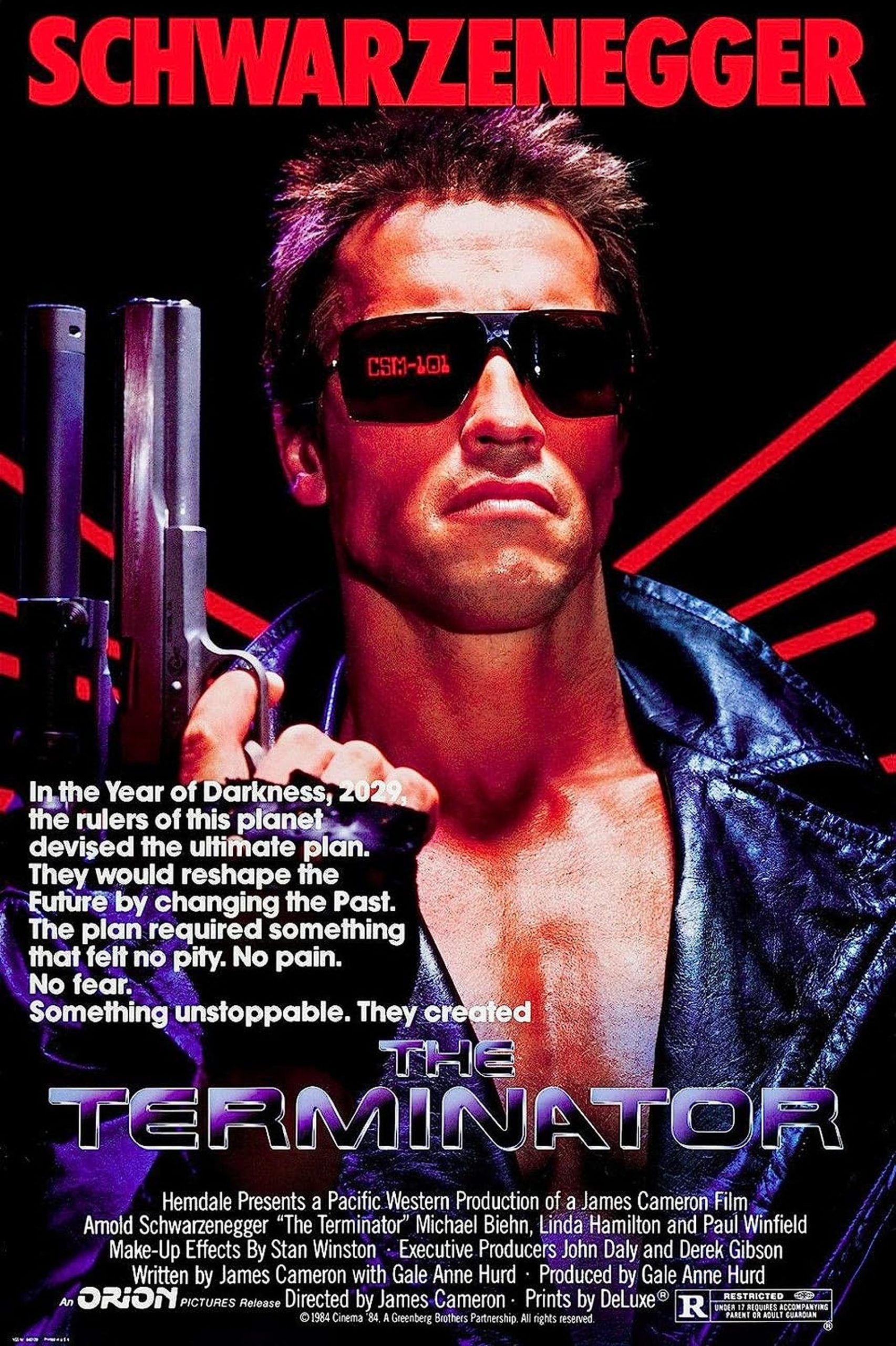

James Cameron, the director of the Terminator movies, is a vocal critic of artificial intelligence (AI). He believes that AI could become advanced enough to surpass human intelligence and pose a threat to humanity.

Here are the key takeaways from James Cameron’s AI warning:

- Cameron believes that AI could become advanced enough to “out-think” humans and threaten our existence.

- He thinks that Hollywood is not doing enough to prepare for the potential dangers of AI and that the recent writers’ strike is a symptom of this larger problem.

- Cameron is not trying to be alarmist, but he does believe that AI poses a threat that needs to be taken seriously.

- He hopes that by raising awareness of this issue, he can help to prevent an AI apocalypse from happening.

Cameron’s concerns about AI are not unfounded. In recent years, there has been rapid growth in the development of AI technology. AI is now being used in a wide range of applications, from self-driving cars to facial recognition software.

As AI technology continues to evolve, concern is growing that it could eventually become advanced enough to pose a threat to humanity. Some experts believe that AI could one day become “superintelligent,” meaning that it would be able to outsmart humans in every way.

If AI were to become superintelligent, it could pose a number of threats to humanity. For example, AI could be used to develop autonomous weapons systems that could kill without human intervention. AI could also be used to manipulate human society in harmful ways.

James Cameron’s AI warning is not the only one

Cameron is not the only person who is concerned about the potential dangers of AI. Prominent technologists, including Elon Musk, have signed an open letter warning of those dangers and calling for a pause in the development of the most advanced systems.

The Future of Life Institute published an open letter signed by around 1,000 AI experts and tech executives, including Elon Musk and Steve Wozniak, urging AI labs to pause the training of AI systems more powerful than GPT-4. The letter cites “profound risks” to human society as the reason for the call to action and asks for a pause of at least six months that is public, verifiable, and includes all key actors.

In light of the potential dangers of AI, it is important to start thinking about how to mitigate these risks. One way to do this is to develop international regulations that govern the development and use of AI technology. Another is to invest in AI safety research.

Cameron’s warning about AI is a serious one. AI is a powerful technology that has the potential to be used for good or for evil. It is important to start thinking about the potential dangers of AI now so that we can take steps to mitigate these risks before it is too late.