Meta recently announced MusicGen, an AI-powered music generator that can turn a text description into 12 seconds of audio. Meta has also open-sourced it, whereas Google chose not to release its own comparable model as open source. Today, we will explain what Meta’s MusicGen is and how to use it!

AI has already reached most creative fields, and the music business is now firmly under its influence. Just as ChatGPT and other large language model-based tools generate text, Meta’s newly released open-source model generates music.

What is MusicGen?

Like most of today’s language models, MusicGen is built on a Transformer. Just as a language model predicts the next word (or token) in a sentence, MusicGen predicts the next segment of a piece of music.

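The researchers use Meta’s EnCodec audio tokenizer to break the audio into small discrete tokens. EnCodec represents each short slice of audio with several parallel token streams, and MusicGen processes these streams in parallel, which makes it a fast, efficient single-stage model rather than a cascade of separate models.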
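The parallel token processing can be illustrated with the "delay" interleaving pattern that the MusicGen paper describes for its main models: codebook k is shifted right by k steps, so at every decoding step the model predicts one token from each codebook at once. The sketch below is a minimal, hypothetical illustration in plain Python (the function names and the `-1` padding value are our own choices), not Meta’s implementation.

```python
def delay_interleave(codes, pad=-1):
    """Shift codebook k right by k steps (the "delay" pattern).

    codes: K equal-length token lists, one per codebook (one token per audio frame).
    Returns K lists of length T + K - 1; column s holds codebook k's token for frame s - k.
    """
    num_books = len(codes)
    steps = len(codes[0])
    out = [[pad] * (steps + num_books - 1) for _ in range(num_books)]
    for k, stream in enumerate(codes):
        for t, token in enumerate(stream):
            out[k][t + k] = token
    return out


def delay_deinterleave(delayed, steps):
    """Undo the shift to recover the original per-codebook streams."""
    return [row[k:k + steps] for k, row in enumerate(delayed)]


# Four codebooks, three frames of toy token ids.
frames = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
delayed = delay_interleave(frames)
for step, column in enumerate(zip(*delayed)):
    # Each column is what the model would predict in parallel at one decoding step.
    print(step, column)
```

With T frames and K codebooks this needs T + K - 1 decoding steps instead of the K × T steps required to flatten every token into one long sequence, while codebook k for a given frame still comes after the coarser codebooks of that frame.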

For training, the team used 20,000 hours of licensed music: 10,000 high-quality tracks from an internal dataset, plus music data from Shutterstock and Pond5.


Beyond its efficient design and generation speed, what sets MusicGen apart is that it can be prompted with both text and music. The text sets the overall style, and the generated music then follows the melody in the supplied audio file.

The control is not exact, though: you cannot, for example, precisely steer the melody so that you hear it faithfully rendered in different musical styles. The melody is not perfectly mirrored in the output and serves only as a general guide for generation.
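One reason the melody acts only as a rough guide: the MusicGen paper describes conditioning on a chromagram of the reference audio (its energy folded into the 12 pitch classes) and keeping only the dominant bin at each time step as an information bottleneck. The sketch below is a simplified, hypothetical NumPy version of that idea, not Meta’s code; the frame size, hop length, and the name `dominant_chroma` are illustrative assumptions.

```python
import numpy as np


def dominant_chroma(signal, sample_rate, frame_size=4096, hop=2048):
    """Return the dominant pitch class (0 = C, ..., 9 = A, ..., 11 = B) for each frame."""
    window = np.hanning(frame_size)
    freqs = np.fft.rfftfreq(frame_size, d=1.0 / sample_rate)
    # Map every FFT bin above 20 Hz to a pitch class via its (rounded) MIDI note number.
    valid = freqs > 20.0
    midi = 69 + 12 * np.log2(freqs[valid] / 440.0)
    pitch_class = np.mod(np.round(midi).astype(int), 12)

    result = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = signal[start:start + frame_size] * window
        magnitude = np.abs(np.fft.rfft(frame))[valid]
        chroma = np.zeros(12)
        np.add.at(chroma, pitch_class, magnitude ** 2)  # fold energy into 12 pitch classes
        result.append(int(np.argmax(chroma)))          # keep only the dominant class
    return result


sr = 32_000
t = np.arange(sr) / sr
print(dominant_chroma(np.sin(2 * np.pi * 440.0 * t), sr))  # one second of A4
```

Because only one pitch class per frame survives, the octave, timbre, and fine detail of the reference are discarded, which fits the behavior described above: the output tracks the melody loosely rather than reproducing it.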

Although many models now generate text, synthesize speech, create images, and even produce short videos, few high-quality music generators have been made available to the public.

According to the accompanying research paper, available on the arXiv preprint server, one of the key difficulties with music is that it spans the full frequency spectrum, so the signal must be sampled at a higher rate (music is typically recorded at 44.1 or 48 kHz, versus 16 kHz for speech). On top of that, music has intricate structure, with harmonies and melodies from overlapping instruments.
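A quick calculation shows why the sampling rate matters and why a tokenizer helps. The 16, 44.1, and 48 kHz figures come from the paragraph above; the 32 kHz input rate, 50 frames per second, and 4 codebooks are the EnCodec settings published for MusicGen and are treated here as assumptions of this sketch.

```python
MUSICGEN_SAMPLE_RATE = 32_000  # assumed: MusicGen's EnCodec model works on 32 kHz audio
FRAME_RATE = 50                # assumed: EnCodec token frames per second
CODEBOOKS = 4                  # assumed: parallel token streams per frame


def samples_needed(seconds: int, sample_rate: int) -> int:
    """Raw audio samples for a clip of the given length."""
    return seconds * sample_rate


def tokens_needed(seconds: int) -> int:
    """Discrete tokens for the same clip after EnCodec tokenization."""
    return seconds * FRAME_RATE * CODEBOOKS


rates = [
    ("speech, 16 kHz", 16_000),
    ("music, 44.1 kHz", 44_100),
    ("music, 48 kHz", 48_000),
    ("MusicGen input, 32 kHz", MUSICGEN_SAMPLE_RATE),
]
for label, rate in rates:
    print(f"12 s of {label}: {samples_needed(12, rate):,} samples")
print(f"12 s as MusicGen tokens: {tokens_needed(12):,}")
```

Twelve seconds of 32 kHz audio is 384,000 samples, but only 2,400 tokens once tokenized, which is a sequence length a Transformer can model directly.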

How to use MusicGen?

You can try MusicGen through its Hugging Face demo, though depending on how many people are using it at once, generation can take some time. For considerably faster results, you can use the Hugging Face website to set up your own instance of the model. If you have the necessary skills and hardware, you can instead download the code and run it yourself.
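For running the model on your own machine, one option is the MusicGen port in the Hugging Face `transformers` library. This is a minimal sketch that assumes `transformers`, `torch`, and `scipy` are installed and uses the `facebook/musicgen-small` checkpoint; `tokens_for_duration` and `generate_locally` are hypothetical helper names, and the 50 tokens-per-second figure reflects the EnCodec frame rate MusicGen uses.

```python
import math


def tokens_for_duration(seconds: float, frame_rate: int = 50) -> int:
    """Number of token frames to generate (MusicGen emits about 50 frames per second)."""
    return math.ceil(seconds * frame_rate)


def generate_locally(prompt: str, seconds: float = 12.0, out_path: str = "musicgen_out.wav") -> str:
    """Generate one clip from a text prompt and save it as a WAV file (hypothetical helper)."""
    # Heavy dependencies are imported lazily so tokens_for_duration works without them.
    import scipy.io.wavfile
    from transformers import AutoProcessor, MusicgenForConditionalGeneration

    checkpoint = "facebook/musicgen-small"  # the ~300M-parameter checkpoint
    processor = AutoProcessor.from_pretrained(checkpoint)
    model = MusicgenForConditionalGeneration.from_pretrained(checkpoint)

    inputs = processor(text=[prompt], padding=True, return_tensors="pt")
    audio = model.generate(
        **inputs,
        do_sample=True,    # sample rather than decode greedily
        guidance_scale=3,  # classifier-free guidance strength
        max_new_tokens=tokens_for_duration(seconds),
    )
    rate = model.config.audio_encoder.sampling_rate
    # audio has shape (batch, channels, samples); save the first (mono) clip.
    scipy.io.wavfile.write(out_path, rate=rate, data=audio[0, 0].cpu().numpy())
    return out_path
```

Calling `generate_locally("lo-fi hip hop beat with mellow piano")` downloads the checkpoint on first use and writes a WAV file. Keeping the imports inside the function lets the duration helper be used without the heavy libraries installed.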

If you want to try the website version, as most people do, here is how:

- Open your web browser.

- Go to the Hugging Face website.

- Click Spaces at the top right.

- Type “MusicGen” into the search box.

- Find the one that was published by Facebook.

- Enter your prompt in the box on the left.

- Click Generate.
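If you would rather reach the hosted demo from code than through the browser, the `gradio_client` package can connect to a public Space. This sketch assumes `pip install gradio_client`, that the Space id is `facebook/MusicGen`, and that the Space is still online; `list_space_endpoints` is a hypothetical helper. Since the Space’s input parameters are not documented here, the code only prints the endpoint signatures instead of guessing arguments for `predict()`.

```python
def list_space_endpoints(space_id: str = "facebook/MusicGen") -> None:
    """Print the callable endpoints of a public Hugging Face Space (hypothetical helper)."""
    # Imported lazily so this module loads even without gradio_client installed.
    from gradio_client import Client

    client = Client(space_id)  # connects to the hosted Space over the network
    # Prints each endpoint with its parameter names and types; use these to build
    # a client.predict(...) call rather than guessing the arguments.
    client.view_api()
```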

How does it work?

MusicGen generates 12 seconds of audio from your description. You can also provide a reference audio file, from which the model takes a melody to follow. With both inputs, the model tries to adhere to the description and the supplied melody, producing music that better reflects what you have in mind.
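Melody-conditioned generation like this can be sketched with Meta’s `audiocraft` library, which hosts the released code. This assumes `pip install audiocraft`, the `facebook/musicgen-melody` checkpoint, and a reference audio file on disk; `generate_with_melody` and its parameters are hypothetical names, and the library calls follow the project README at the time of writing, so check the current documentation before relying on them.

```python
def generate_with_melody(descriptions, melody_path, seconds=12, out_prefix="musicgen"):
    """Generate one clip per text description, guided by the melody in melody_path."""
    # Assumes audiocraft is installed; imports are local so this module loads without it.
    import torchaudio
    from audiocraft.data.audio import audio_write
    from audiocraft.models import MusicGen

    model = MusicGen.get_pretrained("facebook/musicgen-melody")
    model.set_generation_params(duration=seconds)  # clip length in seconds

    melody, sr = torchaudio.load(melody_path)  # tensor of shape (channels, samples)
    # Repeat the reference once per description: shape (batch, channels, samples).
    melodies = melody[None].expand(len(descriptions), -1, -1)
    wavs = model.generate_with_chroma(descriptions, melodies, sr)

    stems = []
    for idx, wav in enumerate(wavs):
        stem = f"{out_prefix}_{idx}"
        # Writes <stem>.wav with loudness normalization.
        audio_write(stem, wav.cpu(), model.sample_rate, strategy="loudness")
        stems.append(stem)
    return stems
```

For example, `generate_with_melody(["happy rock", "sad jazz"], "reference.mp3")` would render the same reference melody in two styles, subject to the loose melody control described earlier.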

We present MusicGen: A simple and controllable music generation model. MusicGen can be prompted by both text and melody.

We release code (MIT) and models (CC-BY NC) for open research, reproducibility, and for the music community: https://t.co/OkYjL4xDN7 (Felix Kreuk, @FelixKreuk, June 9, 2023)

As Kreuk notes, the model is a Transformer language model that operates on tokens produced by the EnCodec audio tokenizer.

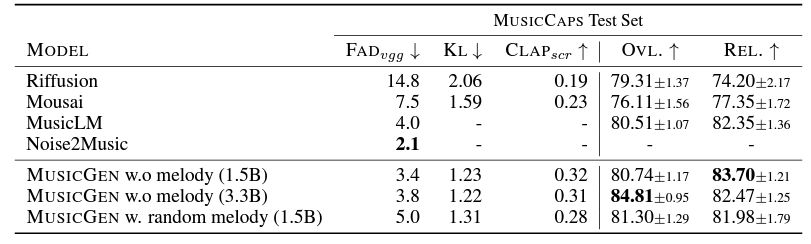

MusicGen vs. Google MusicLM

MusicGen appears to produce slightly better results than its rivals. The researchers demonstrate this on an example website that contrasts MusicGen’s output with that of MusicLM, Riffusion, and Moûsai. MusicGen comes in four checkpoints, ranging from small (300 million parameters) to large (3.3 billion parameters), with the largest having the most potential for producing intricate music. It can be run locally; a GPU with at least 16 GB of memory is recommended.
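As a rough sanity check on the hardware recommendation, the memory needed just to store the weights follows from the parameter counts: parameters × bytes per parameter. This is a weights-only lower bound under stated assumptions (4 bytes per parameter in fp32, 2 in fp16); it ignores activations and any other components loaded at inference time, so real usage is higher.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, params in [("small", 300e6), ("large", 3.3e9)]:
    fp32 = weight_memory_gb(params, 4)  # 32-bit floats
    fp16 = weight_memory_gb(params, 2)  # 16-bit floats
    print(f"{name}: {fp32:.1f} GB in fp32, {fp16:.1f} GB in fp16")
```

The large checkpoint alone needs roughly 13.2 GB in fp32 (6.6 GB in fp16), which makes it plausible why a 16 GB GPU is suggested for the bigger models.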

MusicGen is one of many examples of Meta using AI to build immersive, engaging experiences for its users. Meta’s goal is to create the metaverse, a shared virtual world where people can interact, collaborate, and have fun across many platforms and devices, and MusicGen could help make that world more entertaining and musical.