Generative AI (GenAI) has garnered significant attention in recent years for its remarkable capabilities in generating text, synthesizing images, and even composing music. However, when it comes to critical decision-making in high-stakes environments such as healthcare, finance, or autonomous systems, these systems fall short. Despite its potential, the current generation of GenAI is limited in interpretability, data dependency, and robustness, and it is not yet ready for deployment in scenarios where critical decisions are at stake.

Data dependency and bias propagation

GenAI models, particularly large language models (LLMs) like GPT-3, are trained on vast corpora of text scraped from the internet. While this data-driven approach allows these models to learn complex patterns in natural language, it also introduces significant risks. Data quality and bias are of paramount concern in critical decision-making contexts. Bender et al. (2021) highlight the dangers of “stochastic parrots”: models that regurgitate text without understanding, potentially amplifying existing biases in the data.

In the context of data architecture, the dependency on large-scale, unstructured data introduces challenges related to data governance and lineage. Ensuring the provenance and quality of training data is critical. However, the opaque nature of many GenAI models makes it difficult to trace decisions back to specific data sources. This lack of traceability is particularly problematic in regulated industries where accountability is paramount.

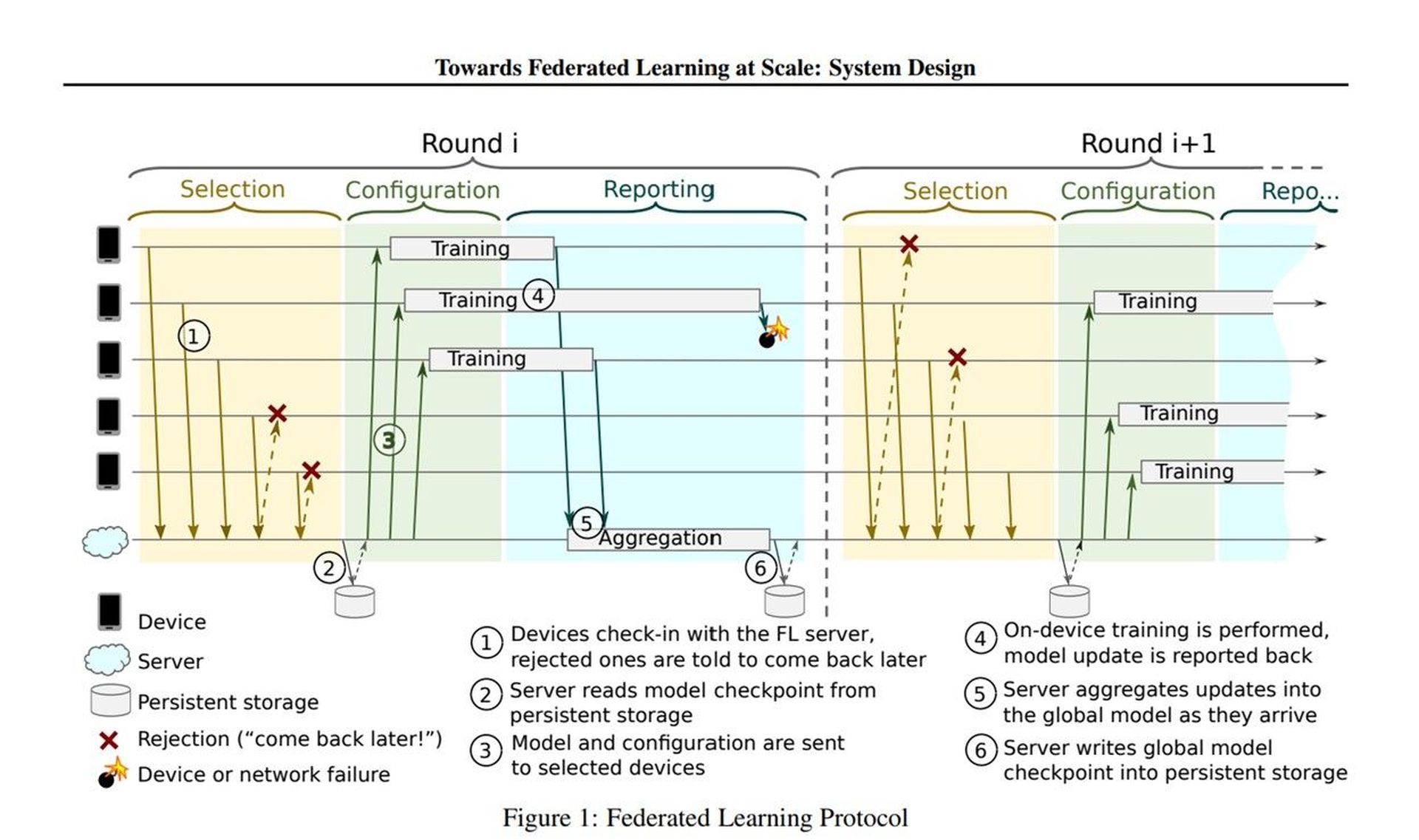

Moreover, the issue of bias is exacerbated by the black-box nature of these models. Techniques such as differential privacy and federated learning have been proposed to mitigate bias and protect data privacy (Bonawitz et al., 2019). However, these approaches are still in their infancy, and their integration into GenAI pipelines remains a challenge. Without robust mechanisms for bias detection and correction, the deployment of GenAI in critical decision-making scenarios could lead to discriminatory outcomes.
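To make "bias detection" concrete, here is a minimal sketch of one common check, the demographic parity gap, which compares the rate of positive decisions across groups. The decisions, the group labels, and the function names are hypothetical and chosen only for illustration; a real audit would use several fairness metrics and far more data.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the fraction of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved) and a hypothetical protected attribute.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates, gap)
```

A gap of zero means every group receives positive decisions at the same rate. What counts as an acceptable gap is a policy decision that the metric itself cannot settle.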

Interpretability and explainability

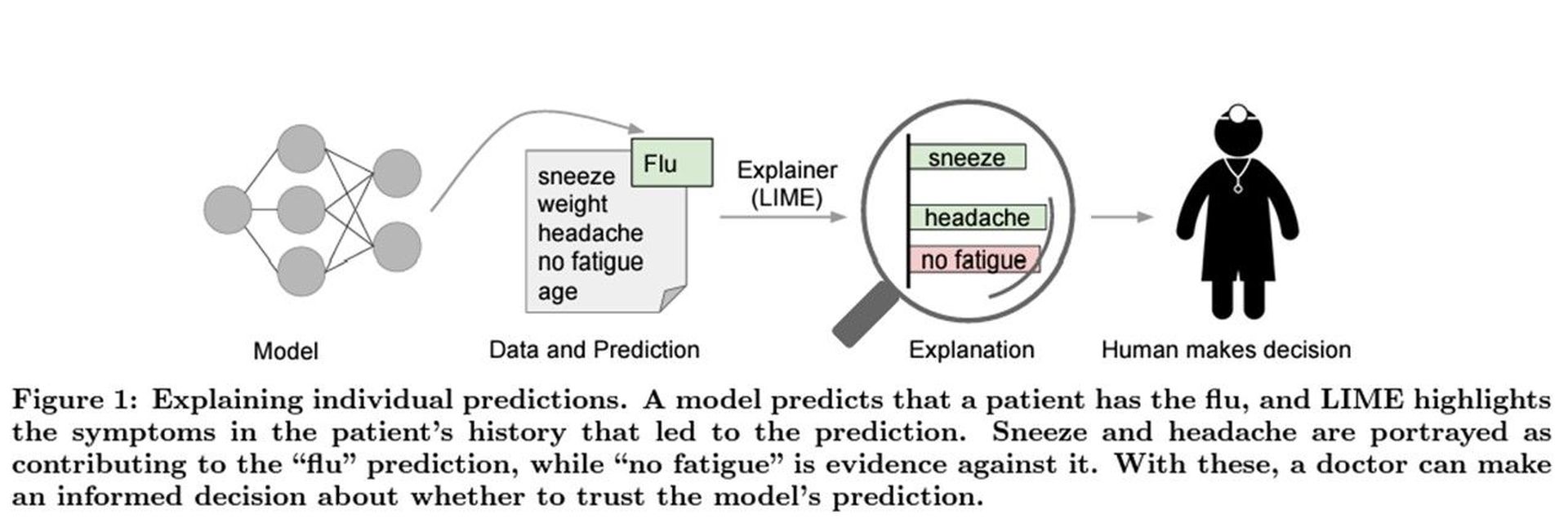

A key requirement for any decision-making system is its ability to explain its decisions. In data architecture, this aligns with the principles of transparency and accountability. However, GenAI models, particularly deep learning architectures, are notoriously difficult to interpret. While tools like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations; Ribeiro et al., 2016) offer some insights into model behavior, they are often insufficient for understanding the complex decision-making processes of large language models.
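SHAP builds on Shapley values from cooperative game theory. As a rough illustration of the idea (not the SHAP library's own algorithm, which relies on approximations), the sketch below computes exact Shapley values for a hypothetical three-feature scoring function by averaging each feature's marginal contribution over every feature ordering. The model, instance, and baseline are assumptions made up for this example.

```python
from itertools import permutations


def shapley_values(model, instance, baseline):
    """Exact Shapley attribution of model(instance) - model(baseline).

    Features are switched from their baseline value to their actual value
    one at a time, in every possible order, and each feature's marginal
    effect on the output is averaged over all orders.
    """
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = model(current)
        for i in order:
            current[i] = instance[i]
            value = model(current)
            totals[i] += value - previous
            previous = value
    return [total / len(orderings) for total in totals]


def toy_model(x):
    # Hypothetical scoring function with an interaction between x[1] and x[2].
    return 2.0 * x[0] + x[1] * x[2]


instance = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, instance, baseline)
print(phi)
```

The attributions sum to the difference between the model's output on the instance and on the baseline. The number of orderings grows factorially with the number of features, which is one reason exact attribution is out of reach for models with billions of parameters and thousands of input tokens.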

The challenge of interpretability is not merely academic; it has real-world implications. For instance, in the healthcare sector, decisions informed by AI must be explainable to clinicians to ensure they align with medical ethics and standards. The inability of GenAI to provide clear, understandable rationales for its decisions poses a significant barrier to its adoption in these contexts.

Moreover, the lack of interpretability is compounded by the dynamic nature of GenAI models. These systems are often updated with new data, which can alter their decision-making processes in unpredictable ways. This volatility makes it difficult to ensure consistent and reliable performance over time, a critical requirement in high-stakes decision-making environments.

Robustness and adversarial vulnerabilities

Robustness is a fundamental requirement for any system deployed in critical decision-making contexts. Unfortunately, GenAI models are particularly vulnerable to adversarial attacks—small, carefully crafted perturbations to input data that can cause the model to produce incorrect outputs. Goodfellow et al. (2014) demonstrated how even minor changes to an input image could lead to drastic misclassifications by deep neural networks.
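The attack described by Goodfellow et al. is the fast gradient sign method (FGSM), which nudges every input feature by a small step in the direction that increases the model's loss. The sketch below applies it to a hypothetical three-feature logistic regression classifier rather than a deep network; the weights, input, and step size are assumptions picked so the effect is easy to see.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(value):
    return (value > 0) - (value < 0)


def fgsm(weights, bias, x, label, epsilon):
    """Return x + epsilon * sign(gradient of the loss with respect to x).

    For binary cross-entropy on logistic regression, that gradient
    is (p - label) * w for each feature.
    """
    p = predict(weights, bias, x)
    gradient = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]


# Hypothetical model parameters and input.
weights = [2.0, -3.0, 1.0]
bias = 0.0
x = [0.2, -0.1, 0.1]
label = 1
epsilon = 0.2

x_adv = fgsm(weights, bias, x, label, epsilon)
p_clean = predict(weights, bias, x)
p_adv = predict(weights, bias, x_adv)
print(p_clean, p_adv)
```

In this toy setting no feature moves by more than 0.2, yet the predicted probability of the correct class falls from above 0.5 to below it, so the classification flips.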

In data architecture terms, the lack of robustness can be likened to vulnerabilities in a distributed system’s architecture. Just as a distributed system must be resilient to failures and attacks, so too must GenAI systems be resilient to adversarial inputs. However, current GenAI models lack the necessary safeguards to ensure robustness in adversarial settings. Techniques such as adversarial training and robust optimization have been proposed to address these issues, but they are not yet mature enough for deployment in critical decision-making scenarios.

Moreover, the complexity of GenAI models makes it difficult to implement effective monitoring and anomaly detection mechanisms. In traditional data architectures, monitoring systems track metrics like latency, throughput, and error rates to ensure system health. In contrast, GenAI systems require sophisticated monitoring techniques that can detect subtle shifts in model behavior, which may indicate adversarial attacks or model drift. The lack of such mechanisms is a significant barrier to the deployment of GenAI in critical contexts.
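One simple way to watch for shifts in model behavior is to compare the distribution of a model output, such as a confidence score, between a reference window and a live window. The sketch below uses the Population Stability Index (PSI), a metric borrowed from credit-risk monitoring and chosen here only as an example; the bin edges and score values are hypothetical.

```python
import math


def histogram(values, edges):
    """Count values per bin; the last bin includes its upper edge.

    Values outside the edges are ignored.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    return counts


def psi(reference, live, edges, floor=1e-6):
    """Population Stability Index between two samples over shared bins."""
    ref_counts = histogram(reference, edges)
    live_counts = histogram(live, edges)
    score = 0.0
    for r, l in zip(ref_counts, live_counts):
        # Floor empty bins so the logarithm stays defined.
        r_frac = max(r / len(reference), floor)
        l_frac = max(l / len(live), floor)
        score += (l_frac - r_frac) * math.log(l_frac / r_frac)
    return score


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
reference = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]   # hypothetical baseline scores
shifted = [0.8, 0.85, 0.9, 0.95, 0.9, 0.7, 0.8, 0.9]    # hypothetical live scores

print(psi(reference, reference, edges), psi(reference, shifted, edges))
```

Identical distributions give a PSI of zero, and larger values indicate a larger shift. Alert thresholds are a convention that each team has to calibrate. A score-level check like this would also miss changes in output quality that leave the score distribution intact.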

Data architecture considerations

The integration of GenAI into existing data architectures presents significant challenges. Traditional data architectures are designed around the trade-offs among consistency, availability, and partition tolerance described by the CAP theorem. However, GenAI models introduce new requirements related to data storage, processing, and access patterns.

Firstly, the scale of data required to train and fine-tune GenAI models necessitates distributed storage systems capable of handling petabytes of unstructured data. Moreover, the training process itself is computationally intensive, requiring specialized hardware such as GPUs and TPUs, as well as distributed training frameworks like Horovod (Sergeev & Del Balso, 2018).

Secondly, the deployment of GenAI models in production requires real-time inference capabilities. This introduces latency constraints that traditional batch processing architectures are not designed to handle. To address this, organizations must adopt streaming architectures that can process data in real time, leveraging technologies such as Apache Kafka and Apache Flink.

Finally, the integration of GenAI models into decision-making pipelines requires careful consideration of data governance and compliance. Data lineage, versioning, and auditing are critical components of a robust data architecture, ensuring that decisions can be traced back to specific data inputs and model versions. However, the dynamic nature of GenAI models—where models are continuously updated with new data—complicates these processes. Organizations must implement robust MLOps practices to manage the lifecycle of GenAI models, from training to deployment to monitoring.
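As a rough sketch of what tracing a decision back to its inputs and model version could look like, the example below writes a hash-chained audit record for each decision. The field names, version strings, and chaining scheme are all assumptions made for illustration and do not describe any particular MLOps tool.

```python
import hashlib
import json


def fingerprint(payload):
    """Stable SHA-256 digest of a JSON-serializable payload."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def audit_record(model_version, dataset_version, inputs, output, previous_hash):
    """Build one audit entry that links to the previous entry's hash."""
    record = {
        "model_version": model_version,
        "dataset_version": dataset_version,
        "input_hash": fingerprint(inputs),
        "output": output,
        "previous_hash": previous_hash,
    }
    record["record_hash"] = fingerprint(record)
    return record


def verify_chain(records):
    """Check that no record was altered and that the chain is unbroken."""
    previous = None
    for record in records:
        body = {k: v for k, v in record.items() if k != "record_hash"}
        if record["previous_hash"] != previous:
            return False
        if fingerprint(body) != record["record_hash"]:
            return False
        previous = record["record_hash"]
    return True


# Hypothetical decisions made by two different model versions.
first = audit_record("model-1.0", "data-2024-01", {"age": 41}, "approve", None)
second = audit_record("model-1.1", "data-2024-02", {"age": 29}, "deny",
                      first["record_hash"])
log = [first, second]
print(verify_chain(log))
```

Because each record stores the model version, the dataset version, and a hash of the input, an auditor can tell which model produced a given decision even after the model has been retrained. The hash chain makes later edits to the log detectable.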

Conclusion

While GenAI holds tremendous potential, it is not yet ready for deployment in critical decision-making scenarios. The challenges related to data dependency, interpretability, robustness, and data architecture integration are significant barriers that must be overcome before GenAI can be trusted in high-stakes environments. Until these issues are addressed, organizations should exercise caution in deploying GenAI systems in contexts where the cost of failure is high.

References

- Bender, E. M., Gebru, T., McMillan-Major, A., & Shmitchell, S. (2021). On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (FAccT ’21). https://doi.org/10.1145/3442188.3445922

- Bonawitz, K., Eichner, H., Grieskamp, W., Huba, D., Ingerman, A., Ivanov, V., … & Roselander, J. (2019). Towards federated learning at scale: System design. Proceedings of Machine Learning and Systems 2019.

- Ribeiro, M. T., Singh, S., & Guestrin, C. (2016). “Why should I trust you?” Explaining the predictions of any classifier. Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’16). https://doi.org/10.1145/2939672.2939778

- Goodfellow, I. J., Shlens, J., & Szegedy, C. (2014). Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572.

- Sergeev, A., & Del Balso, M. (2018). Horovod: fast and easy distributed deep learning in TensorFlow. arXiv preprint arXiv:1802.05799.

Featured image credit: Eray Eliaçık/Bing