The cart before the horse? What are we talking about:

You have the perfect scenario in front of you. You or your team just learnt about an exciting way to analyse data, found a new data-source that looks very promising, or were impressed by a new data visualisation tool. Understandably, you feel like you should put together a data-science project and show your organisation how you are exploring the frontiers of what is possible. Not only that, but once you deliver the project you will give the organisation a completely new set of data-driven insights. What could go wrong?

Surely, those decision-makers in your organisation who always complain about the quality, quantity and availability of data will jump at this opportunity, adopt your project results, and move closer to true evidence-based management. Unfortunately, more often than not, after hard work and a significant investment of resources, the new and shiny data analytics solution is not taken up as expected. If this story sounds familiar, it is because it is very common. Vendors, consultants and a long list of articles put the focus on the strengths and untapped potential of new analytics, data visualisations and the increasing volume and quality of data. All this creates excitement and an urge to act that is heightened by the fear of missing out (FOMO). However, in the rush to act we lose clarity about the actual problem that we are trying to solve.

Feeling the urge to act is not a bad thing; we need it in order to experiment and be agile. The problem arises when we systematically put “the cart before the horse” in data-science projects. In our eagerness to try out new tools and data, we forget about what should be our real driver: helping our stakeholders to solve very concrete challenges by providing the best possible data-driven decision-support. This article is a call to go back to basics and re-examine the drivers of our projects. My main aim here is to provide a few helpful tips to increase the chances of success and long-term adoption of data-science projects. For this, I use a simple set of guidelines whose objective is to re-calibrate our focus towards fundamental project drivers during the early stages of design and planning.

Back to basics: the driving challenge should always be in front of our cart

Before continuing, a note of caution: following the advice below will definitely slow you down at the start. This is a good thing. A data-science project can easily span multiple months, affect a large number of people and end up embedded within expensive systems full of hard-to-untangle dependencies. For this reason, it is best to front-load the conceptual and system-level design work as much as possible, so that we can avoid hard-to-reverse mistakes later.

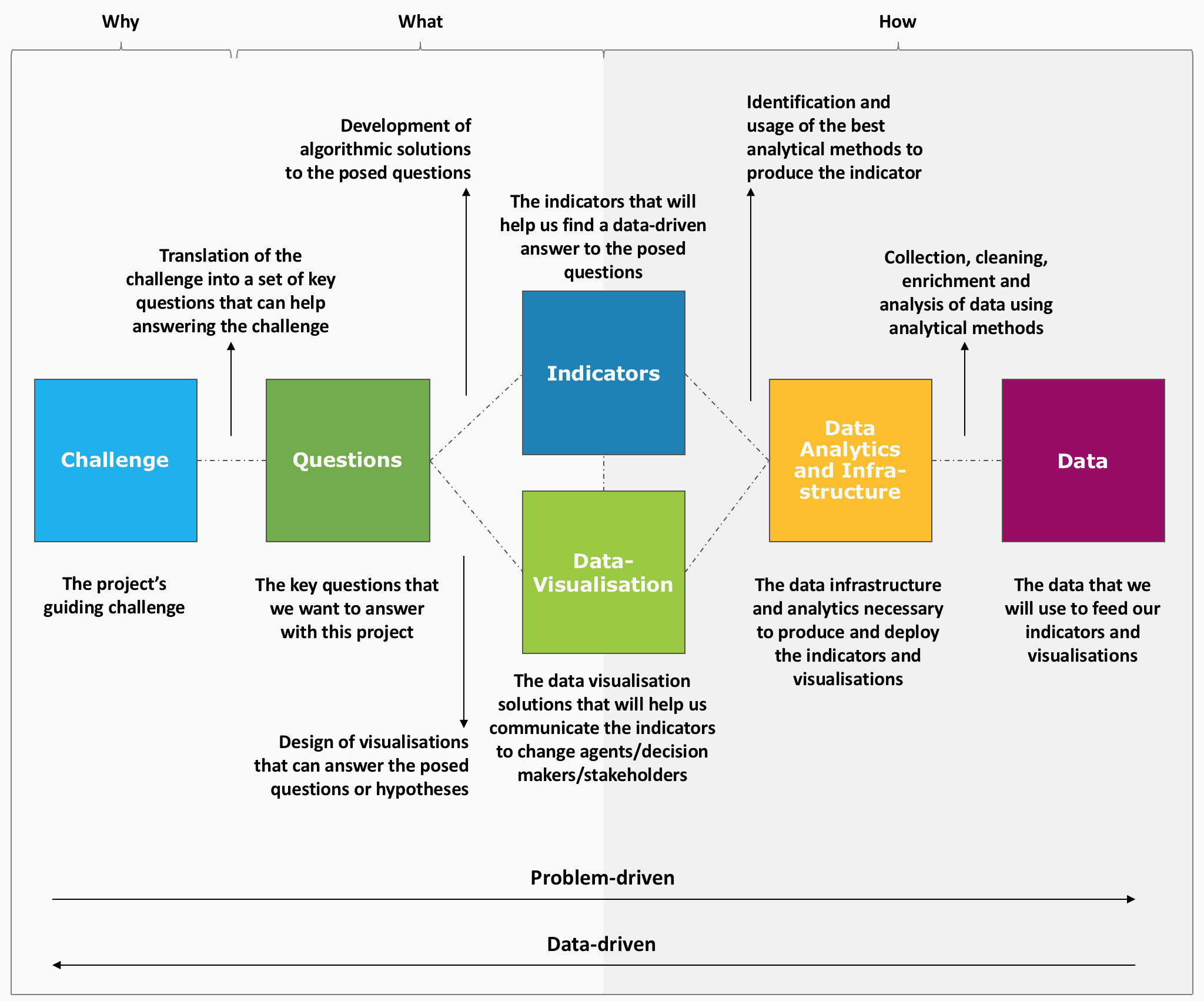

The main building blocks of the guideline are shown in Figure 1. Overall, the idea is to work through the process from left to right, starting with problem-driven approaches that focus first on the “why” and the “what”, and only afterwards on the “how”. Once the first left-to-right iteration is complete, we can move towards data-driven approaches, where the possibilities of data and tools become more prominent parts of our design and implementation decisions.

Figure 1: Overview of the problem-driven guidelines for data-science projects, including its key building blocks and relations

The proposed guideline is structured into six modular but interconnected building blocks that can be summarised as:

- The project’s guiding challenge, which should act as the objective by which we ultimately evaluate success

- The key questions that we want to answer with this project

- The indicators (they can often be expressed in the form of algorithms) that will help us find a data-driven answer to the posed questions

- The data visualisation solutions that will help us communicate the indicators to change-agents / decision-makers / stakeholders

- The analytics and data infrastructure necessary to produce and deploy the indicators and visualisations

- The data that we will use to feed our indicators and visualisations

We should strive to clarify each of the six building blocks and their interfaces as early as possible in the project. As we obtain new information during the course of the design and implementation of the project, we should be open to how such information affects the overall alignment and project scope.

In general, changes in the first building blocks have more cascading consequences than changes further down the list. For this reason, we should pay special attention to the definition of the overarching challenge and the key questions early on during the design process.
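One way to picture this cascading effect is to treat the six building blocks as an ordered list and ask which blocks sit downstream of a change. The sketch below is deliberately simplified: it assumes a strictly linear dependency from left to right, whereas the relations in Figure 1 may be richer, and the names `BUILDING_BLOCKS` and `blocks_to_review` are hypothetical, introduced only for illustration.

```python
from typing import List

# The six building blocks, in the left-to-right order used in this article.
BUILDING_BLOCKS: List[str] = [
    "challenge",
    "questions",
    "indicators",
    "visualisations",
    "infrastructure",
    "data",
]


def blocks_to_review(changed_block: str) -> List[str]:
    """Return the blocks downstream of a changed block.

    Assumption: every block depends on all the blocks to its left.
    """
    position = BUILDING_BLOCKS.index(changed_block)
    return BUILDING_BLOCKS[position + 1:]


# A change to the challenge touches everything after it...
print(blocks_to_review("challenge"))
# ...while a change late in the list has far fewer knock-on effects.
print(blocks_to_review("infrastructure"))  # ['data']
```

Under this assumption, redefining the challenge means revisiting five other blocks, while a change to the infrastructure only requires a second look at the data.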

In contrast, conventional data-science approaches to modelling the logical steps in a project typically start the other way around. A case in point is the “data collection => data analysis => interpretation” model, where we only get to understand the potential usefulness and value of the project at the end of the pipeline.

Applying this guideline in practice

Each data-science project is different and there is no silver bullet or one-size-fits-all solution. However, asking and collectively answering the right questions early on can be very helpful. Answering these questions contributes to making sure everyone is on the same page and exposes dangerous hidden assumptions, which might be either plain wrong or might not be shared among our key stakeholders. In this context, for the crucial first four building blocks we can identify a few important issues that should be discussed before starting any implementation work:

1) Challenge

- Challenge description: First, spend some time crafting a precise and clear formulation of the challenge that is compelling and shared across stakeholders. The challenge should be formulated so that we can come back at the end of the project and easily determine whether or not the project contributed to solving it.

- Identification of main stakeholders: List the main challenge stakeholders and briefly describe their roles. The list might include employees from different departments, clients, providers, regulators, etc.

- Description of the “pain” that justifies investing in solving this challenge: Start by specifying the current situation (including currently used tools, methods, processes etc.) and its limitations. Then describe the desired situation (the ideal solution to the challenge).

- Expected net-total value of solving this challenge in $: Assuming you are able to reach an “ideal” solution, make an effort to quantify in monetary terms the value that the organisation can capture by solving this challenge. This should be expressed as the incremental value of going from the current situation to the desired situation without considering development costs. The objective of this is to provide some context for the development budget and the maximum total effort that can be justified to solve the challenge.

- List your assumptions: Make explicit what is behind your assessment of the desired situation and your calculation of the expected incremental value of moving to it.
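The value estimate and its assumptions can be kept together in a small record, so that the arithmetic is easy to revisit when an assumption changes. The following is a minimal sketch, not a prescribed method: the class `ValueEstimate`, its fields, the three-year horizon and all figures are hypothetical, and reading the incremental value as a ceiling for the development budget is one possible interpretation of the guideline.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ValueEstimate:
    current_annual_value: float  # value captured in the current situation, in $
    desired_annual_value: float  # value captured in the desired situation, in $
    years: int                   # horizon over which the value is counted
    assumptions: List[str] = field(default_factory=list)

    def incremental_value(self) -> float:
        """Value of moving from current to desired, ignoring development costs."""
        return (self.desired_annual_value - self.current_annual_value) * self.years

    def is_budget_justified(self, development_budget: float) -> bool:
        """Assumption: a budget is only justifiable below the incremental value."""
        return development_budget < self.incremental_value()


# Hypothetical figures, for illustration only.
estimate = ValueEstimate(
    current_annual_value=200_000,
    desired_annual_value=350_000,
    years=3,
    assumptions=[
        "Decision-makers adopt the new indicators within the first year",
        "The desired situation is reachable with existing data sources",
    ],
)

print(estimate.incremental_value())          # 450000
print(estimate.is_budget_justified(120_000))  # True
```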

2) Questions

- Description of each question: Here is where we define each of the key questions, whose answers are needed inputs to tackle the identified challenge.

The questions should be described in ways that are answerable using data-driven algorithms. Typical questions can contain one or more of the following data-dimensions:

– Where (geographical/place)

– When (time)

– What (object/entity)

– Who (subject)

– How (process)

Example question: Who are the top organisations that I should first approach to develop a component for product “Y” and where are they located?

- Goal of each question: Is the question descriptive, predictive or prescriptive? What is the description, prediction or prescription that the question is looking for?

- Ranking of the questions: Rank the questions according to their overall importance to the project, so that if necessary they can be prioritised.
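To make the questions easy to compare and prioritise, they can be recorded in a simple structure that captures their data-dimensions, goal and rank. This is only an illustrative sketch: the `Question` class, its field names, the second question and the goal labels assigned to both questions are assumptions, not part of the guideline itself.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Question:
    text: str
    dimensions: List[str]  # any of: "where", "when", "what", "who", "how"
    goal: str              # "descriptive", "predictive" or "prescriptive"
    rank: int              # 1 = most important to the project


# Hypothetical questions; the goal labels are illustrative judgement calls.
questions = [
    Question(
        text="How has the number of component suppliers changed over time?",
        dimensions=["what", "when"],
        goal="descriptive",
        rank=2,
    ),
    Question(
        text="Who are the top organisations that I should first approach to "
             "develop a component for product Y and where are they located?",
        dimensions=["who", "where"],
        goal="prescriptive",
        rank=1,
    ),
]

# Sort by rank so that the most important questions are tackled first.
prioritised = sorted(questions, key=lambda q: q.rank)
for q in prioritised:
    print(q.rank, q.goal, q.dimensions, q.text)
```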

3) Indicators

- Description of each indicator: The indicators are the algorithmic solutions to the posed questions. Although at an early stage we might not be able to define a fully fledged algorithm, we can express them at a higher level of abstraction, indicating the type of algorithmic solutions that are most helpful and achievable. For example, two indicators are:

– Collaboration algorithm that provides a ranked list of potential collaborators for company [X] given relational, technological and geographical proximity

– Capability mapping algorithm that identifies main technology clusters in a given industry based on the co-occurrence of key R&D-related terms
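At this level of abstraction, both example indicators can be sketched in a few lines. The code below is a rough illustration under stated assumptions, not the algorithms used in any actual project. For the collaboration indicator it assumes that each proximity is already available as a score between 0 and 1 and combines them with hypothetical weights. For the capability mapping indicator it only counts how often pairs of terms appear together, which would be the input to a later clustering step that is not shown. All names, scores, weights and terms are made up.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, List, Set, Tuple

# Hypothetical proximity scores (0 to 1) between company X and each candidate.
candidates: Dict[str, Dict[str, float]] = {
    "Org A": {"relational": 0.8, "technological": 0.6, "geographical": 0.9},
    "Org B": {"relational": 0.2, "technological": 0.9, "geographical": 0.4},
    "Org C": {"relational": 0.5, "technological": 0.5, "geographical": 0.5},
}

# Hypothetical weights expressing how much each proximity matters.
weights: Dict[str, float] = {
    "relational": 0.4,
    "technological": 0.4,
    "geographical": 0.2,
}


def rank_collaborators(
    candidates: Dict[str, Dict[str, float]], weights: Dict[str, float]
) -> List[Tuple[str, float]]:
    """Rank candidates by the weighted sum of their proximity scores."""
    scored = [
        (name, sum(weights[key] * proximity[key] for key in weights))
        for name, proximity in candidates.items()
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)


def term_cooccurrence(documents: List[Set[str]]) -> Counter:
    """Count how many documents mention each pair of terms together."""
    counts: Counter = Counter()
    for terms in documents:
        for pair in combinations(sorted(terms), 2):
            counts[pair] += 1
    return counts


ranking = rank_collaborators(candidates, weights)
print([name for name, _ in ranking])  # ['Org A', 'Org B', 'Org C']

# Hypothetical R&D-related terms extracted from three documents.
documents = [
    {"battery", "electrolyte", "anode"},
    {"battery", "anode"},
    {"sensor", "lidar"},
]
cooccurrence = term_cooccurrence(documents)
print(cooccurrence[("anode", "battery")])  # 2
```

Even a rough sketch like this makes the open design questions visible early: where the proximity scores come from, who decides the weights, and which terms count as R&D-related.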

4) Data visualisations

- Define the target data visualisations: Before writing a line of code or using any data, talk to the people who should benefit from the project’s deliverables. They can provide critical information about the data-visualisation formats that would be most useful for them.

A simple but powerful approach is to ask those target users to sketch the types of visual representations that they think would best communicate the results that the indicators in point 3 should produce.

Other important features to consider when defining the data visualisations include simplicity, familiarity, intuitiveness, and fit with the question dimensions.

- Characteristics of each data visualisation: Characteristics of the data-visualisation solution include:

– The degree of required interactivity

– The number of dimensions that should be displayed simultaneously

- Purpose of each data visualisation: The purpose of a visualisation can be:

– Exploration: provide free means to dig into the data and analyse relations without pre-defining specific insights or questions

– Narrative: the data visualisation is crafted to deliver a predefined message in a way that is convincing for the target user and aims at providing strong data-driven arguments

– Synthesis: the main focus of the visualisation is to integrate multiple angles of an often complex data-set, condensing key features of the data in an intuitive and accessible format

– Analysis: the visualisation helps to divide an often large and complex data-set into smaller pieces, features or dimensions that can be treated separately

Wrap-up

Data science projects suffer from a tendency to over-emphasise the analytics, visualisations, data and infrastructure elements of the project too early in their design process. This translates into spending too little time in the early stages on working with project stakeholders towards a joint definition of the challenge, on identifying the right questions, and on understanding the type of indicators and visualisations that are necessary and usable to answer those questions.

The guideline introduced in this article sought to share learning points derived from working with a new framework that helps to guide the early stages of data-driven projects and make more explicit the interdependencies between design decisions. The framework integrates elements from design and systems thinking as well as practical project experience.

The main building blocks of this framework were developed during work produced for EURITO (Grant Agreement n° 770420). EURITO is a European Union Horizon 2020 research and innovation framework project that seeks to build “Relevant, Inclusive, Timely, Trusted, and Open Research Innovation Indicators” leveraging new data sources and advanced analytics.

For more information about this and related topics you can visit www.parraguezr.net and www.eurito.eu

For more on how to ask good questions, I recommend the book “A More Beautiful Question: The Power of Inquiry to Spark Breakthrough Ideas”. For ideas about how to include design thinking in data science projects, I recommend the book “The Design Thinking Playbook: Mindful Digital Transformation of Teams, Products, Services, Businesses and Ecosystems”.

Pedro Parraguez will be speaking at Data Natives 2018, the data-driven conference of the future, hosted in Dataconomy’s hometown of Berlin. On the 22nd & 23rd November, 110 speakers and 1,600 attendees will come together to explore the tech of tomorrow. As well as two days of inspiring talks, Data Natives will also bring informative workshops, satellite events, art installations and food to our data-driven community, promising an immersive experience in the tech of tomorrow.