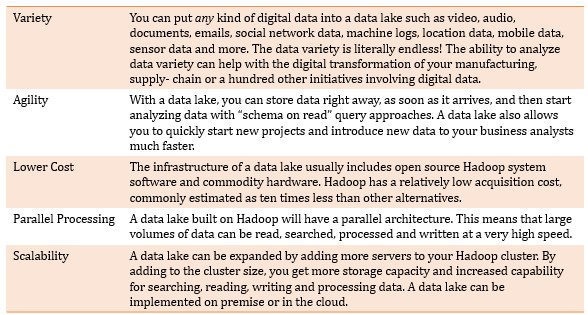

Data lakes are based on a simple idea: You can store and analyze massive amounts of raw data at scale. It is no surprise that many IT leaders are excited about them.

Unfortunately, lots of companies end up with a data swamp instead of a data lake. A data swamp is a place where:

- You can’t find the specific data you seek

- You don’t know the source of any given file

- You don’t know what is included or excluded from any given file

- You have multiple copies of the same file or object

- You don’t know the quality characteristics of any file

- You often can’t practically integrate data from different sources

After as little as three months of actual operation, many data lakes degenerate into data swamps and are unusable for business purposes. The result is an IT operational nightmare in which investments are lost and critical business goals are compromised.

So, how do you create a data lake that doesn’t turn into a swamp?

Think about a water reservoir, which is a place where water is stored until needed, under controlled conditions. Water reservoirs don’t turn into swamps because they are managed. What goes into them and what comes out of them are controlled. Reservoirs are built to minimize biological and chemical contamination, and best practices are followed in maintaining them. If all is done right, you can actually drink water that comes from a reservoir.

To avoid a data swamp, set out to build a data reservoir, based on a systematic approach, sound architecture and a set of best practices.

The Systematic Approach

How do most people approach the creation of a data lake? They say, “We’ll figure it out as we go along.” But as soon as word gets out that a data lake exists, employees start adding data to it. Data comes in very rapidly, and each user does things his or her own way. Before you know it, you have the proverbial data swamp.

A better approach is one in which major problems are anticipated, solutions are defined in advance and users work together.

Let’s take the example of sharing data. Imagine you want a copy of all the tweets relevant to your business. If the tweets have already been obtained for one employee, you don’t want someone else to go to another vendor and buy them again. Effective data sharing is fundamental to the business value of the data reservoir.

It’s also important for users to be able to find what data is already present in the data lake and to learn enough about it to tell whether it is suitable for use. This requires an architecture and best practices that take data sharing into account. You will also need tools that make it easy to search data and their associated metadata.
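To make the sharing idea concrete, here is a minimal sketch of a searchable metadata catalog in Python. The `Catalog` and `DatasetEntry` names, their fields and the sample dataset are hypothetical; a real reservoir would typically rely on a dedicated catalog tool, but the principle is the same: register what arrives, and search before acquiring it again.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    """Hypothetical catalog record describing one dataset in the reservoir."""
    name: str
    source: str          # where the data came from (vendor, system, ...)
    description: str
    owner: str
    tags: set[str] = field(default_factory=set)


class Catalog:
    """A minimal in-memory metadata catalog (illustrative only)."""

    def __init__(self) -> None:
        self._entries: dict[str, DatasetEntry] = {}

    def register(self, entry: DatasetEntry) -> None:
        # Refuse duplicate names so each dataset has one authoritative entry.
        if entry.name in self._entries:
            raise ValueError(f"dataset {entry.name!r} is already registered")
        self._entries[entry.name] = entry

    def search(self, term: str) -> list[DatasetEntry]:
        # Case-insensitive match against name, description and tags.
        t = term.lower()
        return [
            e for e in self._entries.values()
            if t in e.name.lower()
            or t in e.description.lower()
            or any(t in tag.lower() for tag in e.tags)
        ]


catalog = Catalog()
catalog.register(DatasetEntry(
    name="social_tweets_2024",
    source="vendor-feed",
    description="Tweets mentioning our brands",
    owner="marketing-analytics",
    tags={"social", "twitter"},
))

# Before buying tweets again, a second team checks what already exists.
hits = catalog.search("tweets")
print([e.name for e in hits])  # ['social_tweets_2024']
```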

Organizations should anticipate the importance of data sharing from the beginning of a data lake project so that data is shared and reused throughout the organization. All things considered, a successful data lake approach will identify fundamental issues up front and address them with an integrated architecture and essential best practices.

Sound Architecture

As mentioned above, one component of data reservoir architecture is the management of metadata to encourage and support data reuse. Here are other key capabilities:

Data ingestion: It must be straightforward and efficient to bring data from a new source into the data reservoir. In particular, custom coding and scripting should be avoided.
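One way to avoid per-source scripts is to describe each source declaratively and let a single generic routine do the parsing. The sketch below assumes only two formats (CSV and JSON Lines) and uses hypothetical names (`SourceSpec`, `ingest`, `SOURCES`); it illustrates the idea rather than any particular ingestion product.

```python
import csv
import io
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceSpec:
    """Hypothetical declarative description of a data source."""
    name: str
    fmt: str              # "csv" or "jsonl"
    delimiter: str = ","  # only used for csv


def ingest(spec: SourceSpec, raw: str) -> list[dict]:
    """Parse raw text into records using only the spec, with no per-source code."""
    if spec.fmt == "csv":
        reader = csv.DictReader(io.StringIO(raw), delimiter=spec.delimiter)
        return [dict(row) for row in reader]
    if spec.fmt == "jsonl":
        return [json.loads(line) for line in raw.splitlines() if line.strip()]
    raise ValueError(f"unsupported format {spec.fmt!r} for source {spec.name}")


# Adding a new source means adding a spec entry, not writing a new script.
SOURCES = {
    "crm_accounts": SourceSpec("crm_accounts", "csv", delimiter=";"),
    "web_events": SourceSpec("web_events", "jsonl"),
}

accounts = ingest(SOURCES["crm_accounts"], "id;name\n1;Acme\n2;Globex\n")
events = ingest(SOURCES["web_events"], '{"user": 1, "page": "/home"}\n')
print(accounts)  # [{'id': '1', 'name': 'Acme'}, {'id': '2', 'name': 'Globex'}]
print(events)    # [{'user': 1, 'page': '/home'}]
```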

Archiving data as sourced: Many data reservoirs require that a copy of the data as originally received be available for audit, traceability, reproducibility and some data science techniques. This calls for an automatic and efficient way to archive a copy of the source data, usually with lossless compression and sometimes in encrypted form.
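A minimal sketch of archiving data as sourced, assuming files arrive as raw bytes: the copy is compressed losslessly with gzip, and a SHA-256 digest of the original bytes is recorded so an auditor can later confirm that the archive is unchanged. The function names and the metadata layout are hypothetical, and encryption is left out.

```python
import gzip
import hashlib
import tempfile
from pathlib import Path


def archive_as_sourced(raw: bytes, archive_dir: Path, name: str) -> dict:
    """Store a gzip-compressed copy of the data exactly as received.

    Returns a hypothetical metadata record that includes a SHA-256 digest of
    the original bytes. Encryption would be a separate step and is not shown.
    """
    archive_dir.mkdir(parents=True, exist_ok=True)
    path = archive_dir / f"{name}.gz"
    path.write_bytes(gzip.compress(raw))
    return {
        "name": name,
        "path": str(path),
        "sha256": hashlib.sha256(raw).hexdigest(),
        "original_bytes": len(raw),
    }


def verify_archive(record: dict) -> bool:
    """Decompress the archived copy and check it against the recorded digest."""
    restored = gzip.decompress(Path(record["path"]).read_bytes())
    return hashlib.sha256(restored).hexdigest() == record["sha256"]


with tempfile.TemporaryDirectory() as tmp:
    rec = archive_as_sourced(b"id;name\n1;Acme\n", Path(tmp), "crm_accounts_raw")
    print(verify_archive(rec))  # True
```

Because gzip is lossless, decompressing the archive reproduces the received bytes exactly, which is what makes the digest check meaningful.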

Data transformation: The full set of transformations needed to prepare data for analytics in a given data reservoir is likely to be large. The goal should be to keep custom programming to a minimum.
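A sketch of keeping custom programming to a minimum: transformations are expressed as data (a list of field/operation rules) and executed by one generic function against a shared library of operations. The operation names and the rule format are assumptions made for illustration.

```python
from typing import Callable

# Hypothetical library of reusable, tested operations. Pipelines reference
# them by name instead of embedding custom code.
OPERATIONS: dict[str, Callable[[str], object]] = {
    "strip": str.strip,
    "upper": str.upper,
    "to_int": int,
    "to_float": float,
}


def transform(record: dict, rules: list[tuple[str, str]]) -> dict:
    """Apply (field, operation_name) rules in order; other fields pass through."""
    out = dict(record)
    for field_name, op_name in rules:
        out[field_name] = OPERATIONS[op_name](out[field_name])
    return out


# A transformation is now data (a list of rules), not a program.
rules = [("country", "strip"), ("country", "upper"), ("amount", "to_float")]
print(transform({"country": " de ", "amount": "19.90"}, rules))
# {'country': 'DE', 'amount': 19.9}
```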

Data publication: Data can be used only once ingestion, transformation and metadata capture are complete. The act of “publication” then makes the data ready for a specific class of reports, dashboards or queries.
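Publication can be treated as a gate that opens only when the earlier stages are done. In this hypothetical sketch, a dataset records which stages it has completed, and `publish` refuses to release it to an audience (such as a dashboard) until ingestion, transformation and metadata capture have all finished.

```python
from dataclasses import dataclass, field

# Stages that must finish before publication (assumed names).
REQUIRED_STAGES = ("ingested", "transformed", "metadata_captured")


@dataclass
class Dataset:
    name: str
    completed: set[str] = field(default_factory=set)
    published_for: set[str] = field(default_factory=set)


def publish(ds: Dataset, audience: str) -> None:
    """Mark a dataset as ready for one class of consumers.

    Publication is refused until every prerequisite stage has completed.
    """
    missing = [s for s in REQUIRED_STAGES if s not in ds.completed]
    if missing:
        raise RuntimeError(f"{ds.name} cannot be published; missing: {missing}")
    ds.published_for.add(audience)


sales = Dataset("daily_sales", completed={"ingested", "transformed"})
try:
    publish(sales, "sales_dashboard")
except RuntimeError as err:
    print(err)  # daily_sales cannot be published; missing: ['metadata_captured']

sales.completed.add("metadata_captured")
publish(sales, "sales_dashboard")
print(sales.published_for)  # {'sales_dashboard'}
```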

Security: A data reservoir should manage access to data objects, to certain services (such as Hive or HBase), to specific applications and to the Hadoop cluster itself. A security architecture and strategy should protect the perimeter, handle identification and authorization of users, control access to data, address needs for encryption, masking and tokenization, and comply with requirements for logging, reporting and auditing.
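Masking and tokenization are easy to confuse, so here is a small sketch of each, under stated assumptions. Masking hides most of a value for display. The tokenization shown here is a keyed hash (HMAC-SHA256), a simple stand-in that yields the same token for the same input so tokenized columns can still be joined; vault-based tokenization, which lets authorized users recover the original, is a common alternative. The key below is a placeholder.

```python
import hashlib
import hmac


def mask(value: str, visible: int = 4, fill: str = "*") -> str:
    """Hide all but the last `visible` characters, e.g. for a card number."""
    if len(value) <= visible:
        return fill * len(value)
    return fill * (len(value) - visible) + value[-visible:]


def tokenize(value: str, key: bytes) -> str:
    """Replace a value with a deterministic keyed hash (HMAC-SHA256).

    The same input always yields the same token, but the original value
    cannot be recovered from the token itself.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical placeholder; real keys belong in a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"

print(mask("4111111111111111"))  # ************1111
t1 = tokenize("alice@example.com", SECRET_KEY)
t2 = tokenize("alice@example.com", SECRET_KEY)
print(t1 == t2, len(t1))  # True 64
```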

Operations and management: When a data reservoir is operating at full scale, there will be many data pipelines in operation at the same time, each ingesting, transforming and publishing data. There will also be processes for consuming data, either via extract and download or via interactive reporting and query.
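With many pipelines running at once, operators need a consolidated view of what is running and what has failed. The sketch below assumes a hypothetical `PipelineRun` record per pipeline stage and summarizes runs by status; in practice this view would be fed by whatever scheduler or monitoring system the reservoir uses.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class PipelineRun:
    pipeline: str
    stage: str    # "ingest", "transform" or "publish"
    status: str   # "running", "succeeded" or "failed"


def summarize(runs: list[PipelineRun]) -> dict:
    """Give operators a one-glance view of many concurrent pipelines."""
    return {
        "by_status": dict(Counter(r.status for r in runs)),
        "failed": sorted(f"{r.pipeline}/{r.stage}" for r in runs if r.status == "failed"),
    }


runs = [
    PipelineRun("crm_accounts", "ingest", "succeeded"),
    PipelineRun("web_events", "transform", "running"),
    PipelineRun("daily_sales", "publish", "failed"),
]
print(summarize(runs))
# {'by_status': {'succeeded': 1, 'running': 1, 'failed': 1}, 'failed': ['daily_sales/publish']}
```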

Best Practices

In the creation of data pipelines (ingestion, transformation and publication), the key best practice is to perform every necessary step correctly so that data is consistently usable. This means capturing metadata at every touch point, handling exceptions correctly at every step, completing appropriate data quality checks, efficiently handling both incorrect and correct data, and performing any data conversions or normalizations according to specified standards.
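The practices above can be combined in a single pipeline step. This hypothetical sketch checks required fields, quarantines incorrect records with a reason instead of silently dropping them, normalizes e-mail addresses (an assumed standard: trimmed and lower-cased), and captures run metadata. Field names and the metadata layout are illustrative.

```python
from datetime import datetime, timezone


def run_step(
    step_name: str, records: list[dict], required: tuple[str, ...]
) -> tuple[list[dict], list[dict], dict]:
    """Run one hypothetical pipeline step with checks, quarantine and metadata."""
    good: list[dict] = []
    quarantined: list[dict] = []
    for rec in records:
        try:
            # Data quality check: required fields must be present and non-empty.
            missing = [f for f in required if not rec.get(f)]
            if missing:
                raise ValueError(f"missing fields: {missing}")
            clean = dict(rec)
            # Normalization to an assumed standard: trimmed, lower-case e-mail.
            if "email" in clean:
                clean["email"] = str(clean["email"]).strip().lower()
            good.append(clean)
        except ValueError as exc:
            # Incorrect data is kept with its reason so it can be fixed and replayed.
            quarantined.append({"record": rec, "reason": str(exc)})
    # Metadata captured at this touch point.
    metadata = {
        "step": step_name,
        "finished_at": datetime.now(timezone.utc).isoformat(),
        "records_in": len(records),
        "records_ok": len(good),
        "records_quarantined": len(quarantined),
    }
    return good, quarantined, metadata


rows = [
    {"id": "1", "email": "  Ann@Example.COM "},
    {"id": "2", "email": ""},
]
ok, bad, meta = run_step("normalize_customers", rows, required=("id", "email"))
print(ok)   # [{'id': '1', 'email': 'ann@example.com'}]
print(bad)  # [{'record': {'id': '2', 'email': ''}, 'reason': "missing fields: ['email']"}]
print(meta["records_ok"], meta["records_quarantined"])  # 1 1
```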

Relevant standards and templates should also be used consistently across the data reservoir. That way, your data provides a sound foundation for the rigorous analysis that significant business decisions and actions depend on.

Ready to cultivate your reservoir? Data lakes are now so common that your organization is likely to have one soon, if it does not already. However, in as little as three months of actual operation, most “figure it out as you go” data lakes are going to be unusable for business purposes.

Instead of a data swamp that no one uses, you can have a successful data reservoir in which data is well organized and provides increasing value.
