The line between what’s real and what’s manufactured is getting awfully blurry these days, and YouTube has a plan to address it.

YouTube, the go-to place for watching just about anything, is stepping in with new rules to make sure we can tell the difference.

They know, like the rest of us, that AI’s ability to create videos is both amazing and a little bit scary.

YouTube’s transparency initiative

The problem is that AI can be used to create videos that are nearly impossible to tell apart from real footage. Deepfakes, where someone’s likeness and voice are manipulated, can be harmful tools of impersonation. Synthetic voices might narrate videos with deceptive information, and even seemingly genuine videos could contain subtle AI-assisted edits. The potential for misuse is very real.

Think about it: AI can swap out someone’s face and voice in a video, making them say and do things they never did. That’s the whole deepfake thing, and it gets disturbing fast. Then there are those computer-made voices that sound so real, you could swear you’re listening to an actual person… until you realize it’s just a program spouting lies. Even “regular” videos might have sneaky AI edits that change the whole story.

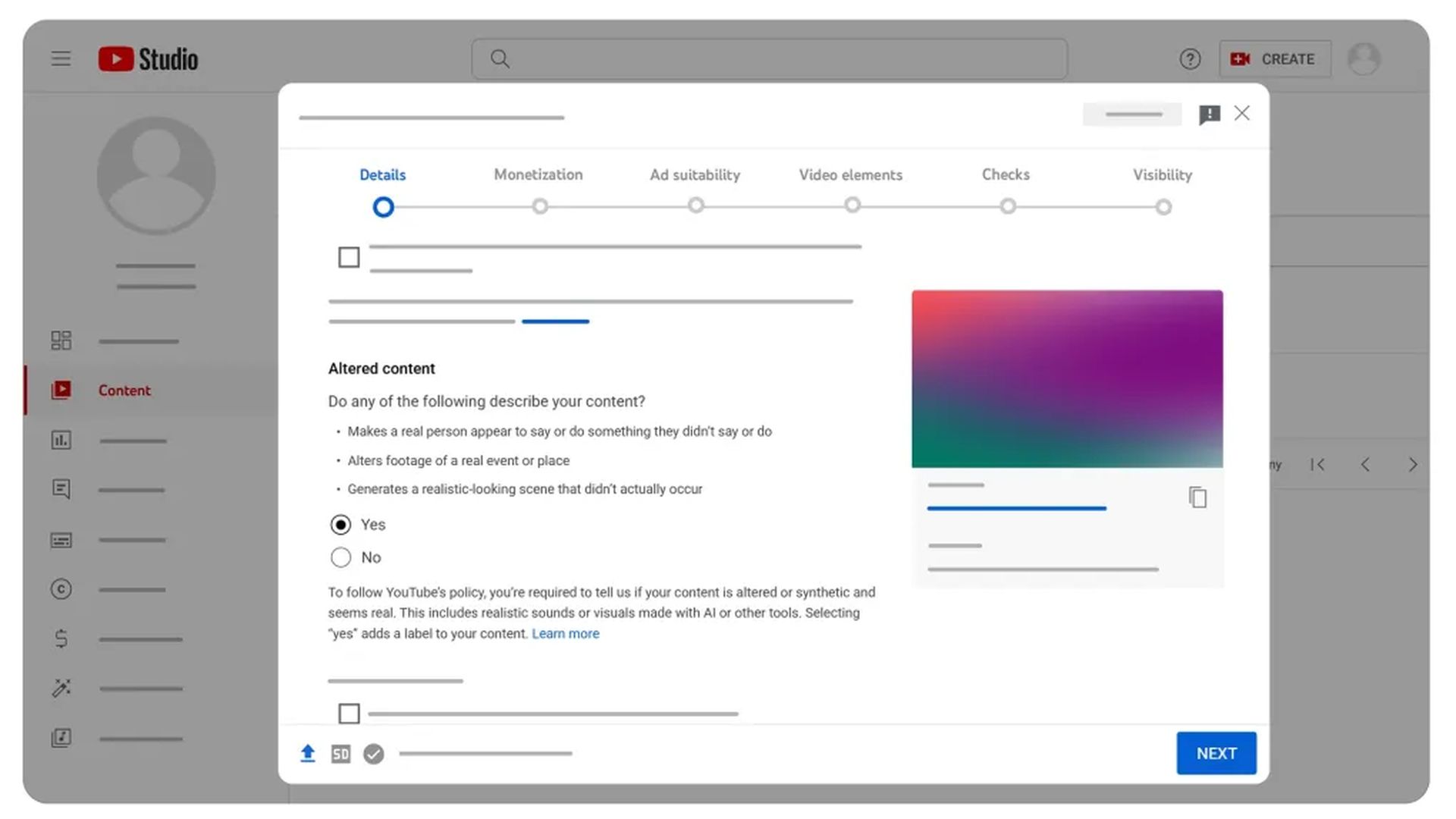

To combat this, YouTube is asking creators to disclose when their videos feature AI-generated elements that aren’t immediately obvious. This could include adding a note to the video’s description.

Additionally, videos addressing sensitive topics like politics or health might receive a more prominent label directly on the video player for extra context.

YouTube is also likely developing systems to automatically detect videos that may contain undisclosed AI elements. This is crucial because a human review of every single upload simply isn’t feasible. These AI detection tools would scan for patterns and anomalies that indicate digital manipulation. This could range from analyzing the consistency of a speaker’s voice patterns to detecting subtle visual glitches introduced by deepfake technology.

Automated detection has a catch, though: as AI generation techniques advance, the detection systems must constantly evolve as well, creating a sort of technological arms race.

Manipulation vs. creative expression

There’s a difference between deceptive videos meant to cause harm and creators using AI for artistic purposes. Think of those fantastical music videos where everything morphs and transforms: obviously not real, but amazing to watch. Or filmmakers using AI to create worlds that would otherwise be impossible to put on screen.

Where do we draw the line between harmful deception and exciting new forms of entertainment and storytelling?

Honesty is the best policy

Okay, sure, sometimes it’s just fun to watch those silly deepfakes of politicians singing pop songs. But when it comes to the serious stuff, knowing what’s real matters a whole lot.

Misinformation spreads like wildfire (remember those TikTok videos of diesel trucks on fire?), and if people believe something just because it’s in a video, that’s a problem. Plus, think about the person whose face is plastered onto some video they never wanted to be in. It’s just not right. That’s where transparency protects both us as viewers and the folks who might end up the target of a deceptive AI video.

YouTube taking a stand is a good start, but we can’t get complacent. Technology changes fast, and those trying to mislead us are going to get cleverer too. It’s up to us viewers to keep our thinking caps on.

Question what you see, don’t just take a video at face value, and always be willing to dig deeper to find out if something’s the real deal.

Featured image credit: Freepik.