Banks that still rely on static bureau scorecards for underwriting are losing speed, precision, and share in segments where data volume grows every quarter. Product teams now tune pricing, limits, and engagement through the entire lifecycle based on how risk evolves month by month. That shift depends on treating underwriting as an AI-first, data-centric product capability rather than a set of legacy rules.

Credit organizations already possess dense behavioral signals, including transaction flows, income volatility, arrears patterns, device fingerprints, digital logins, and merchant graphs. When teams couple those streams with modern machine learning, they move from coarse “approve/decline” scorecards to dynamic risk intelligence.

Empirical work on card and SME portfolios consistently shows that tree ensembles, such as gradient boosting and random forests, outperform logistic regression on AUC and F1, especially when non-traditional features enter the pipeline.
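To pin down the two metrics named above, here is a minimal pure-Python sketch of AUC and F1 for a default classifier. The function names, the convention that label 1 means "default", and the toy data are illustrative assumptions and are not drawn from any particular study.

```python
def auc(labels, scores):
    """Probability that a random defaulter scores higher than a random
    non-defaulter; ties count as half a win."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one defaulter and one non-defaulter")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def f1(labels, scores, threshold):
    """F1 for the 'default' class when scores >= threshold are flagged."""
    pairs = list(zip(labels, scores))
    tp = sum(1 for y, s in pairs if y == 1 and s >= threshold)
    fp = sum(1 for y, s in pairs if y == 0 and s >= threshold)
    fn = sum(1 for y, s in pairs if y == 1 and s < threshold)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy portfolio: 1 = defaulted, 0 = repaid (hypothetical data).
toy_labels = [1, 1, 0, 0]
toy_scores = [0.9, 0.4, 0.5, 0.1]
toy_auc = auc(toy_labels, toy_scores)
toy_f1 = f1(toy_labels, toy_scores, threshold=0.5)
```

AUC is threshold-free, while F1 depends on the chosen cut-off, so any comparison between a scorecard and a tree ensemble should report the threshold alongside the F1 figure.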

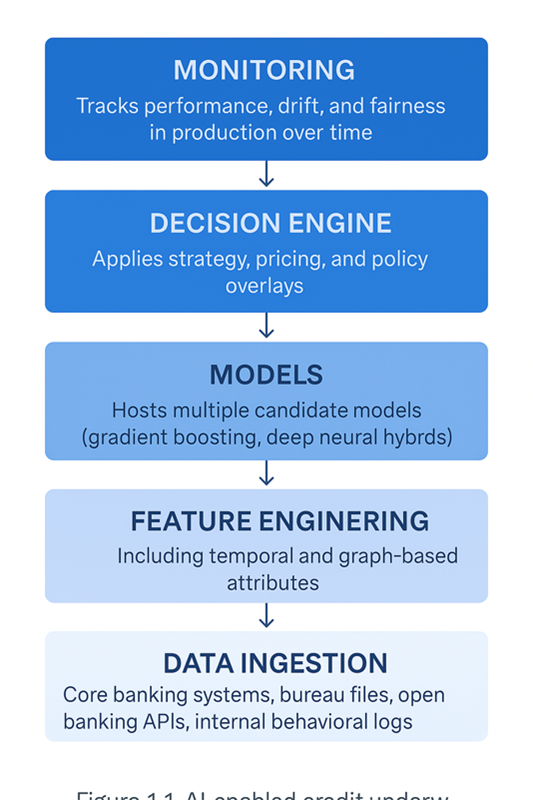

From single score to five-layer AI underwriting stack

Modern underwriting works best as a modular stack rather than a monolith.

Layer 1 – Data intake

Core banking systems, bureau files, open banking APIs, and external providers from telecom, utilities, e-commerce, and registries feed a unified intake layer. The goal is to keep relevant financial and behavioral traces in a single, governed environment with lineage, quality checks, and near-real-time refresh.

Layer 2 – Feature engine

Raw records are then converted into features that actually drive risk separation: cash-flow volatility, income proxies, spend clusters, device and session stability, repayment regularity, and network descriptors for SMEs. Teams treat this layer as a product with versioning, documentation, and clear ownership.
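As a rough illustration of two of the features listed above, the sketch below computes cash-flow volatility and repayment regularity from raw records and tags the output with a feature-set version. The specific definitions (coefficient of variation for volatility, share of on-time instalments for regularity), the function names, and the `FEATURE_SET_VERSION` tag are assumptions chosen for the example; real feature libraries will define these differently.

```python
from statistics import mean, pstdev

FEATURE_SET_VERSION = "v1"  # hypothetical version tag for this feature set


def cash_flow_volatility(monthly_net_inflows):
    """Coefficient of variation of monthly net inflows (assumed definition)."""
    if len(monthly_net_inflows) < 2:
        raise ValueError("need at least two months of history")
    avg = mean(monthly_net_inflows)
    if avg == 0:
        # Ratio is undefined; treat the account as maximally volatile.
        return float("inf")
    return pstdev(monthly_net_inflows) / abs(avg)


def repayment_regularity(days_late_per_instalment):
    """Share of instalments paid on or before the due date (assumed definition)."""
    if not days_late_per_instalment:
        raise ValueError("no instalments to evaluate")
    on_time = sum(1 for d in days_late_per_instalment if d <= 0)
    return on_time / len(days_late_per_instalment)


def build_features(monthly_net_inflows, days_late_per_instalment):
    """Return a versioned feature record for downstream models."""
    return {
        "feature_set_version": FEATURE_SET_VERSION,
        "cash_flow_volatility": cash_flow_volatility(monthly_net_inflows),
        "repayment_regularity": repayment_regularity(days_late_per_instalment),
    }
```

Carrying the version tag in every record lets downstream models and monitoring jobs tell which definition of a feature they were fed.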

Layer 3 – Model garden

Candidate models run on top: gradient-boosted trees, hybrid architectures, and temporal neural networks that read sequences of transactions. Sequence models preserve the temporal structure of payment behavior while remaining compatible with explainability techniques, such as SHAP timelines or saliency maps.

Layer 4 – Decision engine

Strategy lives here: eligibility rules, risk-based pricing, limit sizing logic, early-warning triggers, and manual review queues. Product managers experiment with different cut-offs, champion–challenger strategies, and treatment paths without touching the underlying model code.
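One way to picture the separation between strategy and model is to hold cut-offs, pricing, and limit parameters in a plain configuration object that the decision function reads. The sketch below is a minimal example under that assumption; the `Strategy` fields, the linear pricing and limit formulas, and all numeric values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Strategy:
    name: str
    decline_above: float  # estimated PD above which applications are declined
    review_above: float   # estimated PD above which applications go to manual review
    base_apr: float       # APR offered at zero estimated risk
    risk_premium: float   # APR added per unit of estimated PD
    max_limit: float      # limit offered at zero estimated risk


def decide(pd_estimate, strategy):
    """Map a model's probability-of-default estimate to a treatment."""
    if not 0.0 <= pd_estimate <= 1.0:
        raise ValueError("pd_estimate must lie in [0, 1]")
    if pd_estimate > strategy.decline_above:
        return {"strategy": strategy.name, "action": "decline"}
    if pd_estimate > strategy.review_above:
        return {"strategy": strategy.name, "action": "manual_review"}
    apr = strategy.base_apr + strategy.risk_premium * pd_estimate
    # Limit shrinks linearly as the estimate approaches the review cut-off.
    limit = strategy.max_limit * (1.0 - pd_estimate / strategy.review_above)
    return {
        "strategy": strategy.name,
        "action": "approve",
        "apr": round(apr, 4),
        "limit": round(limit, 2),
    }


# Two strategies over the same model output (illustrative numbers only).
champion = Strategy("champion", 0.20, 0.10, 0.12, 0.50, 10_000.0)
challenger = Strategy("challenger", 0.25, 0.12, 0.11, 0.60, 12_000.0)
```

Because `decide` only consumes a score, swapping `champion` for `challenger` changes cut-offs and pricing without retraining or redeploying the model.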

Layer 5 – Monitoring and governance

Dashboards track AUC, approval and loss rates, drift in input distributions, and fairness indicators by segment, with alerts when performance degrades or features start behaving unexpectedly.
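Drift in input distributions is often summarized with a population stability index (PSI), which compares the binned distribution of a feature at training time with its distribution in recent traffic. The sketch below is one simple way to compute it; the bin edges, the `eps` floor for empty bins, and the alert threshold are assumptions that each team would set for itself.

```python
import math


def bin_shares(values, edges, eps=1e-6):
    """Share of values in each bin defined by sorted edges; empty bins get eps."""
    if not values:
        raise ValueError("values must not be empty")
    counts = [0] * (len(edges) + 1)
    for v in values:
        index = sum(1 for e in edges if v >= e)  # number of edges at or below v
        counts[index] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(expected, actual, edges, eps=1e-6):
    """Population stability index between a reference and a recent sample."""
    e_shares = bin_shares(expected, edges, eps)
    a_shares = bin_shares(actual, edges, eps)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_shares, a_shares))


def drift_alert(expected, actual, edges, threshold):
    """True when PSI exceeds a team-chosen threshold (hypothetical policy)."""
    return psi(expected, actual, edges) > threshold
```

Each term of the sum is non-negative, so PSI is zero only when the binned shares match and grows as recent traffic moves away from the reference sample.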

In practice, each layer runs as a separate service with clear APIs and latency budgets. Data teams plug in new providers or features without breaking downstream decisions; product teams ship new strategies without re-implementing the plumbing.
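The layering described above can be sketched as a chain of independent callables, each wrapped so that its latency is measured against a budget. This is a single-process toy, not a service architecture; `timed_layer`, `run_stack`, the stand-in layer functions, and the budget values are all hypothetical.

```python
import time


def timed_layer(name, fn, budget_ms):
    """Wrap a layer so each call reports its latency against a budget."""
    def run(payload):
        start = time.perf_counter()
        result = fn(payload)
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        trace = {
            "layer": name,
            "elapsed_ms": elapsed_ms,
            "within_budget": elapsed_ms <= budget_ms,
        }
        return result, trace
    return run


def run_stack(layers, payload):
    """Pass the payload through each layer in order, collecting traces."""
    traces = []
    for layer in layers:
        payload, trace = layer(payload)
        traces.append(trace)
    return payload, traces


# Stand-in layers; each only depends on the previous layer's output shape.
def feature_engine(application):
    return {"utilization": application["balance"] / application["limit"]}


def model(features):
    return {"pd_estimate": min(1.0, 0.02 + 0.2 * features["utilization"])}


def decision_engine(score):
    action = "approve" if score["pd_estimate"] < 0.10 else "manual_review"
    return {"action": action}


stack = [
    timed_layer("features", feature_engine, budget_ms=50),
    timed_layer("model", model, budget_ms=30),
    timed_layer("decision", decision_engine, budget_ms=20),
]
```

Replacing `feature_engine` or `decision_engine` with a new implementation leaves the other layers untouched, provided the output keys stay the same.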

Choosing models for impact, not just leaderboard wins

Once the data and feature layers stabilize, model choice turns into a business trade-off rather than a Kaggle competition. Across benchmark datasets, boosted trees, such as XGBoost, typically rank at the top of the leaderboard for AUC, accuracy, and F1 in retail, SME, and credit card portfolios.

Evidence from live systems is even more persuasive for executives: production-grade ML credit models often reach materially higher AUC than legacy scorecards, especially in thin-file or volatile segments. In commercial banking, ensembles trained on financial statements, behavioral flags, and external registries identify weakening borrowers months before default probabilities materially change, giving relationship teams time to restructure or adjust limits.

For a next-generation product manager, the model is no longer a black box owned by “risk”. They own the end-to-end underwriting strategy: framing risk–return objectives, deciding where in the customer journey ML takes the wheel, designing experiments, curating the feature library, and tracking how score changes affect approval rates, losses, NPS, and unit economics. Underwriting evolves into a continuously optimized product lever, rather than a one-time control at account opening.

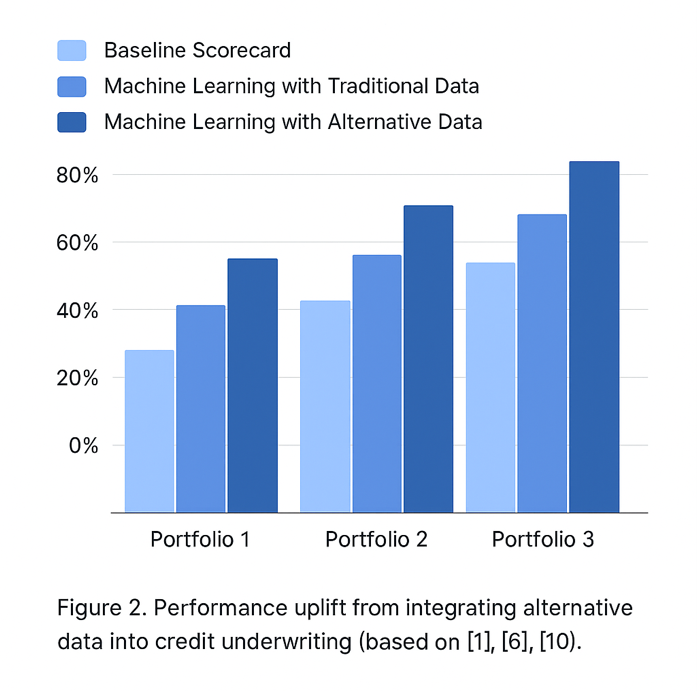

Alternative data as an engine of inclusion

The broader step-change comes from expanding the information set beyond traditional bureau files. Utility and telecom payment histories, rental flows, mobile money transactions, e-commerce activity, psychometric assessments, handset usage, and public records supply new angles on willingness and capacity to repay. Development finance and financial inclusion studies demonstrate that combining these signals with traditional data both improves discriminatory power and brings previously “unscorable” clients into sustainable credit brackets.

Credit bureaus now ingest non-traditional data at scale; banks plug into specialized alternative-data scoring providers; borrowers share cash-flow and account access through consented digital channels. In microfinance, agriculture, and low-income consumer lending, psychometrics, handset activity, messaging patterns, and email metadata frequently separate diligent but invisible borrowers from high-risk profiles with similar bureau footprints.

For product teams, that uplift turns into concrete levers. Continuous score distributions support fine-grained pricing, limit curves, and loyalty benefits. Teams no longer treat underwriting as a binary gate; instead, they tune acceptance bands, upsell paths, and limit-step schedules along the full probability range.

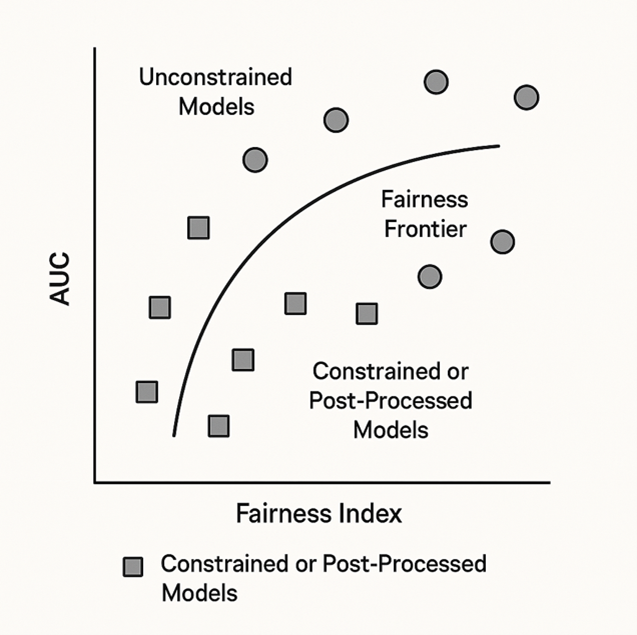

Fair, explainable, and regulator-ready underwriting

As the stack grows more complex, supervisors, customers, and internal control functions start asking sharper questions: who benefits, who gets left out, and why. Recent AI credit scoring research moves away from single-objective optimization toward joint targets: predictive power, fairness, and explainability are treated as coequal design goals rather than afterthoughts.

Shapley- and SHAP-based diagnostics help teams understand how much each variable contributes to scores across subgroups, not just on the overall sample. That lens often reveals situations where global metrics look balanced, yet certain demographic or geographic slices bear disproportionate adverse impact. Explainable deep learning adds another tool: attribution maps over temporal networks highlight which stretches of a repayment path drive a “good” or “bad” classification, offering human reviewers interpretable narratives for complex models.
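To make the idea of per-variable contributions concrete, the sketch below computes exact Shapley values for a tiny scoring function by averaging each feature's marginal contribution over every feature ordering, then averages absolute attributions within a subgroup. It is a brute-force illustration that only scales to a handful of features, not the approximation scheme used by SHAP tooling; the toy model, feature names, and baseline are assumptions.

```python
from itertools import permutations


def shapley_values(model, x, baseline):
    """Exact Shapley values; absent features take their baseline value."""
    names = list(x)
    totals = {n: 0.0 for n in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)
        previous = model(current)
        for name in order:
            current[name] = x[name]        # reveal this feature's real value
            updated = model(current)
            totals[name] += updated - previous
            previous = updated
    return {n: total / len(orderings) for n, total in totals.items()}


def mean_abs_attribution(model, rows, baseline):
    """Average absolute attribution per feature over a subgroup of applicants."""
    sums = {n: 0.0 for n in baseline}
    for row in rows:
        for name, value in shapley_values(model, row, baseline).items():
            sums[name] += abs(value)
    return {n: s / len(rows) for n, s in sums.items()}


# Hypothetical linear score with two features.
def toy_model(f):
    return 0.1 + 0.5 * f["utilization"] + 0.2 * f["late_payments"]


toy_baseline = {"utilization": 0.3, "late_payments": 0}
toy_applicant = {"utilization": 0.8, "late_payments": 1}
toy_attribution = shapley_values(toy_model, toy_applicant, toy_baseline)
```

The attributions sum to the difference between the applicant's score and the baseline score, which is the property that makes them usable as an additive explanation. Running `mean_abs_attribution` separately per segment shows whether a variable weighs more heavily on one slice than another.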

Policy guidance from organizations such as the Alliance for Financial Inclusion and the World Bank already sets expectations around consent, transparency, and privacy for alternative data. Lenders must explain why they use specific non-traditional variables, how those data inform their decisions, and how customers can challenge the outcomes.

Turning the underwriting engine into a lifecycle brain

Underwriting creates value when it connects with product design, collections, and loyalty. Scores should not live in isolation as an approval toggle. They inform limit sizing, introductory pricing, fee waivers, retention offers, and loyalty tiering throughout the relationship.

Loyalty programs become far more surgical when they tap into the same behavioral and risk features that power underwriting. Low-risk but low-engagement customers receive targeted fee waivers or accelerated earn rates to stimulate usage. Thin-file customers admitted through alternative data follow curated upgrade paths, where limits, bundles, and cross-sell intensity evolve with each clean repayment cycle.

Thin-file clients sourced via utility or mobile data start with conservative limits and guided onboarding journeys, then graduate rapidly as the system accumulates evidence of resilience. Large corporates flagged by early-warning ensembles trigger proactive outreach and restructuring conversations rather than silent surveillance. The same infrastructure that ingests and standardizes alternative data for risk models drives richer segmentation, experimentation, and personalization across marketing, servicing, and loyalty.
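The graduation path for thin-file clients can be sketched as a simple limit-step rule: hold the limit after a missed payment, and raise it by a fixed percentage after every few clean cycles up to a cap. The function name and every parameter value below (`step_pct`, `cycles_per_step`, `cap`) are hypothetical placeholders for a policy that each lender would calibrate.

```python
def next_limit(current_limit, clean_cycles, missed_payment,
               step_pct=0.25, cycles_per_step=3, cap=5000.0):
    """Return the limit for the next cycle under a step-up schedule.

    clean_cycles counts consecutive on-time repayment cycles so far.
    """
    if missed_payment:
        return current_limit  # hold the limit; no step-up this cycle
    if clean_cycles > 0 and clean_cycles % cycles_per_step == 0:
        return min(cap, round(current_limit * (1.0 + step_pct), 2))
    return current_limit


def simulate(start_limit, cycles):
    """Limit path for a client who repays cleanly for the given number of cycles."""
    limit = start_limit
    path = []
    for cycle in range(1, cycles + 1):
        limit = next_limit(limit, clean_cycles=cycle, missed_payment=False)
        path.append(limit)
    return path
```

In a fuller version the step size could depend on the refreshed risk score rather than being fixed, which would tie the schedule back to the same features that power underwriting.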

When banks treat the AI underwriting stack as a shared decision layer for acquisition, pricing, collections, and loyalty, risk models stop being plumbing and start steering growth. Institutions that move first on industrialized ML, alternative data, and lifecycle experimentation will write the next generation of credit products; the rest will keep adjusting legacy scorecards while their most profitable segments walk away.